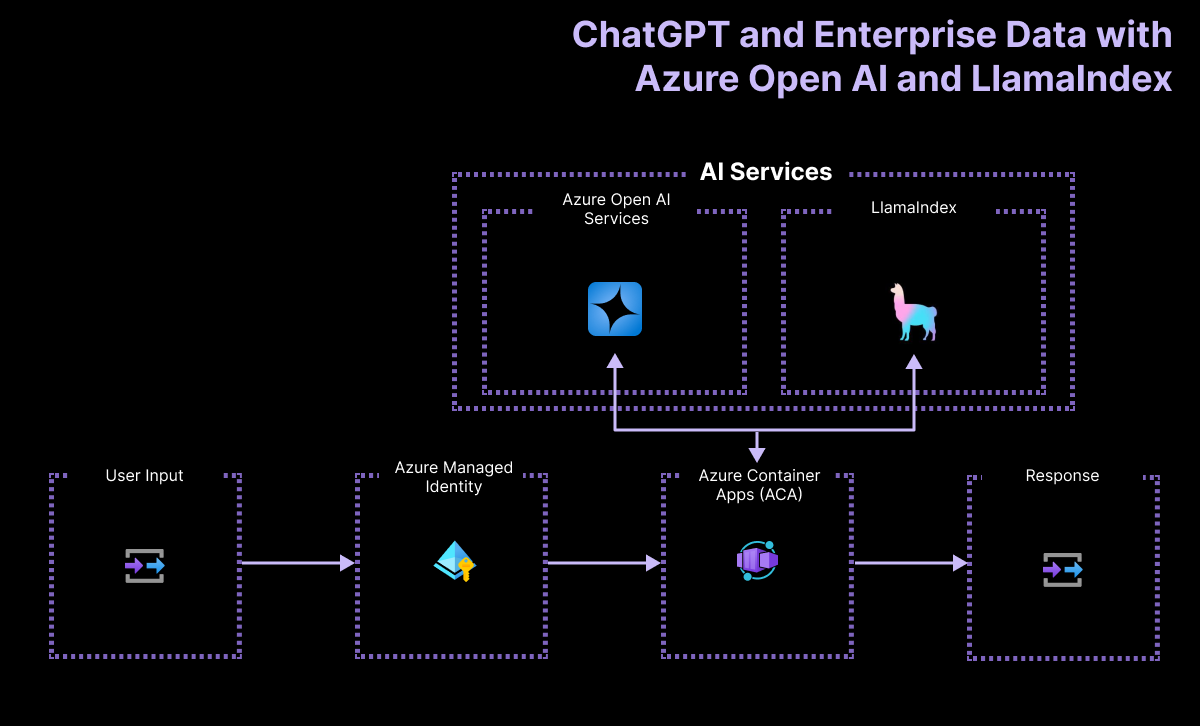

This sample shows how to quickly get started with LlamaIndex for TypeScript on Azure. The application is hosted on Azure Container Apps. You can use it as a starting point for building more complex RAG applications.

(Like and fork this sample to receive the latest changes and updates)

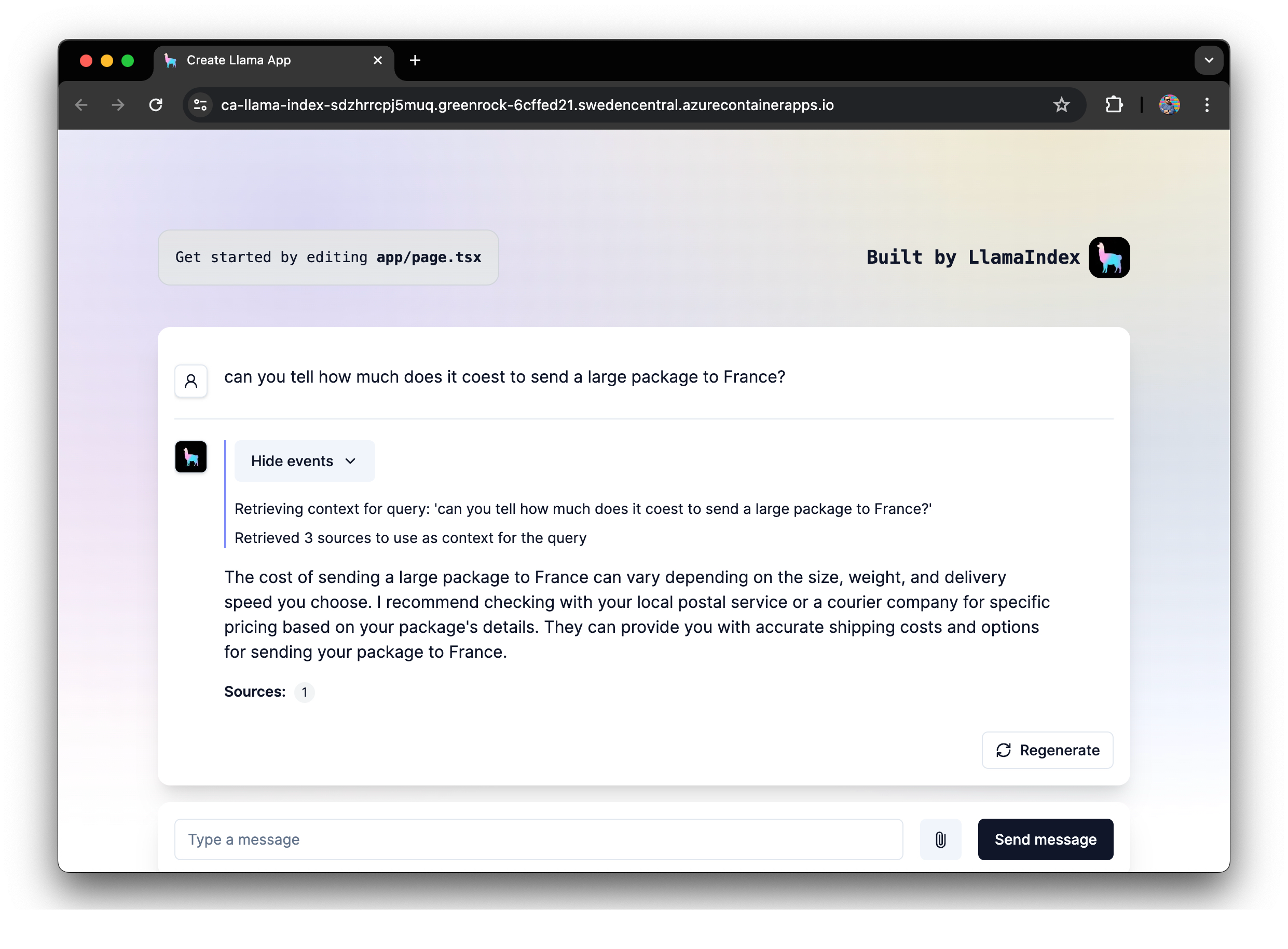

This project demonstrates how to build a simple LlamaIndex application using Azure OpenAI. The app is set up as a chat interface that can answer questions about your data. You can add arbitrary data sources to your chat, such as local files, websites, or data retrieved from a database. The app ingests any supported files you put in the ./data/ directory. This sample app uses LlamaIndex.TS, which can ingest PDF, text, CSV, Markdown, Word, and HTML files.
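As a rough illustration of which files in `./data/` would be picked up, here is a minimal TypeScript sketch that filters file names by extension. It is not part of LlamaIndex.TS: `filterSupportedFiles` and `extensionOf` are hypothetical helpers, and the extension list is an assumption that maps the formats named above to common extensions (for example, Word to `.docx`). The actual file detection and parsing are done by LlamaIndex.TS.

```typescript
// Hypothetical helper, NOT part of LlamaIndex.TS. The extension list is an
// assumption mapping PDF, text, CSV, Markdown, Word, and HTML to common extensions.
const SUPPORTED_EXTENSIONS: ReadonlySet<string> = new Set([
  ".pdf",
  ".txt",
  ".csv",
  ".md",
  ".docx",
  ".html",
  ".htm",
]);

// Returns the lower-cased extension including the dot, or "" when there is none.
// Dot-files such as ".gitkeep" are treated as having no extension.
function extensionOf(fileName: string): string {
  const dot = fileName.lastIndexOf(".");
  return dot <= 0 ? "" : fileName.slice(dot).toLowerCase();
}

// Keeps only the file names whose extension is in SUPPORTED_EXTENSIONS.
function filterSupportedFiles(fileNames: string[]): string[] {
  return fileNames.filter((file) => SUPPORTED_EXTENSIONS.has(extensionOf(file)));
}

// Example: a hypothetical listing of the ./data/ directory.
const dataDir = ["report.PDF", "notes.md", "photo.png", "table.csv", ".gitkeep"];
console.log(filterSupportedFiles(dataDir));
```

Matching is case-insensitive here, so `report.PDF` is kept while `photo.png` and `.gitkeep` are skipped.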

This application is built around a single component:

- A full-stack Next.js application that can be deployed to Azure Container Apps in just a few commands. This app uses LlamaIndex.TS, a TypeScript library that can ingest PDF, text, CSV, Markdown, Word, and HTML files.

- The app uses Azure OpenAI to answer questions about the data you provide. It is set up to use the `gpt-35-turbo` model together with an embeddings model to answer your questions.
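To make "answer questions about the data you provide" more concrete, the sketch below shows a toy version of the retrieval step in a RAG app: rank document chunks by the cosine similarity between their embeddings and the question's embedding, then keep the best matches as context for the chat model. The vectors are made up, and `cosineSimilarity` and `topK` are hypothetical helpers. In this app the embeddings come from Azure OpenAI and retrieval is handled by LlamaIndex.TS, whose internals may differ.

```typescript
// Toy illustration of RAG retrieval. The embeddings are invented 3-dimensional
// vectors; real embedding vectors are much longer and come from a model.
interface Chunk {
  text: string;
  embedding: number[];
}

// Cosine similarity: dot(a, b) / (|a| * |b|). Returns 0 for zero vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the k chunks most similar to the query embedding, best match first.
function topK(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}

const chunks: Chunk[] = [
  { text: "Refunds are processed within 5 days.", embedding: [0.9, 0.1, 0.0] },
  { text: "Our office is in Stockholm.", embedding: [0.0, 0.2, 0.9] },
  { text: "Refund requests need an order number.", embedding: [0.8, 0.3, 0.1] },
];

// Pretend this is the embedding of the question "How do refunds work?".
const queryEmbedding = [1.0, 0.2, 0.0];
const context = topK(queryEmbedding, chunks, 2).map((c) => c.text);
console.log(context);
```

Here the two refund-related chunks rank highest, so they are the ones that would be sent to the chat model along with the question.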

You have a few options for getting started with this template. The quickest way is GitHub Codespaces, since it sets up all the tools for you, but you can also set it up locally or use a VS Code dev container.

This template uses `gpt-35-turbo` version `1106`, which may not be available in all Azure regions. Check for up-to-date region availability and select a region during deployment accordingly.

- We recommend using `swedencentral`.

You can run this template virtually by using GitHub Codespaces, which opens a web-based VS Code instance in your browser:

- Open a terminal window.

- Sign into your Azure account: `azd auth login`

- Provision the Azure resources and deploy your code: `azd up`

- Install the app dependencies: `npm install`

A related option is VS Code Dev Containers, which will open the project in your local VS Code using the Dev Containers extension:

- Start Docker Desktop (install it if not already installed).

- In the VS Code window that opens, once the project files show up (this may take several minutes), open a terminal window.

- Sign into your Azure account: `azd auth login`

- Provision the Azure resources and deploy your code: `azd up`

- Install the app dependencies: `npm install`

- Configure a CI/CD pipeline: `azd pipeline config`

To start the web app, run the following command: `npm run dev`. Then open the URL http://localhost:3000 in your browser to interact with the bot.

You need to install the following tools to work on your local machine:

- Docker

- Node.js LTS

- Azure Developer CLI

- Git

- PowerShell 7+ (for Windows users only)

  - Important: Ensure you can run `pwsh.exe` from a PowerShell command. If this fails, you likely need to upgrade PowerShell.

  - Instead of PowerShell, you can also use Git Bash or WSL to run the Azure Developer CLI commands.


Then you can get the project code:

- Fork the project to create your own copy of this repository.

- On your forked repository, select the Code button, then the Local tab, and copy the URL of your forked repository.

- Open a terminal and run this command to clone the repo: `git clone <your-repo-url>`

- Bring down the template code with `azd init --template llama-index-javascript`. This performs a `git clone` of the template.

- Sign into your Azure account: `azd auth login`

- Install all dependencies: `npm install`

- Provision and deploy the project to Azure: `azd up`

- Configure a CI/CD pipeline: `azd pipeline config`

Once your deployment is complete, you should see a .env file at the root of the project. This file contains the environment variables needed to run the application using Azure resources.
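If the app fails to start, a quick first check is whether the `.env` file contains the variables you expect. Below is a minimal TypeScript sketch that parses `KEY=VALUE` lines and reports missing or empty keys. It is a simplified parser (no multi-line values, no `export` prefix, no variable expansion), `parseDotEnv` and `missingVariables` are hypothetical helpers, and the variable names in the example are assumptions, so check your generated `.env` for the real names.

```typescript
// Simplified .env parser: KEY=VALUE lines only. Illustrative sketch, not a
// replacement for a full dotenv implementation.
function parseDotEnv(contents: string): Map<string, string> {
  const vars = new Map<string, string>();
  for (const rawLine of contents.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines and comment lines.
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    // Skip lines that have no key before "=".
    if (eq <= 0) continue;
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes, e.g. KEY="value".
    const first = value.charAt(0);
    if (value.length >= 2 && (first === '"' || first === "'") && value.endsWith(first)) {
      value = value.slice(1, -1);
    }
    vars.set(key, value);
  }
  return vars;
}

// Returns the required keys that are absent or set to an empty value.
function missingVariables(vars: Map<string, string>, required: string[]): string[] {
  return required.filter((key) => !vars.get(key));
}

// Usage with an in-memory example; these variable names are hypothetical.
const sample = [
  "# example only",
  'AZURE_OPENAI_ENDPOINT="https://example.openai.azure.com/"',
  "AZURE_OPENAI_DEPLOYMENT=gpt-35-turbo",
].join("\n");
const env = parseDotEnv(sample);
console.log(
  missingVariables(env, ["AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_DEPLOYMENT", "AZURE_OPENAI_EMBEDDING_DEPLOYMENT"]),
);
```

To check the real file, you could pass the result of `readFileSync(".env", "utf8")` from Node's `node:fs` module instead of the in-memory string.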

To run the sample, run the following command, which starts the Next.js app:

- Open a terminal, navigate to the root of the project, and run the app: `npm run dev`

Open the URL http://localhost:3000 in your browser to interact with the Assistant.


Pricing varies per region and usage, so it isn't possible to predict exact costs for your usage. However, you can use the Azure pricing calculator for the resources below to get an estimate.

- Azure Container Apps: Consumption plan, free for the first 2M requests each month. Pricing is based on requests and on the CPU and memory used.

- Azure OpenAI: Standard tier, GPT and Ada models. Pricing per 1K tokens used, and at least 1K tokens are used per question.
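Because Azure OpenAI is billed per 1K tokens and each question uses at least 1K tokens, a lower-bound estimate is simple arithmetic: cost = (questions × tokens per question / 1000) × price per 1K tokens. The TypeScript sketch below encodes that formula. `estimateTokenCost` is a hypothetical helper and the price is a placeholder, not a real Azure OpenAI rate; use the Azure pricing calculator for actual prices in your region.

```typescript
// Lower-bound token cost estimate. The price constant below is a placeholder,
// NOT an actual Azure OpenAI price.
function estimateTokenCost(questions: number, tokensPerQuestion: number, pricePer1KTokens: number): number {
  if (questions < 0 || tokensPerQuestion < 0 || pricePer1KTokens < 0) {
    throw new Error("Inputs must be non-negative");
  }
  // cost = (total tokens / 1000) * price per 1K tokens
  return ((questions * tokensPerQuestion) / 1000) * pricePer1KTokens;
}

const PLACEHOLDER_PRICE_PER_1K = 0.002; // hypothetical USD price per 1K tokens
// 500 questions at the lower bound of 1K tokens each.
const estimate = estimateTokenCost(500, 1000, PLACEHOLDER_PRICE_PER_1K);
console.log(`Estimated lower bound: $${estimate.toFixed(2)}`);
```

Real questions often use more than 1K tokens once retrieved context is included, so treat the result as a floor rather than a forecast.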

Warning

To avoid unnecessary costs, remember to take down your app when it's no longer in use, either by deleting the resource group in the Azure portal or by running `azd down --purge`.

Note

When implementing this template, please specify whether the template uses Managed Identity or Key Vault.

This template has Managed Identity or Key Vault built in to eliminate the need for developers to manage these credentials. Applications can use managed identities to obtain Microsoft Entra tokens without having to manage any credentials. Additionally, we have added a GitHub Action that scans the infrastructure-as-code files and generates a report containing any detected issues. To follow best practices, we recommend that anyone creating solutions based on our templates enable GitHub secret scanning in their repositories.

Here are some resources to learn more about the technologies used in this sample:

- LlamaIndex.TS documentation: learn about LlamaIndex TypeScript features.

- Generative AI For Beginners

- Azure OpenAI Service

- Azure OpenAI Assistant Builder

- Chat + Enterprise data with Azure OpenAI and Azure AI Search

You can also find more Azure AI samples here.

If you can't find a solution to your problem, please open an issue in this repository.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.