- Ping Zhu (pz2232)

- Yang Hu (yh2948)

- Zhichao Yang (zy2280)

-

Environment:

- Python 3.5

- Pytorch

- Ubuntu 16.04.4 LTS

- 4 core, 40G memory, with 4 GPUs

- Install the cocoAPI from pycocotools, and append it to your system path while running the code to generate captions vocabulary.

-

Follow the steps bellow to run the code

- Download the image from this link. We are using

2017 Val images [5K/1GB]to train the data and do the validation over2017 Test images [41K/6GB]. - Run

create_vocab_dic.pyto get a dictionary of all the vocabs appear more than five times in all of the captions. We will get a filevocab.pklunder the path./data/vocab.pklby default. - Run

image_resize.py --image_dir path_to_your_image_file --output path_to_save_imageto crop the image (255 x 255) to (224 x 224) to utilize the pretrained resnet152 model provided by the packet pytorchvision. - The

utils.pyhas helper functions to transform the image and captions helpful to evaluate the model and transfer the images and captions. - The

data_loader.pywrites a wrapper over torch DataLoader to load coco dataset with COCO provied API. And it reformats the data per batch includes the merging captions, adds the padding, returns the caption length, etc. - You can load the pretrained model result from the directory

data\pretrainedmodel to directly test the result. - You can run the

view_datato see the attention method used. - Run the jupyter notebook

main.ipynbto train on the pretrained model or do everything from the scratch. Then you should see the loss and perplexity of the decoder printed. - You can do the prediction with

predit.ipynb

- Download the image from this link. We are using

| |CIDEr | Bleu_1 | Bleu_2 |Bleu_3 |Bleu_4 | ROUGE_L| METEOR | :---- | :-------: | :----------: |:-------: |:-------- :| :-------- :|: -------: |:------- :| | C5 |0.671694 | 0.661281 | 0.476947| 0.325450 | 0.216090| 0.481938| 0.212928| | C40 |0.704519 | 0.843240 | 0.724644 | 0.587346 | 0.452872 |0.610584| 0.282690|

- Account and password for coco evaluation:

- Account name: matthew_zhu

- Password: fortune_teller_dl_final

- Evalutaion from COCO is saved in

eval_on_cocodirectory. - Prediction results on

val2014andtest2014are saved underresultdirectory.

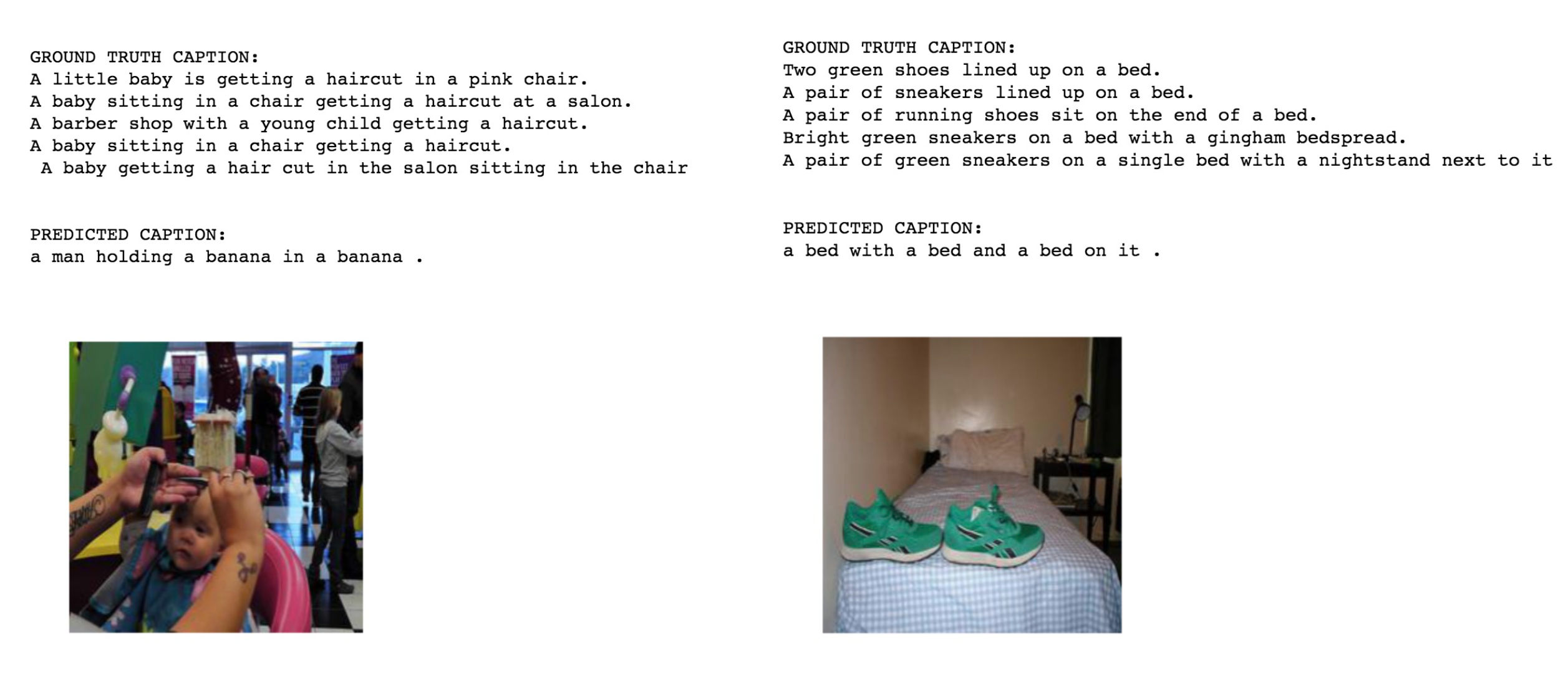

Our model just exactly implements the Kowning When to Look model right now, we may add beam search to predict caption and use Top Down model to extract image feature later.

- Encoder:

- Using Resnet 152 to extract the image features

Vand getsv_g, which later used to createx_tand feeds into LSTM.

- Using Resnet 152 to extract the image features

- Decoder:

- Embed the caption to get

w_tand concate it withv_gto formx_t - Say the max length of all captions for current batch is #, Run the LSTM(RNN) # times, and store the hidden and cell states of all these timestamps. Meanwhile, extract the sentinal information from the LSTM.

- Feed the hidden states, cell states and sentinal information to the attention block. From the attention block we can calculate the attention weights

α_t, sentinal weightβ_tandc_hat. - Feed the hidden state of LSTM and

c_hatto MLP to get a score matrixbatch_size x # x len(vocab), where each row denotes a slot in the caption output and each column represents a word in the dictionary. We select the word with hightest score for each caption slot and form the final caption.(Greedy)

- Embed the caption to get