Fairness in Autonomous Driving: Towards Understanding Confounding Factors in Object Detection in Challenging Weather (CVPR 2024 Workshop)

Bimsara Pathiraja, Caleb Liu, Ransalu Senanayake

Abstract: The deployment of autonomous vehicles (AVs) is rapidly expanding to numerous cities. At the heart of AVs, the object detection module assumes a paramount role, directly influencing all downstream decision-making tasks by considering the presence of nearby pedestrians, vehicles, and more. Despite high accuracy of pedestrians detected on held-out datasets, the potential presence of algorithmic bias in such object detectors, particularly in challenging weather conditions, remains unclear. This study provides a comprehensive empirical analysis of fairness in detecting pedestrians in a state-of-the-art transformer-based object detector. In addition to classical metrics, we introduce novel probability-based metrics to measure various intricate properties of object detection. Leveraging the state-of-the-art FACET dataset and the Carla high-fidelity vehicle simulator, our analysis explores the effect of protected attributes such as gender, skin tone, and body size on object detection performance in varying environmental conditions such as ambient darkness and fog. Our quantitative analysis reveals how the previously overlooked yet intuitive factors, such as the distribution of demographic groups in the scene, the severity of weather, the pedestrians' proximity to the AV, among others, affect object detection performance.

Our key contributions are as follows:

- We propose confidence score-based metrics for fairness evaluation to bridge the gap between object detection and fairness evaluation. To our knowledge, this marks the first attempt to formulate such metrics, specifically tailored for fairness assessment in object detection.

- We demonstrate, using results from the real-world dataset, how minor data alterations can invert perceived biases. Such findings underscore the necessity of simulations to unravel the nuanced factors that affect fairness in object detection.

- We conduct comprehensive experiments and present the trends in AV biases for protected attributes under numerous weather conditions, using a SOTA transformer-based object detection model.
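To give a feel for what a confidence score-based fairness measure can look like, here is a minimal sketch. It is not the metric defined in the paper: it simply averages the detector's confidence per demographic group and reports the largest gap between groups. The function names, the `(group, confidence)` input format, and the convention of recording a missed pedestrian as confidence `0.0` are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean


def mean_confidence_by_group(detections):
    """Average detector confidence per group.

    `detections` is an iterable of (group, confidence) pairs, one per
    ground-truth pedestrian. Hypothetical convention: a pedestrian the
    detector missed entirely is recorded with confidence 0.0.
    """
    scores = defaultdict(list)
    for group, confidence in detections:
        scores[group].append(confidence)
    return {group: mean(values) for group, values in scores.items()}


def confidence_gap(detections):
    """Largest difference in mean confidence between any two groups."""
    means = mean_confidence_by_group(detections)
    return max(means.values()) - min(means.values())


# Hypothetical scores, not results from the paper.
example = [("adult", 0.9), ("adult", 0.7), ("child", 0.5), ("child", 0.3)]
print(mean_confidence_by_group(example))
print(confidence_gap(example))
```

A gap near zero would suggest the detector is similarly confident across groups under the given conditions. A large gap flags a disparity worth investigating, although it does not by itself identify the cause.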

For Carla installation and usage instructions, follow the guidelines here.

You can reproduce the results of the Carla experiments using the following steps.

- Start Carla in the background

./CarlaUE4.sh -RenderOffScreen

- Run the Carla simulation and save the RGB and segmented images. You can use the Carla pedestrian catalogue to find suitable pedestrian IDs.

python Carla-codes/main_args.py --person 0001 --weather fog --intensity 0

- Generate bounding box annotations from the segmented images

python Carla-codes/bbx_json.py

- Generate DETR predictions for the RGB images

python Carla-codes/detrr-carla.py

- Get metric values

python Carla-codes/metrics.py
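The four Python steps above can also be chained from a small driver script. The sketch below is only a convenience wrapper around the commands listed in this README. It assumes the Carla server is already running, that the script is launched from the repository root, and that the last three scripts take no arguments, as shown above. The helper names `build_pipeline_commands` and `run_pipeline` are hypothetical and not part of this repository.

```python
import subprocess


def build_pipeline_commands(person, weather, intensity):
    """Return the README's commands, in order, as argument lists."""
    return [
        # 1. Run the simulation and save RGB and segmented images.
        ["python", "Carla-codes/main_args.py",
         "--person", str(person),
         "--weather", str(weather),
         "--intensity", str(intensity)],
        # 2. Generate bounding box annotations from the segmented images.
        ["python", "Carla-codes/bbx_json.py"],
        # 3. Generate DETR predictions for the RGB images.
        ["python", "Carla-codes/detrr-carla.py"],
        # 4. Compute the metric values.
        ["python", "Carla-codes/metrics.py"],
    ]


def run_pipeline(person, weather, intensity):
    """Run each step in sequence, stopping at the first failure."""
    for command in build_pipeline_commands(person, weather, intensity):
        # check=True raises CalledProcessError on a non-zero exit code.
        subprocess.run(command, check=True)
```

Calling `run_pipeline("0001", "fog", 0)` reproduces the example invocation above. Because `check=True` is set, a failure in one step stops the run before later steps consume incomplete outputs.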

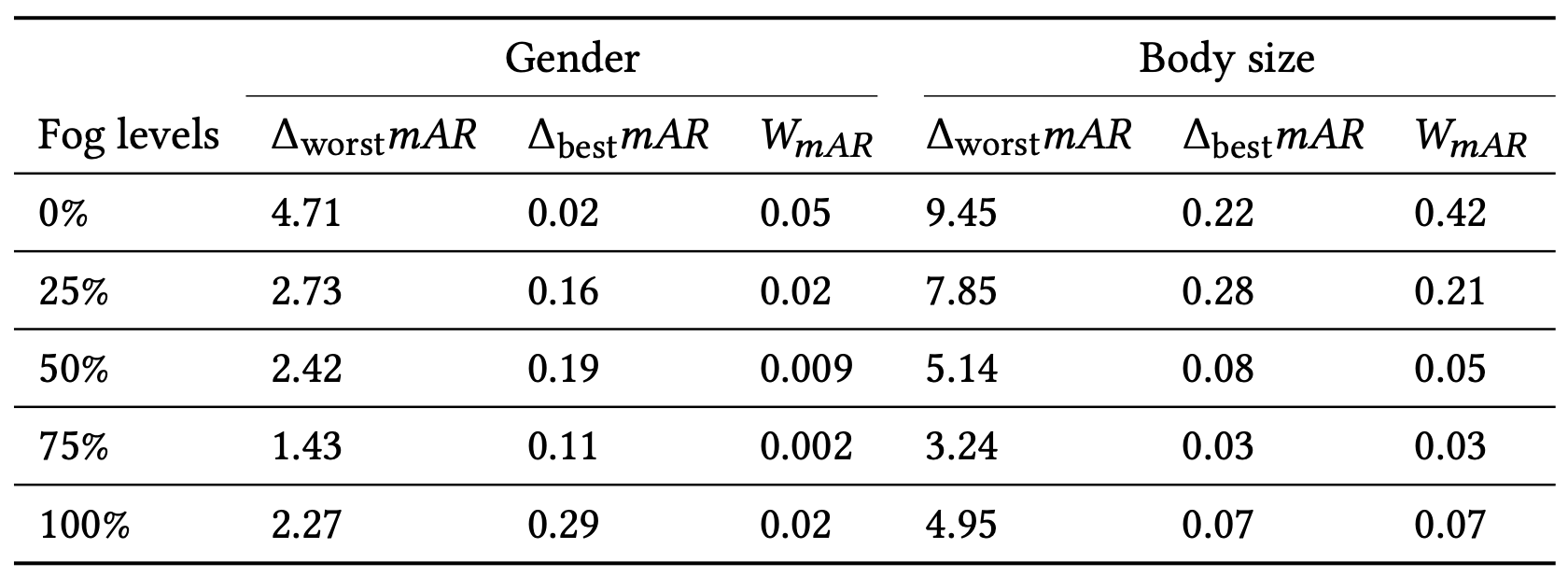

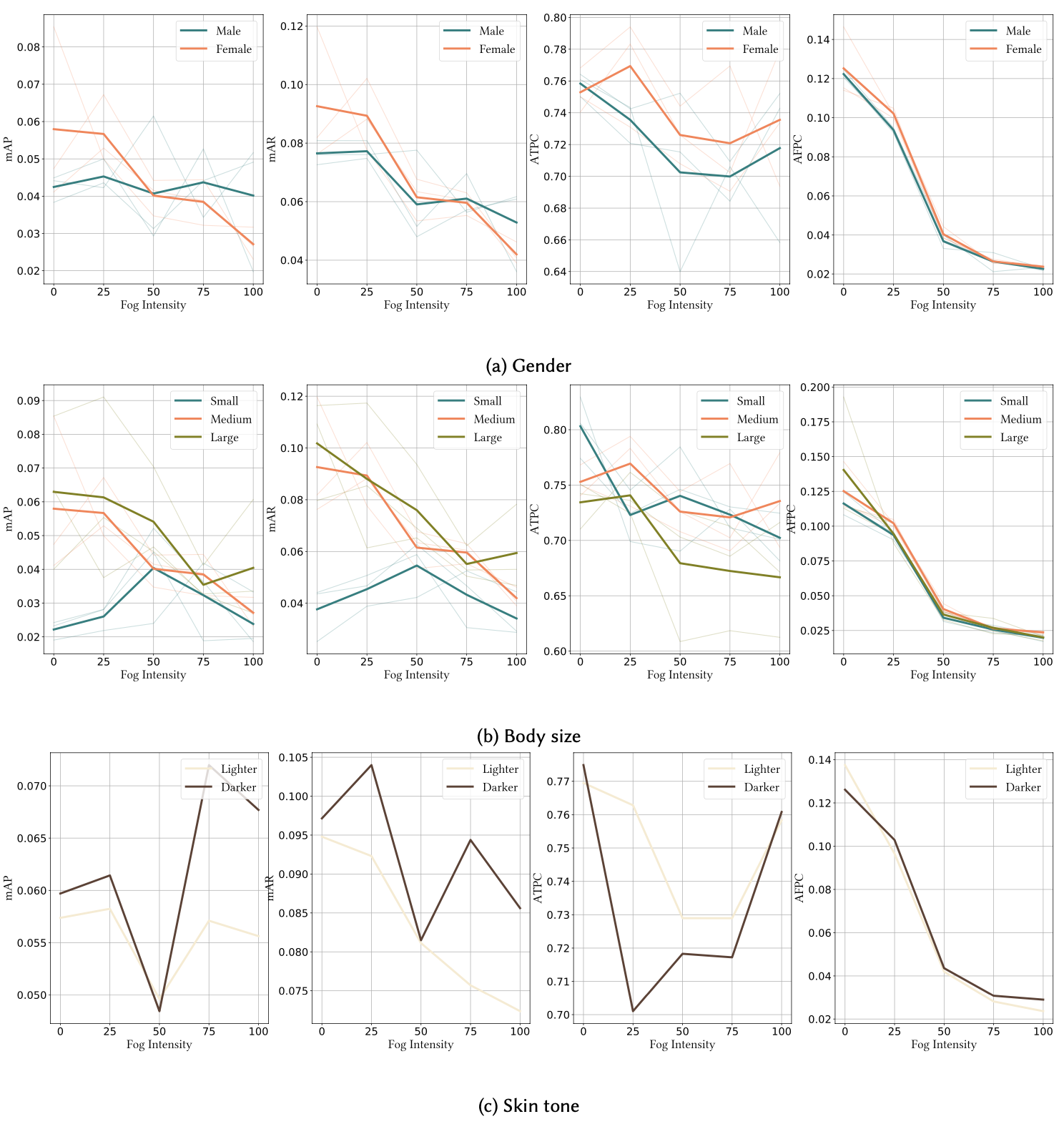

The figures above show the disparity analysis of the object detector's performance in the Carla experiments, broken down by gender, skin tone, and body size. Although gender and skin tone have little effect on detection performance, pedestrians with a small body size (children) are detected poorly. Each gender and body size category uses three pedestrian types, and the bold line represents the average for each group. The skin tone plots use only one pedestrian type per category because of the limited choices in the Carla pedestrian catalogue.

For any queries, create an issue or contact bpathir1@asu.edu.