This repository showcases the shift from the OPC Publisher "Samples" message format to the OPC UA "PubSub" message format and its impact on the underlying cloud processing layers.

OPC Publisher is a fully supported Microsoft product that bridges the gap between industrial assets and the Microsoft Azure cloud. It does so by connecting OPC UA-enabled assets or industrial connectivity software to your Microsoft Azure cloud. It publishes the telemetry data it gathers to Azure IoT Hub in various formats, including the IEC 62541 OPC UA PubSub standard format (from version 2.6 onwards). OPC Publisher runs on Azure IoT Edge as a module or on plain Docker as a container. Because it leverages the .NET cross-platform runtime, it runs natively on both Linux and Windows 10.

See here for more details.

OPC PLC implements an OPC UA server with different nodes that generate random data and anomalies, and it supports the configuration of user-defined nodes. You can refer to the following website for more details.

By default, OPC Publisher uses the message format “Samples”, a simple, non-standardized JSON format. OPC Publisher version 2.6 and above also supports a standardized OPC UA JSON format named “PubSub”. By switching the configuration to this newer, industry-standardized message format, you benefit from improved schema stability and a more future-proof solution, among other things.

Example message in the Samples format:

```json
[
  {
    "NodeId": "nsu=http://microsoft.com/Opc/OpcPlc/;s=RandomUnsignedInt32",
    "EndpointUrl": "opc.tcp://opcplc:50000/",
    "ApplicationUri": "urn:OpcPlc:opcplc",
    "Timestamp": "2022-10-21T11:26:36.064142Z",
    "Value": {
      "Value": 1029320596,
      "SourceTimestamp": "2022-10-21T11:26:35.9594394Z",
      "ServerTimestamp": "2022-10-21T11:26:35.9594399Z"
    }
  }
]
```

Example message in the PubSub format:

```json
[
  {
    "MessageId": "13",
    "MessageType": "ua-data",
    "PublisherId": "opc.tcp://opcplc:50000_9908E95C",
    "Messages": [
      {
        "DataSetWriterId": "1000",
        "MetaDataVersion": {
          "MajorVersion": 1,
          "MinorVersion": 0
        },
        "Payload": {
          "nsu=http://microsoft.com/Opc/OpcPlc/;s=RandomSignedInt32": {
            "Value": 12,
            "SourceTimestamp": "2022-07-18T08:26:56.3987889Z"
          }
        }
      }
    ]
  }
]
```

In general, events created on the edge device are sent to the connected cloud environment for further processing and analysis, and to provide users with the right interfaces and services to consume the data. A recommended way to process and enrich events on the "Hot Path" is to use Azure Stream Analytics (ASA) jobs (see the Azure IoT reference architecture). ASA also makes it easy to add static reference data (machine metadata) to the messages.
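To illustrate what enriching events with reference data means, here is a minimal Python sketch, assuming a telemetry record has already been flattened and the sending device's IoT Hub ID is known. The metadata fields (`Site`, `Line`), the device ID `edge-device-01` and the `enrich` helper are hypothetical; in the sample itself this lookup is done by the ASA job joining the stream with the SQL reference data input, not by Python code.

```python
from typing import Any

# Hypothetical reference data: static machine metadata keyed by IoT Hub device ID.
# In the sample, this data comes from the SQL reference data table instead.
REFERENCE_DATA: dict[str, dict[str, str]] = {
    "edge-device-01": {"Site": "Plant A", "Line": "Line 3"},
}


def enrich(event: dict[str, Any], device_id: str,
           reference: dict[str, dict[str, str]]) -> dict[str, Any]:
    """Return a copy of the event with the device's metadata merged in.

    Events from devices without reference data pass through unchanged
    (apart from the added device ID), similar to a LEFT OUTER JOIN.
    """
    enriched = dict(event)
    enriched["ConnectionDeviceId"] = device_id
    enriched.update(reference.get(device_id, {}))
    return enriched


# A flattened telemetry record, with values taken from the Samples example message.
event = {
    "NodeId": "nsu=http://microsoft.com/Opc/OpcPlc/;s=RandomUnsignedInt32",
    "Value": 1029320596,
    "SourceTimestamp": "2022-10-21T11:26:35.9594394Z",
}

print(enrich(event, "edge-device-01", REFERENCE_DATA))
```

Keeping the metadata out of the edge messages and joining it in the cloud means it can be changed centrally without redeploying the edge modules.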

OPC Publisher makes it easy to switch from the default Samples message format to the PubSub structure: from version 2.6 onwards, you only need to add the parameter `--mm` with the value `PubSub` to the deployment manifest of the OPC Publisher module.

"settings": {

"image": "mcr.microsoft.com/iotedge/opc-publisher:2.8.3",

"createOptions": {

"Hostname": "opcpublisher",

"Cmd": [

"--pf=/appdata/config/publisher1.json",

"--lf=/appdata/publisher.log",

"--mm=PubSub",

"--me=Json",

"--fm=true",

"--bs=1",

"--di=20",

"--aa",

"--rs=true",

"--ri=true"

],

"HostConfig": {

"Binds": [

"c:/iiotedge:/appdata"

],

"ExtraHosts": [

"localhost:127.0.0.1"

]

}

}

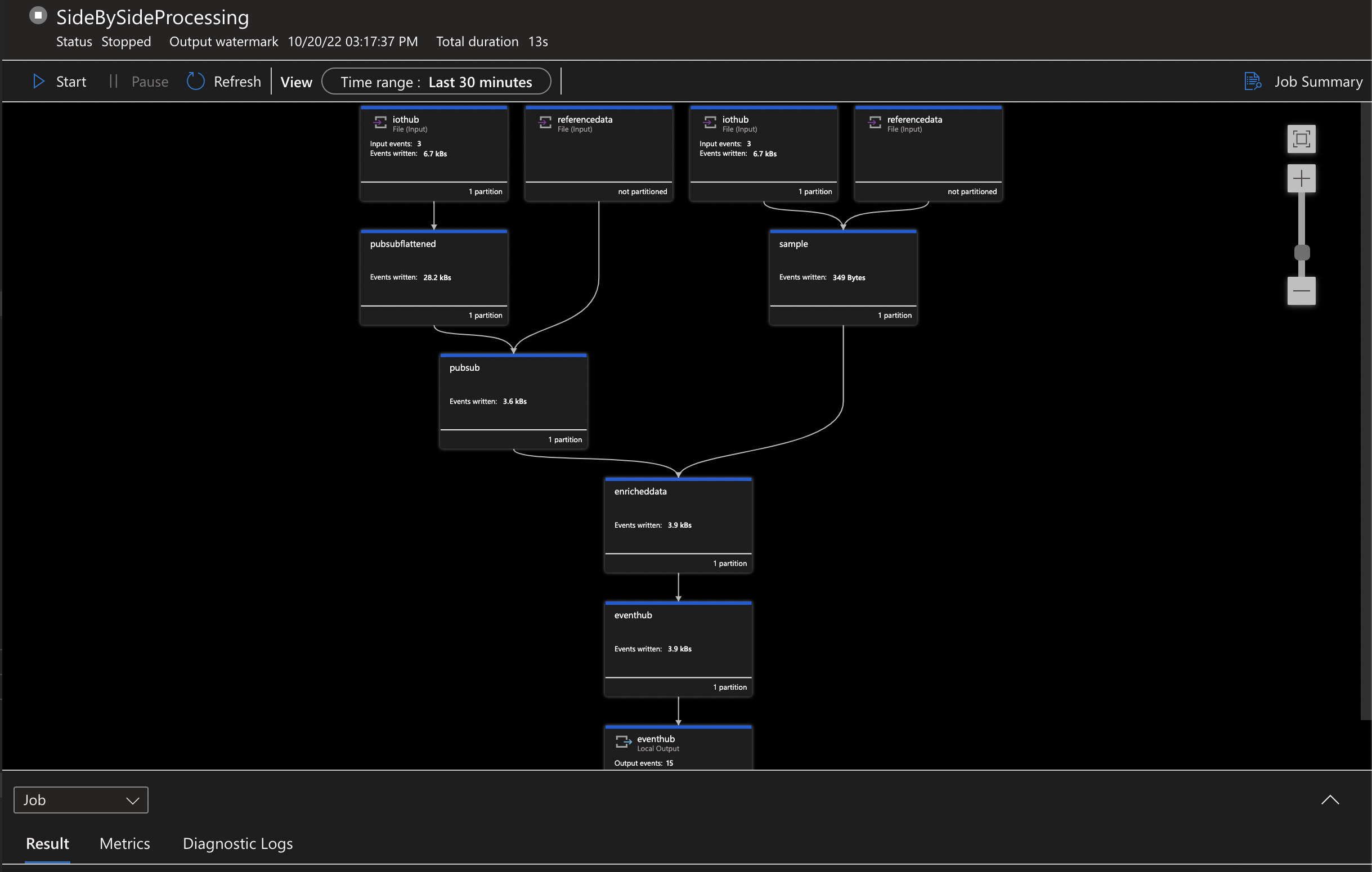

}While the processing of the Sample message format is straight forward, you need to consider the more complex message structure of the the PubSub format which has a key/value pair structure for signals and their telemetry values. Hence, it is required to flatten the messages in a first step and to make all messages from a batch array available by using GetArrayElements and GetRecordProperties functions.
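Before reading the ASA query, it may help to see the same flattening as a minimal Python sketch, assuming each message arrives as a parsed JSON dictionary: the loop over `Messages` plays the role of `GetArrayElements`, and the loop over the `Payload` items plays the role of `GetRecordProperties`, producing one row per signal. The helper names `is_pubsub` and `flatten_pubsub` are hypothetical, and the output keys simply reuse the aliases from the query; this is an illustration, not how ASA evaluates the query.

```python
from typing import Any, Iterator


def is_pubsub(message: dict[str, Any]) -> bool:
    # Same identifiers the ASA query filters on: only PubSub messages carry them.
    return (message.get("PublisherId") is not None
            and message.get("MessageType") is not None)


def flatten_pubsub(message: dict[str, Any]) -> Iterator[dict[str, Any]]:
    """Yield one flat row per signal contained in a PubSub network message."""
    if not is_pubsub(message):
        return
    # Corresponds to GetArrayElements(ih.Messages).
    for dataset in message.get("Messages", []):
        # Corresponds to GetRecordProperties(msg.ArrayValue.Payload).
        for name, value in dataset.get("Payload", {}).items():
            status = value.get("StatusCode") or {}
            yield {
                "PublisherId": message["PublisherId"],
                "DataSetWriterId": dataset.get("DataSetWriterId"),
                "PayloadName": name,
                "PayloadValue": value.get("Value"),
                "PayloadSourceTimestamp": value.get("SourceTimestamp"),
                "PayloadServerTimestamp": value.get("ServerTimestamp"),
                "ValueStatusCode": status.get("Code"),
                "ValueStatusCodeSymbol": status.get("Symbol"),
            }


# The PubSub example message from above, as a Python dictionary.
pubsub_message = {
    "MessageId": "13",
    "MessageType": "ua-data",
    "PublisherId": "opc.tcp://opcplc:50000_9908E95C",
    "Messages": [
        {
            "DataSetWriterId": "1000",
            "MetaDataVersion": {"MajorVersion": 1, "MinorVersion": 0},
            "Payload": {
                "nsu=http://microsoft.com/Opc/OpcPlc/;s=RandomSignedInt32": {
                    "Value": 12,
                    "SourceTimestamp": "2022-07-18T08:26:56.3987889Z",
                }
            },
        }
    ],
}

for row in flatten_pubsub(pubsub_message):
    print(row)
```

Fields that a message does not contain (here `ServerTimestamp` and `StatusCode`) come out as `None`, much like missing properties come out as NULL in ASA.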

```sql
WITH
PubSubFlattened AS (
    SELECT
        ih.*,
        ih.IoTHub.ConnectionDeviceId,
        payload.PropertyValue.StatusCode.Code AS ValueStatusCode,
        payload.PropertyValue.StatusCode.Symbol AS ValueStatusCodeSymbol,
        payload.PropertyName AS PayloadName,
        payload.PropertyValue.Value AS PayloadValue,
        payload.PropertyValue.SourceTimestamp AS PayloadSourceTimestamp,
        payload.PropertyValue.ServerTimestamp AS PayloadServerTimestamp
    FROM
        IoTHub AS ih
    CROSS APPLY
        GetArrayElements(ih.Messages) AS msg -- GetArrayElements: https://docs.microsoft.com/en-us/stream-analytics-query/getarrayelements-azure-stream-analytics
    CROSS APPLY
        GetRecordProperties(msg.ArrayValue.Payload) AS payload -- GetRecordProperties: https://docs.microsoft.com/en-us/stream-analytics-query/getrecordproperties-azure-stream-analytics
    WHERE
        ih.PublisherId IS NOT NULL -- identifier in message for PubSub format, not available in Samples format
        AND ih.MessageType IS NOT NULL -- identifier in message for PubSub format, not available in Samples format
)
```

If you have already been processing messages in the default Samples format, messages with both the PubSub and the Samples structure may arrive at the IoT Hub side by side, and the ASA job has to process both correctly. A UNION construct solves this problem: each part of the query selects only one format, which it recognizes by evaluating an identifier that is present in only one of the two message structures.
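The side-by-side idea behind the UNION can also be sketched in Python, assuming messages arrive as parsed JSON dictionaries: each message is classified by the identifier check (`PublisherId` and `MessageType` exist only in PubSub messages), and both branches are mapped onto one common row shape before being combined. The common columns (`Format`, `NodeId`, `Value`, `SourceTimestamp`) and the helper names are assumptions for illustration; the repository's ASA query may select different columns.

```python
from typing import Any, Iterator


def is_pubsub(message: dict[str, Any]) -> bool:
    # PubSub network messages carry PublisherId and MessageType; Samples messages do not.
    return (message.get("PublisherId") is not None
            and message.get("MessageType") is not None)


def to_common_rows(batch: list[dict[str, Any]]) -> Iterator[dict[str, Any]]:
    """Map a batch that may mix Samples and PubSub messages onto one row shape."""
    for message in batch:
        if is_pubsub(message):
            # PubSub branch: one row per (dataset message, payload entry).
            for dataset in message.get("Messages", []):
                for node_id, value in dataset.get("Payload", {}).items():
                    yield {
                        "Format": "PubSub",
                        "NodeId": node_id,
                        "Value": value.get("Value"),
                        "SourceTimestamp": value.get("SourceTimestamp"),
                    }
        else:
            # Samples branch: one message per signal, value nested under "Value".
            value = message.get("Value", {})
            yield {
                "Format": "Samples",
                "NodeId": message.get("NodeId"),
                "Value": value.get("Value"),
                "SourceTimestamp": value.get("SourceTimestamp"),
            }


# One message of each format, taken from the examples above.
mixed_batch = [
    {
        "NodeId": "nsu=http://microsoft.com/Opc/OpcPlc/;s=RandomUnsignedInt32",
        "EndpointUrl": "opc.tcp://opcplc:50000/",
        "ApplicationUri": "urn:OpcPlc:opcplc",
        "Timestamp": "2022-10-21T11:26:36.064142Z",
        "Value": {
            "Value": 1029320596,
            "SourceTimestamp": "2022-10-21T11:26:35.9594394Z",
            "ServerTimestamp": "2022-10-21T11:26:35.9594399Z",
        },
    },
    {
        "MessageId": "13",
        "MessageType": "ua-data",
        "PublisherId": "opc.tcp://opcplc:50000_9908E95C",
        "Messages": [
            {
                "DataSetWriterId": "1000",
                "Payload": {
                    "nsu=http://microsoft.com/Opc/OpcPlc/;s=RandomSignedInt32": {
                        "Value": 12,
                        "SourceTimestamp": "2022-07-18T08:26:56.3987889Z",
                    }
                },
            }
        ],
    },
]

for row in to_common_rows(mixed_batch):
    print(row)
```

Because both branches emit the same keys, consumers downstream of the union do not need to know which format a given device sends.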

```sql
WHERE
    ih.PublisherId IS NOT NULL -- identifier in message for PubSub format, not available in Samples format
```

Prerequisites:

- Install Docker Desktop

- Install the Azure IoT Tools extension for Visual Studio Code, which now includes the Azure IoT Edge extension

- Install the Azure Stream Analytics Tools extension for Visual Studio Code

Although the sample is designed to run fully locally using JSON input files, you can also run it against a simple Azure cloud infrastructure consisting of the following resources:

- Create an Azure IoT Hub

- Register an IoT Edge device

- Create a SQL database --> add a reference data table that contains your devices' metadata, using SQL Server Management Studio, Azure Data Studio, or the Azure portal

- Optional: Create an Event Hub --> not required if you use local output when running the ASA job

- Add your credentials and connection strings to:

  - ./streamanalytics/PubSubProcessing/Inputs/IoTHub.json

  - ./streamanalytics/PubSubProcessing/Inputs/ReferenceData.json

  - ./streamanalytics/PubSubProcessing/Outputs/EventHub.json

- Add the appropriate SQL query to ./streamanalytics/PubSubProcessing/Inputs/ReferenceData.snapshot.sql to retrieve the metadata

- Optional: Create a Stream Analytics job, use the content of the file SideBySideProcessing.asaql as the job query, and add the input (including the reference data input) and output connectors --> this step is not required to run the sample if you simulate a local run from VS Code

- Connect the Azure IoT Hub (device) explorer to your IoT Hub, right-click your IoT Edge device, and select "Setup IoT Edge Simulator"

- Right-click the file deployment..template.json and select "Build and Run IoT Edge Solution in Simulator"

- Right-click ./streamanalytics/PubSubProcessing/SideBySideProcessing.asaql and select "ASA: Start Local Run" to start the ASA job

  - Option a: select "Use Live Input and Local Output" --> this consumes the events that are auto-generated by the OPC PLC module and published to IoT Hub by the OPC Publisher module

  - Option b: select "Use Local Input and Local Output" --> you can skip steps 7 and 8, and the run uses IoTHubLocal.json and ReferenceDataLocal.json as input mocks