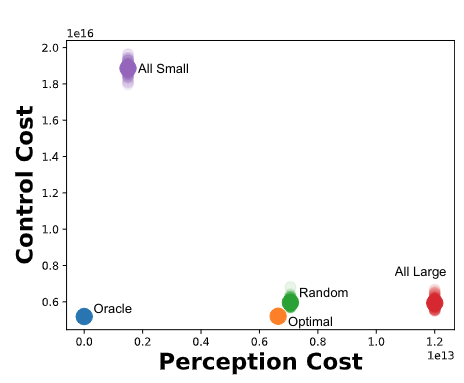

Robotic perception models, such as Deep Neural Networks (DNNs), are becoming more computationally intensive, and many models are being trained with different accuracy and latency trade-offs. However, modern latency-accuracy trade-offs largely report mean accuracy for single-step vision tasks, and there is little work showing which model to invoke for multi-step control tasks in robotics. The key challenge in multi-step decision making is to use the right models at the right times to accomplish the given task. That is, the task should be accomplished with minimum control cost and minimum perception time; this is known as the model selection problem. In this work, we address this problem of invoking the correct sequence of perception models for multi-step control. In other words, we provide a provably optimal solution to the model selection problem by casting it as a multi-objective optimization problem balancing the control cost and perception time. The key insight obtained from this solution is that the variance of the perception models matters (not just the mean accuracy) for multi-step decision making; we also show how to use diverse perception models as a primitive for energy-efficient robotics. Further, we demonstrate our approach on a photo-realistic drone landing simulation using visual navigation in AirSim. The proposed policy achieved 38.04% lower control cost with 79.1% less perception time than other competing benchmarks.
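To illustrate why variance, and not only mean accuracy, can matter, the following toy sketch may help. It assumes a quadratic cost on the perception error (an assumption made here for illustration, not necessarily the cost used in this work), for which the expected cost is E[e^2] = Var(e) + (E[e])^2. The model names, error statistics, timings, and the `time_weight` parameter are all hypothetical, and the one-step weighted-sum choice shown is not the provably optimal multi-step policy described above.

```python
# Toy illustration only: all numbers and names below are hypothetical.


def expected_quadratic_cost(mean_err, var_err):
    """E[e^2] = Var(e) + (E[e])^2 for a random perception error e."""
    return var_err + mean_err ** 2


def select_model(models, time_weight):
    """Pick the model minimizing expected cost + time_weight * perception time.

    models maps a name to (mean error, error variance, perception time in s).
    This is a one-step weighted-sum heuristic, not the optimal policy.
    """
    def score(name):
        mean_err, var_err, perception_time = models[name]
        return expected_quadratic_cost(mean_err, var_err) + time_weight * perception_time

    return min(models, key=score)


# Two hypothetical models with the same mean error but different variance.
MODELS = {
    "small": (0.0, 0.5, 0.01),  # fast but noisy
    "large": (0.0, 0.1, 0.10),  # slow but precise
}

print(select_model(MODELS, time_weight=1.0))   # perception time is cheap
print(select_model(MODELS, time_weight=10.0))  # perception time is expensive
```

Because both hypothetical models have the same mean error, the choice here is driven entirely by the variance term and the time penalty.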

- Python 3.9.x
- NumPy
- SciPy
- mpmath
- pytorch
- torchvision
- tensorflow
- efficientnet_pytorch
- cv2
- tqdm
- seaborn
- pandas
- Gurobi Python Interface
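A quick way to check that the dependencies listed above are importable is sketched below. The import names in `REQUIRED_MODULES` are assumptions about how each package is imported (for example, `torch` for pytorch and `gurobipy` for the Gurobi Python Interface); the helper `missing_modules` is hypothetical and not part of this repository.

```python
import importlib.util

# Import names assumed for the dependencies listed above.
REQUIRED_MODULES = [
    "numpy", "scipy", "mpmath", "torch", "torchvision", "tensorflow",
    "efficientnet_pytorch", "cv2", "tqdm", "seaborn", "pandas", "gurobipy",
]


def missing_modules(names):
    """Return the names that cannot be found on the current Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

The check only looks the modules up without importing them, so it stays fast even when large frameworks are installed.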

- Download the repository to your desired location `/my/location/`.
- Once the repository is downloaded, open `~/.bashrc` and add the line `export CLD_OFLD_NO_IID_ROOT_DIR=/my/location/Cloud_Offloading_NoIID/`, as described in the following steps:
  - Open the file: `vi ~/.bashrc`
  - Once `.bashrc` is open, set the path variable `CLD_OFLD_NO_IID_ROOT_DIR` to the location where the tool was downloaded (this step is crucial to run the tool): `export CLD_OFLD_NO_IID_ROOT_DIR=/my/location/Cloud_Offloading_NoIID/`
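As a minimal sketch of how a script might read this path variable, the helper below looks up `CLD_OFLD_NO_IID_ROOT_DIR` and fails with a clear message when it is unset. The function name `get_root_dir` is hypothetical; how the tool itself reads the variable is not shown here.

```python
import os
from pathlib import Path

ENV_VAR = "CLD_OFLD_NO_IID_ROOT_DIR"


def get_root_dir(environ=os.environ):
    """Return the repository root from the path variable, or raise if unset."""
    value = environ.get(ENV_VAR)
    if not value:
        raise RuntimeError(
            f"{ENV_VAR} is not set; add the export line to ~/.bashrc "
            "and open a new shell (or run `source ~/.bashrc`)."
        )
    return Path(value)
```

For example, `get_root_dir() / "src"` would then point to the script directory under the configured root.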

- Please download the models into a folder named `models` in `/my/location/Cloud_Offloading_NoIID/`. The dataset is provided in a shared Google drive.

Note: If you have already downloaded the trained perception model, this step is not required, unless you want to train your own models.

- The dataset can be downloaded from a shared Google drive.
- The folder `data` should have the following structure:
  - `airsim_rec.txt`
  - `test`
  - `validation`
  - `train`

An example image of the dataset:

After all the above steps are performed, the location `/my/location/Cloud_Offloading_NoIID/` should have the following folders and files:

- `data`
- `lib`
- `models`
- `output`
- `Parameters.py`
- `README.md`
- `src`
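The expected layout can be verified with a short script such as the sketch below. The entry names come from the lists above; the helper `missing_entries` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Entries expected directly under the repository root.
ROOT_ENTRIES = ["data", "lib", "models", "output", "Parameters.py", "README.md", "src"]
# Entries expected inside the data folder.
DATA_ENTRIES = ["airsim_rec.txt", "test", "validation", "train"]


def missing_entries(root):
    """Return expected paths (relative to root) that do not exist."""
    root = Path(root)
    expected = [root / name for name in ROOT_ENTRIES]
    expected += [root / "data" / name for name in DATA_ENTRIES]
    return [p.relative_to(root).as_posix() for p in expected if not p.exists()]
```

An empty list from `missing_entries("/my/location/Cloud_Offloading_NoIID/")` would indicate that every expected folder and file is in place.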

Once the dependencies are installed properly and the path variable is set, the steps provided below should run without any error.

Following are some of the crucial functionalities offered by this prototype tool:

- Training `EfficientNet` models for drone landing.
- Testing the `EfficientNet` models.
- Computing the mean and variance of the models.
- Performing model selection.

- Note: These steps are optional. We already provide the trained models.
- Once the dataset is downloaded and placed in the proper location, the models can be trained by running the following script in `/src/`: `python Train.py`
- To change parameters such as `epoch` and `batchsize`, please modify the file `/lib/TrainerEffNetEarlyStop.py`.

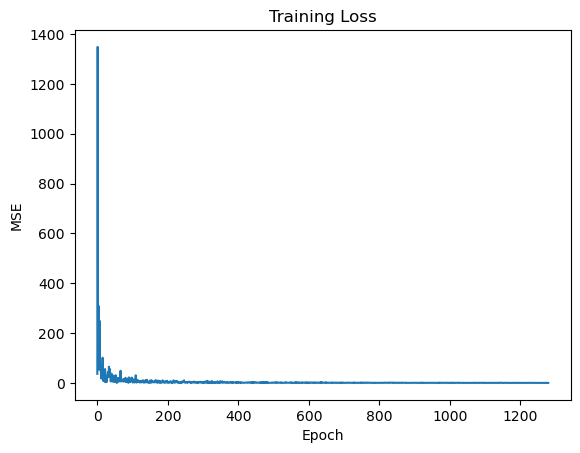

A sample training plot is given below:

- The models can be tested by running the following script in `/src/`: `python Test.py`

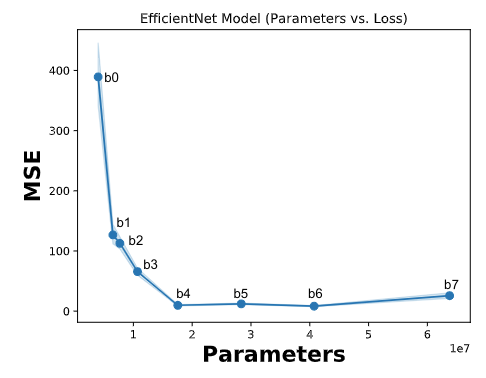

Following is the accuracy plot:

- Note: The pickle containing the computed means and variances is already provided in `/models/`; therefore, these steps are again optional.
- The means and variances of all the models can be computed by running the following script in `/src/`: `python CompMeanVar.py`
- The above step should create `model_stats_150.pkl` in `/models/`.
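The internals of `CompMeanVar.py` and the layout of `model_stats_150.pkl` are not described here, so the following is only a sketch under assumptions: it computes the mean and population variance of prediction errors with NumPy, and inspects a loaded pickle without assuming its structure. The function names are hypothetical, and treating the statistic as "prediction minus target" is an assumption.

```python
import pickle

import numpy as np


def error_mean_and_variance(predictions, targets):
    """Mean and population variance of the prediction errors (assumed definition)."""
    errors = np.asarray(predictions, dtype=float) - np.asarray(targets, dtype=float)
    return errors.mean(axis=0), errors.var(axis=0)


def load_pickle(path):
    """Load a pickle file; only do this for files from a trusted source."""
    with open(path, "rb") as handle:
        return pickle.load(handle)


def describe(obj):
    """Summarize a loaded object without assuming its layout."""
    if isinstance(obj, dict):
        return f"dict with keys {sorted(map(str, obj))}"
    return type(obj).__name__
```

For instance, `describe(load_pickle(path))`, with `path` pointing to `model_stats_150.pkl` in the `models` folder, shows what the file contains before any code relies on a particular structure.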

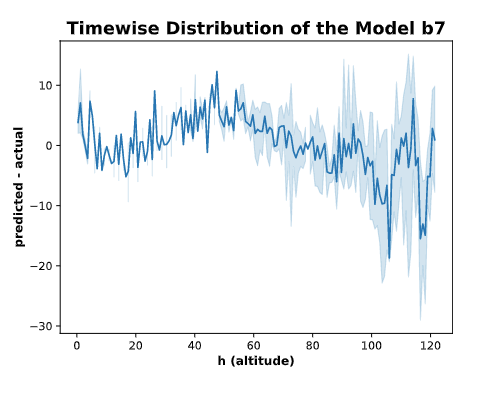

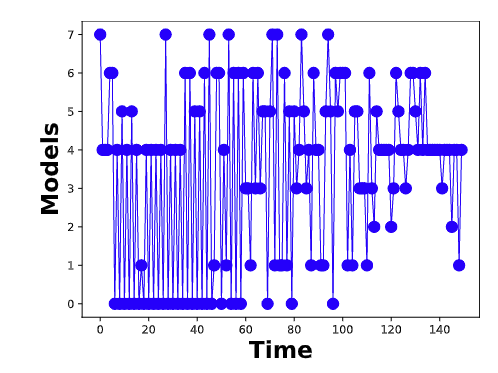

An example figure of the computed mean and variance of

EfficientNet-b7is given as: