Reference:

P. Vecchiotti, N. Ma, S. Squartini, and G. J. Brown, “End-to-end binaural sound localisation from the raw waveform,” in 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New York, NY, USA, 2019, pp. 451–455.

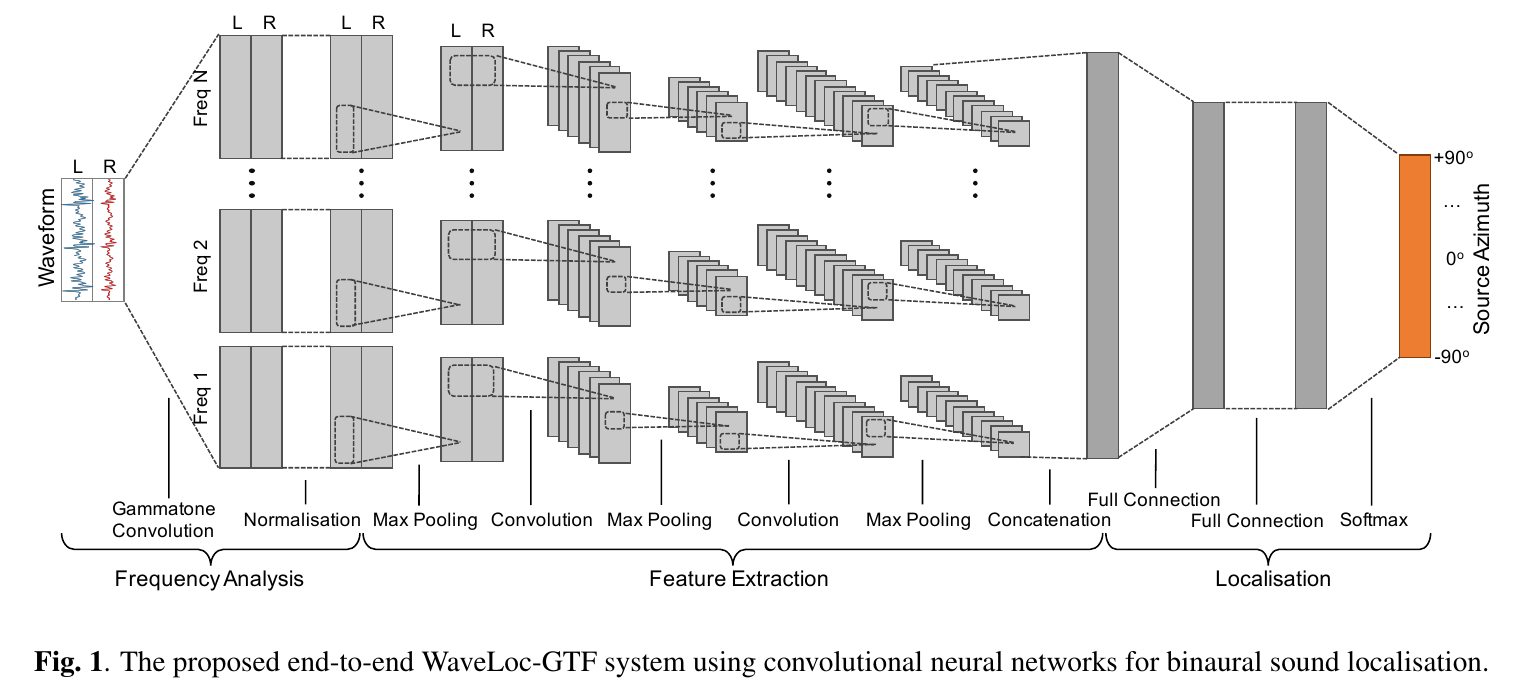

Only WaveLoc-GTF (the WaveLoc variant whose first layer is a gammatone filterbank) is implemented.


BRIR

Surrey binaural room impulse response (BRIR) database, which includes an anechoic room and 4 reverberant rooms.

| Room | A | B | C | D |
|---|---|---|---|---|
| RT60 (s) | 0.32 | 0.47 | 0.68 | 0.89 |
| DRR (dB) | 6.09 | 5.31 | 8.82 | 6.12 |

Sound source

TIMIT database

Sentences per azimuth

| Train | Validate | Evaluate |
|---|---|---|
| 24 | 6 | 15 |

For each reverberant room, the remaining 3 reverberant rooms and the anechoic room are used for training.
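The leave-one-room-out protocol above can be sketched as follows. This is a minimal illustration, not code from this repository: the room labels and the `room_splits` helper are hypothetical names chosen for the example.

```python
# Hypothetical room labels: the four Surrey reverberant rooms plus the anechoic room.
REVERB_ROOMS = ["A", "B", "C", "D"]
ANECHOIC = "anechoic"


def room_splits(reverb_rooms=REVERB_ROOMS, anechoic=ANECHOIC):
    """Yield (test_room, train_rooms) pairs for leave-one-room-out evaluation.

    Each reverberant room is held out in turn; the anechoic room and the
    remaining reverberant rooms form the training set.
    """
    for test_room in reverb_rooms:
        train_rooms = [anechoic] + [r for r in reverb_rooms if r != test_room]
        yield test_room, train_rooms


if __name__ == "__main__":
    for test_room, train_rooms in room_splits():
        print(f"test on {test_room}: train on {train_rooms}")
```

Each reverberant room is therefore evaluated by a model that never saw that room during training.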

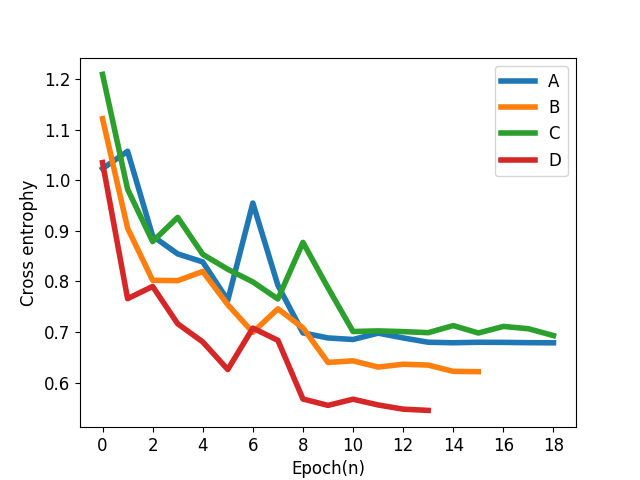

Training curves

Root mean square error (RMSE) of the estimated azimuth, in degrees, is used as the performance metric. For each reverberant room, the evaluation was repeated 3 times, with the test set regenerated each time, to obtain more stable results.
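A minimal sketch of the metric, assuming azimuths are given in degrees and that the 3 repeated evaluations are combined by averaging their per-run RMSE (the combination rule is an assumption; the text above does not state it). The helper names are hypothetical.

```python
import math


def rmse(estimated, reference):
    """Root mean square error between estimated and reference azimuths (degrees)."""
    if not estimated or len(estimated) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))


def mean_rmse_over_runs(runs):
    """Average RMSE over repeated evaluations.

    `runs` is a list of (estimated, reference) pairs, one per regenerated
    test set. Averaging the per-run RMSE is an assumption about how the
    repeats are combined.
    """
    return sum(rmse(est, ref) for est, ref in runs) / len(runs)
```

For example, `rmse([0, 10], [0, 0])` is the square root of 50, about 7.07 degrees.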

Since the binaural signals are fed directly to the model without additional preprocessing, and speech may contain short pauses, localization results are reported per chunk rather than per frame. Each chunk consists of 25 consecutive frames.
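One way to turn frame-level outputs into chunk-level estimates is sketched below. This is an assumption-laden illustration, not necessarily what this repository does: it assumes the model outputs a posterior over candidate azimuths for every frame, that chunks do not overlap, that a trailing partial chunk is dropped, and that the chunk estimate is the azimuth with the highest posterior averaged over the chunk. `chunk_estimates` is a hypothetical helper.

```python
CHUNK_SIZE = 25  # consecutive frames per chunk, as stated above


def chunk_estimates(frame_posteriors, azimuths, chunk_size=CHUNK_SIZE):
    """Turn frame-level azimuth posteriors into one estimate per chunk.

    frame_posteriors: list of per-frame probability lists, one value per
        candidate azimuth (hypothetical model output format).
    azimuths: candidate azimuths in degrees, aligned with the posterior values.
    Frames are grouped into non-overlapping chunks; a trailing partial chunk
    is dropped (an assumption).
    """
    estimates = []
    n_chunks = len(frame_posteriors) // chunk_size
    for c in range(n_chunks):
        chunk = frame_posteriors[c * chunk_size:(c + 1) * chunk_size]
        # Average the posteriors over all frames in the chunk.
        mean = [sum(frame[k] for frame in chunk) / len(chunk)
                for k in range(len(azimuths))]
        # Pick the azimuth with the highest averaged posterior.
        best = max(range(len(azimuths)), key=lambda k: mean[k])
        estimates.append(azimuths[best])
    return estimates
```

Averaging over 25 frames lets frames with reliable cues outvote frames that fall in pauses, which is the motivation given above for chunk-level reporting.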

| Reverberant room | A | B | C | D |
|---|---|---|---|---|
| My result | 1.5 | 2.0 | 1.4 | 2.7 |
| Result in paper | 1.5 | 3.0 | 1.7 | 3.5 |

Requirements

- TensorFlow 1.14
- pysofa (installable via pip)
- BasicTools (in my other repository)