The official implementation of the paper:

Vista: A Generalizable Driving World Model with High Fidelity and Versatile Controllability

Shenyuan Gao, Jiazhi Yang, Li Chen, Kashyap Chitta, Yihang Qiu, Andreas Geiger, Jun Zhang, Hongyang Li

[arXiv](https://arxiv.org/abs/2405.17398) [video demos]

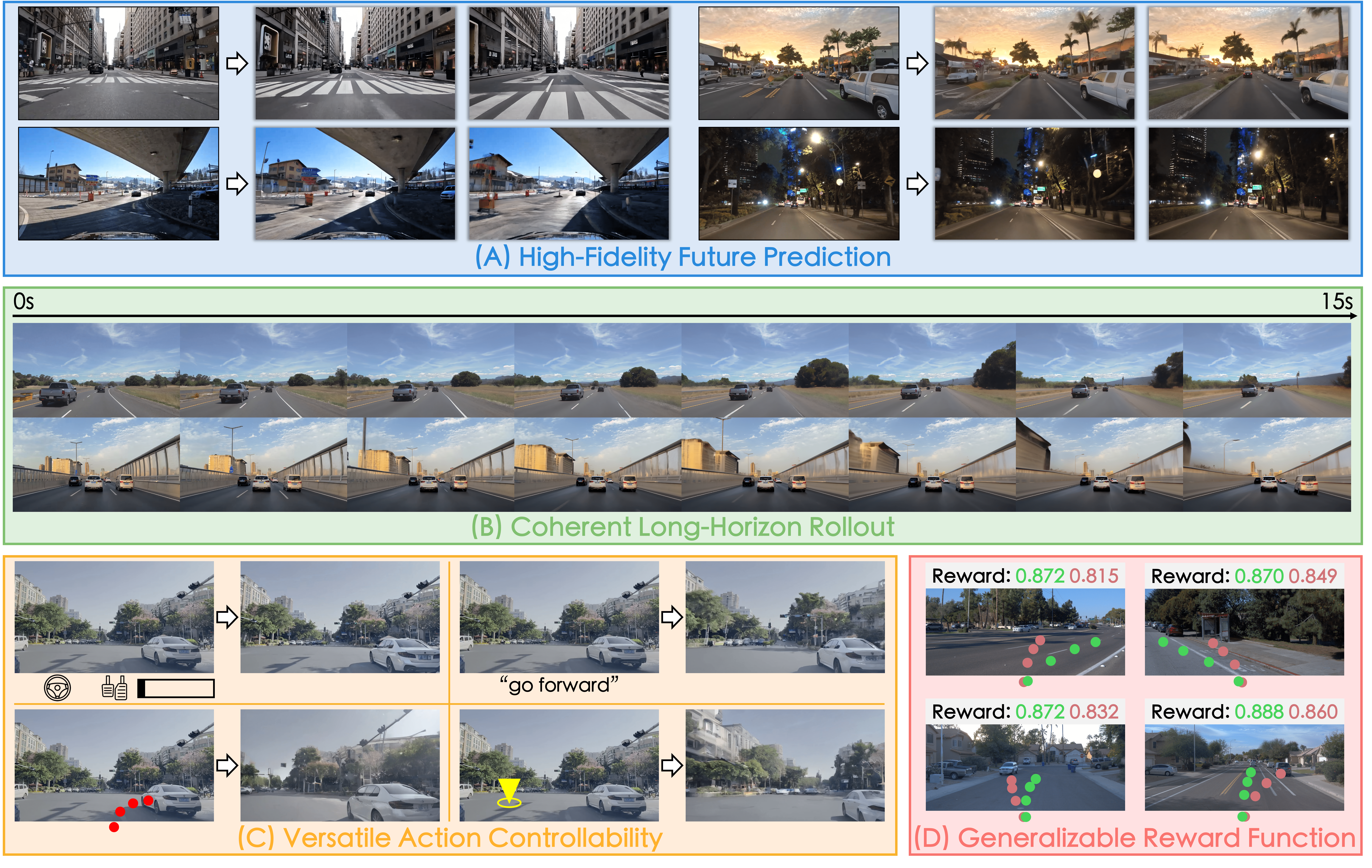

🔖 Vista is a generalizable driving world model that can:

- Predict high-fidelity futures in various scenarios.

- Extend its predictions to continuous and long horizons.

- Execute multi-modal actions (steering angles, speeds, commands, trajectories, goal points).

- Provide rewards for different actions without accessing ground truth actions.

News:

- [2024/05/28] We released the implementation of our model.

- [2024/05/28] We released our paper on arXiv.

TODO list:

- Installation, training, and sampling scripts (within one week).

- Model weights release.

- Model configuration optimization.

- More detailed instructions.

- Online demo for interaction.

Our implementation is based on generative-models from Stability AI. Thanks for their open-source work!

If any part of our paper or code helps your research, please consider citing us and starring our repository.

    @article{gao2024vista,
      title={Vista: A Generalizable Driving World Model with High Fidelity and Versatile Controllability},
      author={Shenyuan Gao and Jiazhi Yang and Li Chen and Kashyap Chitta and Yihang Qiu and Andreas Geiger and Jun Zhang and Hongyang Li},
      journal={arXiv preprint arXiv:2405.17398},
      year={2024}
    }

    @inproceedings{yang2024genad,
      title={{Generalized Predictive Model for Autonomous Driving}},
      author={Jiazhi Yang and Shenyuan Gao and Yihang Qiu and Li Chen and Tianyu Li and Bo Dai and Kashyap Chitta and Penghao Wu and Jia Zeng and Ping Luo and Jun Zhang and Andreas Geiger and Yu Qiao and Hongyang Li},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      year={2024}
    }