BionicGPT is an on-premise replacement for ChatGPT, offering the advantages of Generative AI while maintaining strict data confidentiality.

BionicGPT can run on your laptop or scale into the data center.

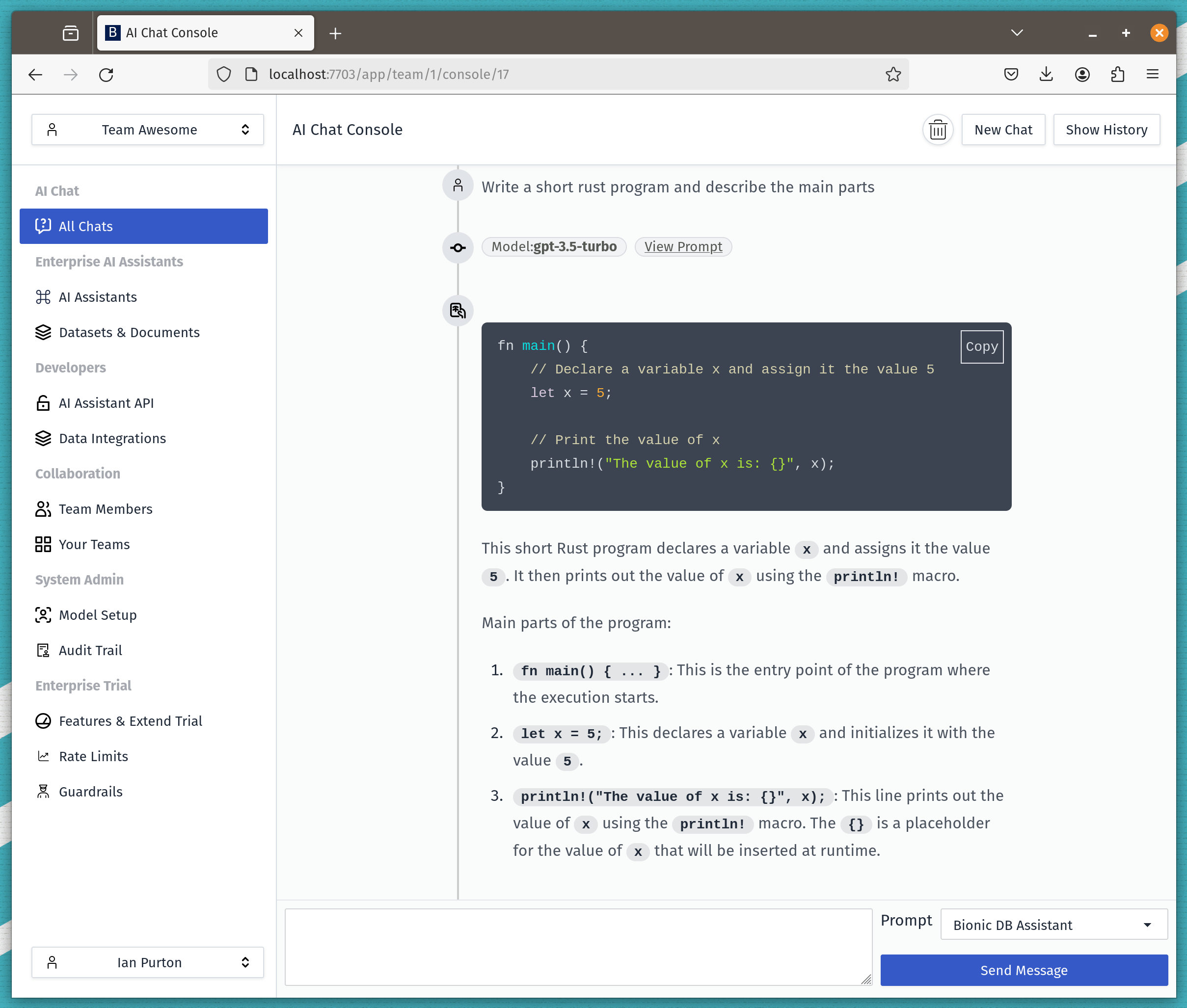

- 🖥️ Intuitive Interface: Our chat interface takes inspiration from ChatGPT, ensuring a user-friendly experience.

- 🌈 Theme Customization: The theme for Bionic is completely customizable, allowing you to brand Bionic as you like.

- ⚡ Ultra Fast UI: Enjoy fast and responsive performance from our Rust-based UI.

- 📜 Chat History: Effortlessly access and manage your conversation history.

- 🤖 AI Assistants: Users can create assistants that work with their own data to enhance the AI.

- 🗨️ Share Assistants with Team Members: Generate and share assistants seamlessly between users, enhancing collaboration and communication.

- 📋 RAG Pipelines: Assistants are full-scale, enterprise-ready RAG (retrieval-augmented generation) pipelines that can be launched in minutes.

- 📑 Any Documents: 80% of enterprise data exists in difficult-to-use formats like HTML, PDF, CSV, PNG, PPTX, and more. We support all of them.

- 💾 No Code: Configure the embeddings engine and chunking algorithms entirely through our UI.

- 🗨️ System Prompts: Configure system prompts to get the LLM to reply in the way you want.

- 👫 Teams: Your company is made up of teams of people, and Bionic is organised around that same structure.

- 👫 Invite Team Members: Teams manage their own membership in a controlled way.

- 🙋 Manage Teams: Manage who has access to Bionic with your SSO system.

- 👬 Virtual Teams: Create teams within teams to

- 🚠 Switch Teams: Switch between teams whilst still keeping data isolated.

- 🚓 RBAC: Use your SSO system to configure which features users have access to.

- 👮 SAST: Our CI/CD pipeline runs Static Application Security Testing (SAST) so we can identify risks before the code is built.

- 📢 Authorization RLS: We use Row Level Security (RLS) in Postgres as an additional check to ensure data is not leaked to unauthorized users.

- 🚔 CSP: Our Content Security Policy uses the strictest settings to help block cross-site scripting and other injection attacks.

- 🐳 Minimal containers: We build containers from the empty `scratch` base image whenever possible to limit supply chain attacks.

- ⏳ Non-root containers: We run containers as non-root users to limit lateral movement during an attack.

- 👮 Audit Trail: See who did what and when.

- ⏰ Postgres Roles: Our Postgres connections run with the minimum permissions they need.

- 📣 SIEM integration: Integrate with your SIEM system for threat detection and investigation.

- ⌛ Timing-attack resistance (API keys): Coming soon.

- 📭 SSO: Rather than building our own authentication, we use industry-leading, secure open source IAM systems.

- 👮 Secrets Management: Our Kubernetes operator creates secrets using secure algorithms at deployment time.

- 📈 Observability API: Compatible with Prometheus for measuring load and usage.

- 🤖 Dashboards: Create dashboards with Grafana for an overview of your whole system.

- 📚 Monitor Chats: All questions and responses are recorded and available in the Postgres database.

- 📈 Fairly share resources: Without token limits, it's easy for your models to become overloaded.

- 🔒 Reverse Proxy: All models are protected by our reverse proxy, which lets you set limits and ensure fair usage across your users.

- 👮 Role Based: Apply token usage limits based on a user's role from your IAM system.

- 🔐 Assistants API: Any assistant you create can easily be turned into an OpenAI-compatible API.

- 🔑 Key Management: Users can create API keys for Assistants they have access to.

- 🔏 Throttling limits: All API keys follow the user's throttling limits, ensuring fair access to the models.

- 📁 Batch Guardrails: Apply rules to documents uploaded by our batch data pipeline.

- 🏅 Streaming Guardrails: LLMs deliver results as streams, so we can apply rules in real time as the stream flows past.

- 👾 Prompt injection: We can guard against prompt injection attacks and many other threats.

- 🤖 Full support for open source models running locally or in your data center.

- 🌟 Multiple Model Support: Install and manage as many models as you want.

- 👾 Easy Switch: Seamlessly switch between different chat models for diverse interactions.

- ⚙️ Many Models Conversations: Effortlessly engage with various models simultaneously, harnessing their unique strengths for optimal responses. Enhance your experience by leveraging a diverse set of models in parallel.

- ⚠️ Configurable UI: Grant or withhold access to specific features based on the roles you assign users in your IAM system.

- 🚦 With limits: Apply token usage limits based on a user's role.

- 🎫 Fully secured: Rules are enforced on our server and, as defence in depth, checked again with Postgres RLS.

- 📤 100s of Sources: With our Airbyte integration you can batch upload data from sources such as SharePoint, NFS, FTP, Kafka and more.

- 📥 Batching: Run uploads once a day, every hour, or on whatever schedule you choose.

- 📈 Real time: Capture data in real time to ensure your models are always using the latest data.

- 🚆 Manual Upload: Users can manually upload data, so RAG pipelines can be set up in minutes.

- 🍟 Datasets: Data is stored in datasets, and our security ensures data can't leak between users or teams.

- 📚 OCR: We can process documents using OCR to unlock even more data.
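Per-role token limits enforced by a reverse proxy, with API keys inheriting their owner's limits, are often implemented with a token bucket. The following is a minimal sketch under that assumption, not Bionic's actual implementation: the role names, limit values, `TokenBucket` class and `allow_request` helper are all hypothetical, and state is kept in one process's memory rather than a shared store.

```python
import time
from dataclasses import dataclass


@dataclass
class RoleLimit:
    # Hypothetical per-role limit: bucket size and refill rate (tokens per second).
    capacity: int
    refill_per_second: float


# Hypothetical role names, as they might arrive from an IAM/SSO system.
ROLE_LIMITS = {
    "admin": RoleLimit(capacity=100_000, refill_per_second=500.0),
    "user": RoleLimit(capacity=10_000, refill_per_second=50.0),
}


class TokenBucket:
    """Tracks how many LLM tokens a caller may still spend."""

    def __init__(self, limit: RoleLimit, now=time.monotonic):
        self.limit = limit
        self.now = now  # injectable clock, handy for testing
        self.tokens = float(limit.capacity)  # bucket starts full
        self.last = now()

    def _refill(self) -> None:
        # Add tokens in proportion to elapsed time, never exceeding capacity.
        current = self.now()
        elapsed = current - self.last
        self.last = current
        self.tokens = min(
            float(self.limit.capacity),
            self.tokens + elapsed * self.limit.refill_per_second,
        )

    def try_consume(self, requested: int) -> bool:
        """Deduct tokens and return True if the request fits, else return False."""
        self._refill()
        if requested <= self.tokens:
            self.tokens -= requested
            return True
        return False


# One bucket per user; an API key would reuse its owner's bucket,
# so requests made with the key count against the same limit.
buckets: dict[str, TokenBucket] = {}


def allow_request(user_id: str, role: str, requested_tokens: int) -> bool:
    bucket = buckets.get(user_id)
    if bucket is None:
        bucket = buckets[user_id] = TokenBucket(ROLE_LIMITS[role])
    return bucket.try_consume(requested_tokens)
```

A request that gets `False` would typically be answered with HTTP 429 (Too Many Requests). Refilling continuously, rather than resetting a counter once per period, avoids a burst of traffic at every period boundary.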
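Because assistants are exposed through an OpenAI-compatible API, a client that speaks the OpenAI chat completions format should be able to call them. The sketch below uses only the Python standard library and assumes the standard OpenAI `/chat/completions` route and response shape; the base URL, the assistant name passed as `model`, and the API key are placeholders, not real Bionic values.

```python
import json
import urllib.request

# Placeholder values (hypothetical): substitute your own host, assistant and key.
BASE_URL = "https://bionic.example.com/v1"
API_KEY = "your-assistant-api-key"


def build_chat_request(question: str, model: str = "my-assistant") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(question: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(question)) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes it easy to inspect or log the exact payload; in practice the official OpenAI client libraries can usually be pointed at a compatible base URL instead.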
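A streaming guardrail can only inspect text as it passes. A minimal sketch, assuming a simple regex rule (the card-number pattern, the `HOLD_BACK` size and the `guarded_stream` function are hypothetical, not Bionic's API): it withholds a short tail of the stream so that a match split across two chunks is still caught before any of it is released.

```python
import re
from typing import Iterable, Iterator

# Hypothetical rule: block anything that looks like a 16-digit card number.
BLOCKED = [re.compile(r"\b(?:\d[ -]?){15}\d\b")]

# Characters withheld from the client; must be at least the longest possible
# match minus one (31 - 1 for the pattern above) to catch split matches.
HOLD_BACK = 32

BLOCK_MESSAGE = "[response blocked by guardrail]"


def guarded_stream(chunks: Iterable[str], rules=BLOCKED, hold_back: int = HOLD_BACK) -> Iterator[str]:
    """Yield LLM output chunks, stopping early if any rule matches."""
    pending = ""
    for chunk in chunks:
        pending += chunk
        if any(rule.search(pending) for rule in rules):
            yield BLOCK_MESSAGE
            return
        # Release everything except the last `hold_back` characters.
        if len(pending) > hold_back:
            yield pending[:-hold_back]
            pending = pending[-hold_back:]
    if pending:
        yield pending
```

Text that has already been released cannot be recalled, so the hold-back window trades a small, fixed delay for catching matches that straddle chunk boundaries. Because each check only sees the current window, this sketch errs towards blocking at the window edges.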

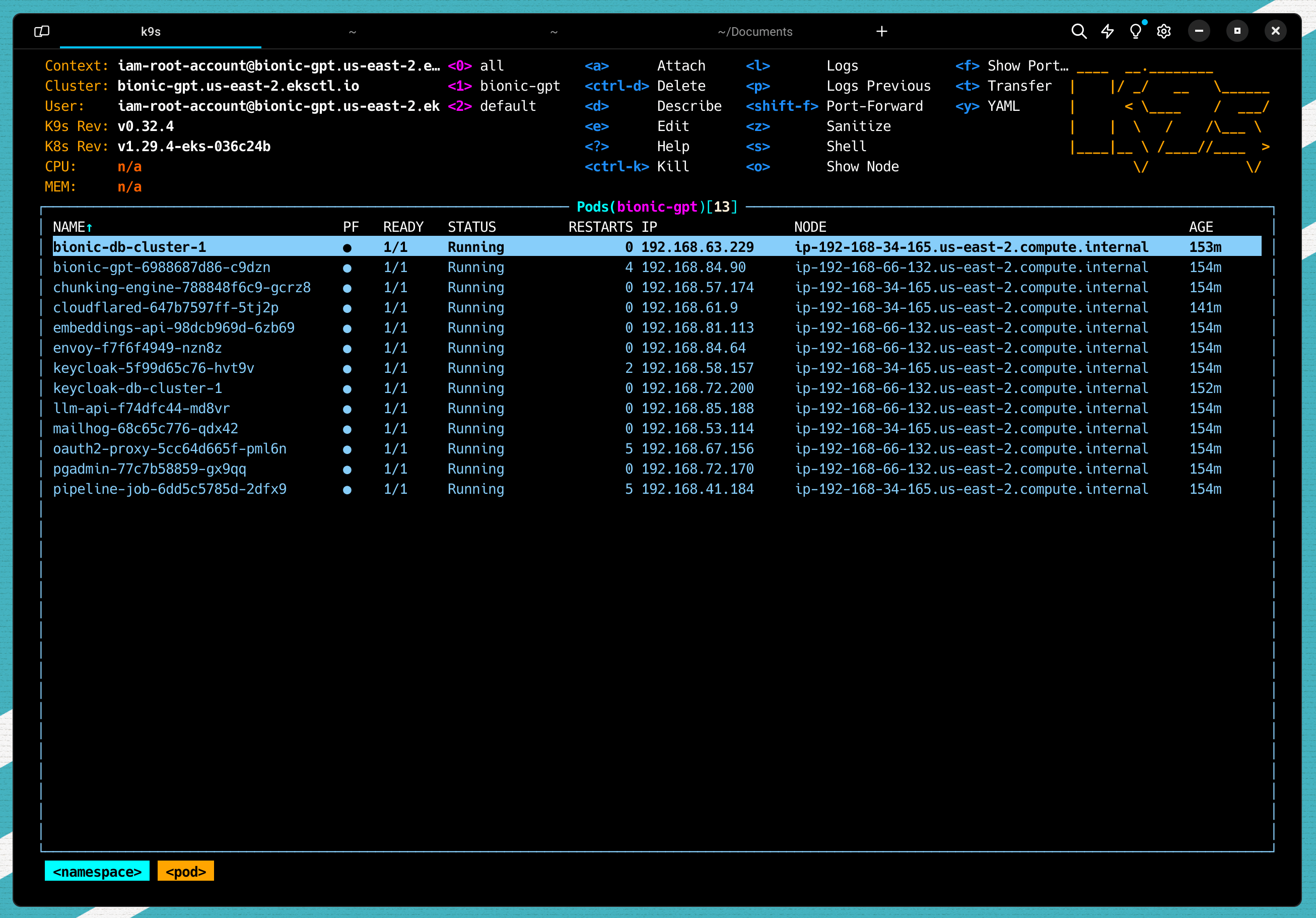

- 🚀 Effortless Setup: Install seamlessly using Kubernetes (k3s, Docker Desktop or the cloud) for a hassle-free experience.

- 🌟 Continuous Updates: We are committed to improving Bionic with regular updates and new features.

Follow our guide to running BionicGPT on your local machine.

For companies that need better security, user management and professional support

This covers:

- ✅ Help with integrations

- ✅ Feature Prioritization

- ✅ Custom Integrations

- ✅ LTS (Long Term Support) Versions

- ✅ Professional Support

- ✅ Custom SLAs

- ✅ Secure access with Single Sign-On

- ✅ Continuous Batching

- ✅ Data Pipelines

- Schedule a Chat 👋

- Connect on LinkedIn 💭

- Email us ✉️ ian@bionic-gpt.com / dio@bionic-gpt.com

BionicGPT is optimized to run on Kubernetes and implements the full pipeline of LLM fine-tuning, from data acquisition to user interface.