Ingesting Massive Volumes, Unleashing Real-Time Queries


LogFlow Insight is a robust log ingester and real-time log analysis tool.

The key features are:

- Easy method to store logs: Simple HTTP server that can accept logs from any device or service

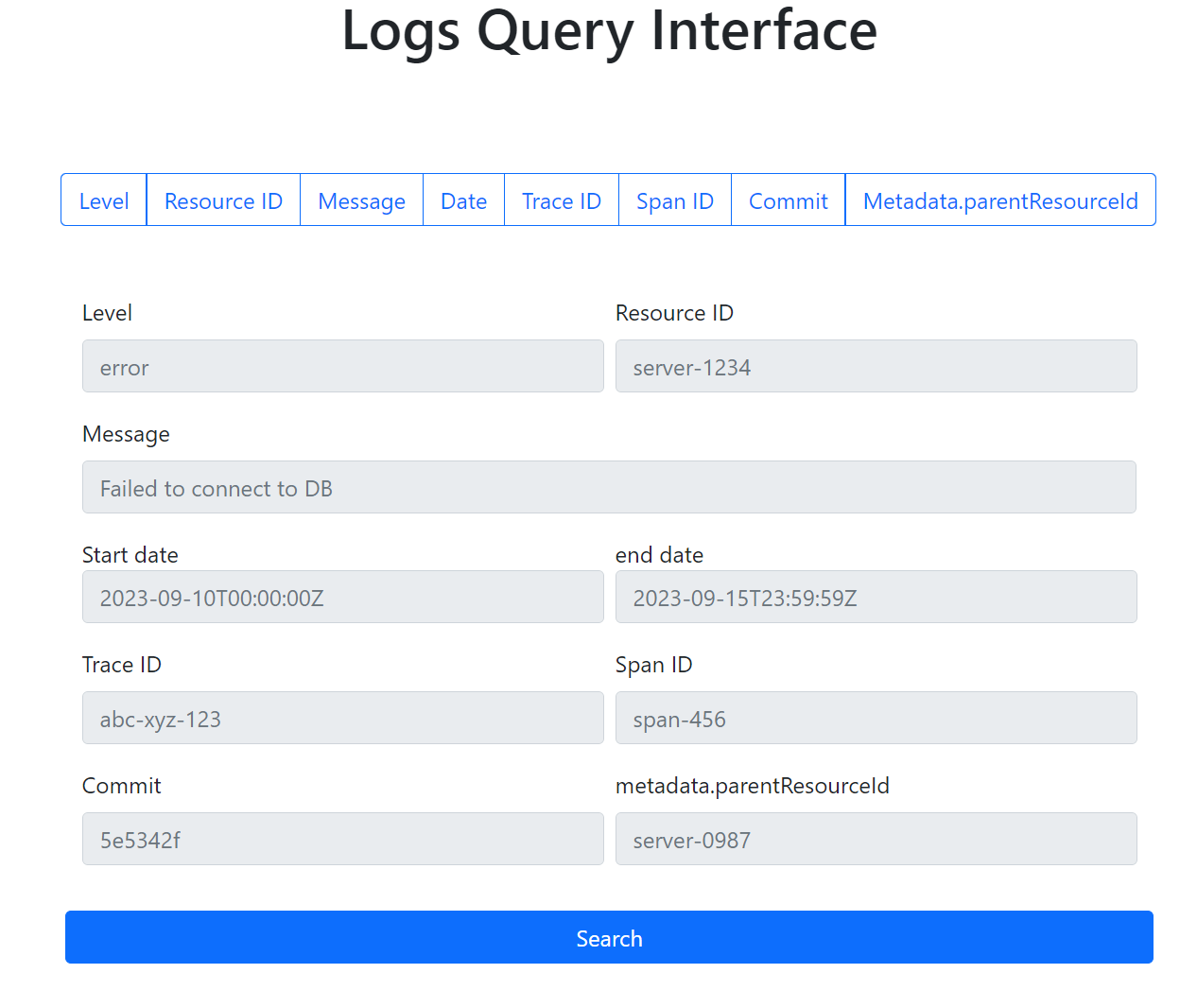

- Intuitive UI: Effortlessly query logs based on multiple filters

- High Throughput: Able to handle a large volume of log ingestion

- Real-Time Analysis: Logs are available to query as soon as they are ingested

- Speed: Get query results at lightning-fast speed

- High Availability: Deploy once and use from anywhere, anytime

- Scalable: Scale horizontally with simple configuration tweaks

- Easy Setup: Follow a few simple installation steps to get started quickly

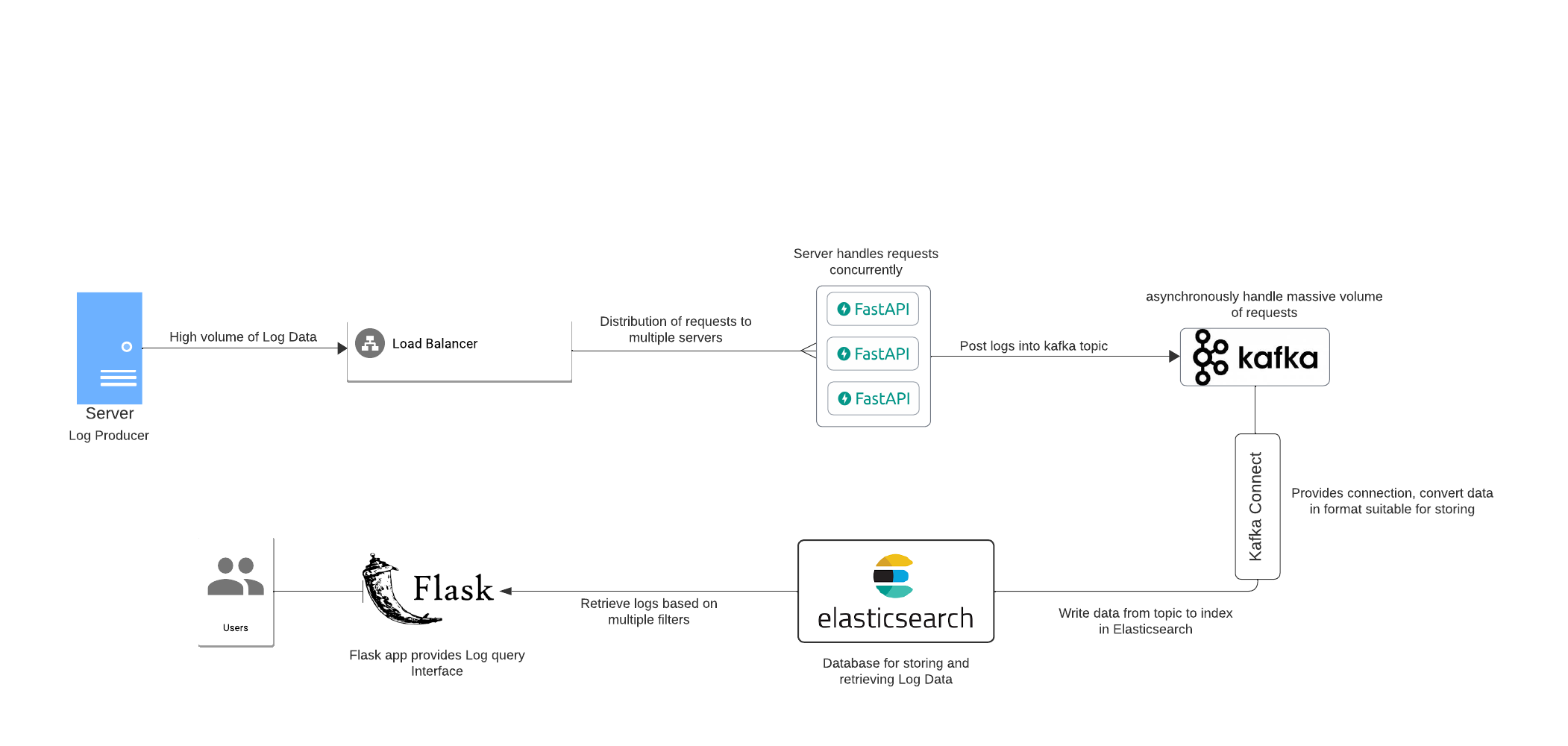

The software is designed to work as a distributed system with a client-facing server for log ingestion (using FastAPI) and a UI frontend for searching logs (using Flask). Kafka brokers asynchronously handle the high volume of logs sent by the ingester server. Kafka-Connect consumes logs from Kafka topics, serializes the data, and sends it for storage. Elasticsearch is used as the database for its quick indexing and full-text search capabilities. The reasons for choosing each component are given below:

- Elasticsearch:

- Lucene Engine: Elasticsearch is built on top of Apache Lucene, which uses an inverted index, a data structure optimized for quick full-text search. Elasticsearch also supports aggregations for data analysis.

- Distributed and Sharded Architecture: Elasticsearch distributes data across multiple cluster nodes and divides it into shards, allowing parallel processing and fault tolerance.

- Near Real-Time Search: Elasticsearch offers near real-time search. Newly indexed data becomes searchable after the next index refresh, which by default happens about once per second.

- Kafka:

- High Volume: Kafka is designed for high-throughput scenarios. It appends messages to a log using sequential disk I/O and relies on the operating system's page cache, which lets it handle massive message volumes with low latency.

- Fault-Tolerance: Kafka replicates data across multiple brokers, ensuring that even if some nodes fail, data remains available.

- Scalability: Kafka is horizontally scalable, allowing it to handle enormous volumes of data by distributing it across multiple nodes. With replication and suitable acknowledgement settings, it can be configured to guard against data loss.

- Kafka-Connect:

- Batch-Processing: Kafka Connect uses batch processing to collect and write data in larger chunks, which increases throughput and reduces the load on the database.

- Reliability: Kafka-Connect includes support for fault recovery, ensuring that if a connector or node fails, the system can recover and resume operations.

- Schema Evolution: Kafka Connect supports schema evolution, allowing for changes in data structure over time without disrupting the data pipeline.

- FastAPI:

- Concurrency: FastAPI leverages Python's asyncio to handle asynchronous operations. It allows handling multiple concurrent requests without blocking, maximizing the server's efficiency.

- Performance: FastAPI is built on top of Starlette and Pydantic. Starlette is a high-performance web framework, while Pydantic provides quick data serialisation.

- Flask:

- Lightweight and Flexible: Flask is designed as a minimal framework to get started. It has the support of multiple extensions for different use cases and integration.

- Fewer Dependencies: Flask has minimal dependencies beyond Python itself. This makes deployment and maintenance easier.

- Quick Setup: Flask allows quick setup to get a basic web server up and running with just a few lines of code.
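To make the roles described above more concrete, here is a minimal, standard-library-only sketch of the same flow. It is not the project's code: an in-memory `Queue` stands in for a Kafka topic, `drain_in_batches` stands in for the batching done by Kafka Connect, and `TinyIndex` is a toy inverted index standing in for Elasticsearch. All of these names, and the `message` field, are assumptions made for illustration.

```python
from collections import defaultdict
from queue import Empty, Queue


class TinyIndex:
    """Toy inverted index: maps each token to the ids of the logs that contain it."""

    def __init__(self):
        self.docs = []
        self.postings = defaultdict(set)

    def bulk_index(self, batch):
        # Index a whole batch in one call, the way a sink writes in bulk.
        for doc in batch:
            doc_id = len(self.docs)
            self.docs.append(doc)
            for token in doc["message"].lower().split():
                self.postings[token].add(doc_id)

    def search(self, term):
        # A lookup is one dictionary access; no scan over all stored logs.
        ids = self.postings.get(term.lower(), set())
        return [self.docs[i] for i in sorted(ids)]


def drain_in_batches(buffer, index, batch_size):
    """Move everything currently in the buffer into the index; return the batch count."""
    batches, batch = 0, []
    while True:
        try:
            batch.append(buffer.get_nowait())
        except Empty:
            break
        if len(batch) == batch_size:
            index.bulk_index(batch)
            batches += 1
            batch = []
    if batch:  # flush the final, partially filled batch
        index.bulk_index(batch)
        batches += 1
    return batches


buffer = Queue()  # stands in for a Kafka topic (assumption)
for text in ["db timeout", "login ok", "cache timeout", "disk full", "login failed"]:
    buffer.put({"message": text})  # "message" is a hypothetical field name

index = TinyIndex()  # stands in for Elasticsearch (assumption)
batch_count = drain_in_batches(buffer, index, batch_size=2)
hits = index.search("timeout")
```

The ingester only has to enqueue a log and return, while the consumer writes to the index in batches; this decoupling is what lets the real system absorb bursts of traffic.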

Below is the basic diagram of the system. I highly recommend checking out my video explanation of the complete project here to get a better understanding.

Note that I have not used a load balancer in the demo. I highly recommend adding one if you are going to production.

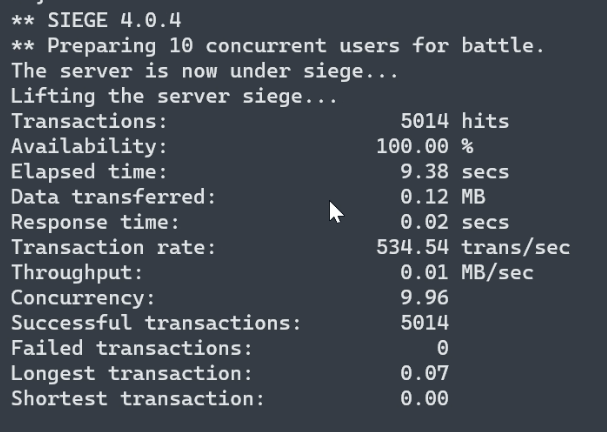

A load test has been performed on this system using siege. The test was run on a Linux-based system with 16GB RAM, with all services running in Docker containers. Note that a single instance each of FastAPI, Elasticsearch, and the Kafka broker was used, without load balancing.

I have created a YouTube video demonstrating this system; the link is here.

To get a local copy up and running, follow these simple steps.

Make sure that you have Docker installed. Docker makes it easy to run the above components in containers. If you don't have Docker, visit the official site to install it.

- Clone the repo

      git clone https://github.com/biswajit-k/log-ingester-elasticsearch.git

- Head into the /connect-plugins folder and unzip the .zip file inside the folder itself

      cd connect-plugins
      unzip confluentinc-kafka-connect-elasticsearch-14.0.11.zip

- Get the components running in containers according to the docker-compose.yaml configuration

      docker compose up -d

Wait until kafka-connect becomes healthy. The following services will then be available:

- Log Ingester Server: http://localhost:3000
- Query Interface: http://localhost:5000
- Elasticsearch: http://localhost:9200

You won't need to do anything with Elasticsearch unless you are developing this software.
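If you want to script the wait after starting the containers, a small polling helper is one option. The sketch below is an assumption-laden illustration, not part of the repository: `http_ok` and `wait_until` are hypothetical helper names, and probing http://localhost:3000 only confirms that the ingester answers. The health of kafka-connect itself is reported by Docker, so this complements rather than replaces checking the container status.

```python
import time
import urllib.error
import urllib.request


def http_ok(url, timeout=2.0):
    """Return True if a GET to `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeouts, and HTTP error statuses all count as "not ready".
        return False


def wait_until(probe, attempts=30, delay=2.0, sleep=time.sleep):
    """Call `probe` until it returns True.

    Returns the number of attempts used, or None if every attempt failed.
    """
    for attempt in range(1, attempts + 1):
        if probe():
            return attempt
        if attempt < attempts:
            sleep(delay)  # pause between attempts, but not after the last one
    return None
```

Calling `wait_until(lambda: http_ok("http://localhost:3000"))` would then block until the ingester responds or the attempts run out.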

    ├── docker-compose.yaml
    ├── .env
    ├── README.md
    ├── LICENSE.txt
    ├── example_log.json
    ├── connect-plugins
    │   └── confluentinc-kafka-connect-elasticsearch-14.0.11.zip
    ├── ingester
    │   ├── main.py
    │   ├── models.py
    │   ├── config.py
    │   ├── requirements.txt
    │   ├── .local.env
    │   └── dockerfile
    └── query_interface
        ├── templates
        │   └── index.html
        ├── app.py
        ├── models.py
        ├── .local.env
        ├── requirements.txt
        └── dockerfile

The .env file is used for global environment variables when running services inside containers.

The .local.env file contains environment variables for a service when it is run locally, outside a container.

- Ingesting Logs:

  You can check whether the ingester server has started by sending a GET request to http://localhost:3000; it should return a simple hello world response. Now you are ready to ingest logs. Send a POST request to the server at http://localhost:3000/logs in the JSON format specified in the example_log.json file.

- Query Interface:

  Simply head over to http://localhost:5000 to access the interface.

Note: You can tailor the log format to your needs by changing the data model in models.py and app/main.py in both the ingester and query_interface folders.
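Sending a log to the ingester from a script could look roughly like the sketch below, which uses only the Python standard library. The endpoint is the one named in the usage steps; the helper names and the payload fields are placeholders, so substitute the fields from example_log.json before using it.

```python
import json
import urllib.request

INGEST_URL = "http://localhost:3000/logs"  # endpoint named in the usage steps


def build_log_request(log, url=INGEST_URL):
    """Build (but do not send) a JSON POST request carrying one log entry."""
    body = json.dumps(log).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def send_log(log, url=INGEST_URL, timeout=5.0):
    """Send the log and return the HTTP status code from the ingester."""
    with urllib.request.urlopen(build_log_request(log, url), timeout=timeout) as response:
        return response.status


# Placeholder payload (assumption): replace with the fields from example_log.json.
sample_log = {"level": "error", "message": "Failed to connect to DB"}
request = build_log_request(sample_log)
```

With the containers running, `send_log(sample_log)` performs the actual request.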

For a demo, please refer to the Demo Video.

Some further improvements in design and implementation are listed below:

Enhancing Durability

Elasticsearch instances can go down under massive loads of data. Backing up data also takes time, and the database would be unavailable during that period, so log data sent in that window could be lost. If log data is valuable and we can't afford to lose any of it, we could add a transactional database that stores these logs in parallel. Being ACID compliant, a transactional database would ensure that the data is not lost if Elasticsearch instances go down.
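One possible shape for this idea is sketched below, with SQLite standing in for the transactional database purely because it ships with Python. The table layout, the `indexed` flag, and the `index_fn` callback (which represents whatever writes to the search index) are all assumptions for illustration and not part of the current implementation.

```python
import json
import sqlite3


def open_store(path=":memory:"):
    """Open the SQLite database and create the log table if needed."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS logs ("
        "id INTEGER PRIMARY KEY, body TEXT NOT NULL, indexed INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def ingest(conn, log, index_fn):
    """Commit the log to SQLite first, then try to index it for search."""
    with conn:  # commits on success, rolls back on error
        cursor = conn.execute("INSERT INTO logs (body) VALUES (?)", (json.dumps(log),))
    log_id = cursor.lastrowid
    try:
        index_fn(log)
    except Exception:
        return log_id  # search index is down; the row stays marked as not indexed
    with conn:
        conn.execute("UPDATE logs SET indexed = 1 WHERE id = ?", (log_id,))
    return log_id


def pending(conn):
    """Return logs that still need to be replayed into the search index."""
    rows = conn.execute("SELECT body FROM logs WHERE indexed = 0 ORDER BY id")
    return [json.loads(body) for (body,) in rows]
```

Because the log is committed before indexing is attempted, an outage of the search index leaves the entry recoverable through `pending`, at the cost of one extra write per log.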

Improving Elasticsearch Fault Tolerance

Running Elasticsearch as a cluster with replicas ensures that if an instance goes down, others remain available to index logs and serve search results.
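As a rough illustration, replica counts in Elasticsearch are set per index through the `number_of_shards` and `number_of_replicas` settings. The sketch below only builds the create-index request; the index name `logs`, the chosen counts, and the helper name are assumptions, and replicas are only allocated once the cluster has more than one node.

```python
import json
import urllib.request


def replica_settings_request(index, shards, replicas, base_url="http://localhost:9200"):
    """Build a create-index request that sets shard and replica counts."""
    body = {"settings": {"number_of_shards": shards, "number_of_replicas": replicas}}
    return urllib.request.Request(
        f"{base_url}/{index}",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


# "logs" is a hypothetical index name; use the index the pipeline actually writes to.
request = replica_settings_request("logs", shards=3, replicas=1)
```

Passing the request to `urllib.request.urlopen` would send it to the cluster.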

See the contribution section on how to propose improvements.

Contributions are what make the open-source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project
- Create your Feature Branch (git checkout -b feature/AmazingFeature)
- Commit your Changes (git commit -m 'Add some AmazingFeature')
- Push to the Branch (git push origin feature/AmazingFeature)
- Open a Pull Request

Distributed under the MIT License. See LICENSE.txt for more information.

Biswajit Kaushik - linkedin, biswajitkaushik02@gmail.com

Project Link: https://github.com/biswajit-k/log-ingester-elasticsearch