Reproduce different architectural patterns for Confluent Cluster Disaster Recovery and their failover and failback procedures.

Active-Active Platform over Confluent Cloud with Clients hosted on K8s Cluster with automatic restart on config changes

- Two Confluent Cloud clusters following the active-active DR pattern.

- A Kubernetes cluster deployed (connectivity to the CCloud clusters is required).

- Reloader deployed in your preferred namespace (the default namespace can be a good option, so that any other namespace has access to it). For a standard deployment, run:

kubectl apply -f https://raw.githubusercontent.com/stakater/Reloader/master/deployments/kubernetes/reloader.yaml
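Before relying on Reloader, you can wait for its rollout to finish. This is a minimal sketch that assumes the standard manifest creates a Deployment named `reloader-reloader` in the `default` namespace; if yours differs, find it with `kubectl get deployments -A | grep reloader`. The function name is hypothetical.

```bash
# Wait until the Reloader Deployment has finished rolling out.
# Assumption: the standard manifest creates deployment/reloader-reloader in "default".
wait_for_reloader() {
  local namespace="${1:-default}"
  local deployment="${2:-reloader-reloader}"
  kubectl rollout status "deployment/${deployment}" \
    --namespace "${namespace}" --timeout=120s
}

# Usage:
#   wait_for_reloader                       # defaults above
#   wait_for_reloader tools my-reloader     # custom namespace and name
```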

- Create the topics you want to use on both DCs if `auto.create.topics.enable` is `false`.
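When auto-creation is disabled, the topics can be created on both clusters with the Confluent CLI. A sketch, assuming the CLI is installed and logged in (`confluent login`) and that the `.env` file from the Cluster Linking step has been sourced; the function name is hypothetical and the partition count is only an illustrative default.

```bash
# Create the proxy and no-proxy topics on both DCs with the Confluent CLI.
# Assumptions: `confluent login` has been run and ccloud-resources/.env is sourced,
# so ENV, TOPIC, NP_TOPIC, CCLOUD_NORTH_CLUSTERID and CCLOUD_WEST_CLUSTERID are set.
# The partition count (6 unless given) is only an illustrative value.
create_topics_on_both_dcs() {
  local partitions="${1:-6}"
  local cluster topic
  for cluster in "${CCLOUD_NORTH_CLUSTERID}" "${CCLOUD_WEST_CLUSTERID}"; do
    for topic in "${TOPIC}" "${NP_TOPIC}"; do
      confluent kafka topic create "${topic}" \
        --cluster "${cluster}" \
        --environment "${ENV}" \
        --partitions "${partitions}" || return 1
    done
  done
}

# Usage:
#   source ccloud-resources/.env && create_topics_on_both_dcs
```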

- Cluster Linking: to set up the active-active DR pattern, you will need to:

- Create a `.env` file under the `ccloud-resources` folder. It will contain:

```bash
export ENV=                     # CCloud environment you're working on
export TOPIC=                   # Default topic where clients will produce/consume
export NP_TOPIC=                # Topic where no-proxy clients will produce/consume
export CCLOUD_NORTH_CLUSTERID=  # DC-1 cluster ID
export CCLOUD_NORTH_URL=        # DC-1 bootstrap server
export CCLOUD_NORTH_APIKEY=     # DC-1 API key
export CCLOUD_NORTH_PASSWD=     # DC-1 password
export CCLOUD_WEST_CLUSTERID=   # DC-2 cluster ID
export CCLOUD_WEST_URL=         # DC-2 bootstrap server
export CCLOUD_WEST_APIKEY=      # DC-2 API key
export CCLOUD_WEST_PASSWD=      # DC-2 password
```

- The following scripts use the `.env` file to configure the resource creation.
- All mirror topics will be prefixed with `<DC-Name>-`.
- Run `ccloud-resources/cluster-linking/cluster-linking-north-west.sh` to create the DC-1 to DC-2 cluster link.
- Run `ccloud-resources/cluster-linking/create-mirror-topic-north-west.sh` to create the mirror topic on the DC-2 cluster for proxy-based clients.
- Run `ccloud-resources/cluster-linking/create-mirror-noproxy-topic-north-west.sh` to create the mirror topic on the DC-2 cluster for non-proxy-based clients.
- Run `ccloud-resources/cluster-linking/cluster-linking-west-north.sh` to create the DC-2 to DC-1 cluster link.
- Run `ccloud-resources/cluster-linking/create-mirror-topic-west-north.sh` to create the mirror topic on the DC-1 cluster for proxy-based clients.
- Run `ccloud-resources/cluster-linking/create-mirror-noproxy-topic-west-north.sh` to create the mirror topic on the DC-1 cluster for non-proxy-based clients.
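The cluster-linking steps can be chained in a small helper. This is a sketch under a few assumptions: it is run from the repository root, the scripts read `ccloud-resources/.env` on their own (the helper only checks that every variable is set), and `bash` can run them directly. The function and array names are hypothetical, not part of the repository.

```bash
# Hypothetical helper: validate the .env variables, then run the six
# cluster-linking scripts in the documented order, stopping at the first failure.

REQUIRED_CCLOUD_VARS=(
  ENV TOPIC NP_TOPIC
  CCLOUD_NORTH_CLUSTERID CCLOUD_NORTH_URL CCLOUD_NORTH_APIKEY CCLOUD_NORTH_PASSWD
  CCLOUD_WEST_CLUSTERID CCLOUD_WEST_URL CCLOUD_WEST_APIKEY CCLOUD_WEST_PASSWD
)

CLUSTER_LINKING_SCRIPTS=(
  cluster-linking-north-west.sh               # DC-1 -> DC-2 link
  create-mirror-topic-north-west.sh           # mirror on DC-2, proxy clients
  create-mirror-noproxy-topic-north-west.sh   # mirror on DC-2, no-proxy clients
  cluster-linking-west-north.sh               # DC-2 -> DC-1 link
  create-mirror-topic-west-north.sh           # mirror on DC-1, proxy clients
  create-mirror-noproxy-topic-west-north.sh   # mirror on DC-1, no-proxy clients
)

# Print each required variable that is unset or empty; return 1 if any is missing.
check_ccloud_env() {
  local name missing=0
  for name in "${REQUIRED_CCLOUD_VARS[@]}"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [ -z "${!name:-}" ]; then
      echo "missing: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Check the environment, then run every script from the given directory in order.
setup_active_active_links() {
  local dir="${1:-ccloud-resources/cluster-linking}"
  local script
  check_ccloud_env || return 1
  for script in "${CLUSTER_LINKING_SCRIPTS[@]}"; do
    echo ">> ${script}"
    bash "${dir}/${script}" || { echo "failed: ${script}" >&2; return 1; }
  done
}

# Usage, from the repository root:
#   source ccloud-resources/.env && setup_active_active_links
```

Stopping at the first failure matters here because each mirror topic depends on the cluster link created just before it.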


- Java client (producer/consumer) images in an available registry (you can use the resource definitions provided under the `k8s-resources` folder).

- ConfigMaps/Secrets need to be annotated to make Reloader aware of config changes: `reloader.stakater.com/match: "true"` under `metadata.annotations`.

- The client application Deployment is annotated with the ConfigMaps whose changes it should actively listen to: `configmap.reloader.stakater.com/reload: "java-cloud-producer-config,kafka-proxy-config"` under `metadata.annotations`.
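If the ConfigMaps and Deployments are already running, the same annotations can be added with `kubectl annotate` instead of editing the manifests (adding them to the manifests remains the durable option). A sketch with a hypothetical function name; the Deployment name `java-cloud-producer` in the usage line is also hypothetical, so check yours with `kubectl get deployments -n clients`.

```bash
# Add the Reloader annotations listed above to existing resources.
# $3 is a comma-separated list of ConfigMap names.
annotate_for_reloader() {
  local namespace="$1" deployment="$2" configmaps="$3"
  local cm
  local -a cm_list
  IFS=',' read -r -a cm_list <<< "${configmaps}"
  # Annotation this guide asks for on each watched ConfigMap.
  for cm in "${cm_list[@]}"; do
    kubectl annotate configmap "${cm}" --namespace "${namespace}" \
      --overwrite reloader.stakater.com/match="true" || return 1
  done
  # ConfigMaps whose changes should trigger a rolling update of the Deployment.
  kubectl annotate deployment "${deployment}" --namespace "${namespace}" \
    --overwrite configmap.reloader.stakater.com/reload="${configmaps}"
}

# Usage (hypothetical Deployment name):
#   annotate_for_reloader clients java-cloud-producer "java-cloud-producer-config,kafka-proxy-config"
```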

- Java Client with Proxy

- Resources are intended to be created in the `clients` namespace.
- Clients are deployed with a `grepplabs/kafka-proxy` container as a sidecar, which acts as a layer 7, Kafka-protocol-aware proxy.

- Create 2 ConfigMaps using the file `k8s-resources/proxy/kafka-proxy-configmap.yaml` as a template. For each ConfigMap, add the details of the DC cluster and its API key/secret. This is the ConfigMap the producer refers to when it starts:

kubectl apply -f k8s-resources/proxy/kafka-proxy-configmap.yaml

- Producer using a Proxy

- Configure how the producer calls the proxy (as a sidecar):

kubectl apply -f k8s-resources/proxy/java-cloud-producer-configmap.yaml

- Run the producer:

kubectl apply -f k8s-resources/proxy/java-cloud-producer.yaml

- Send messages using the producer:

Get the EXTERNAL-IP where the producer exposes its API:

kubectl get svc -n clients

and send the message using this command:

curl -X POST http://<EXTERNAL-IP>:8080/chuck-says
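The two steps above can be wrapped in a small helper to send several messages at once. A sketch, assuming the producer Service is of type `LoadBalancer` and reports an IP (as is typical on AKS; some providers report a hostname instead). The Service name is not given in this guide, so `java-cloud-producer` in the usage line is hypothetical, as are the function names.

```bash
# Read the external IP of a LoadBalancer Service.
producer_ip() {
  local namespace="$1" service="$2"
  kubectl get svc "${service}" --namespace "${namespace}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# POST to the producer's /chuck-says endpoint $2 times (10 by default).
send_messages() {
  local ip="$1" count="${2:-10}" i
  for ((i = 1; i <= count; i++)); do
    curl -fsS -X POST "http://${ip}:8080/chuck-says" || return 1
  done
}

# Usage (hypothetical Service name):
#   send_messages "$(producer_ip clients java-cloud-producer)" 5
```

For the no-proxy producer, pass `noproxy-clients` as the namespace.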

- Consumer using a Proxy

- Apply consumer config:

kubectl apply -f k8s-resources/proxy/java-cloud-consumer-configmap.yaml

- Apply consumer resources:

kubectl apply -f k8s-resources/proxy/java-cloud-consumer.yaml

- The consumer subscribes to a group of topics selected by a pattern that covers the topology described in the active-active pattern.

- When a disaster happens, we change `k8s-resources/proxy/kafka-proxy-configmap.yaml` to the values of the DC-2 cluster and apply it.
- Reloader triggers a rolling update on any Deployment annotated to listen for changes to this ConfigMap.
- As the failback procedure, we just need to restore the connection data in `k8s-resources/proxy/kafka-proxy-configmap.yaml` to the DC-1 values; after a rolling update, the clients connect to the original cluster again.
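Both failover and failback come down to applying the edited ConfigMap and waiting for the rolling updates that Reloader triggers. A sketch, assuming the edited file is applied with `kubectl` (rather than through the GitHub Actions workflow); the function name and the Deployment names in the usage line are hypothetical.

```bash
# Apply an edited ConfigMap (DC-2 values for failover, DC-1 values for
# failback) and wait for the rolling updates Reloader triggers.
switch_datacenter() {
  local configmap_file="$1" namespace="$2"
  shift 2
  local deployment
  kubectl apply -f "${configmap_file}" --namespace "${namespace}" || return 1
  # Give Reloader a moment to notice the change and start the rollout;
  # otherwise rollout status may report the previous, already finished rollout.
  sleep "${RELOADER_GRACE_SECONDS:-10}"
  for deployment in "$@"; do
    kubectl rollout status "deployment/${deployment}" \
      --namespace "${namespace}" --timeout=300s || return 1
  done
}

# Usage (failover, after editing the file with DC-2 values):
#   switch_datacenter k8s-resources/proxy/kafka-proxy-configmap.yaml clients \
#     java-cloud-producer java-cloud-consumer
```

The same helper covers the no-proxy clients by passing `k8s-resources/no-proxy/java-cloud-producer-noproxy-configmap.yaml` and the `noproxy-clients` namespace. The fixed delay is a simple heuristic rather than a guarantee that the rollout has started.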


- Java Client without Proxy

- Resources are intended to be created in the `noproxy-clients` namespace.

- Producer without Proxy

- Create a ConfigMap using the file `k8s-resources/no-proxy/java-cloud-producer-noproxy-configmap.yaml` as a template. Add the details of the DC cluster and its API key/secret. This is the ConfigMap the producer refers to when it starts:

kubectl apply -f k8s-resources/no-proxy/java-cloud-producer-noproxy-configmap.yaml

- Run the producer, which calls the CCloud cluster directly:

```bash
kubectl apply -f k8s-resources/no-proxy/java-cloud-producer-noproxy.yaml
```

- Send messages using the producer:

Get the EXTERNAL-IP where the producer exposes its API:

```bash
kubectl get svc -n noproxy-clients
```

and send a message with:

```bash
curl -X POST http://<EXTERNAL-IP>:8080/chuck-says
```

- When a disaster happens, we change `k8s-resources/no-proxy/java-cloud-producer-noproxy-configmap.yaml` to the values of the DC-2 cluster and apply it.
- Reloader triggers a rolling update on any Deployment annotated to listen for changes to this ConfigMap.
- As the failback procedure, we just need to restore the connection data in `k8s-resources/no-proxy/java-cloud-producer-noproxy-configmap.yaml` to the DC-1 values; after a rolling update, the clients connect to the original cluster again.

- Consumer without Proxy

- Create a ConfigMap using `k8s-resources/no-proxy/java-cloud-consumer-noproxy-configmap.yaml` as a template; it will contain the cluster information for the consumer:

kubectl apply -f k8s-resources/no-proxy/java-cloud-consumer-noproxy-configmap.yaml

- Apply the consumer resources:

kubectl apply -f k8s-resources/no-proxy/java-cloud-consumer-noproxy.yaml

- The consumer client is configured to consume from a pattern of topics that matches the active-active pattern naming defined above.
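To illustrate the naming the consumer pattern relies on, the check below accepts a base topic and any mirror of it prefixed with `<DC-Name>-`. It is only a sketch: it assumes DC names are alphanumeric (for example `north` or `west`, hypothetical here), the function name is hypothetical, and the actual regex used by the consumer lives in its ConfigMap and may differ.

```bash
# Succeed if $1 is the base topic $2 itself or a mirror of it named "<DC-Name>-<base>".
# Assumption: DC names contain only letters and digits.
matches_active_active() {
  local topic="$1" base="$2"
  local pattern="^([[:alnum:]]+-)?${base}\$"
  [[ "${topic}" =~ ${pattern} ]]
}

# Usage:
#   matches_active_active north-chuck chuck && echo "consumed"
```

Subscribing by pattern means that the same consumer configuration reads both the local topic and the mirror of the other DC, which is what lets it keep working after a failover.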


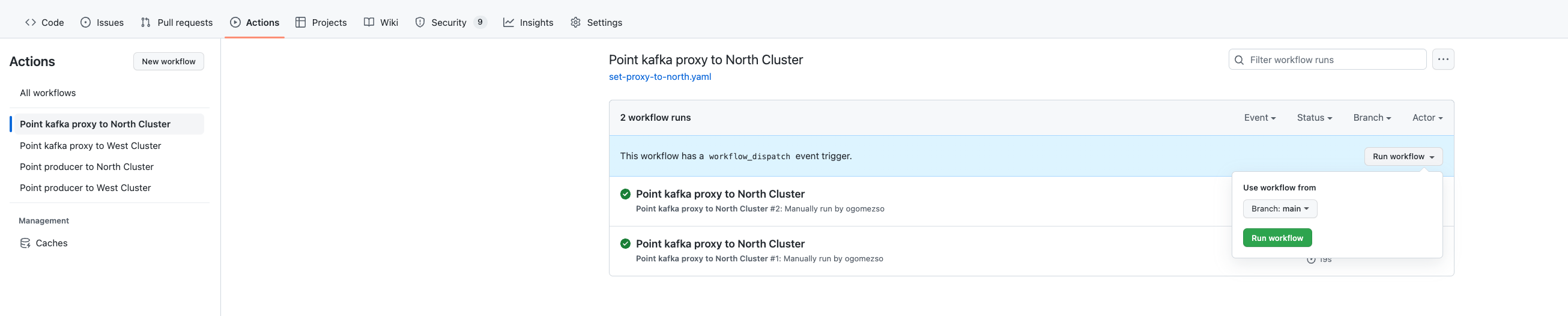

Changing the relevant configuration (kafka-proxy or client config) in the corresponding ConfigMap is fully automated with GitHub Actions and Azure AKS; you can find the action definitions under the `.github/workflows` folder.

First, get your `AZURE_CREDENTIALS` by running:

az ad sp create-for-rbac --name "chuck" --role contributor --scopes /subscriptions/<azure-subscription-id>/resourceGroups/<resource-group> --sdk-auth

and set the JSON response as a repository secret on GitHub.
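The two manual steps above can be combined so the credentials never touch the disk. A sketch, assuming the Azure CLI (`az`) and GitHub CLI (`gh`) are installed and logged in, and that `gh` runs inside a clone of this repository so it targets the right repo; the function name is hypothetical.

```bash
# Create the service principal and store its JSON output as the
# AZURE_CREDENTIALS repository secret.
store_azure_credentials() {
  local subscription_id="$1" resource_group="$2"
  local credentials
  # Same command as above; its JSON output becomes the secret value.
  credentials="$(az ad sp create-for-rbac --name "chuck" --role contributor \
    --scopes "/subscriptions/${subscription_id}/resourceGroups/${resource_group}" \
    --sdk-auth)" || return 1
  # gh reads the secret value from stdin when --body is not given.
  printf '%s' "${credentials}" | gh secret set AZURE_CREDENTIALS
}

# Usage:
#   store_azure_credentials <azure-subscription-id> <resource-group>
```

Capturing the output first, instead of piping `az` straight into `gh`, prevents an empty secret from being stored when `az` fails.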

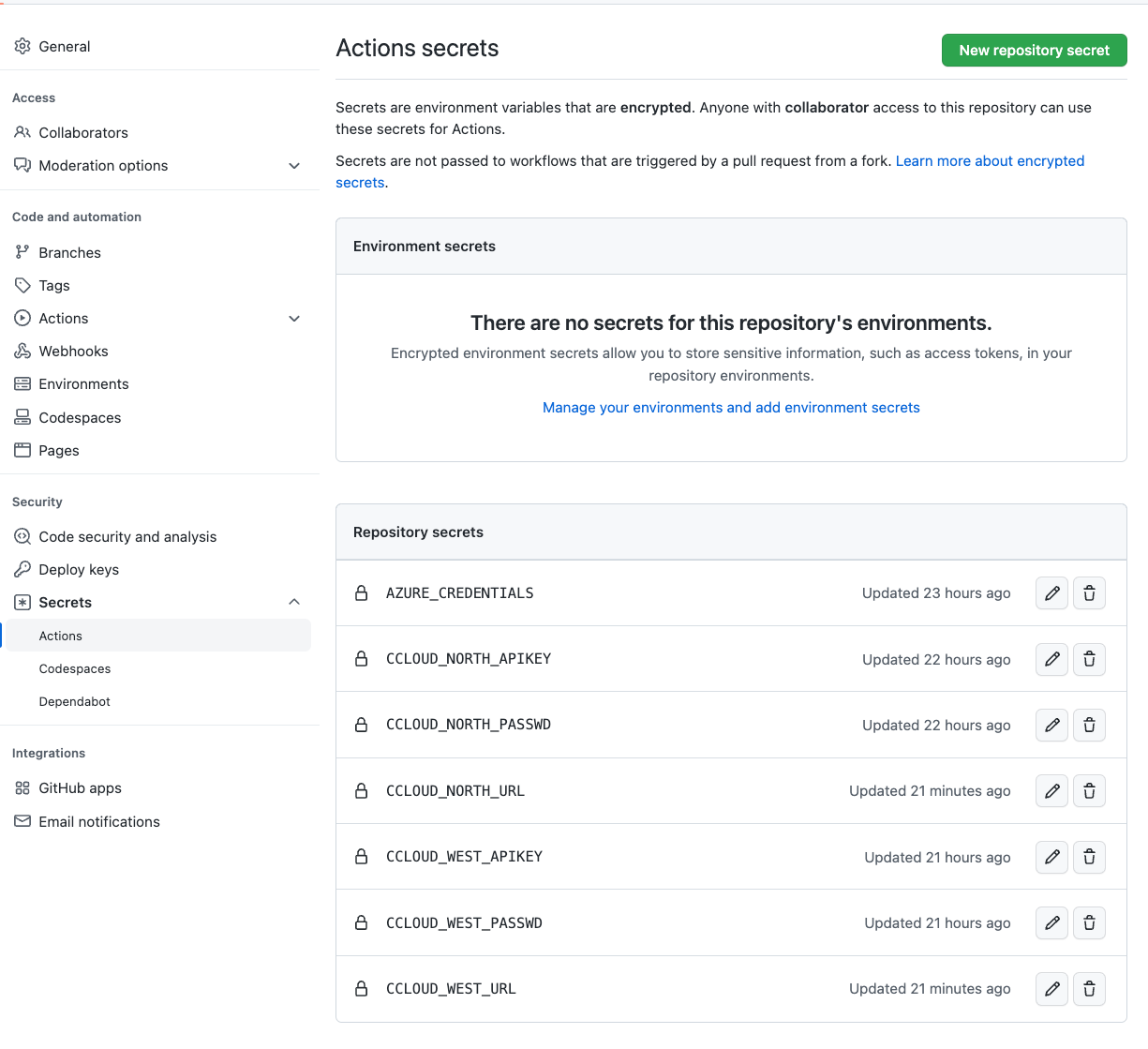

You will also need to set up secrets for the CCloud resource configuration.

You can find the required secrets in the image below.

Then go to the Actions tab of your repository and choose the action and the branch from which you want to run it.

For proxy clients, a change to the proxy configuration is enough to update both the consumer and the producer and to trigger a rolling update.

For no-proxy clients, the configuration is changed directly on each client, so you need to apply it to the producer and the consumer separately.
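The same run can be started from the command line with the GitHub CLI, assuming the workflows declare a `workflow_dispatch` trigger (which is what makes them runnable from the Actions tab). The workflow file names are not listed in this guide, so pass one from `.github/workflows`; the function name and the `main` default branch are assumptions.

```bash
# Dispatch a workflow from .github/workflows on a given branch.
run_workflow() {
  local workflow_file="$1" branch="${2:-main}"
  gh workflow run "${workflow_file}" --ref "${branch}"
}

# Usage:
#   run_workflow <workflow-file.yml> <branch>
```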

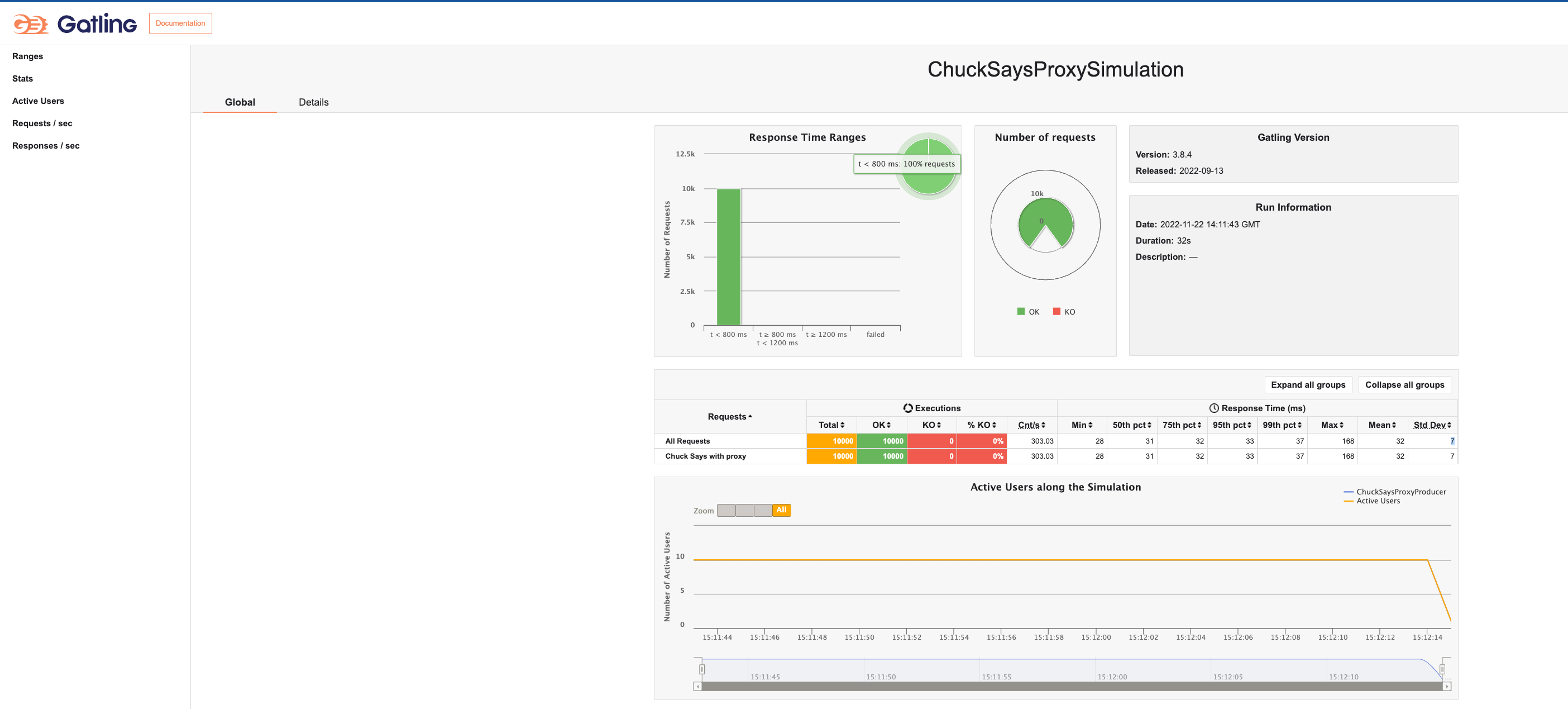

A basic performance test suite is implemented using Gatling.

The test runs 1000 requests for 10 concurrent users against both the proxy and no-proxy producers.

To run it, set the public endpoints of your proxy and no-proxy clients in the `perftest/src/test/resources/application.conf` file, go to the `perftest` folder and run:

mvn gatling:test

After that, you can find the report folders, each with an `index.html` file, under `perftest/target/gatling`.
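Each run adds a new report folder, so after several runs it helps to locate the newest one. A sketch with a hypothetical helper name that relies on directory modification times:

```bash
# Print the index.html of the most recently modified report folder.
latest_gatling_report() {
  local reports_dir="${1:-perftest/target/gatling}"
  local newest
  # ls -td sorts directories by modification time, newest first.
  newest="$(ls -td "${reports_dir}"/*/ 2>/dev/null | head -n 1)"
  [ -n "${newest}" ] && [ -f "${newest}index.html" ] || return 1
  echo "${newest}index.html"
}

# Usage, from the repository root:
#   (cd perftest && mvn gatling:test) && latest_gatling_report
```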