This project aims to demonstrate the key differences between Apache Flink® DataStream API & Apache Kafka® Streams Processor API.

This project is licensed under the Apache 2.0 license.

Please note that, because the Kryo library embedded in Apache Flink® (version 2.24.0) lacks ZonedDateTime serialization, we reused an existing serializer implementation from the official project. You'll find the detailed license requirements in the project root. This serializer will be removed as soon as Apache Flink® is updated (FLIP-317).

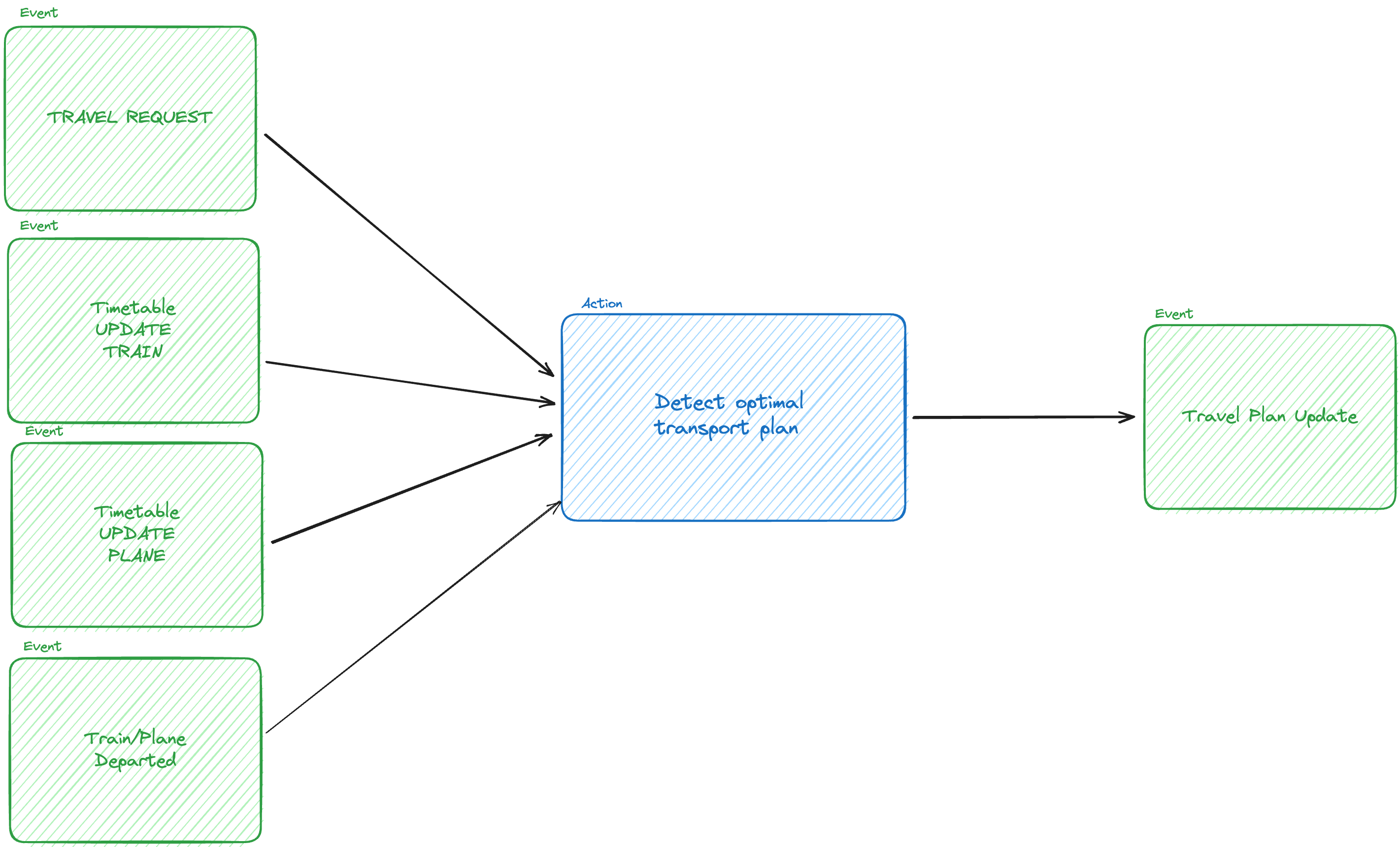

Starting from a dummy transport optimization issue, we aim to compare the approach in design & implementation between Apache Kafka® Streams & Apache Flink® DataStream.

Individuals want to know when the next most efficient trip is planned for a specific connection (e.g. DFW (Dallas) to ATL (Atlanta)).

Four existing events are available to consume (we will use an Apache Flink® Datagen job to generate them) :

- CustomerTravelRequest : a customer requests a trip on a connection

- PlaneTimeTableUpdate : a new plane has been scheduled or an existing one has been moved

- TrainTimeTableUpdate : a new train has been scheduled or an existing one has been moved

- Departure : a train or plane departed

We want to manage all those scenarios :

- The customer asks for an available connection : they receive an immediate alert

- The customer asks for an unavailable connection : they will receive a notification as soon as a transport solution is available

- An existing customer request is impacted by new transport availability or timetable update : the customer will receive the new optimal transportation information

- An existing customer request is impacted by a transport departure
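The four scenarios above can be sketched in plain Java, independently of either framework. This is only an illustration under stated assumptions: every class, field and method name is hypothetical, "optimal" is taken to mean the earliest arrival, each customer has a single request, and a departure is assumed to trigger a new alert with the next best option (the scenario list does not spell out that outcome). The in-memory maps stand in for what would be keyed state in Apache Flink® or state stores in Apache Kafka® Streams.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Plain-Java illustration only: every name here is hypothetical, not the project's API.
final class TravelOptimizerSketch {

    static final class Transport {
        final String id;
        final Instant arrival;

        Transport(String id, Instant arrival) {
            this.id = id;
            this.arrival = arrival;
        }
    }

    // connection -> known transports, connection -> waiting customers
    private final Map<String, List<Transport>> timetable = new HashMap<>();
    private final Map<String, List<String>> requests = new HashMap<>();
    // customer -> last alert sent, used to avoid repeating an unchanged alert
    private final Map<String, String> lastAlert = new HashMap<>();
    final List<String> alerts = new ArrayList<>();

    // Scenarios 1 and 2: alert immediately if a transport exists, otherwise just remember the request.
    void onCustomerTravelRequest(String customerId, String connection) {
        requests.computeIfAbsent(connection, k -> new ArrayList<>()).add(customerId);
        best(connection).ifPresent(t -> alert(customerId, t));
    }

    // Scenario 3: a new or moved transport may change the optimum for waiting customers.
    void onTimeTableUpdate(String connection, Transport update) {
        List<Transport> transports = timetable.computeIfAbsent(connection, k -> new ArrayList<>());
        transports.removeIf(t -> t.id.equals(update.id)); // a moved transport replaces its old entry
        transports.add(update);
        renotify(connection);
    }

    // Scenario 4 (assumed behavior): a departed transport is no longer an option.
    void onDeparture(String connection, String transportId) {
        timetable.getOrDefault(connection, new ArrayList<>())
                .removeIf(t -> t.id.equals(transportId));
        renotify(connection);
    }

    private void renotify(String connection) {
        Optional<Transport> best = best(connection);
        for (String customerId : requests.getOrDefault(connection, new ArrayList<>())) {
            best.ifPresent(t -> alert(customerId, t));
        }
    }

    private void alert(String customerId, Transport t) {
        String message = customerId + " -> " + t.id + " arriving " + t.arrival;
        // Only emit when the optimal transport (or its arrival time) actually changed.
        if (!message.equals(lastAlert.put(customerId, message))) {
            alerts.add(message);
        }
    }

    private Optional<Transport> best(String connection) {
        return timetable.getOrDefault(connection, new ArrayList<>()).stream()
                .min(Comparator.comparing((Transport t) -> t.arrival));
    }
}
```

Feeding a request first and a timetable update afterwards exercises the "unavailable connection" path: no alert is emitted until the update arrives.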

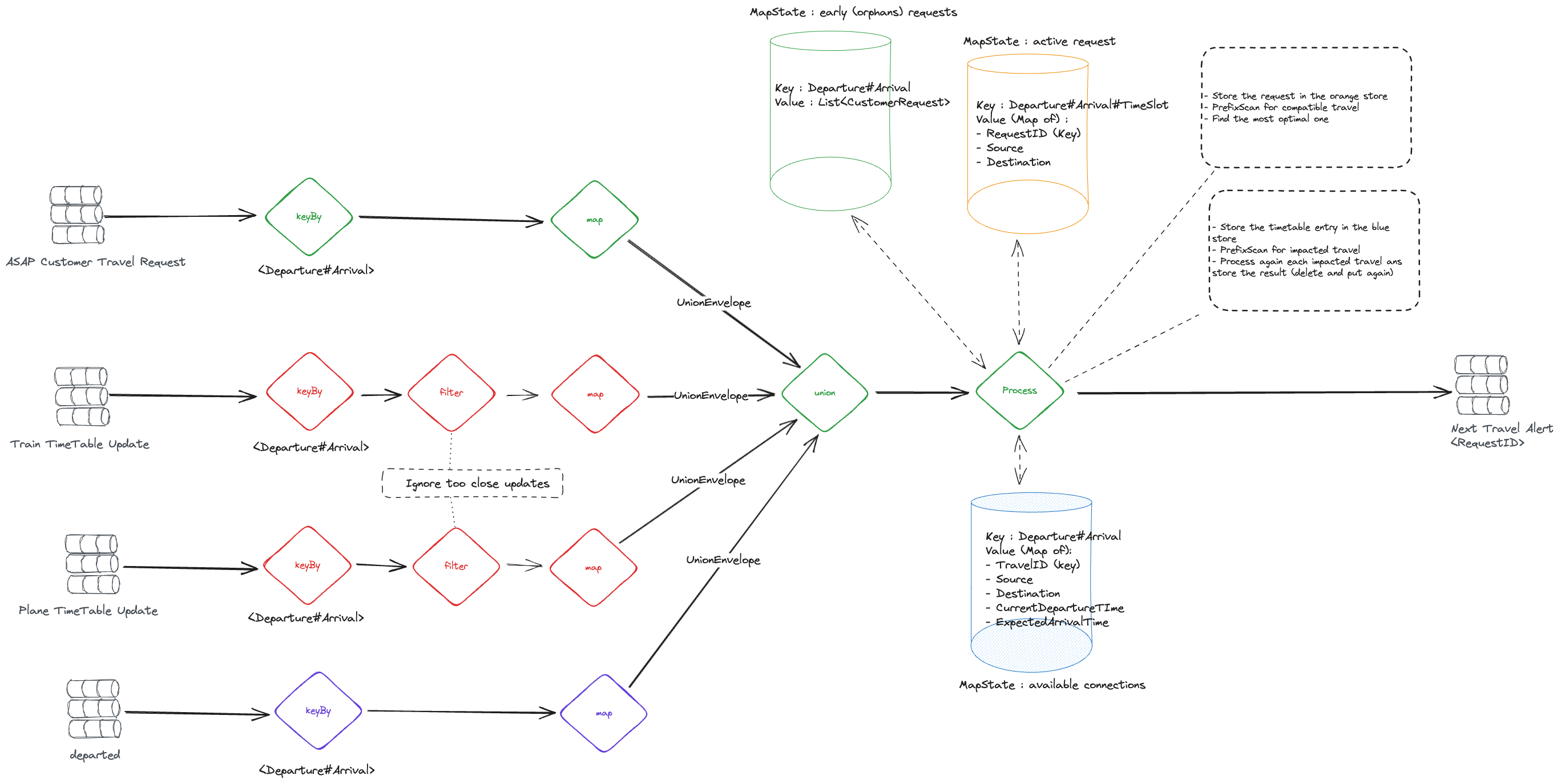

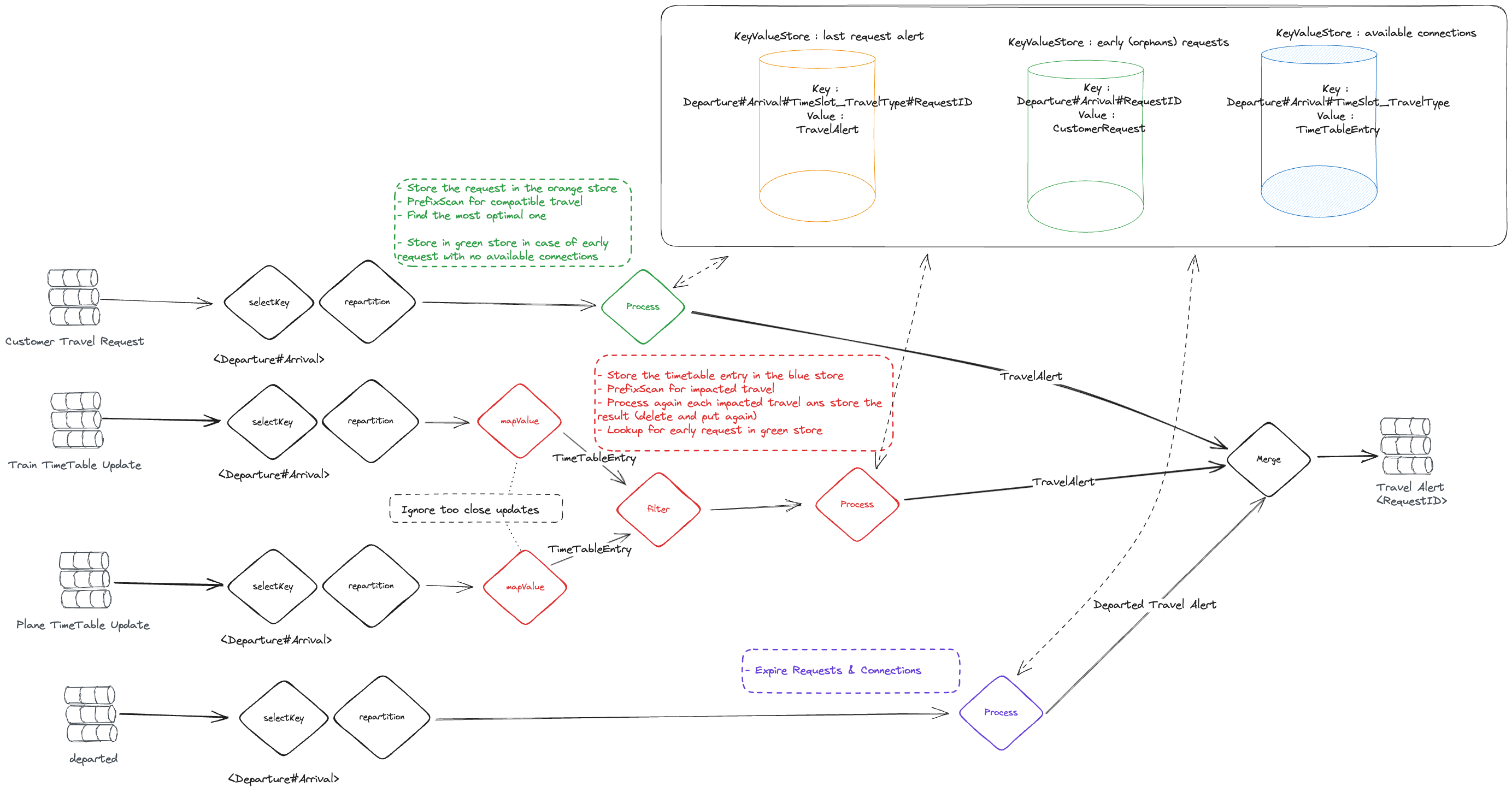

DISCLAIMER : We consciously chose to split Train & Plane timetable updates to challenge N-Ary reconciliation capabilities of both technologies. We are aware that all of this could have been simplified by merging those two events in one.

"Most efficient" is currently implemented as : the available connection that arrives the soonest.
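One way to keep this criterion easy to replace is to isolate it as a `Comparator`. The sketch below is an assumption about how that could look, not the project's code: `TimeTableEntry`, its fields, the `"DFW#ATL"` key format and `EfficiencySelector` are all hypothetical names.

```java
import java.time.ZonedDateTime;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical timetable entry; the field names are assumptions, not the project's model.
final class TimeTableEntry {
    final String travelId;
    final String connection;          // e.g. "DFW#ATL" (assumed key format)
    final ZonedDateTime arrivalTime;

    TimeTableEntry(String travelId, String connection, ZonedDateTime arrivalTime) {
        this.travelId = travelId;
        this.connection = connection;
        this.arrivalTime = arrivalTime;
    }
}

final class EfficiencySelector {

    // Current rule: the earliest arrival wins. Comparing instants makes the
    // ordering independent of each entry's time zone.
    static final Comparator<TimeTableEntry> EARLIEST_ARRIVAL =
            Comparator.comparing((TimeTableEntry e) -> e.arrivalTime.toInstant());

    // The criterion is a parameter, so a richer rule can be swapped in later.
    static Optional<TimeTableEntry> mostEfficient(List<TimeTableEntry> candidates,
                                                  String connection,
                                                  Comparator<TimeTableEntry> criterion) {
        return candidates.stream()
                .filter(e -> e.connection.equals(connection))
                .min(criterion);
    }
}
```

A call such as `EfficiencySelector.mostEfficient(entries, "DFW#ATL", EfficiencySelector.EARLIEST_ARRIVAL)` returns an empty `Optional` when no transport serves the connection, which corresponds to the "unavailable connection" scenario.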

Apache Flink® specifically required :

- some dummy List & Map models to achieve "Range Lookup" in the state stores

- an Envelope (Container) model to merge different object types into one in order to achieve N-Ary processing
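To illustrate the envelope idea: streams that are unioned in the DataStream API must share a single element type, so each event can be wrapped in a common container before merging. The following is a minimal, framework-free sketch; `Envelope`, `Kind`, `connection` and `EnvelopeDispatcher` are hypothetical names chosen for the example and may differ from the project's actual model.

```java
// Hypothetical wrapper: names and fields are illustrative, not the project's actual model.
final class Envelope {

    enum Kind {
        CUSTOMER_TRAVEL_REQUEST,
        PLANE_TIME_TABLE_UPDATE,
        TRAIN_TIME_TABLE_UPDATE,
        DEPARTURE
    }

    final Kind kind;
    final String connection;   // shared key across all event types, e.g. "DFW#ATL" (assumed)
    final Object payload;      // the original, type-specific event

    Envelope(Kind kind, String connection, Object payload) {
        this.kind = kind;
        this.connection = connection;
        this.payload = payload;
    }
}

final class EnvelopeDispatcher {

    // A single processing function can receive every event type and branch on the kind.
    static String describe(Envelope e) {
        switch (e.kind) {
            case CUSTOMER_TRAVEL_REQUEST:
                return "request on " + e.connection;
            case PLANE_TIME_TABLE_UPDATE:
            case TRAIN_TIME_TABLE_UPDATE:
                return "timetable update on " + e.connection;
            case DEPARTURE:
                return "departure on " + e.connection;
            default:
                throw new IllegalStateException("Unknown kind: " + e.kind);
        }
    }
}
```

With such a wrapper, the four sources could each be mapped to `Envelope`, unioned, and keyed by `connection`, so that one stateful function sees all events for a given connection.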

We only added a data model for time table updates:

- Java 11 (not less not more)

- Maven 3.X

mvn clean package

- Java 11 (not less not more)

- An Apache Flink® cluster

- consumer.properties & producer.properties files in your resource folder (here's a template)

flink run -c org.lboutros.traveloptimizer.flink.datagen.DataGeneratorJob target/flink-1.0-SNAPSHOT.jar

flink run -c org.lboutros.traveloptimizer.flink.jobs.TravelOptimizerJob target/flink-1.0-SNAPSHOT.jar

- Implement a more complex efficiency logic to validate the business logic scalability of our designs