Our goal is to help create capacity recommendations for ACM and for ACM Observability only. Capacity planning needs vary greatly, depending on the scenario, as explained below:

| # | Setup | Action | Red Hat recommends |
|---|---|---|---|
| 1. | Existing (brown-field) setup: an ACM hub is running with real clusters being managed under it | Use ACM Inspector to extract data out of the system. | The ACM team can make a recommendation based on this output, the number of clusters the hub will have to manage, etc. This is a fairly quick manual process for now; a tool will be published shortly. |
| 2. | No ACM running (green-field), but the clusters that would be managed exist | Use Metrics Extractor to extract the metrics out of the system. Use Search resource extractor to extract the search objects that would be collected. | The ACM team can size Observability, Search, and ACM management needs based on this data, the number of clusters it will manage, etc. Observability sizing can be done using this data and this notebook. We are working on a similar tool for Search. |
| 3. | No ACM running (green-field), and the clusters that would be managed do not exist | Proceed to read below. | This is the most complex case of all. |

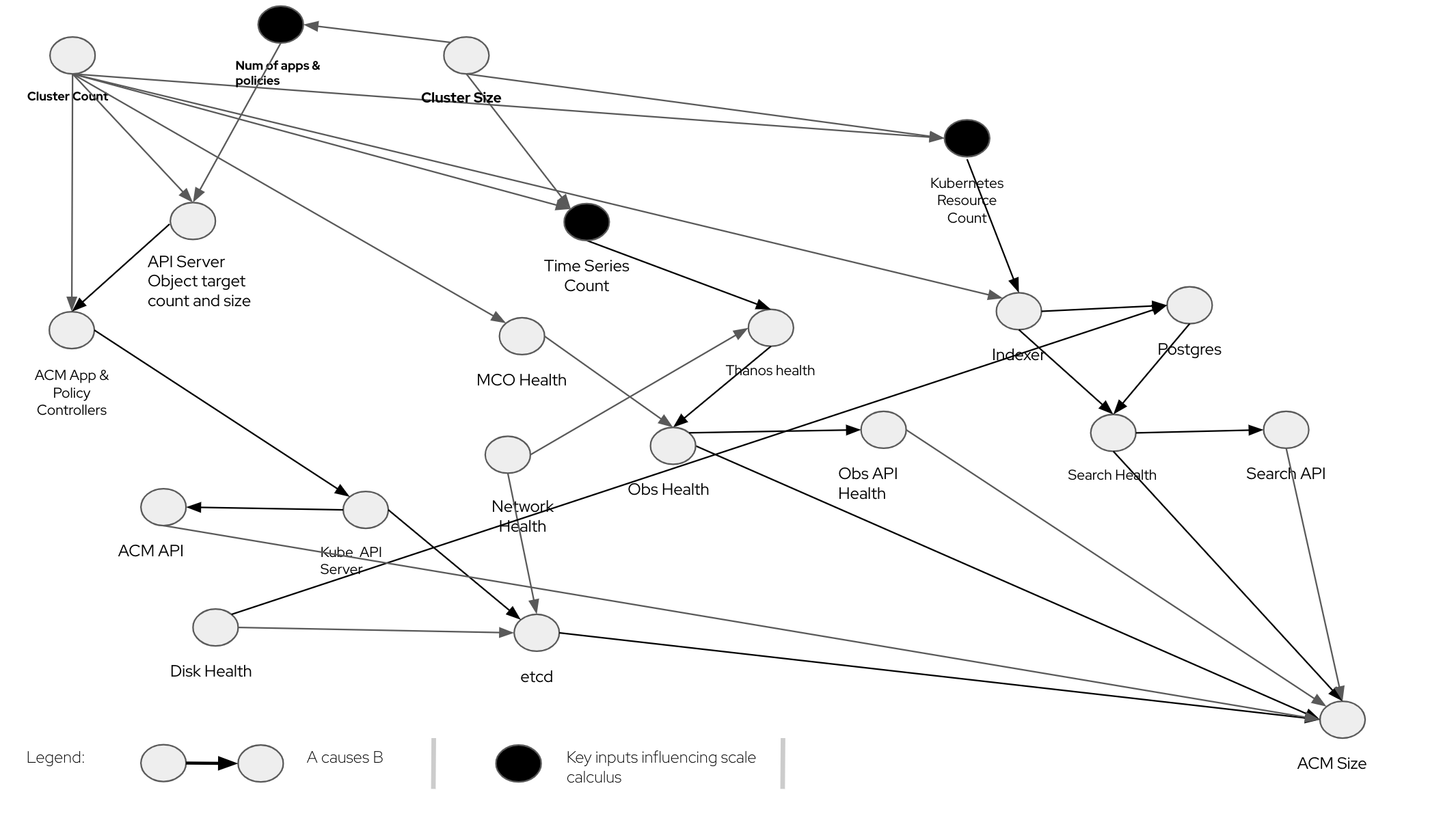

The figure above is a causal diagram of ACM from the standpoint of scalability. It is not a complete ACM causal diagram; that would be much more complex. Let us take a moment to review the figure and get the key idea behind it.

The big black dots are the key drivers of ACM sizing, along with the number of clusters it is managing. In other words, if we know the:

- Num of apps & policies (i.e. how many applications and policies are defined on the cluster), which depends on the cluster size. For the sake of brevity this node represents both applications and policies, so it works whether there are only applications, only policies, or both.

- Time series count (depends on how large the cluster is and what kind of work is running on it)

- Resource count (depends on how large the cluster is and what kind of work is running on it)

then the ACM scaling model is conditionally independent of the real cluster size. Of course, the number of clusters is still important. Given this model, when we do real performance measurement we can simulate or create a number of clusters of any size (they could be kind clusters or Single Node OpenShift clusters) rather than clusters of specific sizes. The former is much simpler than the latter.

So, to trace one line of the flow end to end:

Num of apps & policies drives the API Server Object target count and size, which in turn drives load on the ACM App & Policy Controllers. The ACM App & Policy Controllers are also influenced by the Cluster Count, i.e. the number of clusters to which the applications and policies have to be replicated. These controllers in turn create resources on the Kube API Server, and those resources are stored in etcd. Therefore etcd health is one of the key drivers of ACM Health, and etcd health in turn depends on Network health and Disk health.
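The flow traced above can be written down as a small directed graph, which makes it easy to ask "what ultimately drives this node?". The following is a minimal sketch only: it encodes just the one line of flow described in the text, not the full diagram, and the node identifiers and the `upstream_drivers` helper are hypothetical names chosen for illustration.

```python
from collections import deque

# Directed edges "driver -> what it drives", taken only from the flow traced
# in the text above. Node names are illustrative, not ACM identifiers.
DRIVES = {
    "num_apps_and_policies": ["api_server_object_count_and_size"],
    "api_server_object_count_and_size": ["app_and_policy_controllers"],
    "cluster_count": ["app_and_policy_controllers"],
    "app_and_policy_controllers": ["kube_api_server_resources"],
    "kube_api_server_resources": ["etcd_health"],
    "network_health": ["etcd_health"],
    "disk_health": ["etcd_health"],
    "etcd_health": ["acm_health"],
}


def upstream_drivers(target):
    """Return every node that directly or indirectly drives `target`."""
    # Invert the edges so we can walk from an effect back to its causes.
    driven_by = {}
    for src, dsts in DRIVES.items():
        for dst in dsts:
            driven_by.setdefault(dst, []).append(src)

    seen = set()
    queue = deque([target])
    while queue:
        node = queue.popleft()
        for parent in driven_by.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


if __name__ == "__main__":
    for name in sorted(upstream_drivers("acm_health")):
        print(name)
```

Note that the real cluster size does not appear as a node here: in this sketch, as in the text, it only matters through the drivers it influences.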

This helps us to understand the data needed to determine the ACM Hub Cluster size.

We need the following information to calculate the resources required to run Observability on top of ACM.

- number_of_managed_clusters: say 100

- number_of_master_node_in_hub_cluster: say 3

- number_of_addn_worker_nodes: say 47. These are assumed to be in addition to the base number of 3 worker nodes.

- number_of_vcore_per_node: say 16

- number_of_application_pods_per_node: say 20. This counts application pods only, not the ACM/OpenShift workload.

- number_of_namespaces: say 20. These are namespaces created by the customer, in addition to those created by the ACM/OpenShift workload.

- number_of_container_per_pod: 1. Do not change this as of now.

- number_of_samples_per_hour: 12. This means metrics will be sent every 5 minutes.

- number_of_hours_pv_retention_hrs: 24. This means that data will be retained in the PV for 24 hours, which is standard. Do not reduce this number for production systems.

- number_of_days_for_storage: 365. This is the number of days of retention in the object store.

- Use this pythonNotebook.

- Substitute the values in the notebook, under the section "Critical Input Parameters to Size", with the values described above.

- Then run the notebook. It will produce the recommendations.

- This pythonNotebook is deprecated.
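Before entering the inputs into the notebook, it can help to collect them in one place and sanity-check the quantities that follow from simple arithmetic. The sketch below is not the notebook's code: the `ObservabilityInputs` class and its property names are hypothetical, the defaults are the "say" values from the list above, and the only derived values are ones implied directly by the text (the send interval, the worker count above the base of 3, and the number of samples retained per time series).

```python
from dataclasses import dataclass

# Base worker count, from the "base number of 3" note in the input list.
BASE_WORKER_NODES = 3


@dataclass(frozen=True)
class ObservabilityInputs:
    """Input parameters for Observability sizing (example values from the text)."""
    number_of_managed_clusters: int = 100
    number_of_master_node_in_hub_cluster: int = 3
    number_of_addn_worker_nodes: int = 47
    number_of_vcore_per_node: int = 16
    number_of_application_pods_per_node: int = 20
    number_of_namespaces: int = 20
    number_of_container_per_pod: int = 1
    number_of_samples_per_hour: int = 12
    number_of_hours_pv_retention_hrs: int = 24
    number_of_days_for_storage: int = 365

    @property
    def send_interval_minutes(self) -> float:
        # 12 samples per hour means one sample every 5 minutes.
        return 60 / self.number_of_samples_per_hour

    @property
    def worker_nodes(self) -> int:
        # Additional workers are counted on top of the base 3 workers.
        return BASE_WORKER_NODES + self.number_of_addn_worker_nodes

    @property
    def samples_per_series_in_pv(self) -> int:
        # Samples kept per time series over the PV retention window.
        return self.number_of_samples_per_hour * self.number_of_hours_pv_retention_hrs

    @property
    def samples_per_series_in_object_store(self) -> int:
        # Samples kept per time series over the object store retention,
        # ignoring any downsampling or compaction (an assumption).
        return self.number_of_samples_per_hour * 24 * self.number_of_days_for_storage


inputs = ObservabilityInputs()
print(inputs.send_interval_minutes, inputs.worker_nodes)
print(inputs.samples_per_series_in_pv, inputs.samples_per_series_in_object_store)
```

Overriding a single value, for example `ObservabilityInputs(number_of_addn_worker_nodes=97)`, keeps the remaining defaults and updates the derived quantities.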

This produces more accurate results than Scenario 1 above.

- Number of timeseries from Setup #2 above

- number_of_samples_per_hour: 12. This means metrics will be sent every 5 minutes.

- number_of_hours_pv_retention_hrs: 24. This means that data will be retained in the PV for 24 hours, which is standard. Do not reduce this number for production systems.

- number_of_days_for_storage: 365. This is the number of days of retention in the object store.

- Use this pythonNotebook.

- Substitute the values in the notebook, under the section "Critical Input Parameters to Size", with the values described above.

- Then run the notebook. It will produce the recommendations.

- This pythonNotebook is deprecated.

- First, we have to calculate how many time series we will need to persist. This is calculated in two steps.

- Calculated number of time series for the base 3M+3W deployment of a cluster (based on lab data)

- Calculated number of time series for the additional worker nodes

- Then from total number of time series, we infer

- Memory requirement (2 hours of this time series data is stored in memory)

- CPU requirement (this estimate is a little weak at the moment; we have not yet seen much dependency on CPU, so we have not focused on it)

- Disk needed for PVs (volume of data stored is dictated by settings in MultiCluster Observability CR)

- Storage needed for Object store (volume of data stored is dictated by settings in MultiCluster Observability CR)
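The two-step calculation and the inferences drawn from it can be sketched as follows. This is an illustration of the structure only, not the notebook's implementation. The per-cluster and per-worker time series figures come from lab data that is not reproduced here, so they are required arguments with no defaults, and the function names are hypothetical. Multiplying the per-cluster count by the number of managed clusters assumes all clusters look alike. The sketch stops at sample counts; converting samples to bytes of memory, PV, or object storage needs coefficients this document does not state.

```python
def total_time_series(base_series_per_cluster, series_per_addn_worker,
                      addn_workers, managed_clusters):
    """Step 1 + step 2: base 3M+3W series plus series from additional workers.

    The first two arguments must come from lab measurements; no values are
    assumed here. All managed clusters are assumed to be identical.
    """
    per_cluster = base_series_per_cluster + series_per_addn_worker * addn_workers
    return per_cluster * managed_clusters


def sample_counts(total_series, samples_per_hour=12, pv_retention_hours=24,
                  storage_days=365, in_memory_hours=2):
    """Number of samples held in each tier for a given time series count."""
    per_hour = total_series * samples_per_hour
    return {
        # 2 hours of time series data is stored in memory.
        "in_memory": per_hour * in_memory_hours,
        # PV retention as configured (24 hours in the example inputs).
        "pv": per_hour * pv_retention_hours,
        # Object store retention, ignoring downsampling or compaction.
        "object_store": per_hour * 24 * storage_days,
    }


if __name__ == "__main__":
    # Purely illustrative numbers, NOT lab data.
    series = total_time_series(base_series_per_cluster=1000,
                               series_per_addn_worker=100,
                               addn_workers=47,
                               managed_clusters=100)
    print(series, sample_counts(series))
```

When the time series count is already known from measured clusters (Setup #2), `total_time_series` can be skipped and the measured count passed straight to `sample_counts`.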

We need the following information to calculate the resources required to run ACM. Observability sizing is not included here.

- managed_clusters: say 500

- nos_of_worker_nodes_per_cluster: say 40

- nos_of_pods_per_cluster: say 5000

- nos_of_namespace: say 200

- nos_of_apps_per_cluster_distributed_by_hub: say 10. This implies that ACM application lifecycle is being used.

- nos_of_policies_per_cluster: say 30

- nos_of_acm_hub: say 10. We will assume the clusters to be distributed evenly across the hubs for sizing.
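With the clusters distributed evenly across the hubs, the per-hub load profile follows from simple arithmetic on these inputs. The sketch below is a rough illustration under stated assumptions: `AcmInputs` and `per_hub_load` are hypothetical names, the defaults are the "say" values above, and the results count (cluster, application) and (cluster, policy) pairs rather than actual API server objects, since the number of objects created per application or policy is not given in this document.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AcmInputs:
    """Input parameters for ACM hub sizing (example values from the text)."""
    managed_clusters: int = 500
    nos_of_worker_nodes_per_cluster: int = 40
    nos_of_pods_per_cluster: int = 5000
    nos_of_namespace: int = 200
    nos_of_apps_per_cluster_distributed_by_hub: int = 10
    nos_of_policies_per_cluster: int = 30
    nos_of_acm_hub: int = 10


def per_hub_load(inputs: AcmInputs) -> dict:
    """Load profile of one hub, assuming an even spread of clusters."""
    # Round up so that no cluster is left unassigned when the split is uneven.
    clusters = math.ceil(inputs.managed_clusters / inputs.nos_of_acm_hub)
    return {
        "clusters_per_hub": clusters,
        # Each application distributed by the hub lands on each cluster.
        "app_placements": clusters * inputs.nos_of_apps_per_cluster_distributed_by_hub,
        # Each policy is replicated to each cluster.
        "policy_placements": clusters * inputs.nos_of_policies_per_cluster,
        # Total pods running across the clusters managed by this hub.
        "managed_pods": clusters * inputs.nos_of_pods_per_cluster,
    }


load = per_hub_load(AcmInputs())
print(load)
```

Re-running `per_hub_load` with a different `nos_of_acm_hub` shows how quickly the per-hub footprint changes as hubs are added or removed.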

Each managed cluster that has ACM applications and policies deployed to it creates corresponding objects on the Kubernetes API server of the ACM hub. As the number of managed clusters and their applications and policies grows, the load on the Kubernetes API server increases. There is a finite limit on how large this footprint on the API server can be; it is mainly governed by the size of the etcd database backing the API server.

- Check whether the specified load profile is in danger of crossing this limit. That would merit more than one ACM hub.

- Check whether the specified load profile merits a highly performant etcd backend, i.e. one backed by NVMe disks.

- If topology of Hub Server is 3M+3W, check for corresponding data samples to size/project.

- If topology of Hub Server is 3M, check for corresponding data samples to size/project.

This is manual as of now. Please reach out to the RHACM Engineering team for this.