Our goal is to take Prometheus-style metrics, stream them to Kafka, and apply streaming analytics in Spark. We are also looking at key cluster events such as cluster scaling, node memory pressure, and cluster upgrades (not to be confused with alerts, which already arrive through the metric stream). More generally, the goal is to build AI/ML/statistical models on streaming observability data. We may also extend this to data at rest, i.e. batch processing.

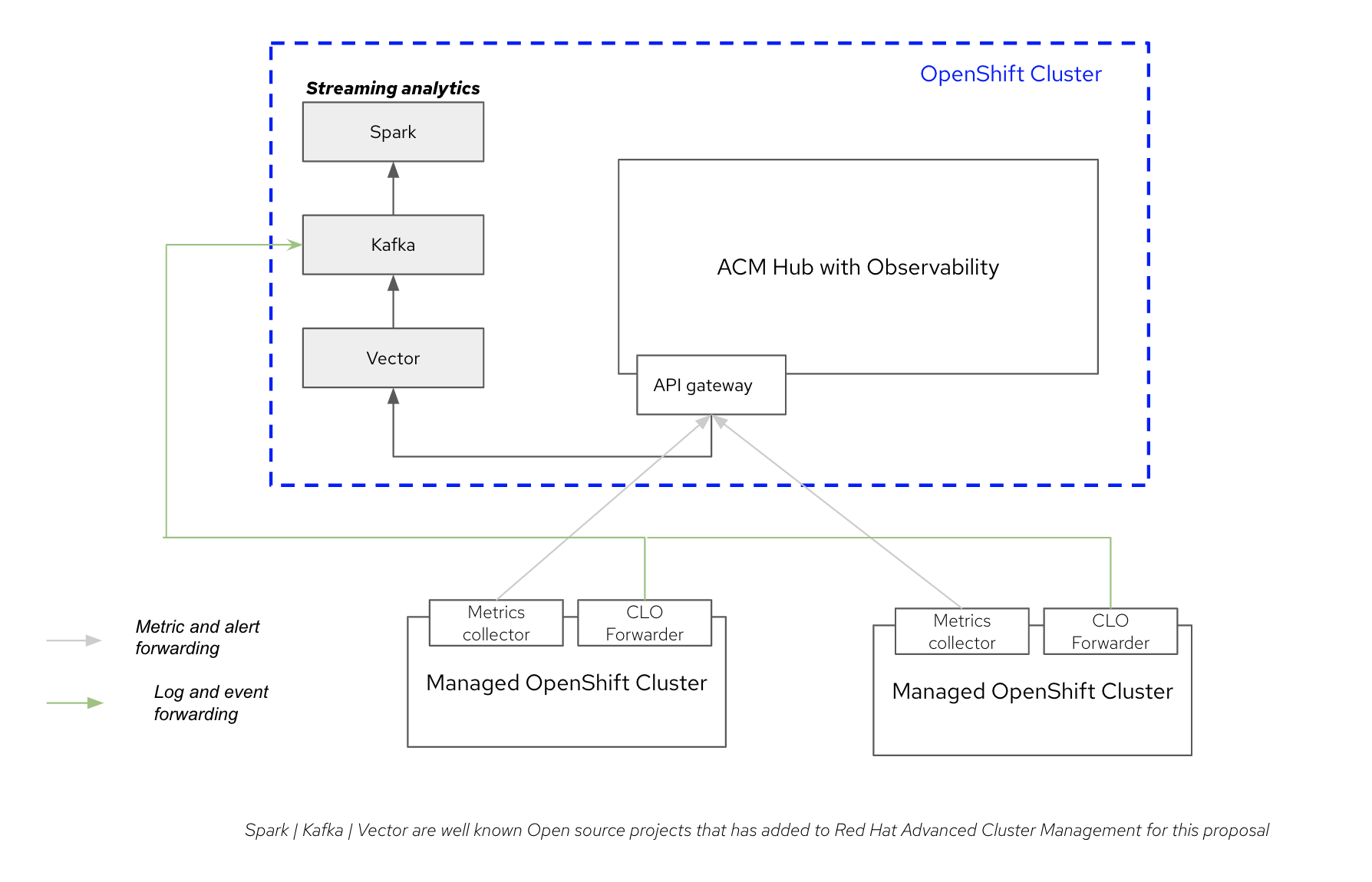

The data flow implemented by the code in this repository is shown below.

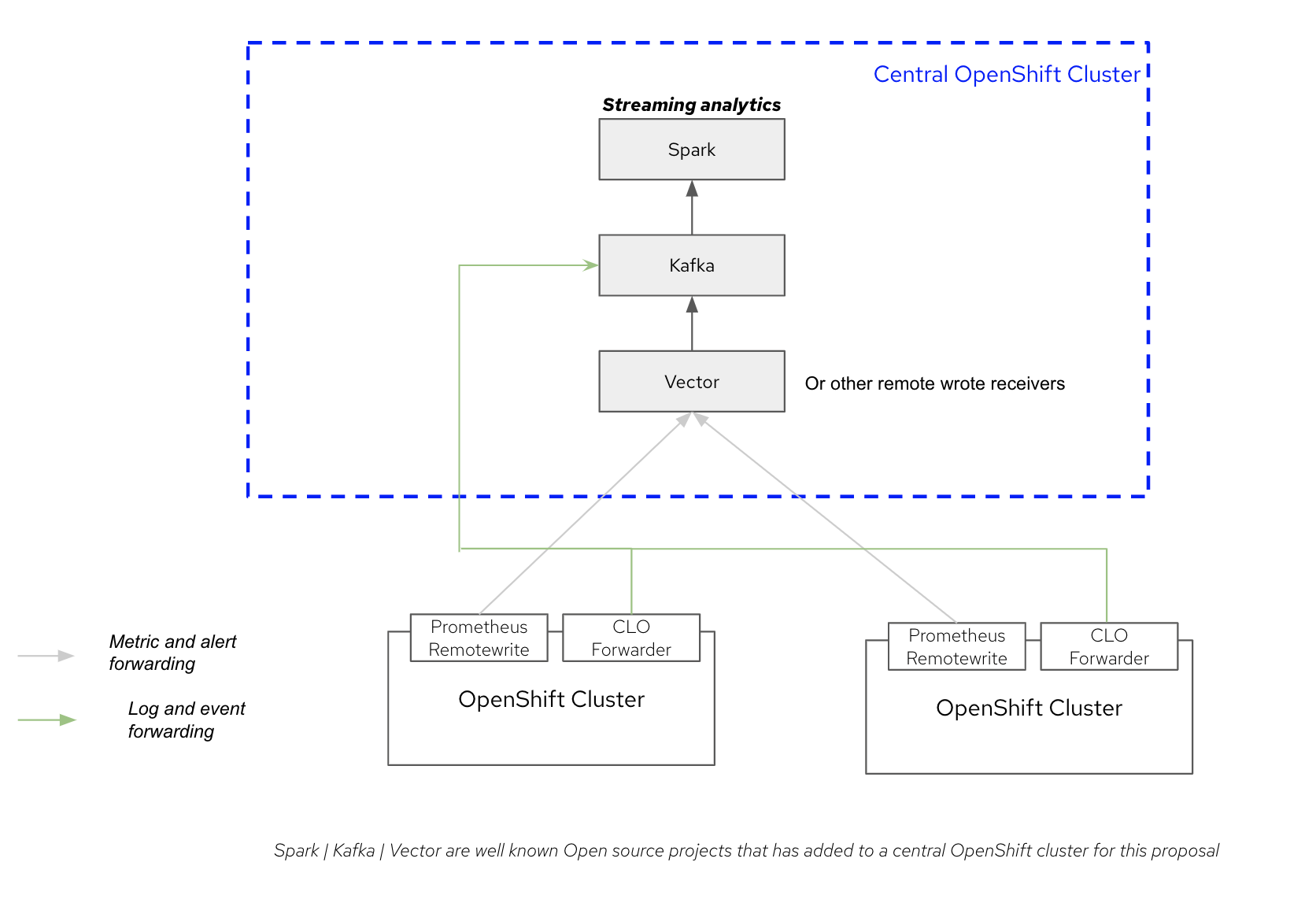

As you might guess, the same flow could be built with the Prometheus RemoteWrite API instead of ACM. The pros and cons of these two approaches are not relevant to the objective of this repository.

If you use a different (non-Prometheus) metric stream, the same principles apply. The only change needed is to the schema of the records in the metrics topic, as defined in the Python module that Spark runs (simpleKafkaMetricConsumer.py in this example).
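To make "changing the schema" concrete, here is a minimal plain-Python sketch (not Spark, and not taken from simpleKafkaMetricConsumer.py). It assumes each Kafka message is a JSON object with `name`, `tags`, `timestamp` and a `gauge.value` field; those field names, the `SCHEMA` mapping and the `parse_metric` helper are all hypothetical. The point is that the field mapping lives in one place, so a non-Prometheus stream only needs a different mapping. In a Spark job the same role would typically be played by a `StructType` passed to `from_json`.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, Optional, Tuple


@dataclass
class Metric:
    name: str
    labels: Dict[str, str]
    value: float
    timestamp: str


# Hypothetical mapping from Metric fields to JSON paths in a metrics-topic record.
# For a different (non-Prometheus) metric stream, only this mapping should change.
SCHEMA: Dict[str, Tuple[str, ...]] = {
    "name": ("name",),
    "labels": ("tags",),
    "value": ("gauge", "value"),
    "timestamp": ("timestamp",),
}


def _get(record: Any, path: Tuple[str, ...]) -> Any:
    # Walk a nested JSON object one key at a time.
    for key in path:
        record = record[key]
    return record


def parse_metric(raw: bytes, schema: Dict[str, Tuple[str, ...]] = SCHEMA) -> Optional[Metric]:
    """Decode one Kafka message value into a Metric, or return None if it does not fit the schema."""
    try:
        record = json.loads(raw)
        return Metric(
            name=str(_get(record, schema["name"])),
            labels=dict(_get(record, schema["labels"])),
            value=float(_get(record, schema["value"])),
            timestamp=str(_get(record, schema["timestamp"])),
        )
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing fields, or wrong types: drop the record.
        return None
```

Returning `None` instead of raising lets a streaming consumer skip malformed records without failing the whole batch.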

So a data flow like the one shown below can be achieved easily.

We use Spark Structured Streaming.

As a starting point, we have added a few simple examples: one for logs and one for metrics.

Streaming Metric Analytic: gets streaming metrics and keeps only the active alerts that are firing. Code here.
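The filtering idea can be sketched in plain Python. Prometheus exposes pending and firing alerts as the synthetic `ALERTS` series, with an `alertstate` label of `"pending"` or `"firing"`. The sketch assumes each decoded record is a dict with `name` and `tags` (label) fields; that record shape and the `firing_alerts` helper are hypothetical, not the repository's code.

```python
from typing import Any, Dict, Iterable, List


def firing_alerts(records: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return only the samples of Prometheus' ALERTS series whose alertstate label is "firing"."""
    firing = []
    for rec in records:
        # Assumed record shape: {"name": ..., "tags": {label: value, ...}, ...}
        labels = rec.get("tags") or {}
        # ALERTS carries both pending and firing alerts; keep only the firing ones.
        if rec.get("name") == "ALERTS" and labels.get("alertstate") == "firing":
            firing.append(rec)
    return firing
```

In a Spark job the same predicate would usually be a DataFrame filter on the parsed name and label columns.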

Streaming Log/Event Analytic: gets streaming infrastructure logs and Kubernetes events, and keeps only the current Kubernetes events. Code here.
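A plain-Python sketch of the event filter, assuming "current" means an event whose `lastTimestamp` (a real field of the Kubernetes Event object) falls within a recent window. The layout of the log records, with the Event object nested under `kubernetes.event`, is an assumption to verify against your own topic, and `current_k8s_events` and its five-minute default are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Iterable, List, Optional


def _parse_ts(value: str) -> datetime:
    # Kubernetes timestamps end in "Z"; fromisoformat() only accepts that from Python 3.11.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def current_k8s_events(
    records: Iterable[Dict[str, Any]],
    max_age: timedelta = timedelta(minutes=5),
    now: Optional[datetime] = None,
) -> List[Dict[str, Any]]:
    """Return Kubernetes events seen within `max_age` of `now`, skipping plain log lines."""
    now = now or datetime.now(timezone.utc)
    current = []
    for rec in records:
        # Assumed layout: event records carry the Event object under kubernetes.event.
        event = (rec.get("kubernetes") or {}).get("event")
        if not event or "lastTimestamp" not in event:
            continue  # an ordinary infrastructure log line, or an event without a timestamp
        if now - _parse_ts(event["lastTimestamp"]) <= max_age:
            current.append(event)
    return current
```

In Structured Streaming, recency is more commonly handled with event-time windows and a watermark (`withWatermark`) than with wall-clock comparisons like this one.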

Streaming Anomaly Detection on metrics: coming soon.
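The anomaly-detection example is not written yet. As an illustration of the kind of statistical model meant here, the sketch below scores each new sample of one series against the mean and standard deviation of its last N samples (a rolling z-score). This is our own simple example, not the planned implementation; `RollingZScore`, the window size and the threshold are arbitrary choices.

```python
import math
from collections import deque
from typing import Deque


class RollingZScore:
    """Flag a sample as anomalous if it lies more than `threshold` std devs from the rolling mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 5):
        self.values: Deque[float] = deque(maxlen=window)  # oldest samples fall off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def update(self, value: float) -> bool:
        anomalous = False
        # Score the new value against the history *before* adding it.
        if len(self.values) >= self.min_samples:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0 and abs(value - mean) / std > self.threshold:
                anomalous = True
        self.values.append(value)
        return anomalous
```

One detector instance is needed per series (metric name plus labels). In a Structured Streaming job that per-series history would have to live in Spark's stateful operators rather than in a Python object.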

To install the following prerequisites, follow this:

- Install ACM and configure Observability

- Install and configure Kafka

- Install and configure Vector

- Configure Observability to route all metrics to Vector

- Install and configure the ClusterLogging Operator, if you are also interested in logs and events

To install and test Spark, follow this.

To build the Docker container for the Spark driver, follow this.

To launch a real Spark application, follow this.