A Flask extension for automatically adding OpenAPI documentation to your Flask app.

OpenAPI Builder is an extension for your RESTful Flask API that makes it easy to build OpenAPI (formerly known as Swagger) documentation using the standard specification. It is designed to generate the documentation for your resources from a few simple parameters specified per endpoint. OpenAPI Builder does not depend on a specific (de-)serialization library (such as marshmallow or halogen), since its goal is to abstract over them. The most powerful feature of this package is that it inspects the (de-)serialization class. Thus, extending your class will automatically update your documentation, which keeps the documentation up to date.

To summarize, the key features are:

- Generate documentation according to the OpenAPI standard. This is the internet standard for writing RESTful API documentation.

- Keep documentation up to date. This is due to generating the documentation by inspecting the (de-)serialization class.

- Reduce the development time spent writing documentation by up to 90%. This is due to automating the repetitive work of writing documentation.

- Abstract upon any (de-)serialization class. It works with the parsing library of your choice.

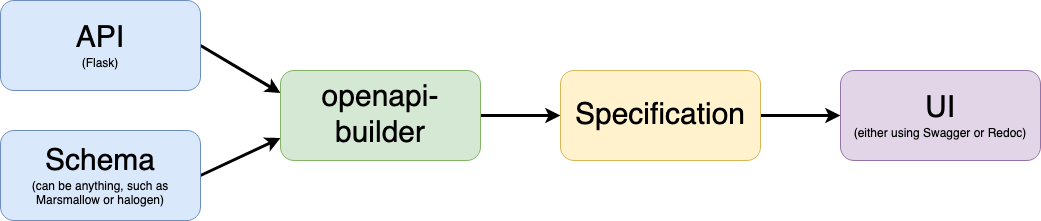

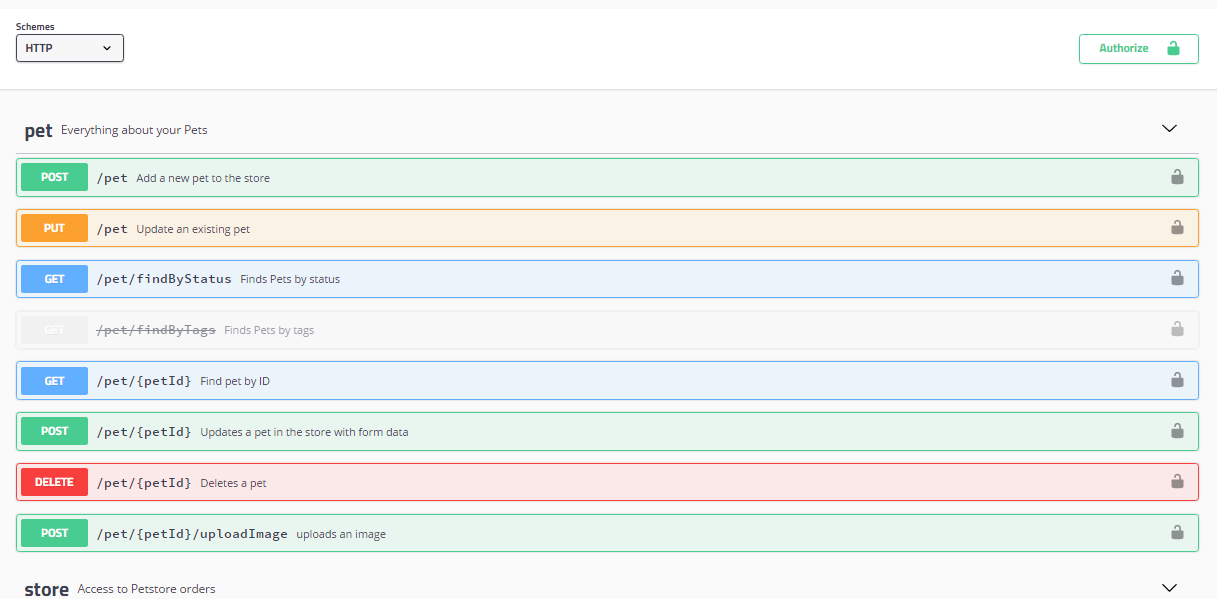

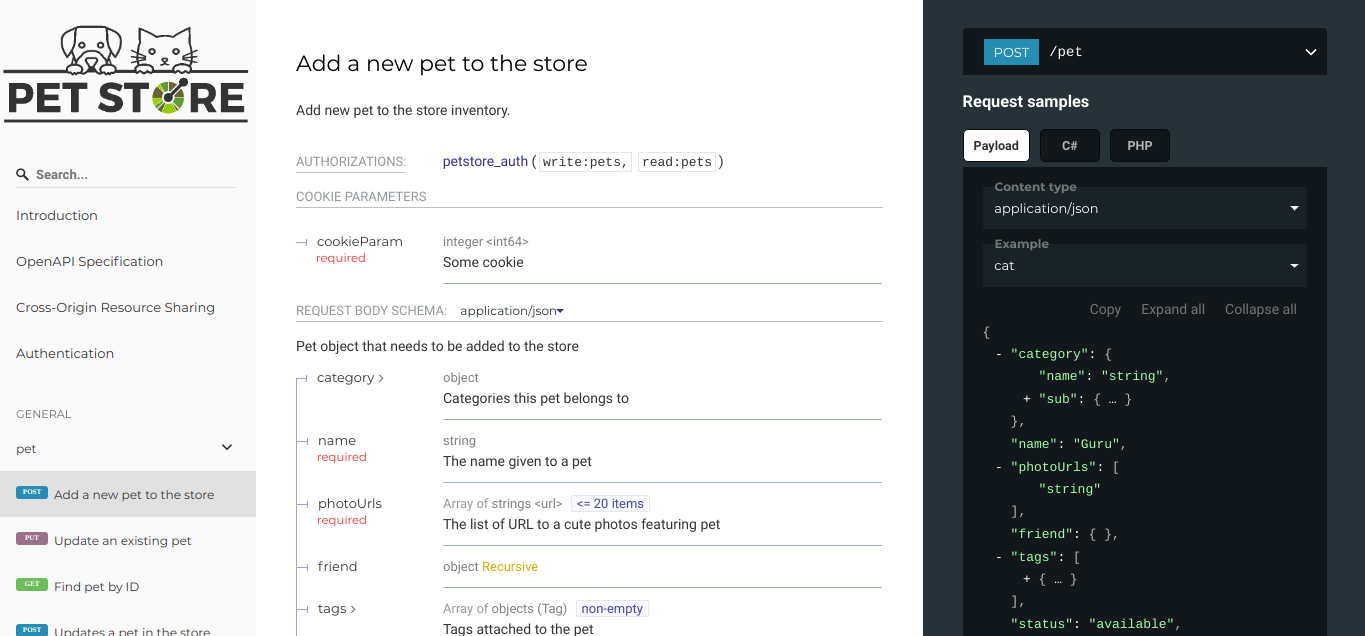

OpenAPI Builder uses the Flask application and schemas to retrieve information about the interactions of your API. This information is used to generate a specification, which is a JSON file in the industry-standard OpenAPI format. This specification can be visualized using any UI that supports the OpenAPI specification, such as Swagger UI or Redoc.
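To make the notion of a specification concrete, here is a hand-written sketch of a minimal OpenAPI 3 document for a single `/users` endpoint, built as a Python dictionary and serialized to JSON. It only illustrates the format and is not output captured from OpenAPI Builder; the title, version, and schema contents are assumed values.

```python
import json

# Hand-written illustration of a minimal OpenAPI 3 document.
# All values below are assumptions chosen for the example.
specification = {
    "openapi": "3.0.3",
    "info": {"title": "Example API", "version": "1.0.0"},
    "paths": {
        "/users": {
            "get": {
                "summary": "Returns a list of users.",
                "responses": {
                    "200": {
                        "description": "A list of users.",
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "array",
                                    "items": {"$ref": "#/components/schemas/User"},
                                }
                            }
                        },
                    }
                },
            }
        }
    },
    "components": {
        "schemas": {
            "User": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "email": {"type": "string", "format": "email"},
                    "register_date": {"type": "string", "format": "date-time"},
                },
            }
        }
    },
}

# Serialize to the JSON form that a documentation UI would load.
specification_json = json.dumps(specification, indent=2)
print(specification_json)
```

A UI such as Swagger UI or Redoc reads a document of this shape and renders each path, response, and schema as browsable documentation.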

Installation is simple via pip:

    pip install openapi-builder

After installation, it's time to build the documentation for the resources in your Flask application and expose it so your external users can read it. For working examples, see this folder.

The extension can be initialized using the following snippet:

    from flask import Flask

    from openapi_builder import OpenApiDocumentation

    app = Flask(__name__)

    # option 1: initialize directly
    documentation = OpenApiDocumentation(app=app)

    # option 2: add the app later.
    documentation = OpenApiDocumentation()
    documentation.init_app(app)

The generated documentation is automatically exposed at http://localhost:5000/documentation. If you don't want this, you can disable it in the options. The included documentation UI is taken from the swagger-codegen page.

After the documentation UI has been set up, it's time to add documentation for your resources. This can be achieved using the add_documentation decorator. Consider an example endpoint of your RESTful API that looks like this:

    from flask import Flask, jsonify
    from marshmallow import Schema, fields


    class UserSchema(Schema):
        """User response schema."""

        name = fields.Str()
        """Name of the user."""

        email = fields.Email()
        """Email of the user."""

        register_date = fields.DateTime()
        """When the user was registered."""


    app = Flask(__name__)  # use the same app as used for the UI.


    @app.route("/users")
    def users():
        """Returns a list of users, serialized using Marshmallow."""
        # User is your own model class; its definition is omitted here.
        users = [
            User(name="John", lastname="Doe", email="johndoe@gmail.com"),
            User(name="Jane", lastname="Doe", email="janedoe@gmail.com"),
        ]
        # dump() returns plain Python data that jsonify() can serialize;
        # dumps() would return an already-encoded JSON string.
        return jsonify(UserSchema(many=True).dump(users))

Documentation for this resource is generated by adding the decorator.

    ...
    from http import HTTPStatus
    from openapi_builder import add_documentation
    ...

    @app.route("/users")
    @add_documentation(
        responses={HTTPStatus.OK: UserSchema(many=True)},
        summary="Returns a list of users.",
        description="More extensive information that fully describes the endpoint.",
    )
    def users():
        ...

For a full overview of all applicable parameters, see this configuration page.

That's all folks. You can view your documentation at http://localhost:5000/documentation, and inspect the configuration at http://localhost:5000/documentation-configuration. Whenever the schema changes because a property is added, removed, or updated, the change is automatically reflected in the OpenAPI documentation.
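The idea that documentation follows the schema can be sketched without any third-party library. The example below is not how OpenAPI Builder is implemented; it is a simplified illustration that uses a standard-library dataclass in place of a Marshmallow schema, and the `TYPE_MAP` table and `schema_for` helper are hypothetical names. Because the schema object is derived by inspecting the class, adding a field changes the generated output with no further edits.

```python
from dataclasses import dataclass, fields
from datetime import datetime

# Hypothetical mapping from Python types to OpenAPI schema fragments.
TYPE_MAP = {
    str: {"type": "string"},
    int: {"type": "integer"},
    bool: {"type": "boolean"},
    datetime: {"type": "string", "format": "date-time"},
}


def schema_for(cls):
    """Build an OpenAPI-style schema object by inspecting a dataclass."""
    return {
        "type": "object",
        "properties": {f.name: dict(TYPE_MAP[f.type]) for f in fields(cls)},
    }


@dataclass
class User:
    name: str
    email: str


@dataclass
class UserWithDate(User):
    # Extending the class is enough to extend the generated schema.
    register_date: datetime


print(sorted(schema_for(User)["properties"]))
print(sorted(schema_for(UserWithDate)["properties"]))
```

The second call lists `register_date` alongside the inherited fields, even though `schema_for` itself was not touched.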

Contributions are welcome! If you can see a way to improve this package:

- Click the fork button.

- Make your changes and submit a pull request.

Or, to report a bug or request a new feature, open an issue.

This section describes development standards for this project.

Black is an uncompromising Python code formatter. By using it, you cede control over minutiae of hand-formatting. But in return, you no longer have to worry about formatting your code correctly, since black will handle it. Blackened code looks the same for all authors, ensuring consistent code formatting within your project.

The format used by Black makes code review faster by producing the smallest diffs possible.

Black's output is always stable. For a given block of code, a fixed version of black will always produce the same output. However, it should be noted that different versions of black will produce different outputs. Black is configured here:

This repository comes with a pre-commit stack. This is a set of git hooks which are executed every time a commit is made. The hooks catch errors as they occur, and will automatically fix some of these errors.

To set up the pre-commit hooks, run the following code from within the repo directory:

    pip install -r requirements-dev.txt
    pre-commit install

Whenever you try to commit code that is flagged by the pre-commit hooks, the commit will not go through. Some of the pre-commit hooks (such as black and isort) will automatically modify the code to fix the issues. When this happens, you'll have to stage the changes made by the commit hooks and then commit again. Other pre-commit hooks will not modify the code and will only report issues, which you'll then have to fix manually.

To run the pre-commit stack on all the files at any time:

pre-commit run --all-files

To force a commit to go through without passing the pre-commit hooks use the --no-verify flag:

git commit --no-verify

The pre-commit stack which comes with the template is highly opinionated, and includes the following operations:

- Code is reformatted to use the black style. Any code inside docstrings will be formatted to black using blacken-docs. All code cells in Jupyter notebooks are also formatted to black using black_nbconvert.

- All Jupyter notebooks are cleared using nbstripout.

- Imports are automatically sorted using isort.

- flake8 is run to check for conformity to the python style guide PEP-8, along with several other formatting issues.

- setup-cfg-fmt is used to format any setup.cfg files.

- Several hooks from pre-commit are used to screen for non-language specific git issues, such as incomplete git merges, overly large files being committed to the repo, bugged JSON and YAML files. JSON files are also prettified automatically to have standardised indentation. Entries in requirements.txt files are automatically sorted alphabetically.

- Several hooks from pre-commit specific to python are used to screen for rST formatting issues, and ensure noqa flags always specify an error code to ignore.

Once it is set up, the pre-commit stack will run locally on every commit. The pre-commit stack will also run on GitHub with one of the action workflows, which ensures PRs are checked without having to rely on contributors to enable pre-commit locally.

The script docs/conf.py is based on the Sphinx default configuration.

It is set up to work well out of the box, with several features added in.

Documentation is deployed to GitHub Pages and is available at https://flyingbird95.github.io/openapi-builder/.

The gh-pages documentation is refreshed every time there is a push to the master branch.

Note that only one copy of the documentation is served (the latest version).

The web documentation can be built locally with:

    pip install -r requirements-docs.txt
    make -C docs html

And view the documentation like so:

sensible-browser docs/_build/html/index.html

Or build the pdf documentation:

make -C docs latexpdf

On Windows, this becomes:

    cd docs
    make html
    make latexpdf
    cd ..

- The README.rst will become part of the generated documentation (via a link file docs/source/readme.rst). Note that the first line of README.rst is not included in the documentation, since this is expected to contain badges which we render on GitHub, but not include in the documentation pages.

- The docstrings in all modules, functions, classes and methods will be used to build a set of API documentation using autodoc. Our docs/conf.py is also set up to automatically call autodoc whenever it is run, and the output files which it generates are on the gitignore list. This means it will automatically generate a fresh API description which exactly matches the current docstrings every time the documentation is generated.

- Docstrings can be formatted in plain reST, or using the numpy format (recommended), or Google format. Support for numpy and Google formats is through the napoleon extension (which is enabled by default).

- Functions in the Python core and common packages can be referenced, and they will automatically be hyperlinked to the appropriate documentation. This is done using intersphinx mappings, which can be seen at the bottom of the docs/conf.py file.

- The documentation theme is sphinx-book-theme. Alternative themes can be found at sphinx-themes.org, sphinxthemes.com, and writethedocs.
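For orientation, the Sphinx features listed above correspond to settings along the following lines. This is a minimal sketch rather than a copy of this project's docs/conf.py; the extension names and theme name are the standard Sphinx identifiers, while the specific intersphinx targets are assumed for illustration.

```python
# Sketch of a Sphinx conf.py fragment; not this project's actual file.
extensions = [
    "sphinx.ext.autodoc",      # build API documentation from docstrings
    "sphinx.ext.napoleon",     # parse numpy and Google style docstrings
    "sphinx.ext.intersphinx",  # hyperlink to other projects' documentation
]

# Assumed example targets; each entry maps a name to (base URL, inventory).
intersphinx_mapping = {
    "python": ("https://docs.python.org/3", None),
    "numpy": ("https://numpy.org/doc/stable/", None),
}

html_theme = "sphinx_book_theme"
```

With a mapping like this in place, a docstring reference to a standard-library object resolves to a link into the Python documentation.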

Package metadata is consolidated into one place, the file openapi_builder/__meta__.py.

This is done to only write the metadata once in this centralised location, and everything else (packaging, documentation, etc) picks it up from there.

This is similar to single-sourcing the package version, but for all metadata.

This information is available to end-users with import openapi_builder; print(openapi_builder.__meta__).

The version information is also accessible at openapi_builder.__version__, as per PEP-396.

The setup.py script is used to build and install the package.

The package can be installed from source with:

pip install .

or alternatively with:

python setup.py install

But do remember that as a developer, the package should be installed in editable mode, using either:

pip install --editable .

or:

python setup.py develop

which will mean changes to the source will affect the installed package immediately without having to reinstall it.

By default, when the package is installed, only the main requirements listed in requirements.txt will be installed with it.

Requirements listed in requirements-dev.txt, requirements-docs.txt, and requirements-test.txt are optional extras.

The setup.py script is configured to include these as extras named dev, docs, and test.

They can be installed along with the package, for example:

pip install .[dev]

The same form works for the docs and test extras.

Any additional files named requirements-EXTRANAME.txt will also be collected automatically and made available with the corresponding name EXTRANAME.

Another extra named all captures all of these optional dependencies.
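The automatic collection of extras can be pictured as follows. This is a sketch under the assumption that each requirements-EXTRANAME.txt file lists one requirement per line; the `collect_extras` helper is a hypothetical name, and the real setup.py may be written differently.

```python
import glob
import os
import re


def collect_extras(directory="."):
    """Map each requirements-NAME.txt file to an extra called NAME."""
    extras = {}
    pattern = os.path.join(directory, "requirements-*.txt")
    for path in sorted(glob.glob(pattern)):
        match = re.fullmatch(r"requirements-(.+)\.txt", os.path.basename(path))
        if match is None:
            continue
        with open(path) as handle:
            lines = [line.strip() for line in handle]
        # Skip blank lines and comments.
        extras[match.group(1)] = [
            line for line in lines if line and not line.startswith("#")
        ]
    # The "all" extra is the union of every other extra.
    extras["all"] = sorted({req for reqs in extras.values() for req in reqs})
    return extras
```

The resulting dictionary has the shape expected by the `extras_require` argument of `setuptools.setup`, so a file named requirements-dev.txt becomes installable as `.[dev]`.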

The README file is automatically included in the metadata when setup.py builds wheels for PyPI.

The rest of the metadata comes from openapi_builder/__meta__.py.

Our template setup.py file is based on the example from setuptools documentation, and the comprehensive example from Kenneth Reitz (released under MIT License), with further features added.

GitHub features the ability to run various workflows whenever code is pushed to the repo or a pull request is opened. This is one of several services that can be used to continually run the unit tests and ensure changes can be integrated together without issue. It is also useful for ensuring that style guides are adhered to.

Five workflows are included:

- docs

  - The docs workflow ensures the documentation builds correctly, and presents any errors and warnings nicely as annotations. The available html documentation is automatically deployed to the gh-pages branch and https://flyingbird95.github.io/openapi-builder/.

- pre-commit

  - Runs the pre-commit stack. Ensures all contributions are compliant, even if a contributor has not set up pre-commit on their local machine.

- lint

  - Checks the code uses the black style and tests for flake8 errors. Note that the lint workflow is superfluous, due to the pre-commit hooks.

- test

  - Runs pytest, and pushes coverage reports to Codecov.

- release candidate tests

  - The release candidate tests workflow runs the unit tests on more Python versions and operating systems than the regular test workflow. This runs on all tags, plus pushes and PRs to branches named like "v1.2.x", etc. Wheels are built for all the tested systems, and stored as artifacts for convenience when shipping a new distribution.

When the publish job is enabled on the release candidate tests workflow, it can also push built release candidates to the Test PyPI server.