Official implementation of "Decentralization and Acceleration Enables Large-Scale Bundle Adjustment" by Taosha Fan, Joseph Ortiz, Ming Hsiao, Maurizio Monge, Jing Dong, Todd Murphey, and Mustafa Mukadam. In Robotics: Science and Systems (RSS), 2023.

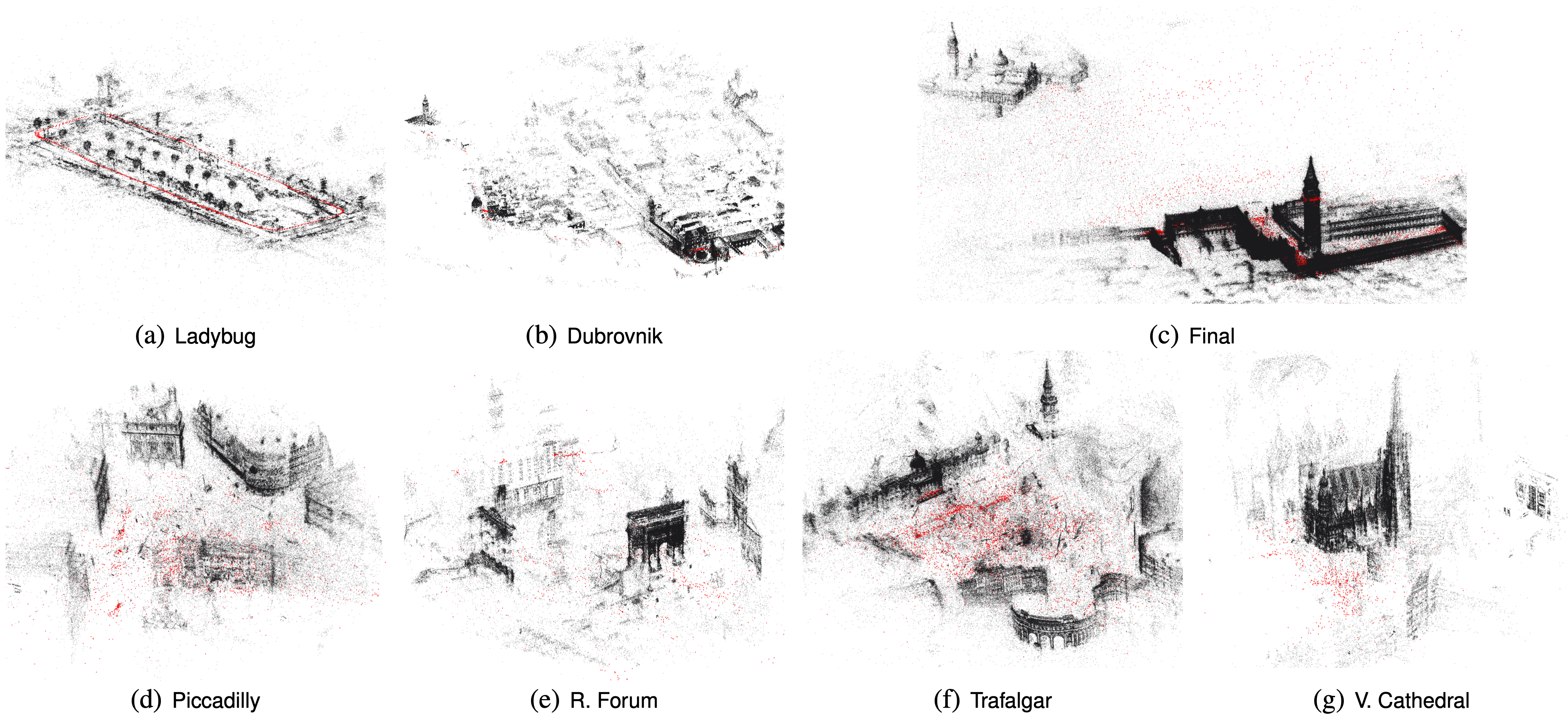

In this work, we present Decentralized and Accelerated Bundle Adjustment (DABA), a method that addresses the compute and communication bottlenecks of bundle adjustment problems of arbitrary scale. Despite limited peer-to-peer communication, DABA achieves provable convergence to first-order critical points under mild conditions. In extensive benchmarks on public datasets, DABA converges much faster than comparable decentralized baselines, with similar memory usage and communication load. Compared to centralized baselines running on a single device, DABA yields more accurate solutions despite being decentralized, with speedups of up to 953.7x over Ceres and 174.6x over DeepLM.

- Eigen >= 3.4.0

- CUDA >= 11.4

- CMake >= 3.18

- OpenMPI

- NCCL

- CUB >= 11.4

- Thrust >= 2.0

- Glog

- Boost >= 1.60

- Ceres >= 2.0 (optional)
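Before building, it can help to confirm that the toolchain meets the minimum versions listed above. The following is a minimal sketch, not part of the DABA repository: the helper names `version_ge`, `check_tool`, and `first_version` are hypothetical, the comparison assumes GNU `sort -V` is available, and only CMake, CUDA (via `nvcc`), and `mpiexec` are checked. Libraries such as Eigen, NCCL, Glog, and Boost are left to CMake to detect.

```shell
#!/usr/bin/env bash
# Hypothetical pre-build check; not shipped with DABA.

# version_ge A B: succeeds if version A >= version B.
# Assumes GNU coreutils `sort -V` (version sort) is available.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# check_tool NAME MIN_VERSION FOUND_VERSION: prints a one-line status.
check_tool() {
    local name="$1" min="$2" found="$3"
    if [ -z "$found" ]; then
        echo "$name: not found"
    elif version_ge "$found" "$min"; then
        echo "$name: $found (ok, need >= $min)"
    else
        echo "$name: $found (too old, need >= $min)"
    fi
}

# Read text on stdin and print the first dotted version number in it.
first_version() { grep -oE '[0-9]+(\.[0-9]+)+' | head -n 1; }

cmake_ver="$(cmake --version 2>/dev/null | first_version)"
nvcc_ver="$(nvcc --version 2>/dev/null | grep -i release | first_version)"

# Minimum versions are taken from the dependency list above.
check_tool "CMake" 3.18 "$cmake_ver"
check_tool "CUDA (nvcc)" 11.4 "$nvcc_ver"

if command -v mpiexec >/dev/null 2>&1; then
    echo "mpiexec: found"
else
    echo "mpiexec: not found"
fi
```

A "not found" line only means the tool is not on `PATH`; it may still be installed in a location that CMake can be pointed to.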

Download BAL Dataset

wget https://grail.cs.washington.edu/projects/bal/data/ladybug/problem-1723-156502-pre.txt.bz2

bzip2 -dk problem-1723-156502-pre.txt.bz2

git clone https://github.com/facebookresearch/DABA.git

cd DABA

mkdir release

cd release

cmake -DCMAKE_BUILD_TYPE=Release ..

make -j16

mpiexec -n NUM_DEVICES ./bin/mpi_daba_bal_dataset --dataset /path/to/your/dataset --iters 1000 --loss "trivial" --accelerated true --save true

If you find this work useful for your research, please cite our paper:

@article{fan2023daba,

title={Decentralization and Acceleration Enables Large-Scale Bundle Adjustment},

author={Fan, Taosha and Ortiz, Joseph and Hsiao, Ming and Monge, Maurizio and Dong, Jing and Murphey, Todd and Mukadam, Mustafa},

journal={arXiv:2305.07026},

year={2023},

}

The majority of this project is licensed under the MIT License. However, a portion of the code is available under the Apache 2.0 License.