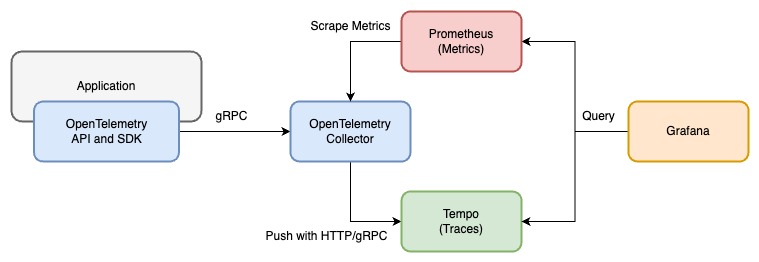

Monitoring application performance with OpenTelemetry SDK, OpenTelemetry Collector, Prometheus, and Grafana:

- OpenTelemetry SDK: Generate traces and push them to OpenTelemetry Collector with zero-code instrumentation

- OpenTelemetry Collector: Receive traces and convert them into metrics with the Span Metrics Connector

- Prometheus: Scrape metrics from OpenTelemetry Collector

- Grafana: Visualize metrics

- Tempo: Receive and store traces; optional, because it is not needed for converting traces into metrics

This project is inspired by Jaeger Service Performance Monitoring.

Start all services with docker-compose:

```bash
docker-compose up -d
```

This project includes FastAPI (Python), Spring Boot (Java), and Express (Node.js) applications to demonstrate the out-of-the-box capability of OpenTelemetry zero-code instrumentation. Comment out the services you don't want to run in docker-compose.yml.

Send requests to the applications with siege:

```bash
bash request-script.sh
```

Or send requests with k6:

```bash
k6 run --vus 3 --duration 300s k6-script.js
```

Log in to Grafana (http://localhost:3000/) with the default admin user and check the predefined dashboard OpenTelemetry APM:

- Username: admin

- Password: admin

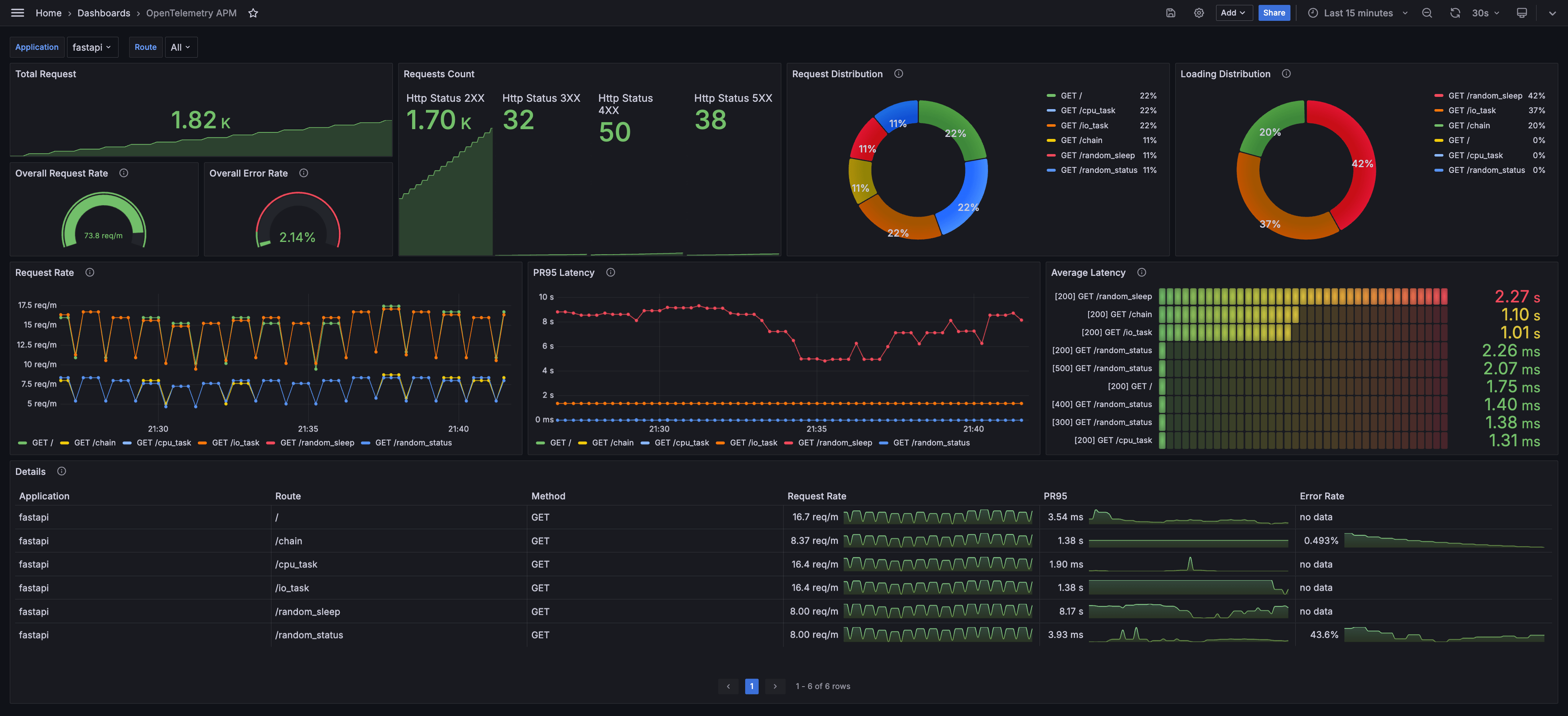

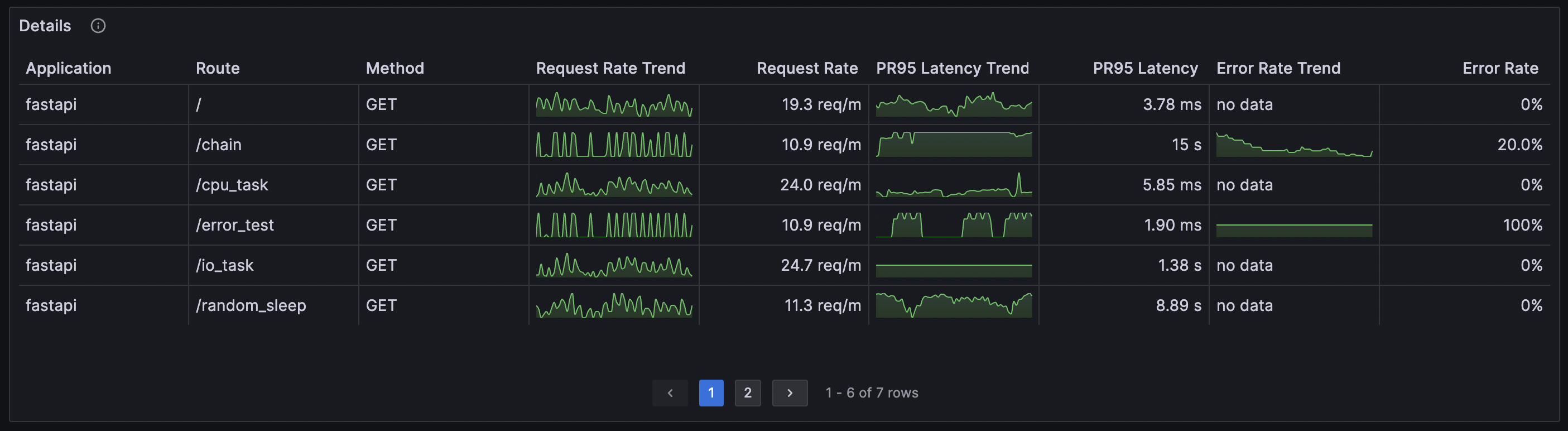

Dashboard screenshot:

This dashboard is also available on Grafana Dashboards.


OpenTelemetry provides two ways to instrument your application:

- Code-based Instrumentation: Modify the application codebase to create spans and export them.

- Zero-code Instrumentation: Use mechanisms provided by the language runtime to inject code into the application that creates and exports spans, without modifying the application codebase.

In this project, we use zero-code instrumentation to instrument the applications.

OpenTelemetry Instrumentation for Java provides a zero-code way (Java 8+ is required) to instrument the application with an agent JAR:

```bash
java -javaagent:path/to/opentelemetry-javaagent.jar -jar myapp.jar
```

The agent supports many libraries, including Spring Web MVC. According to the documentation:

It can be used to capture telemetry data at the “edges” of an app or service, such as inbound requests, outbound HTTP calls, database calls, and so on.

So we don't need to modify a single line of our codebase; the agent handles everything automatically.

The configuration options, such as the exporter settings, are listed in the documentation. The agent reads them from one or more of the following sources (ordered from highest to lowest priority):

- system properties

- environment variables

- the configuration file

- the ConfigPropertySource SPI
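The following minimal Python sketch makes the priority order concrete. It consults each source in turn and returns the first value it finds. It is an illustration, not the agent's actual implementation. The `resolve` and `property_to_env_name` helpers are hypothetical, and so are the plain dictionaries standing in for each source. The name mapping follows the agent's documented convention that a system property such as `otel.service.name` corresponds to the environment variable `OTEL_SERVICE_NAME`.

```python
from typing import Mapping, Optional


def property_to_env_name(prop: str) -> str:
    """Map a system-property style key to its environment-variable form,
    e.g. 'otel.service.name' -> 'OTEL_SERVICE_NAME'."""
    return prop.upper().replace(".", "_").replace("-", "_")


def resolve(
    prop: str,
    system_properties: Mapping[str, str],
    environment: Mapping[str, str],
    config_file: Mapping[str, str],
    spi_defaults: Mapping[str, str],
) -> Optional[str]:
    """Return the value from the highest-priority source that defines `prop`.

    Order (highest first): system properties, environment variables,
    configuration file, ConfigPropertySource SPI.
    """
    candidates = (
        system_properties.get(prop),
        environment.get(property_to_env_name(prop)),
        config_file.get(prop),
        spi_defaults.get(prop),
    )
    for value in candidates:
        if value is not None:
            return value
    return None


# The environment variable wins over the (hypothetical) configuration file entry.
env = {"OTEL_SERVICE_NAME": "spring-boot"}
print(resolve("otel.service.name", {}, env, {"otel.service.name": "from-file"}, {}))
# spring-boot
```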

In this project we use environment variables to set the agent configuration:

```yaml
# docker-compose.yml
spring-boot:
  image: ghcr.io/blueswen/opentelemetry-apm/springboot:latest
  environment:
    - OTEL_EXPORTER=otlp_span
    - OTEL_EXPORTER_OTLP_ENDPOINT=http://otel-collector:4317
    - OTEL_EXPORTER_OTLP_INSECURE=true
    - OTEL_METRICS_EXPORTER=none
    - OTEL_RESOURCE_ATTRIBUTES=service.name=spring-boot
  command: "java -javaagent:/opentelemetry-javaagent.jar -jar /app.jar"
```

If you are interested, check Spring Boot with Observability for more details.
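The Spring Boot service names itself through `OTEL_RESOURCE_ATTRIBUTES`, while the FastAPI and Express services use `OTEL_SERVICE_NAME`. The minimal sketch below shows how the two settings combine under the OpenTelemetry SDK environment-variable specification as we understand it. The attributes are comma-separated `key=value` pairs with percent-encoded values, and `OTEL_SERVICE_NAME` takes precedence over a `service.name` entry. The helper names are hypothetical, and real SDKs also merge detector-provided attributes.

```python
from typing import Dict, Mapping
from urllib.parse import unquote


def parse_resource_attributes(raw: str) -> Dict[str, str]:
    """Parse 'k1=v1,k2=v2' into a dict, percent-decoding the values."""
    attributes: Dict[str, str] = {}
    for pair in raw.split(","):
        if not pair.strip():
            continue  # tolerate empty entries such as a trailing comma
        key, sep, value = pair.partition("=")
        if not sep:
            continue  # skip malformed entries that have no '='
        attributes[key.strip()] = unquote(value.strip())
    return attributes


def effective_resource(env: Mapping[str, str]) -> Dict[str, str]:
    """Combine OTEL_RESOURCE_ATTRIBUTES and OTEL_SERVICE_NAME;
    OTEL_SERVICE_NAME wins when both set service.name."""
    attributes = parse_resource_attributes(env.get("OTEL_RESOURCE_ATTRIBUTES", ""))
    if env.get("OTEL_SERVICE_NAME"):
        attributes["service.name"] = env["OTEL_SERVICE_NAME"]
    return attributes


print(effective_resource(
    {"OTEL_RESOURCE_ATTRIBUTES": "service.name=spring-boot,deployment.environment=dev"}
))
# {'service.name': 'spring-boot', 'deployment.environment': 'dev'}
```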

OpenTelemetry Instrumentation for Python provides a zero-code way to instrument applications that use many popular libraries and frameworks. Zero-code instrumentation dynamically injects bytecode to gather telemetry.

To use zero-code instrumentation, we need to install at least three packages:

- opentelemetry-distro: OpenTelemetry API and SDK

- opentelemetry-exporter: Exporter for traces and metrics, which must match the configured exporter setting; we use opentelemetry-exporter-otlp here

- opentelemetry-instrumentation: Provides instrumentation for various libraries and frameworks. There are two installation methods:

  - Install the instrumentation packages directly, for example opentelemetry-instrumentation-fastapi, opentelemetry-instrumentation-requests, opentelemetry-instrumentation-httpx, etc.

  - Alternatively, use the `opentelemetry-bootstrap` command from opentelemetry-instrumentation. Run `opentelemetry-bootstrap -a install` to automatically install all available instrumentation libraries based on the currently installed libraries and frameworks. In this project, we use this method to install all available instrumentation libraries.
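The idea behind `opentelemetry-bootstrap` can be sketched in a few lines. The tool inspects the installed distributions and picks the instrumentation package for each supported library. This sketch is not the tool's real logic. The `INSTRUMENTATIONS` table is a hypothetical three-entry subset built from the package names mentioned above, and the real tool ships its own list with version constraints.

```python
from importlib import metadata
from typing import Iterable, List

# Hypothetical, tiny subset of a library -> instrumentation-package table.
INSTRUMENTATIONS = {
    "fastapi": "opentelemetry-instrumentation-fastapi",
    "requests": "opentelemetry-instrumentation-requests",
    "httpx": "opentelemetry-instrumentation-httpx",
}


def installed_distributions() -> List[str]:
    """Lowercased names of the distributions installed in this environment."""
    return sorted({(dist.metadata["Name"] or "").lower() for dist in metadata.distributions()})


def instrumentations_for(installed: Iterable[str]) -> List[str]:
    """Return the instrumentation packages matching the installed libraries."""
    names = {name.lower() for name in installed}
    return sorted(pkg for lib, pkg in INSTRUMENTATIONS.items() if lib in names)


# Output depends on what is installed in the current environment.
print(instrumentations_for(installed_distributions()))
```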

There are two ways to configure OpenTelemetry Instrumentation for Python: environment variables and command-line arguments.

In this project we use environment variables to set the zero-code instrumentation configuration:

```yaml
# docker-compose.yml
fastapi:
  image: ghcr.io/blueswen/opentelemetry-apm/fastapi:latest
  environment:
    - OTEL_TRACES_EXPORTER=otlp
    - OTEL_EXPORTER_OTLP_ENDPOINT=http://otel-collector:4317
    - OTEL_METRICS_EXPORTER=none
    - OTEL_SERVICE_NAME=fastapi
  command: "opentelemetry-instrument python main.py"
```

Here is a sample command with CLI arguments:

```bash
opentelemetry-instrument \
  --traces_exporter otlp \
  --metrics_exporter none \
  --service_name fastapi \
  --exporter_otlp_endpoint http://otel-collector:4317 \
  python main.py
```

If you are interested, check FastAPI with Observability for more details.
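The environment-variable and CLI configurations for the FastAPI service are equivalent. Each flag is the environment variable name without the `OTEL_` prefix, lowercased. The minimal sketch below applies that rule to rebuild the sample command. The rule is inferred from the two configurations shown here and matches the `opentelemetry-instrument` documentation as we understand it. The `env_to_cli_args` helper is hypothetical.

```python
from typing import Dict, List


def env_to_cli_args(env: Dict[str, str]) -> List[str]:
    """Translate OTEL_* environment variables into opentelemetry-instrument flags,
    e.g. OTEL_SERVICE_NAME=fastapi -> ['--service_name', 'fastapi']."""
    args: List[str] = []
    for name, value in env.items():
        if not name.startswith("OTEL_"):
            continue  # only OpenTelemetry settings map to CLI flags
        flag = "--" + name[len("OTEL_"):].lower()
        args.extend([flag, value])
    return args


env = {
    "OTEL_TRACES_EXPORTER": "otlp",
    "OTEL_METRICS_EXPORTER": "none",
    "OTEL_SERVICE_NAME": "fastapi",
    "OTEL_EXPORTER_OTLP_ENDPOINT": "http://otel-collector:4317",
}
command = ["opentelemetry-instrument", *env_to_cli_args(env), "python", "main.py"]
print(" ".join(command))
# opentelemetry-instrument --traces_exporter otlp --metrics_exporter none --service_name fastapi --exporter_otlp_endpoint http://otel-collector:4317 python main.py
```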

OpenTelemetry JavaScript Contrib provides a zero-code way to instrument Node.js applications built on many frameworks and libraries.

To use zero-code instrumentation, we need to install two packages:

- @opentelemetry/api

- @opentelemetry/auto-instrumentations-node: Zero-code instrumentation was formerly known as automatic instrumentation, hence the package name auto-instrumentations-node.

Then we can configure the zero-code instrumentation with environment variables and use Node's `--require` flag to load the auto-instrumentations:

```bash
export OTEL_TRACES_EXPORTER=otlp
export OTEL_EXPORTER_OTLP_ENDPOINT=http://otel-collector:4317
export OTEL_EXPORTER_OTLP_PROTOCOL=grpc
export OTEL_SERVICE_NAME=express
node --require @opentelemetry/auto-instrumentations-node/register app.js
```

In this project we use environment variables to set the zero-code instrumentation configuration:

```yaml
# docker-compose.yml
express:
  image: ghcr.io/blueswen/opentelemetry-apm/express:latest
  environment:
    - OTEL_EXPORTER_OTLP_PROTOCOL=grpc
    - OTEL_EXPORTER_OTLP_ENDPOINT=http://otel-collector:4317
    - OTEL_SERVICE_NAME=express
  command: "node --require '@opentelemetry/auto-instrumentations-node/register' app.js"
```

Check the documentation and GitHub repo for more details about zero-code instrumentation. The environment variables are listed in the Java SDK configuration document.

Work in progress

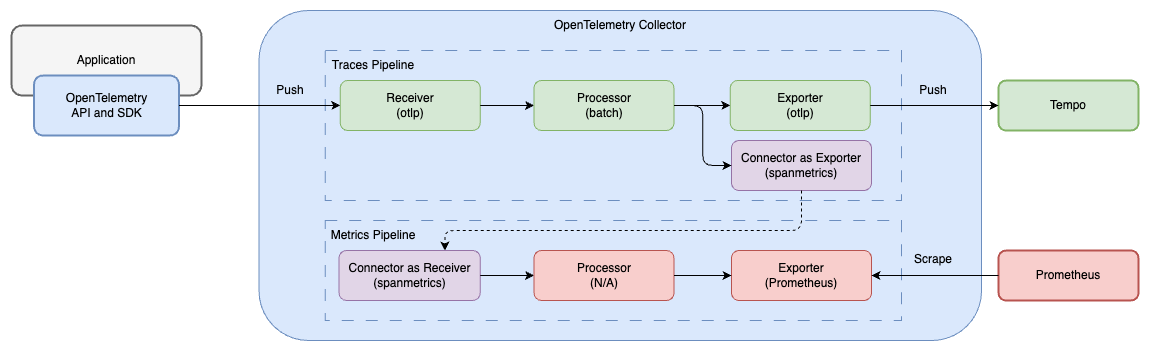

OpenTelemetry Collector is a vendor-agnostic agent for collecting telemetry data: it can receive telemetry in different formats and export it to different backends. In this project, we use OpenTelemetry Collector to receive traces from the applications over OTLP and convert them into metrics with the Span Metrics Connector.

The Contrib distribution of OpenTelemetry Collector is required for the Span Metrics Connector. The core distribution only includes the core components, while the Contrib distribution provides additional components, including the Span Metrics Connector.

```yaml
# docker-compose.yml
otel-collector:
  image: otel/opentelemetry-collector-contrib:0.91.0 # Use Contrib distribution
  command:
    - "--config=/conf/config.yaml"
  volumes:
    - ./etc/otel-collector-config.yaml:/conf/config.yaml
  ports:
    - "4317" # OTLP gRPC receiver
    - "4318" # OTLP http receiver
    - "8889" # Prometheus metrics exporter port
  restart: on-failure
  depends_on:
    - tempo
```

To enable the Span Metrics Connector and expose metrics in Prometheus format, we need to:

- Add `spanmetrics` to `connectors`: Enable and configure the spanmetrics connector

  - `dimensions`: Extract span attributes to Prometheus labels

- Add `spanmetrics` to the traces pipeline `exporters`: Let the traces pipeline export traces to the spanmetrics connector

- Add `spanmetrics` to the metrics pipeline `receivers`: Set the spanmetrics connector as the receiver of the metrics pipeline, so its data comes from the traces pipeline exporter

- Add `prometheus` to the metrics pipeline `exporters`: Expose metrics in Prometheus format on port 8889
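Conceptually, the connector turns every span into one increment of a `calls` counter and one observation in a `duration` histogram. Both are keyed by service name, span name, span kind and status code, plus any configured dimensions. The simplified Python sketch below shows that aggregation. The `Span` and `Series` classes are hypothetical, the bucket bounds are illustrative assumptions, and the sample spans are made up. The real connector also handles exemplars, temporality and cache eviction, which are omitted here.

```python
from bisect import bisect_left
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Illustrative histogram bucket upper bounds in milliseconds (assumption).
BUCKETS_MS = [2, 4, 6, 8, 10, 50, 100, 200, 400, 800, 1000, 1400, 2000, 5000, 10000, 15000]
# Span attributes promoted to labels, mirroring the `dimensions` in the collector config.
DIMENSIONS = ["http.method", "http.status_code", "http.route"]

SeriesKey = Tuple[Tuple[str, str], ...]


@dataclass
class Span:
    """Hypothetical, minimal span representation."""
    service_name: str
    name: str
    kind: str
    status_code: str
    duration_ms: float
    attributes: Dict[str, str] = field(default_factory=dict)


@dataclass
class Series:
    """Aggregated values for one label combination."""
    calls: int = 0  # becomes the `calls` counter
    duration_sum_ms: float = 0.0  # histogram sum
    # Per-bucket (non-cumulative) counts; the last slot is the +Inf overflow bucket.
    bucket_counts: List[int] = field(default_factory=lambda: [0] * (len(BUCKETS_MS) + 1))


def series_key(span: Span) -> SeriesKey:
    """Default labels plus any configured dimensions present on the span."""
    labels = {
        "service.name": span.service_name,
        "span.name": span.name,
        "span.kind": span.kind,
        "status.code": span.status_code,
    }
    for dim in DIMENSIONS:
        if dim in span.attributes:
            labels[dim] = span.attributes[dim]
    return tuple(sorted(labels.items()))


def aggregate(spans: List[Span]) -> Dict[SeriesKey, Series]:
    """Fold spans into one counter + histogram series per label combination."""
    result: Dict[SeriesKey, Series] = defaultdict(Series)
    for span in spans:
        series = result[series_key(span)]
        series.calls += 1
        series.duration_sum_ms += span.duration_ms
        # bisect_left picks the first bound >= duration, matching Prometheus `le` semantics.
        series.bucket_counts[bisect_left(BUCKETS_MS, span.duration_ms)] += 1
    return dict(result)


# Made-up sample spans for illustration only.
spans = [
    Span("fastapi", "GET /", "SPAN_KIND_SERVER", "STATUS_CODE_UNSET", 12.0,
         {"http.method": "GET", "http.route": "/"}),
    Span("fastapi", "GET /", "SPAN_KIND_SERVER", "STATUS_CODE_UNSET", 48.0,
         {"http.method": "GET", "http.route": "/"}),
]
for key, series in aggregate(spans).items():
    print(dict(key), series.calls, series.duration_sum_ms)
```

Keying series by the full label set is also why high-cardinality attributes make poor dimensions: each distinct value creates a new series.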

The pipeline diagram and configuration file are as follows:

```yaml
# etc/otel-collector-config.yaml
receivers:
  otlp:
    protocols:
      grpc: # enable gRPC protocol, default port 4317
      http: # enable http protocol, default port 4318

exporters:
  otlp:
    endpoint: tempo:4317
    tls:
      insecure: true
  prometheus:
    endpoint: "0.0.0.0:8889" # expose metrics on port 8889

connectors:
  spanmetrics:
    dimensions:
      - name: http.method # extract http.method attribute from span to Prometheus label http_method
      - name: http.status_code # extract http.status_code attribute from span to Prometheus label http_status_code
      - name: http.route # extract http.route attribute from span to Prometheus label http_route

processors:
  batch: # Group spans into batches to reduce the number of outgoing requests

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [spanmetrics, otlp]
    metrics/spanmetrics:
      receivers: [spanmetrics]
      exporters: [prometheus]
```

Since opentelemetry-collector-contrib 0.85.0, the duration histogram metrics have been renamed from duration_count to duration_milliseconds_count or duration_seconds_count, depending on the unit of the metrics. Make sure you use opentelemetry-collector-contrib 0.85.0+ if you want to use the predefined dashboard in this project.
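The rename comes from the Prometheus exporter appending a suffix derived from the metric's unit, such as `ms` to `milliseconds` or `s` to `seconds`. The histogram series suffixes (`_bucket`, `_sum`, `_count`) and the counter suffix `_total` follow after it. The sketch below shows that rule for these cases only. The `UNIT_SUFFIXES` table is a small assumed subset, and the real OpenTelemetry-to-Prometheus translation also sanitizes characters and handles many more units.

```python
from typing import Dict, List

# Assumed subset of OTel unit -> Prometheus unit-suffix mappings.
UNIT_SUFFIXES: Dict[str, str] = {"ms": "milliseconds", "s": "seconds", "By": "bytes"}


def prometheus_base_name(name: str, unit: str) -> str:
    """Append the unit suffix unless the name already ends with it."""
    suffix = UNIT_SUFFIXES.get(unit, "")
    if suffix and not name.endswith("_" + suffix):
        return f"{name}_{suffix}"
    return name


def histogram_series(name: str, unit: str) -> List[str]:
    """Series names a histogram is exposed as."""
    base = prometheus_base_name(name, unit)
    return [f"{base}_bucket", f"{base}_sum", f"{base}_count"]


def counter_series(name: str, unit: str = "") -> str:
    """Series name for a monotonic counter."""
    base = prometheus_base_name(name, unit)
    return base if base.endswith("_total") else f"{base}_total"


print(histogram_series("duration", "ms"))
# ['duration_milliseconds_bucket', 'duration_milliseconds_sum', 'duration_milliseconds_count']
print(counter_series("calls"))
# calls_total
```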

Check more details about Connector in the document.

OpenTelemetry Collector only exposes the metrics on a Prometheus-format endpoint, so we use Prometheus to scrape and store the metrics from OpenTelemetry Collector.

```yaml
# docker-compose.yml
prometheus:
  image: prom/prometheus:v2.48.1
  ports:
    - "9090:9090"
  volumes:
    - ./etc/prometheus.yml:/workspace/prometheus.yml
  command:
    - --config.file=/workspace/prometheus.yml
```

Add OpenTelemetry Collector as a scrape target in the Prometheus config file:

```yaml
# etc/prometheus.yml
scrape_configs:
  - job_name: aggregated-trace-metrics
    static_configs:
      - targets: ['otel-collector:8889']
```

In this project, Tempo is used as a backend for receiving traces from OpenTelemetry Collector; it is optional for the main goal of converting traces into metrics. You can also use Jaeger as a backend.

```yaml
# docker-compose.yml
tempo:
  image: grafana/tempo:2.3.1
  command: [ "--target=all", "--storage.trace.backend=local", "--storage.trace.local.path=/var/tempo", "--auth.enabled=false" ]
  ports:
    - "14250:14250"
```

We use the Sparkline feature to visualize the metrics in the table panel. This feature is available in Grafana 9.5+ as an opt-in beta feature and needs to be enabled with an environment variable or in the configuration file grafana.ini:

```ini
[feature_toggles]
enable = timeSeriesTable
```

- Add Prometheus and Tempo as data sources with the config file etc/grafana/datasource.yml.

- Load the predefined dashboard with etc/dashboards.yaml and etc/dashboards/fastapi-observability.json.

```yaml
# grafana in docker-compose.yaml
grafana:
  image: grafana/grafana:10.3.1
  volumes:
    - ./etc/grafana/:/etc/grafana/provisioning/datasources # data sources
    - ./etc/dashboards.yaml:/etc/grafana/provisioning/dashboards/dashboards.yaml # dashboard setting
    - ./etc/dashboards:/etc/grafana/dashboards # dashboard json files directory
  environment:
    GF_AUTH_ANONYMOUS_ENABLED: "true"
    GF_AUTH_ANONYMOUS_ORG_ROLE: "Admin"
    GF_FEATURE_TOGGLES_ENABLE: "timeSeriesTable" # enable sparkline feature
```
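The dashboard panels are backed by PromQL queries over the span metrics that Prometheus scrapes. The sketch below runs such a query directly against the Prometheus HTTP API instant-query endpoint (`/api/v1/query`). It assumes the stack is running and Prometheus is published on `localhost:9090` as in docker-compose.yml. The two PromQL expressions and the metric and label names (`calls_total`, `duration_milliseconds_bucket`, `service_name`) are assumptions. They rest on the connector's default metric names without a namespace and are not copied from the predefined dashboard.

```python
import json
from typing import Any, Dict, List, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen

PROMETHEUS_URL = "http://localhost:9090"  # port published in docker-compose.yml

# Assumed metric names: spanmetrics connector without a namespace, via the Prometheus exporter.
REQUEST_RATE = "sum by (service_name) (rate(calls_total[1m]))"
P95_LATENCY_MS = (
    "histogram_quantile(0.95, "
    "sum by (le, service_name) (rate(duration_milliseconds_bucket[1m])))"
)


def query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(payload: Dict[str, Any]) -> List[Tuple[Dict[str, str], float]]:
    """Extract (labels, value) pairs from an instant-vector response body."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    return [(item["metric"], float(item["value"][1])) for item in payload["data"]["result"]]


def instant_query(promql: str, base_url: str = PROMETHEUS_URL) -> List[Tuple[Dict[str, str], float]]:
    """Run a PromQL instant query and return (labels, value) pairs."""
    with urlopen(query_url(base_url, promql), timeout=5) as response:
        return parse_vector(json.load(response))
```

With the services running and some traffic sent, `instant_query(REQUEST_RATE)` should return one entry per service, and `instant_query(P95_LATENCY_MS)` the approximate 95th-percentile latency in milliseconds.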