This repo contains a Terraform module and an Ansible role that can be imported into existing projects to set up a Teleport cluster and agents.

This repo requires Terraform v1.3.7 or later and Ansible v2.14.1 or later.
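The version requirements can be checked before running anything. The following is a minimal sketch, not part of this repo: `version_ge` and `check_tool` are hypothetical helper names, and the comparison assumes a `sort` that supports `-V` (version sort), as GNU coreutils does.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when version $1 is >= version $2.
# Assumes `sort -V` (version sort) is available, e.g. from GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Minimum versions stated in this README.
MIN_TERRAFORM="1.3.7"
MIN_ANSIBLE="2.14.1"

# Hypothetical helper: report whether an installed version meets the minimum.
# Arguments: tool name, installed version, minimum version.
check_tool() {
  local name="$1" installed="$2" minimum="$3"
  if version_ge "$installed" "$minimum"; then
    echo "$name $installed OK (>= $minimum)"
  else
    echo "$name $installed is too old (need >= $minimum)"
    return 1
  fi
}
```

For example, `check_tool terraform 1.5.0 "$MIN_TERRAFORM"` succeeds, while `check_tool ansible 2.9.6 "$MIN_ANSIBLE"` fails. The installed version strings are passed in by hand here; they can be read from `terraform version` and `ansible --version`.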

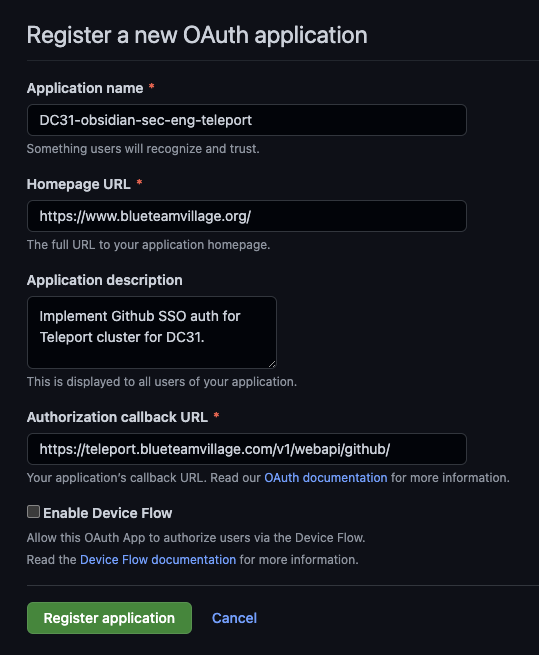

This section walks through creating a GitHub OAuth app for Teleport SSO. These steps must be performed by a GitHub org admin.

- Log into GitHub as an org admin

- Browse to your GitHub org's settings page

- Developer settings > OAuth apps

- Select "Register an application"

- Select "Generate a new client secret"

- Copy the "OAuth client ID" and "OAuth client secret"

Perform the following instructions in an existing Terraform project. Upon completion, this Terraform module will create all the necessary AWS resources for a high-availability Teleport cluster:

- Teleport all-in-one (auth, node, proxy) single-cluster EC2 instance

- DynamoDB tables (cluster state, cluster events, SSL lock)

- S3 bucket (session recording storage)

- Route53 A record

- Security Groups and IAM roles

| File | Description |
|---|---|
| dynamodb.tf | DynamoDB table provisioning. Tables used for Teleport state and events. |
| ec2.tf | EC2 instance provisioning. |
| iam.tf | IAM role provisioning. Permits the EC2 instance to talk to AWS resources (S3, DynamoDB, etc.). |
| outputs.tf | Exports module variables to be used by other Terraform resources. |
| route53.tf | Route53 zone creation. Requires a hosted zone to configure SSL. |
| s3.tf | S3 bucket provisioning. Bucket used for session recording storage. |
| secrets.tf | Creates an empty secret stub for the GitHub OAuth client secret. |
| variables.tf | Inbound variables for the Teleport module. |

`vim main.tf` and add:

    module "teleport" {
      source = "github.com/blueteamvillage/btv-teleport-single-cluster"

      #### General ####
      PROJECT_PREFIX  = "<project name>"
      primary_region  = "<region to deploy Teleport cluster to>"
      public_key_name = "<name of an SSH key to provision the EC2 instance>"

      #### Route53 ####
      route53_zone_id = "<Route 53 Zone ID for the Teleport FQDN>"
      route53_domain  = "<domain>"

      #### VPC ####
      vpc_id             = "<VPC ID to deploy Teleport to>"
      teleport_subnet_id = "<Subnet ID to deploy Teleport to>"

      #### Infra ####
      aws_account  = data.aws_caller_identity.current.account_id
      teleport_ami = var.ubuntu-ami
    }

- `terraform init`: Import this new module

- `terraform apply`

      module.teleport.aws_dynamodb_table.teleport_locks: Creating...
      module.teleport.aws_dynamodb_table.teleport: Creating...
      module.teleport.aws_dynamodb_table.teleport_events: Creating...
      module.teleport.aws_s3_bucket.teleport: Creating...
      module.teleport.aws_s3_bucket.teleport: Creation complete after 2s [id=defcon-2023-obsidian-teleport-kxl6y]
      module.teleport.aws_s3_bucket_acl.teleport: Creating...

- Log into AWS console

- Go to the Secrets Manager service

- Terraform created an empty secret with the following name schema: `"${var.PROJECT_PREFIX}-teleport-github-OAuth-secret"`

- Secret value > Retrieve secret value

- This will produce an error because no value has been set; this is expected

- Select "Set secret value"

- Set the secret value to the GitHub OAuth client secret

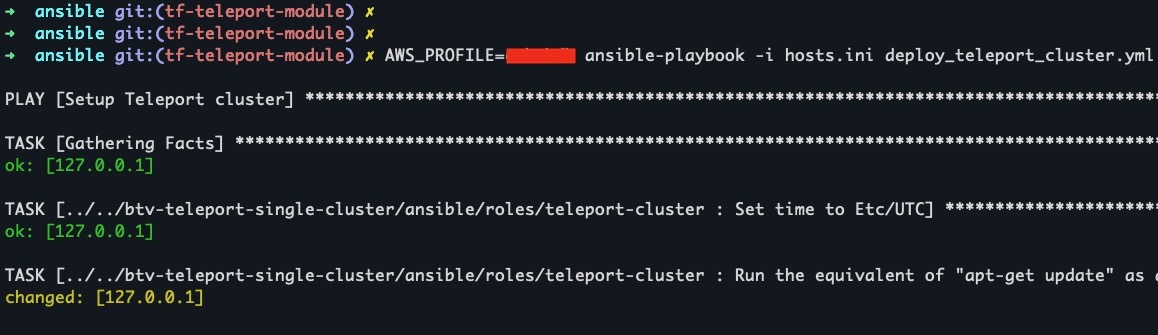
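The console steps above can also be done from a terminal. This is a sketch under assumptions, not something the repo provides: `teleport_secret_name` and `set_teleport_secret` are hypothetical helper names, the AWS CLI must already be configured with credentials and a region that can write to Secrets Manager, and the secret name follows the schema shown above.

```shell
#!/usr/bin/env bash
# Build the secret name from the Terraform PROJECT_PREFIX value,
# following the name schema "${var.PROJECT_PREFIX}-teleport-github-OAuth-secret".
teleport_secret_name() {
  printf '%s-teleport-github-OAuth-secret\n' "$1"
}

# Hypothetical wrapper: store the GitHub OAuth client secret in the
# empty secret that Terraform created. Not called automatically.
# Arguments: project prefix, GitHub OAuth client secret.
set_teleport_secret() {
  local project_prefix="$1" client_secret="$2"
  aws secretsmanager put-secret-value \
    --secret-id "$(teleport_secret_name "$project_prefix")" \
    --secret-string "$client_secret"
}
```

Calling `set_teleport_secret "<project name>" "<GitHub OAuth client secret>"` would set the value. Note that a secret passed on the command line can end up in shell history, so the console steps may be preferable on shared machines.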

Perform the following instructions in an existing Ansible project. Upon completion, this Ansible role will create a single-node Teleport "cluster" configured with GitHub SSO.

| File | Description |
|---|---|
| teleport-cluster/tasks/init_linux.yml | Ansible tasks to update Linux and set basic OS settings |
| teleport-cluster/tasks/setup_teleport.yml | Ansible tasks to install Teleport and configure it based on the templates in teleport-cluster/templates |
| teleport-cluster/templates/cap.yaml.j2 | Configure GitHub SSO as the default login mechanism |
| teleport-cluster/templates/github.yaml.j2 | Configure GitHub SSO for your org |
| teleport-cluster/templates/sec_infra_role.yaml.j2 | Define the resources admins have access to |
| teleport-cluster/templates/workshop_contributors.yaml.j2 | Define the resources workshop contributors have access to |
| teleport-cluster/var/main.yml | Variables for how to install and configure Teleport |

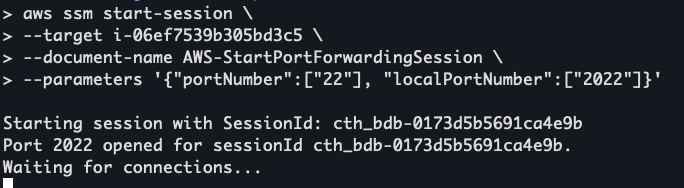

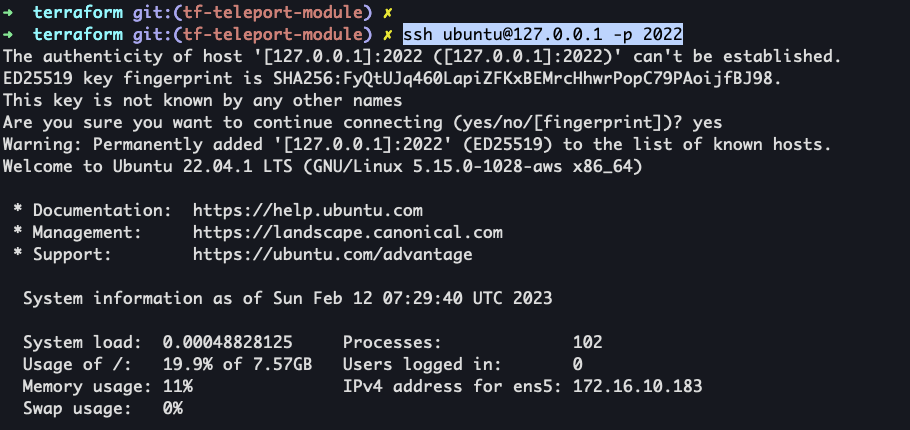

By default, Teleport does not expose SSH to the public internet. To provision the EC2 instance with Ansible, we can use SSM to create a tunnel for Ansible to connect through.

    aws ssm start-session --target <Teleport EC2 instance ID> --document-name AWS-StartPortForwardingSession --parameters '{"portNumber":["22"], "localPortNumber":["2022"]}'
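The port-forwarding command above can be wrapped so the ports are not hand-typed into JSON each time. The following is a sketch only: the function names are hypothetical, `start-session` needs the AWS Session Manager plugin installed locally, and `tunnel_is_up` assumes a bash build with `/dev/tcp` redirection enabled.

```shell
#!/usr/bin/env bash
# Build the --parameters JSON for the AWS-StartPortForwardingSession document.
# Arguments: remote port on the instance, local port to listen on.
ssm_port_forward_params() {
  local remote_port="$1" local_port="$2"
  printf '{"portNumber":["%s"],"localPortNumber":["%s"]}\n' "$remote_port" "$local_port"
}

# Hypothetical wrapper around the command shown above. Forwards the
# instance's SSH port (22) to a local port (2022 unless overridden).
start_teleport_tunnel() {
  local instance_id="$1" local_port="${2:-2022}"
  aws ssm start-session \
    --target "$instance_id" \
    --document-name AWS-StartPortForwardingSession \
    --parameters "$(ssm_port_forward_params 22 "$local_port")"
}

# Succeeds if something is listening on the local end of the tunnel.
# Uses bash's /dev/tcp redirection inside a subshell so the test
# connection is closed again immediately.
tunnel_is_up() {
  local local_port="${1:-2022}"
  (exec 3<>"/dev/tcp/127.0.0.1/${local_port}") 2>/dev/null
}
```

Run `start_teleport_tunnel <Teleport EC2 instance ID>` in one terminal and leave it open; in a second terminal, `tunnel_is_up && echo "tunnel ready"` confirms that port 2022 is accepting connections before Ansible is pointed at it.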

ansible-galaxy collection install amazon.awspip3 install -U boto3==1.26.69vim hosts.iniand set:

[teleport_cluster]

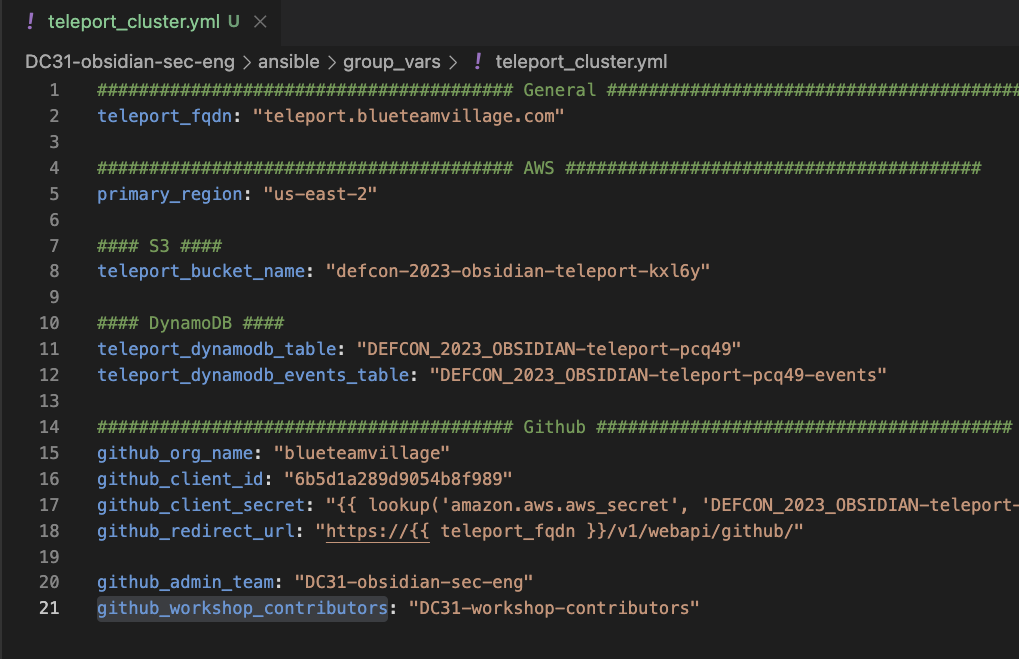

127.0.0.1 ansible_port=2022 ansible_user=ubuntugroup_vars/teleport_cluster.ymland set:- General

teleport_fqdn- Set the fully qualified domain for Teleportprimary_region- Set to the region where you want to host Teleport

- AWS

teleport_bucket_name- Set this to the name of the S3 bucket created by the Terraform for Teleportteleport_dynamodb_table- Set this to the name of the DynamoDB table created by the Terraform for Teleport- Name schmea:

"${var.PROJECT_PREFIX}-teleport-${random_string.suffix.result}" - AWS DynamoDB Table view

- Name schmea:

teleport_dynamodb_events_table- Set this to the name of the DynamoDB table created by the Terraform for Teleport- Name schmea:

"${var.PROJECT_PREFIX}-teleport-${random_string.suffix.result}-events" - AWS DynamoDB Table view

- Name schmea:

- Github

github_org_name- Name of the Github org for SSOgithub_client_id- The Github Oauth client ID - NOT a secretgithub_client_secret- Define the name of the AWS Secret that contains the Github Oauth client` secret.github_redirect_url- Leave this as the default value unless you are hosting Teleport at a different URL path.github_admin_team- Define the Github team that contains a list of users that will be admins for the Teleport clustergithub_workshop_contributors- Define the Github team that contains a list of users that will be accessing computing resources behind the Teleport cluster

- Save and exit

- General
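The two DynamoDB table names follow from the name schemas listed above, so they can be generated rather than typed. This is a small sketch: `teleport_dynamodb_vars` is a hypothetical helper, and the random suffix must be supplied by you (for example, read from the AWS console), since it is generated by Terraform.

```shell
#!/usr/bin/env bash
# Print the two DynamoDB entries for group_vars/teleport_cluster.yml,
# following the name schemas
#   "${var.PROJECT_PREFIX}-teleport-${random_string.suffix.result}"
#   "${var.PROJECT_PREFIX}-teleport-${random_string.suffix.result}-events"
# Arguments: project prefix, random suffix generated by Terraform.
teleport_dynamodb_vars() {
  local project_prefix="$1" suffix="$2"
  local table="${project_prefix}-teleport-${suffix}"
  printf 'teleport_dynamodb_table: %s\n' "$table"
  printf 'teleport_dynamodb_events_table: %s-events\n' "$table"
}
```

For instance, `teleport_dynamodb_vars defcon-2023-obsidian kxl6y` prints `teleport_dynamodb_table: defcon-2023-obsidian-teleport-kxl6y` followed by `teleport_dynamodb_events_table: defcon-2023-obsidian-teleport-kxl6y-events`. The prefix and suffix in this example are taken from the S3 bucket ID in the sample `terraform apply` output; whether your tables share the bucket's suffix is an assumption to verify against the actual table names in AWS.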

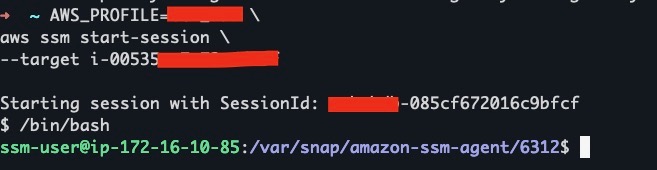

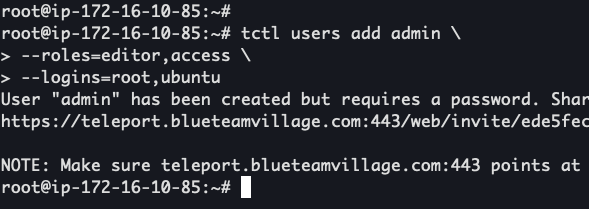

- Connect to Teleport using SSM: `aws ssm start-session --target <Teleport EC2 instance ID>`

- `/bin/bash`

- `tctl users add admin --roles=editor,access --logins=root,ubuntu`

- Enter the URL produced by the previous command into your browser

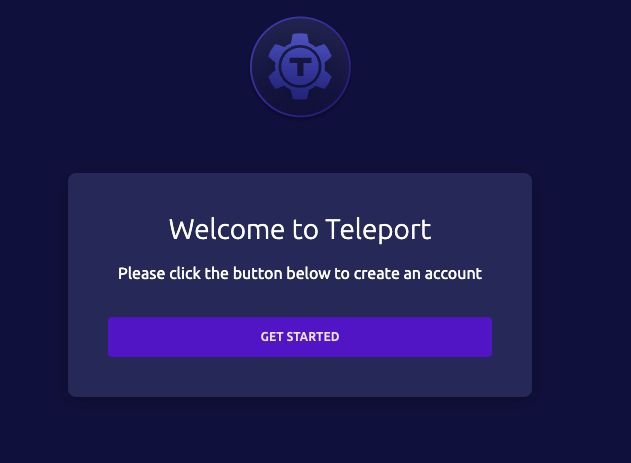

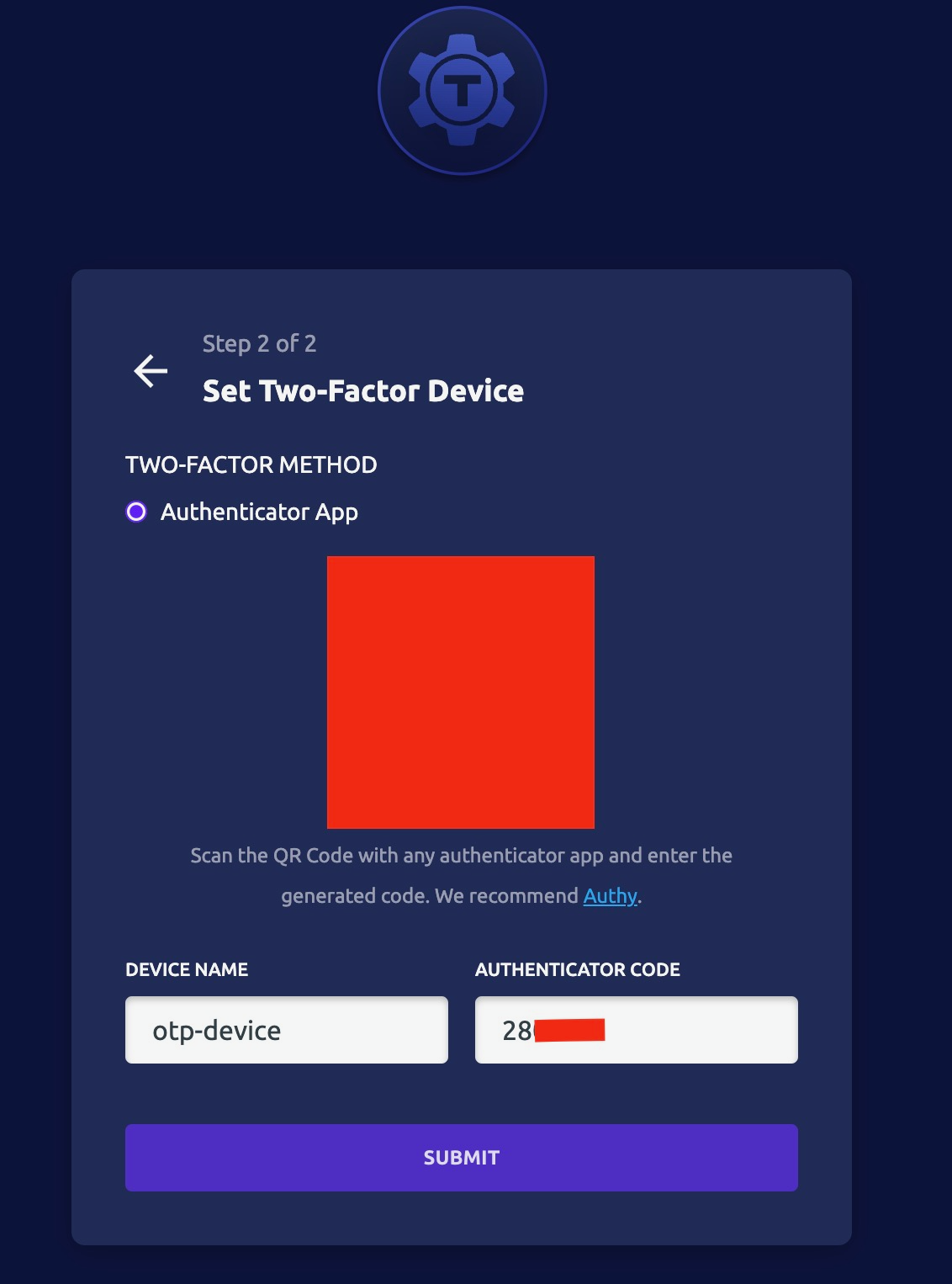

- Select "Get started"

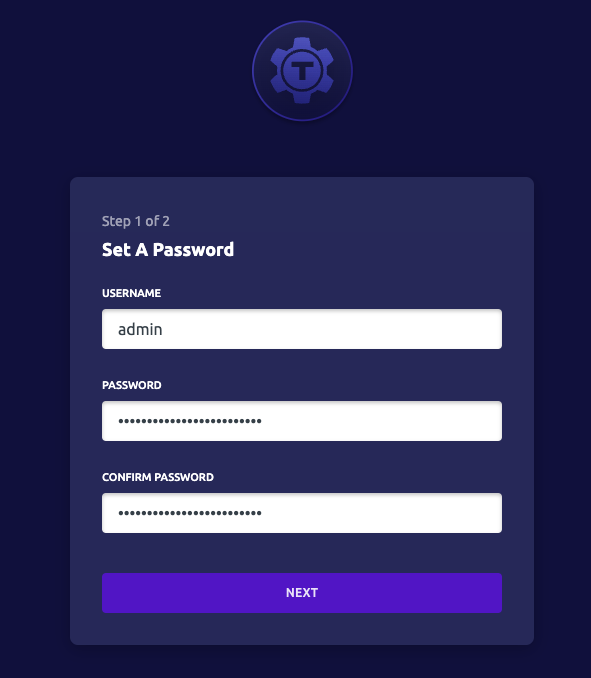

- Enter a password for the admin user

- Select "Next"

- Set up OTP

- Select "Go to dashboard"

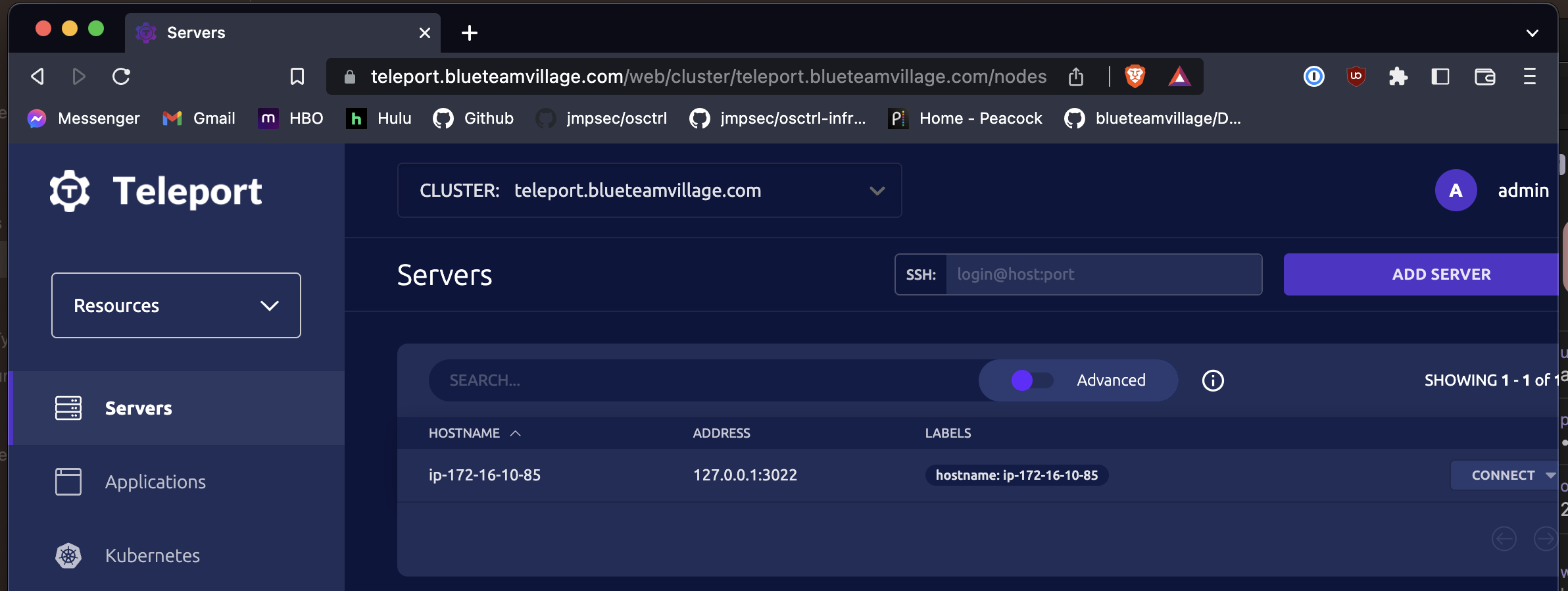

- Browse to the Teleport FQDN

  - Ex: `https://teleport.blueteamvillage.com/web/login`

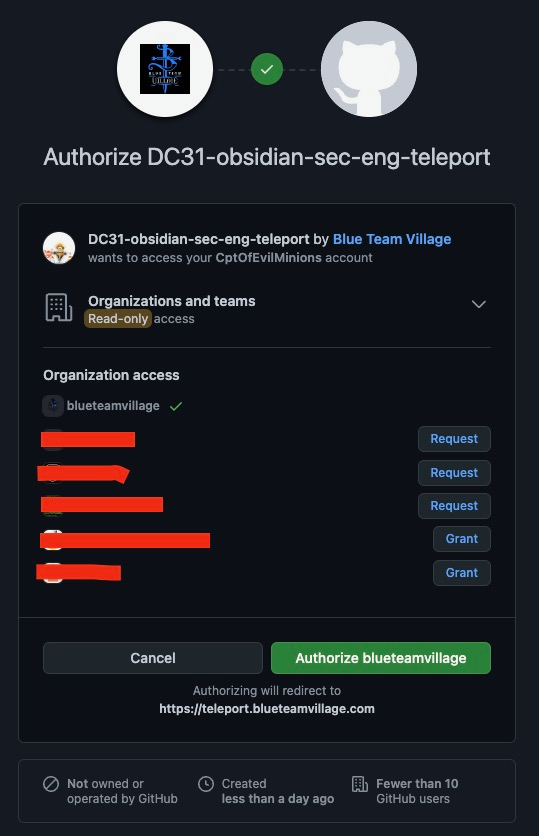

- Select "GitHub"

- Select "Authorize"

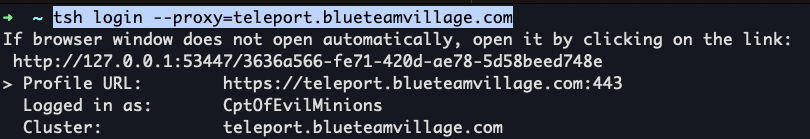

    ➜ tsh ls
    Node Name       Address        Labels
    --------------- -------------- ------------------------
    ip-172-16-10-93 127.0.0.1:3022 hostname=ip-172-16-10-93

    ➜ tsh ssh ubuntu@ip-172-16-10-93
    ubuntu@ip-172-16-10-93:~$ whoami
    ubuntu
    ubuntu@ip-172-16-10-93:~$

- Terraform v1.3.7

- Ansible v2.14.1

- Ubuntu Server 22.04

- Teleport v12

- awscli v2.2.31
