This directory contains the code for the MESS evaluation of OpenSeeD. See the commit history for our changes to the model.

Create a conda environment openseed and install the required packages. See mess/README.md for details.

bash mess/setup_env.sh

Prepare the datasets by following the instructions in mess/DATASETS.md. The openseed env can be used for the dataset preparation. If you evaluate multiple models with MESS, you can change the dataset_dir argument and the DETECTRON2_DATASETS environment variable to a common directory (see mess/DATASETS.md and mess/eval.sh, e.g., ../mess_datasets).
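The role of DETECTRON2_DATASETS can be illustrated with a short sketch. Detectron2 resolves its built-in dataset root from this variable and falls back to `datasets` when it is unset. The helper name `resolve_dataset_root` is hypothetical and only mirrors that lookup; it is not part of this repository.

```python
import os
from pathlib import Path


def resolve_dataset_root(default: str = "datasets") -> Path:
    """Return the dataset root, preferring DETECTRON2_DATASETS when it is set.

    Hypothetical helper for illustration; the fallback value mirrors the
    default directory name used in this README.
    """
    return Path(os.environ.get("DETECTRON2_DATASETS", default)).expanduser()


if __name__ == "__main__":
    # Point several models at one shared dataset directory.
    os.environ["DETECTRON2_DATASETS"] = "../mess_datasets"
    print(resolve_dataset_root())
```

Setting the variable once in the shell (or in mess/eval.sh) has the same effect for every process started from that shell.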

Download the OpenSeeD weights (see https://github.com/IDEA-Research/OpenSeeD)

mkdir weights

wget https://github.com/IDEA-Research/OpenSeeD/releases/download/openseed/model_state_dict_swint_51.2ap.pt -O weights/model_state_dict_swint_51.2ap.pt

To evaluate the OpenSeeD model on the MESS datasets, run

bash mess/eval.sh

# for evaluation in the background:

nohup bash mess/eval.sh > eval.log &

tail -f eval.log

To evaluate a single dataset, select the DATASET from mess/DATASETS.md, set the DETECTRON2_DATASETS path, and run

conda activate openseed

export DETECTRON2_DATASETS="datasets"

DATASET=<dataset_name>

# Tiny model

python eval_openseed.py evaluate --conf_files configs/openseed/openseed_swint_lang.yaml --config_overrides {\"WEIGHT\":\"weights/model_state_dict_swint_51.2ap.pt\", \"DATASETS.TEST\":[\"$DATASET\"], \"SAVE_DIR\":\"output/OpenSeeD/$DATASET\", \"MODEL.TEXT.CONTEXT_LENGTH\":18}

Note that the provided weights are trained with a context length of 18 instead of the 77 used in the config.
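Because the overrides are passed as a JSON-style string, escaping the quotes by hand is error-prone. The sketch below builds the same overrides with `json.dumps` and prints a shell-quoted command. The helper names `build_overrides` and `build_command` are hypothetical, the keys are copied from the command above, and the sketch assumes the script accepts the whole JSON string as a single argument.

```python
import json
import shlex
from typing import List


def build_overrides(dataset: str,
                    weight: str = "weights/model_state_dict_swint_51.2ap.pt") -> str:
    """Serialize the config overrides for one dataset as a JSON string."""
    overrides = {
        "WEIGHT": weight,
        "DATASETS.TEST": [dataset],
        "SAVE_DIR": f"output/OpenSeeD/{dataset}",
        # The provided weights were trained with a context length of 18.
        "MODEL.TEXT.CONTEXT_LENGTH": 18,
    }
    return json.dumps(overrides)


def build_command(dataset: str) -> List[str]:
    """Assemble the evaluation command as an argument list (no shell escaping needed)."""
    return [
        "python", "eval_openseed.py", "evaluate",
        "--conf_files", "configs/openseed/openseed_swint_lang.yaml",
        "--config_overrides", build_overrides(dataset),
    ]


if __name__ == "__main__":
    # shlex.join quotes each argument so the line can be pasted into a shell.
    print(shlex.join(build_command("<dataset_name>")))
```

Passing the list from `build_command` to `subprocess.run` would avoid shell quoting altogether.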

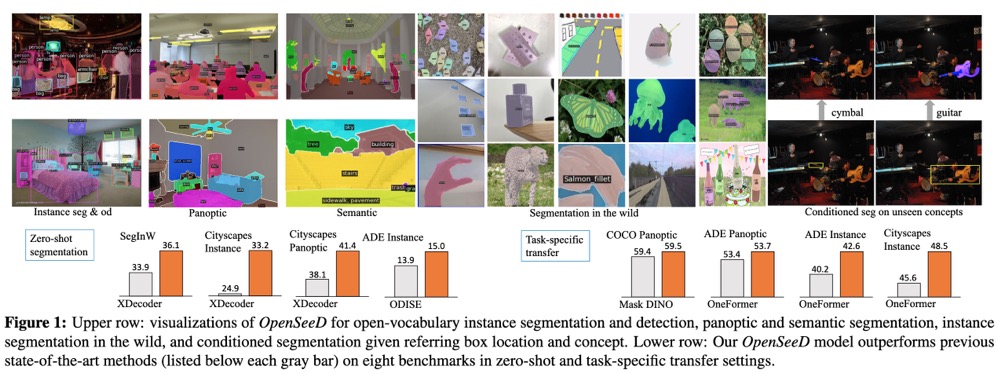

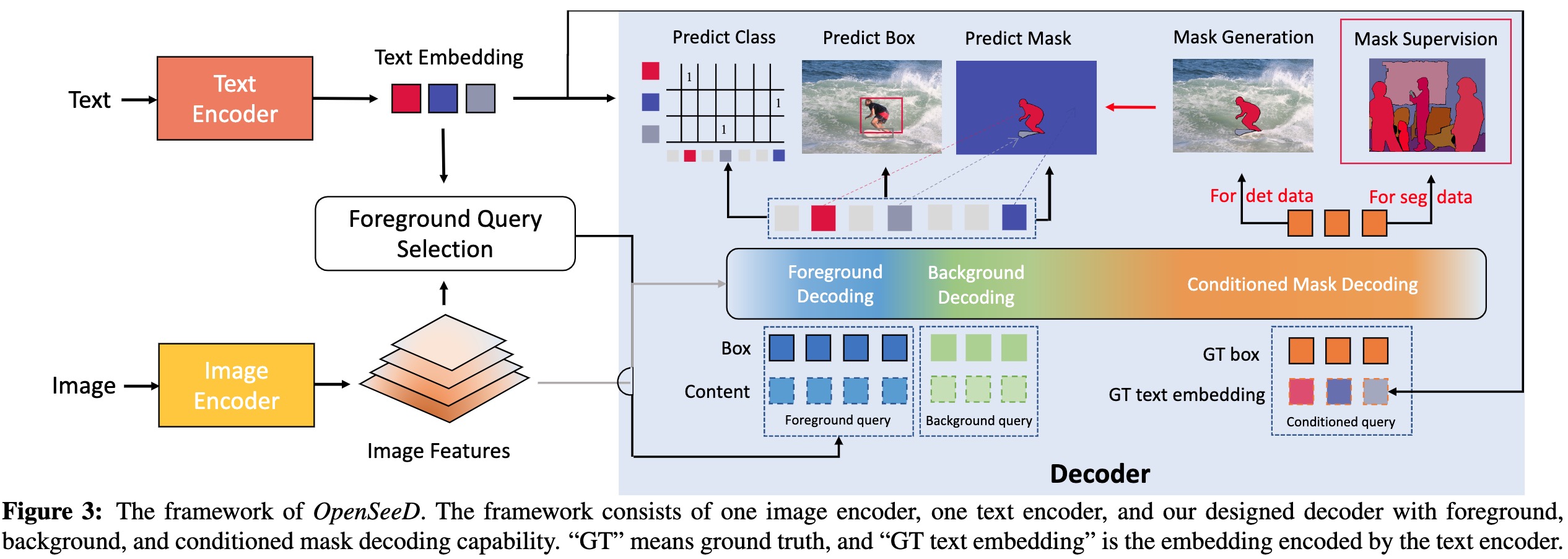

This is the official implementation of the paper "A Simple Framework for Open-Vocabulary Segmentation and Detection".

A more detailed demo video is available on YouTube.

👉 [New] demo code is available

- A Simple Framework for Open-Vocabulary Segmentation and Detection.

- Supports interactive segmentation with box input to generate masks.

pip3 install torch==1.13.1 torchvision==0.14.1 --extra-index-url https://download.pytorch.org/whl/cu113

python -m pip install 'git+https://github.com/MaureenZOU/detectron2-xyz.git'

pip install git+https://github.com/cocodataset/panopticapi.git

python -m pip install -r requirements.txt

sh install_cococapeval.sh

export DATASET=/pth/to/dataset

Download the pretrained checkpoint from here.

python demo/demo_panoseg.py evaluate --conf_files configs/openseed/openseed_swint_lang.yaml --image_path images/your_image.jpg --overrides WEIGHT /path/to/ckpt/model_state_dict_swint_51.2ap.pt

🔥 Remember to modify the vocabulary thing_classes and stuff_classes in demo_panoseg.py if you want to segment open-vocabulary objects.
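As a rough sketch of what such a vocabulary change involves, assume the demo script holds the two vocabularies as plain Python lists of class names. The class names below are made up, and `build_vocabulary` is a hypothetical helper, not a function from this repository. It gives thing classes the first contiguous ids and stuff classes the ids after them, which is one common convention for panoptic labels.

```python
from typing import Dict, List


def build_vocabulary(thing_classes: List[str],
                     stuff_classes: List[str]) -> Dict[str, int]:
    """Map each class name to a contiguous id: things first, then stuff."""
    overlap = set(thing_classes) & set(stuff_classes)
    if overlap:
        # A name in both lists would make the prediction ambiguous.
        raise ValueError(f"classes listed as both thing and stuff: {sorted(overlap)}")
    names = list(thing_classes) + list(stuff_classes)
    return {name: idx for idx, name in enumerate(names)}


if __name__ == "__main__":
    # Example vocabulary (assumed for illustration only).
    thing_classes = ["car", "person", "traffic light"]
    stuff_classes = ["building", "sky", "street"]
    print(build_vocabulary(thing_classes, stuff_classes))
```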

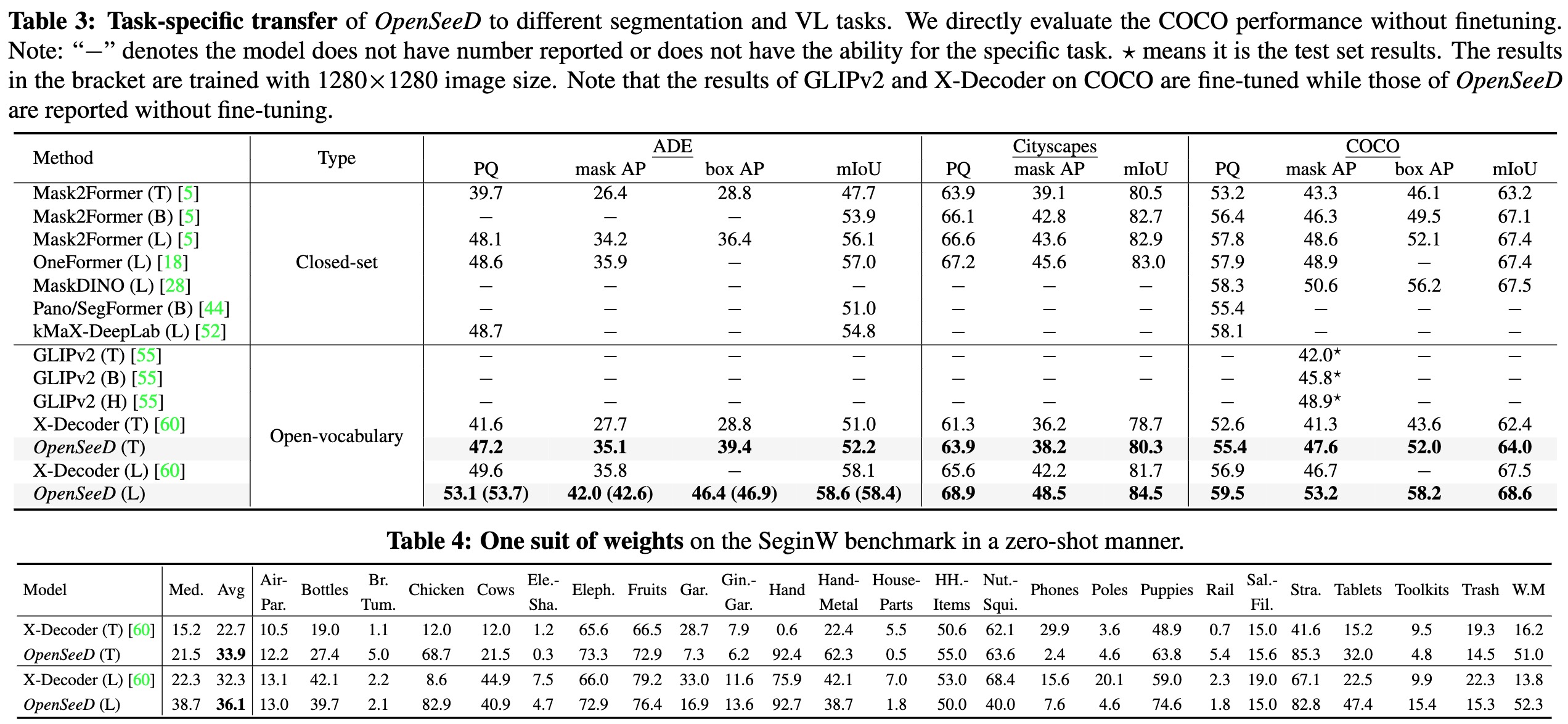

Evaluation on COCO

mpirun -n 1 python eval_openseed.py evaluate --conf_files configs/openseed/openseed_swint_lang.yaml --overrides WEIGHT /path/to/ckpt/model_state_dict_swint_51.2ap.pt COCO.TEST.BATCH_SIZE_TOTAL 2

You should get 55.4 PQ.
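The `--overrides` flag in the command above takes alternating keys and values, with dots separating nested config levels. A minimal sketch of how such pairs could map onto a nested dictionary follows. `apply_overrides` is a hypothetical helper for illustration and is not the parser used by `eval_openseed.py`; values are kept as strings here, whereas a real parser would likely convert types.

```python
from typing import Any, Dict, List


def apply_overrides(config: Dict[str, Any], pairs: List[str]) -> Dict[str, Any]:
    """Write alternating KEY VALUE pairs into a nested dict, splitting keys on dots."""
    if len(pairs) % 2 != 0:
        raise ValueError("overrides must come in KEY VALUE pairs")
    for key, value in zip(pairs[0::2], pairs[1::2]):
        *parents, leaf = key.split(".")
        node = config
        for part in parents:
            # Create intermediate levels on demand.
            node = node.setdefault(part, {})
        node[leaf] = value
    return config


if __name__ == "__main__":
    args = ["WEIGHT", "/path/to/ckpt/model_state_dict_swint_51.2ap.pt",
            "COCO.TEST.BATCH_SIZE_TOTAL", "2"]
    print(apply_overrides({}, args))
```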

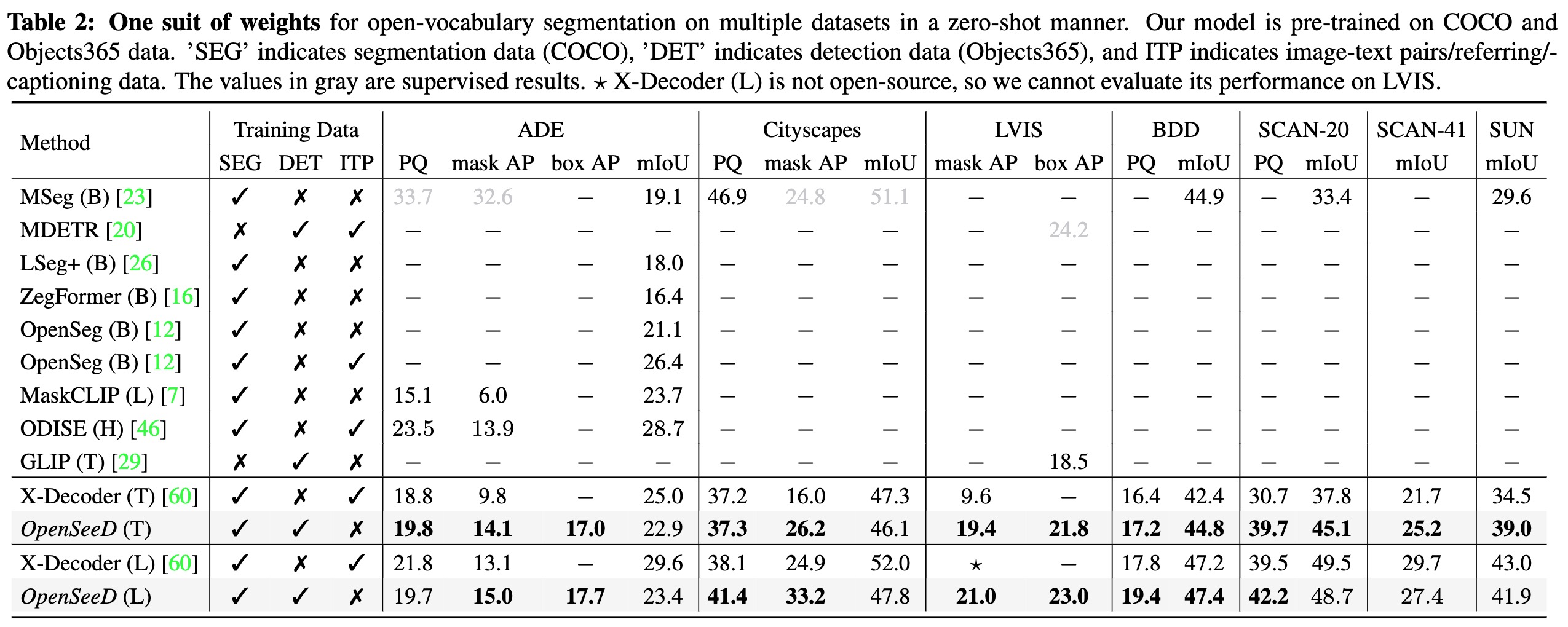

Results on open segmentation

Results on task transfer and segmentation in the wild

If you find our work helpful for your research, please consider citing the following BibTeX entry.

@article{zhang2023simple,

title={A Simple Framework for Open-Vocabulary Segmentation and Detection},

author={Zhang, Hao and Li, Feng and Zou, Xueyan and Liu, Shilong and Li, Chunyuan and Gao, Jianfeng and Yang, Jianwei and Zhang, Lei},

journal={arXiv preprint arXiv:2303.08131},

year={2023}

}