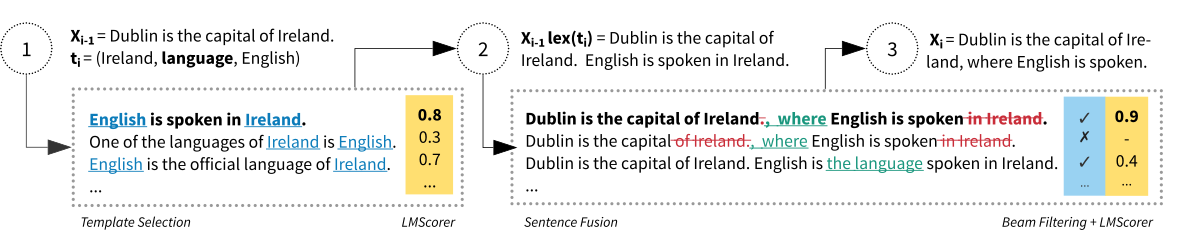

This repository contains the code for generating text from RDF triples by iteratively applying sentence fusion to templates.

A description of the method can be found in:

Zdeněk Kasner & Ondřej Dušek (2020): Data-to-Text Generation with Iterative Text Editing. In: Proceedings of the 13th International Conference on Natural Language Generation (INLG 2020).

- Install the requirements: `pip install -r requirements.txt`

- Download the datasets and models: `./download_datasets_and_models.sh`

- Run an experiment on the WebNLG dataset with default settings, including preprocessing the data, training the model, decoding the data and evaluating the results: `./run.sh webnlg`

Requirements:

- Python 3 & pip

- packages

  - Tensorflow 1.15* (GPU version recommended)

  - PyTorch 🤗 Transformers

  - see `requirements.txt` for the full list

All packages can be installed using `pip install -r requirements.txt`. Select `tensorflow-1.15` instead of `tensorflow-1.15-gpu` if you do not wish to use the GPU.

*The original implementation of LaserTagger based on BERT and Tensorflow 1.x was used in the experiments. Implementation of the PyTorch version of LaserTagger is currently in progress.

All datasets and models can be downloaded using the command `./download_datasets_and_models.sh`.

The script downloads the dependencies listed below (datasets, models and external repositories). It does not re-download dependencies that are already located in their respective paths.

- WebNLG dataset (v1.4)

- Cleaned E2E Challenge dataset

- DiscoFuse dataset (Wikipedia part)

- LaserTagger - fork of the original LaserTagger implementation featuring a few changes necessary for integration with the model

- E2E Metrics - a set of evaluation metrics used in the E2E Challenge

- BERT - original TensorFlow implementation from Google (utilized by LaserTagger)

- (+ LMScorer requires GPT-2; downloaded automatically by the `transformers` package)

The pipeline involves four steps:

- preprocessing the data-to-text datasets for the sentence fusion task

- training the sentence fusion model

- running the decoding algorithm

- evaluating the results

All steps can be run separately by following the instructions below, or all at once using the script `./run.sh <experiment>`, where `<experiment>` can be one of:

- `webnlg` - train and evaluate on the WebNLG dataset

- `e2e` - train and evaluate on the E2E dataset

- `df-webnlg` - train on DiscoFuse and evaluate on WebNLG (zero-shot domain adaptation)

- `df-e2e` - train on DiscoFuse and evaluate on E2E (zero-shot domain adaptation)
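As a compact summary of the four experiment names, the sketch below records which dataset each experiment trains on and which it evaluates on. The dictionary and the helper function are illustrative names only and are not part of the repository; `run.sh` itself may be organised quite differently.

```python
# Illustrative summary of the experiments accepted by ./run.sh.
# EXPERIMENTS and is_zero_shot() are hypothetical names, not repository code.
EXPERIMENTS = {
    "webnlg": {"train": "WebNLG", "eval": "WebNLG"},
    "e2e": {"train": "E2E", "eval": "E2E"},
    "df-webnlg": {"train": "DiscoFuse", "eval": "WebNLG"},
    "df-e2e": {"train": "DiscoFuse", "eval": "E2E"},
}


def is_zero_shot(experiment):
    """Return True if the model is evaluated on a dataset it was not trained on."""
    config = EXPERIMENTS[experiment]
    return config["train"] != config["eval"]


if __name__ == "__main__":
    for name in EXPERIMENTS:
        print(name, "zero-shot" if is_zero_shot(name) else "in-domain")
```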

Preprocessing involves parsing the original data-to-text datasets and extracting examples for training the sentence fusion model.

Example of using the preprocessing script:

    # preprocessing the WebNLG dataset in the full mode
    python3 preprocess.py \
        --dataset "WebNLG" \
        --input "datasets/webnlg/data/v1.4/en/" \
        --mode "full" \
        --splits "train" "test" "dev" \
        --lms_device "cpu"

Things you may want to consider:

- The mode for selecting the lexicalizations (`--mode`) can be set to `full`, `best_tgt` or `best`. The modes are described in the supplementary material of the paper.

  - The default mode is `full` and runs on CPU.

  - Modes `best_tgt` and `best` use the LMScorer and can use GPU (`--lms_device gpu`).

- The templates for the predicates are included in the repository. In order to re-generate simple templates for WebNLG and double templates for E2E, use the flag `--force_generate_templates`. However, note that double templates for E2E have been manually denoised (the generated version will not be identical to the one used in the experiments).

- Using a custom dataset based on RDF triples requires editing `datasets.py`: adding a custom class derived from `Dataset` and overriding relevant methods. The dataset is then selected with the parameter `--dataset` using the class name as an argument.
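To make the role of the predicate templates concrete, here is a minimal sketch of turning a single RDF triple into a sentence. The predicate names, the `<subject>`/`<object>` placeholder syntax and the template wording are assumptions made for illustration; the template files shipped with the repository may use a different format.

```python
# Hypothetical template store: predicate names, placeholder syntax and wording
# are assumptions for illustration, not the repository's actual template files.
TEMPLATES = {
    "birthPlace": "<subject> was born in <object>.",
    "country": "<subject> is located in <object>.",
}


def lexicalize(triple, templates=TEMPLATES):
    """Fill the template of the triple's predicate with its subject and object."""
    subject, predicate, obj = triple
    template = templates[predicate]
    return template.replace("<subject>", subject).replace("<object>", obj)


if __name__ == "__main__":
    print(lexicalize(("Alan Turing", "birthPlace", "London")))
```

Sentences produced this way are the starting material that the sentence fusion model is later asked to merge.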

Training generally follows the pipeline for finetuning the LaserTagger model. However, instead of using individual scripts for each step, the training pipeline is encapsulated in train.py.

Example of using the training script:

    python3 train.py \
        --dataset "WebNLG" \
        --mode "full" \
        --experiment "webnlg_full" \
        --vocab_size 100 \
        --num_train_steps 10000

Things you may want to consider:

- The size of the vocabulary determines the number of phrases used by LaserTagger (see the paper for details). The value 100 was used in the final experiments.

- The wrapper for LaserTagger is implemented in `model_tf.py`. The wrapper calls the methods from the LaserTagger repository (directory `lasertagger_tf`) similarly to the original implementation.

  - This is a temporary solution: we are working on implementing a custom PyTorch version of LaserTagger, which should be clearer and more flexible.

- For debugging, the number of training steps can be lowered e.g. to 100.

- Parameters `--train_only` and `--export_only` can be used to skip other pipeline phases.

- If you have the correct Tensorflow version (`tensorflow-1.15-gpu`) but a GPU is not used, check if CUDA libraries were linked correctly.
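For intuition about what the phrase vocabulary is used for, the following simplified sketch applies LaserTagger-style edit tags to a token sequence: each token is kept or deleted, and a tag may additionally insert a phrase before the token. It illustrates the tagging idea only and is not the code in `lasertagger_tf`; the function name and the tag representation are assumptions. In this picture, `--vocab_size` bounds how many distinct phrases may appear in the tags.

```python
def apply_tags(tokens, tags):
    """Apply one edit tag per token.

    Each tag is a pair (operation, phrase): the operation is "KEEP" or
    "DELETE", and the optional phrase is inserted before the token.
    """
    output = []
    for token, (operation, phrase) in zip(tokens, tags):
        if phrase:
            output.extend(phrase.split())
        if operation == "KEEP":
            output.append(token)
    return output


# Two template-like sentences to be fused into one.
tokens = "Turing was born in 1912 . Turing died in 1954 .".split()
tags = (
    [("KEEP", None)] * 5      # Turing was born in 1912
    + [("DELETE", "and")]     # replace the first full stop with "and"
    + [("DELETE", None)]      # drop the repeated subject
    + [("KEEP", None)] * 4    # died in 1954 .
)

if __name__ == "__main__":
    print(" ".join(apply_tags(tokens, tags)))
```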

Once the model is trained, the decoding algorithm is used to generate text from RDF triples. See the top figure and/or the paper for the details on the method.

Example of using the decoding script:

    python3 decode.py \
        --dataset "WebNLG" \
        --experiment "webnlg_full" \
        --dataset_dir "datasets/webnlg/data/v1.4/en/" \
        --split "test" \
        --lms_device "cpu" \
        --vocab_size 100

Things you may want to consider:

- Decoding will be faster if the LMScorer is allowed to run on GPU (`--lms_device gpu`). Note, however, that this may require a secondary GPU if the GPU is already used for LaserTagger.

- Output will be stored as `out/<experiment>_<vocab_size>_<split>.hyp`.

- Use the flag `--use_e2e_double_templates` for bootstrapping the decoding process from the templates for pairs of triples in the case of the E2E dataset. The templates for single triples (handcrafted for E2E) are used otherwise.

- Use the flag `--no_export` in order to suppress saving the output to the `out` directory.
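The iterative decoding loop can be pictured roughly as follows. This is a minimal sketch under stated assumptions, not the logic of `decode.py`: `fuse` stands in for the trained sentence fusion model, `score` stands in for the LMScorer, and both are toy placeholders here. The fallback to plain concatenation when no hypothesis is available is likewise an assumption of this sketch. The `output_path` helper only mirrors the file naming pattern stated above.

```python
def decode(templates, fuse, score):
    """Fuse lexicalized templates into the running text one at a time."""
    text = templates[0]
    for template in templates[1:]:
        concatenated = f"{text} {template}"
        hypotheses = fuse(concatenated)
        # Assumption: keep the plain concatenation if fusion yields nothing.
        text = max(hypotheses, key=score) if hypotheses else concatenated
    return text


def output_path(experiment, vocab_size, split):
    """Build the output file name following out/<experiment>_<vocab_size>_<split>.hyp."""
    return f"out/{experiment}_{vocab_size}_{split}.hyp"


def toy_fuse(text):
    """Toy stand-in for the fusion model: offer the input and one merged variant."""
    return [text, text.replace(". Alan Turing", " and", 1)]


def toy_score(hypothesis):
    """Toy stand-in for the language model scorer: prefer shorter text."""
    return -len(hypothesis)


if __name__ == "__main__":
    sentences = [
        "Alan Turing was born in London.",
        "Alan Turing was a mathematician.",
    ]
    print(decode(sentences, toy_fuse, toy_score))
    print(output_path("webnlg_full", 100, "test"))
```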

The decoded output is evaluated against multiple references using the e2e-metrics package.

Example of using the evaluation script:

    python3 evaluate.py \
        --ref_file "data/webnlg/ref/test.ref" \
        --hyp_file "out/webnlg_full_100_test.hyp" \
        --lowercase

Citation:

    @inproceedings{kasner-dusek-2020-data,
        title = "Data-to-Text Generation with Iterative Text Editing",
        author = "Kasner, Zden{\v{e}}k and
          Du{\v{s}}ek, Ond{\v{r}}ej",
        booktitle = "Proceedings of the 13th International Conference on Natural Language Generation",
        month = dec,
        year = "2020",
        address = "Dublin, Ireland",
        publisher = "Association for Computational Linguistics",
        url = "https://www.aclweb.org/anthology/2020.inlg-1.9",
        pages = "60--67"
    }