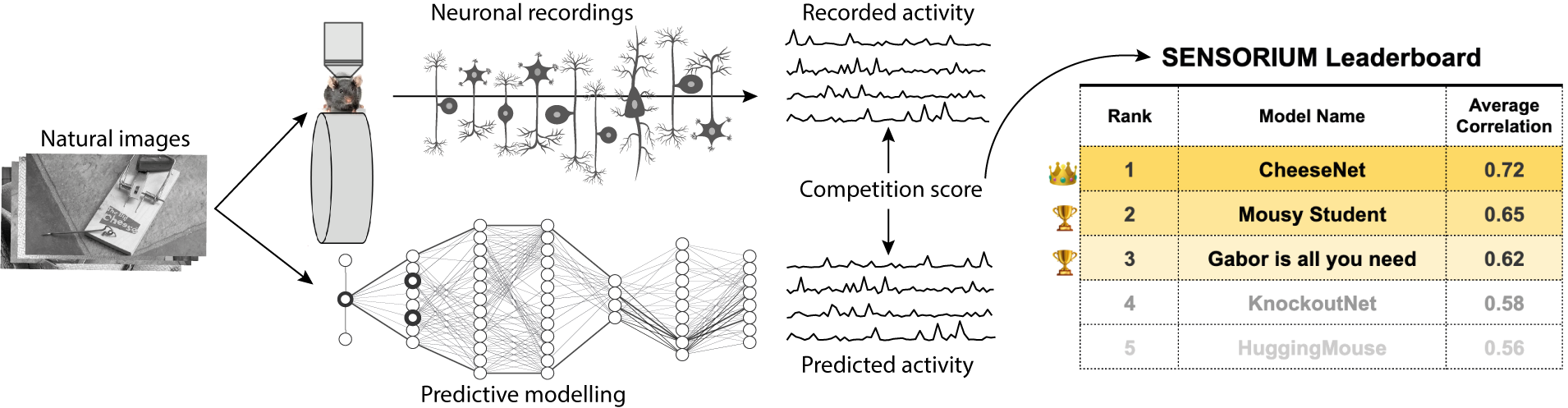

SENSORIUM is a competition on predicting large-scale activity in mouse primary visual cortex. We will provide large-scale datasets of neuronal activity recorded in the visual cortex of mice. Participants will train models on pairs of natural stimuli and recorded neuronal responses, and submit predicted responses to a set of test images whose recorded responses are withheld.
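To make the train-then-predict setup concrete, here is a toy sketch on synthetic data. It is not the SENSORIUM data, the official baseline, or the competition metric. It fits a closed-form ridge regression from flattened "images" to rectified "responses" on training pairs, predicts responses for held-out test images, and scores them with per-neuron Pearson correlation, which is assumed here only as a common choice for this kind of evaluation. All sizes and names are made up for illustration.

```python
import numpy as np

# Toy version of the task on synthetic data (NOT the SENSORIUM data, baseline,
# or official metric): fit images -> responses on training pairs, then
# predict responses for held-out test images.
rng = np.random.default_rng(0)
n_train, n_test, n_pixels, n_neurons = 500, 100, 64, 20

# Hypothetical "neurons": rectified linear functions of the pixels plus noise.
true_weights = rng.normal(size=(n_pixels, n_neurons))


def simulate(images):
    drive = images @ true_weights
    return np.maximum(drive, 0.0) + 0.1 * rng.normal(size=drive.shape)


train_images = rng.normal(size=(n_train, n_pixels))
train_responses = simulate(train_images)
test_images = rng.normal(size=(n_test, n_pixels))
test_responses = simulate(test_images)  # in the competition these are withheld


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def per_neuron_correlation(pred, target):
    """Pearson correlation between predicted and recorded responses, per neuron."""
    pred_c = pred - pred.mean(axis=0)
    target_c = target - target.mean(axis=0)
    num = (pred_c * target_c).sum(axis=0)
    den = np.sqrt((pred_c**2).sum(axis=0) * (target_c**2).sum(axis=0))
    return num / den


W = fit_ridge(train_images, train_responses)
predictions = test_images @ W  # shape (n_test, n_neurons)
corr = per_neuron_correlation(predictions, test_responses)
print(predictions.shape, corr.mean())
```

A linear readout is the simplest possible choice here; real entries typically use far richer models, as the baseline notebooks illustrate.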

Join our challenge and compete for the best neural predictive model!

For more information about the competition, visit our website.

- June 15, 2022: Start of the competition and data release. The data structure is similar to the data available at https://gin.g-node.org/cajal/Lurz2020.

- Oct 15, 2022: Submission deadline.

- Oct 22, 2022: Validation of all submitted scores completed. Entries ranked 1-3 in both competition tracks will be contacted to provide the code for their submission.

- Nov 5, 2022: Deadline for top-ranked entries to provide the code for their submission.

- Nov 15, 2022: Winners contacted to contribute to the competition summary write-up.

Below we provide a step-by-step guide for getting started with the competition.

- Install Docker and docker-compose.

- Install git.

- Clone the repository:

  ```bash
  git clone https://github.com/sinzlab/sensorium.git
  ```

You can download the data from https://gin.g-node.org/cajal/Lurz2020 and unzip it into sensorium/notebooks/data
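If you prefer to script the unzip step, a minimal Python sketch could look like the following. It assumes the archives were downloaded by hand into a local folder (the folder argument is a placeholder) and extracts every `.zip` file there into `sensorium/notebooks/data`; `unzip_all` is a hypothetical helper, not part of the repository.

```python
import zipfile
from pathlib import Path


def unzip_all(download_dir, data_dir="sensorium/notebooks/data"):
    """Extract every .zip archive found in download_dir into data_dir.

    download_dir is a placeholder for wherever you saved the downloaded
    archives; data_dir defaults to the path given in this README, relative
    to the directory you cloned the repository into.
    Returns the names of the archives that were extracted.
    """
    download_dir = Path(download_dir).expanduser()
    data_dir = Path(data_dir).expanduser()
    data_dir.mkdir(parents=True, exist_ok=True)
    extracted = []
    for archive in sorted(download_dir.glob("*.zip")):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(data_dir)
        extracted.append(archive.name)
    return extracted
```

For example, `unzip_all("~/Downloads/sensorium")`, run from the directory that contains the cloned `sensorium/` folder, would unpack every archive saved in that (hypothetical) download folder.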

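Then start JupyterLab from inside the repository:

```bash
cd sensorium/
docker-compose run -d -p 10101:8888 jupyterlab
```

The `-p 10101:8888` flag maps port 10101 on your machine to port 8888 inside the container, so JupyterLab should be reachable in your browser at http://localhost:10101.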
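Because the container starts detached (`-d`), JupyterLab may take a few seconds to come up. The sketch below uses only the Python standard library to derive the host URL from the `-p HOST:CONTAINER` mapping and poll it until the server answers. `host_url` and `wait_for_server` are hypothetical helpers, and treating any HTTP response (including an error status) as "up" is an assumption of this sketch.

```python
import time
import urllib.error
import urllib.request


def host_url(port_mapping="10101:8888", host="localhost"):
    """Build the browser URL from a docker '-p HOST:CONTAINER' port mapping."""
    # The host-side port is the second-to-last field, so this also
    # accepts the 'IP:HOST:CONTAINER' form.
    host_port = int(port_mapping.split(":")[-2])
    return f"http://{host}:{host_port}"


def wait_for_server(url, timeout=60.0, interval=2.0):
    """Poll url until the server sends any HTTP response or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # An HTTP error status still means the server is up.
            # HTTPError subclasses URLError (an OSError), so it must come first.
            return True
        except OSError:
            # Connection refused, reset, or timed out: not up yet, retry.
            time.sleep(interval)
    return False
```

Calling `wait_for_server(host_url())` after the `docker-compose run` command returns `True` once something is listening on port 10101, or `False` after 60 seconds.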

We provide four notebooks that illustrate the structure of our data, our baseline models, and how to make a submission to the competition:

- Notebook 1: Inspecting the Data

- Notebook 2: Re-train our Baseline Models

- Notebook 3: Use our API to make a submission to our competition

- Notebook 4: A full submission in 4 easy steps using our cloud-based DataLoaders (using toy data)
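Since the data structure is described as similar to the Lurz2020 dataset, a quick first look outside the notebooks might stack the per-trial arrays with NumPy. The layout assumed below (`<dataset>/data/<field>/<trial>.npy`, with integer trial names and fields such as `images` and `responses`) is an assumption for illustration; Notebook 1 is the authoritative guide to the actual files, and `load_trials` is a hypothetical helper.

```python
from pathlib import Path

import numpy as np


def load_trials(dataset_dir, fields=("images", "responses")):
    """Stack per-trial .npy files for each field into one array per field.

    Assumed (hypothetical) layout: dataset_dir/data/<field>/<trial>.npy,
    where <trial> is an integer such as 0, 1, ..., 10.
    """
    data_root = Path(dataset_dir) / "data"
    arrays = {}
    for field in fields:
        # Sort numerically so that 10.npy comes after 9.npy, not after 1.npy.
        files = sorted((data_root / field).glob("*.npy"), key=lambda p: int(p.stem))
        if not files:
            raise FileNotFoundError(f"no .npy files found in {data_root / field}")
        # Stack along a new first axis: arrays[field][i] is trial i.
        arrays[field] = np.stack([np.load(f) for f in files])
    return arrays
```

A call such as `load_trials("sensorium/notebooks/data/<dataset>")`, where `<dataset>` stands for one of the unzipped folders, would return a dict of arrays whose first axis indexes trials, so `trials["images"][i]` and `trials["responses"][i]` form one stimulus/response pair.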