This GitHub Action analyses Unit Test result files and publishes the results on GitHub. It supports the JUnit XML file format and runs on Linux, macOS and Windows.

You can add this action to your GitHub workflow for Linux (e.g. `runs-on: ubuntu-latest`) runners:

```yaml
- name: Publish Unit Test Results
  uses: EnricoMi/publish-unit-test-result-action@v1
  if: always()
  with:
    files: "test-results/**/*.xml"
```

Use this for macOS (e.g. `runs-on: macos-latest`) and Windows (e.g. `runs-on: windows-latest`) runners:

```yaml
- name: Publish Unit Test Results
  uses: EnricoMi/publish-unit-test-result-action/composite@v1
  if: always()
  with:
    files: "test-results/**/*.xml"
```

See the notes on running this action as a composite action if you run it on Windows or macOS.

Also see the notes on supporting pull requests from fork repositories and branches created by Dependabot.

The `if: always()` clause guarantees that this action always runs, even if earlier steps (e.g., the unit test step) in your workflow fail.

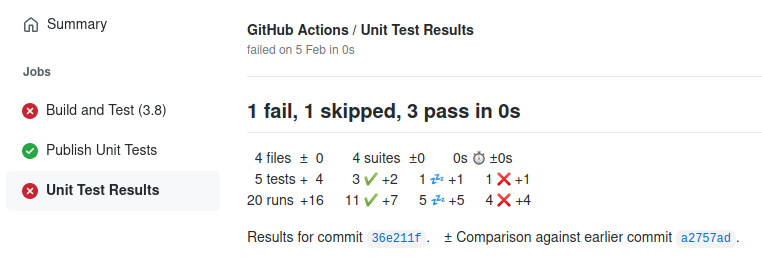

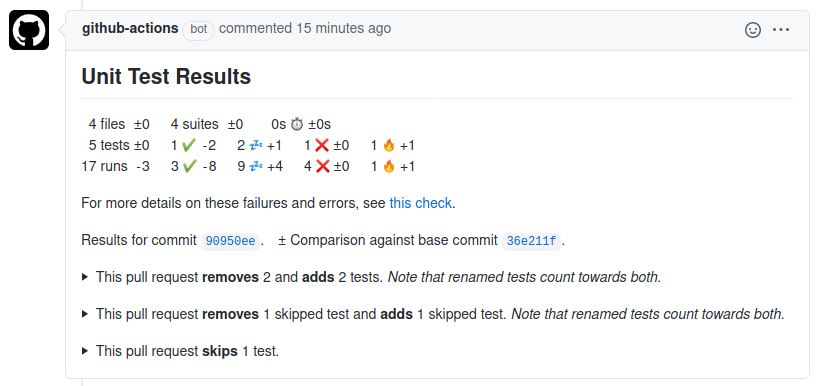

Unit test results are published in the GitHub Actions section of the respective commit:

Note: This action does not fail if unit tests failed. The action that executed the unit tests should fail on test failure. The published results, however, indicate failure if tests fail or errors occur. This behaviour is configurable via the `fail_on` option.

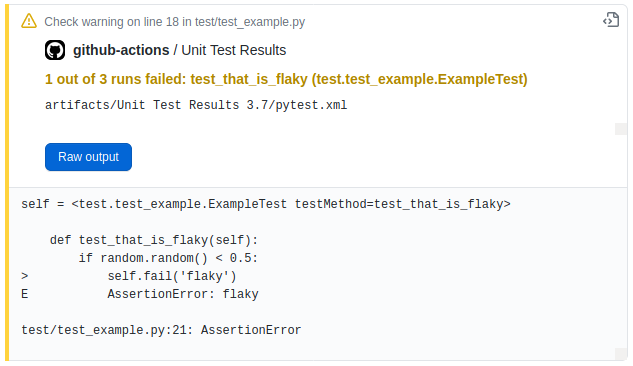

Each failing test will produce an annotation with failure details:

Note: Only the first failure of a test is shown. If you want to see all failures, set `report_individual_runs: "true"`.

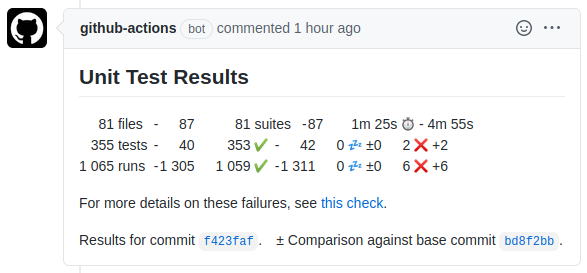
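To illustrate what `report_individual_runs` changes, here is a minimal Python sketch. It assumes each run is given as a `(test_id, failure_message)` pair in run order, with `None` for a passing run; the function `failures_to_annotate` and this input shape are hypothetical and not part of the action's interface.

```python
from typing import Dict, Iterable, List, Optional, Tuple

Run = Tuple[str, Optional[str]]  # (test id, failure message, or None if the run passed)


def failures_to_annotate(runs: Iterable[Run], report_individual_runs: bool = False) -> List[Tuple[str, str]]:
    """Select which failures get reported, as (test id, message) pairs."""
    failures: Dict[str, List[str]] = {}  # dicts keep insertion order, so run order is preserved
    for test_id, message in runs:
        if message is not None:
            failures.setdefault(test_id, []).append(message)
    if report_individual_runs:
        # every failing run of every test
        return [(test_id, msg) for test_id, msgs in failures.items() for msg in msgs]
    # default: only the first failure of each test
    return [(test_id, msgs[0]) for test_id, msgs in failures.items()]
```

With three runs of `test_events` where two fail, the default reports one failure for that test, while `report_individual_runs=True` reports both.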

A comment is posted on the pull request of that commit. In the presence of failures or errors, the comment links to the respective check page with failure details:

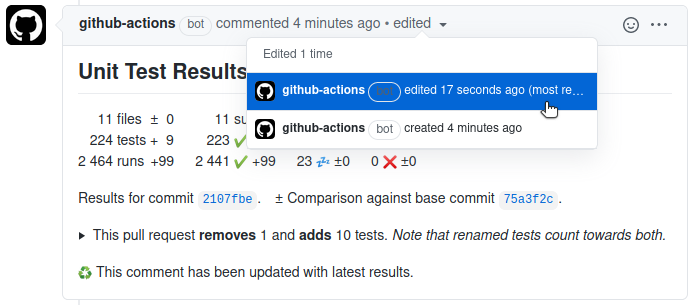

Subsequent runs of the action will update this comment. You can access earlier results in the comment edit history:

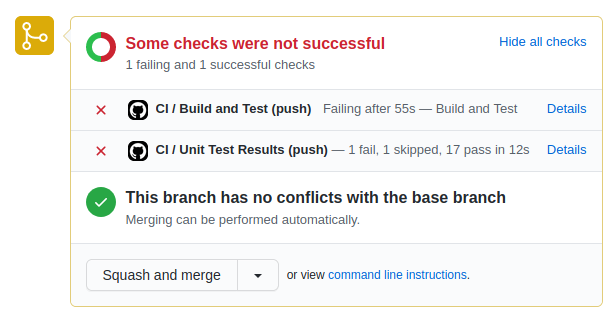

The checks section of the pull request also lists a short summary (here `1 fail, 1 skipped, 17 pass in 12s`), and a link to the GitHub Actions section (here `Details`):

The result distinguishes between tests and runs. In some situations, tests run multiple times, e.g. in different environments. Displaying the number of runs allows spotting unexpected changes in the number of runs as well.
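A minimal Python sketch of the tests-versus-runs distinction, assuming a test is identified by its `classname` and `name` attributes and that every `<testcase>` element in the JUnit XML files is one run of that test. This illustrates the idea under that assumption and is not the action's actual implementation; `count_tests_and_runs` is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from typing import Iterable, Tuple


def count_tests_and_runs(xml_documents: Iterable[str]) -> Tuple[int, int]:
    """Return (number of distinct tests, number of runs) over JUnit XML documents."""
    runs_per_test: Counter = Counter()
    for document in xml_documents:
        root = ET.fromstring(document)
        # iter() finds <testcase> elements below a <testsuites> or <testsuite> root alike
        for case in root.iter("testcase"):
            runs_per_test[(case.get("classname"), case.get("name"))] += 1
    return len(runs_per_test), sum(runs_per_test.values())
```

For example, two tests executed in three matrix environments count as 2 tests and 6 runs; if one environment silently stops running a test, the run count drops while the test count stays the same.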

The change statistics (e.g. `5 tests ±0`) might sometimes hide test removal, for instance when one test is removed and another is added. Removed tests are therefore highlighted in pull request comments, so that unintended test removal is easy to spot:

Note: This requires `check_run_annotations` to be set to `all tests, skipped tests`.

The symbols have the following meaning:

| Symbol | Meaning |
|---|---|
| :heavy_check_mark: | A successful test or run |
| :zzz: | A skipped test or run |
| :x: | A failed test or run |
| :fire: | An erroneous test or run |
| :stopwatch: | The duration of all tests or runs |

Note: For simplicity, "disabled" tests count towards "skipped" tests.

Minimal permissions required by this action in public GitHub repositories are:

```yaml
permissions:
  checks: write
  pull-requests: write
```

The following permissions are required in private GitHub repos:

```yaml
permissions:
  checks: write
  contents: read
  issues: read
  pull-requests: write
```

With `comment_mode: off`, the `pull-requests: write` permission is not needed.

Files can be selected via the `files` option, which is optional and defaults to `*.xml` in the current working directory. It supports wildcards like `*`, `**`, `?` and `[]`. The `**` wildcard matches all files and directories recursively: `./`, `./*/`, `./*/*/`, etc.

You can provide multiple file patterns, one pattern per line. Patterns starting with `!` exclude the matching files. There has to be at least one pattern that does not start with `!`:

```yaml
with:
  files: |
    *.xml
    !config.xml
```

See the complete list of options below.
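The include/exclude semantics of `files` can be sketched with Python's `glob` module. This sketch assumes the patterns behave like `glob.glob(..., recursive=True)`, where `**` matches zero or more directories; the action's own matcher may differ in edge cases, and `select_files` is a hypothetical helper.

```python
import glob
import os
from typing import List, Set


def select_files(patterns: List[str], root: str = ".") -> List[str]:
    """Resolve file patterns: plain patterns include matches, "!" patterns exclude them."""
    includes = [p for p in patterns if not p.startswith("!")]
    excludes = [p[1:] for p in patterns if p.startswith("!")]
    if not includes:
        raise ValueError("at least one pattern must not start with '!'")

    def expand(pattern: str) -> Set[str]:
        # recursive=True lets ** match zero or more directories: ./, ./*/, ./*/*/, ...
        return set(glob.glob(os.path.join(root, pattern), recursive=True))

    selected = set().union(*(expand(p) for p in includes))
    excluded = set().union(*(expand(p) for p in excludes))
    return sorted(os.path.relpath(path, root) for path in selected - excluded)
```

With `results.xml`, `config.xml` and `sub/deep.xml` present, `["*.xml", "!config.xml"]` selects only `results.xml`, while `["**/*.xml", "!config.xml"]` also picks up `sub/deep.xml`.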

| Option | Default Value | Description |
|---|---|---|
| `files` | `*.xml` | File patterns to select the test result XML files, e.g. `"test-results/**/*.xml"`. Use a multiline string for multiple patterns. Supports `*`, `**`, `?`, `[]`. Excludes files when starting with `!`. |
| `time_unit` | `seconds` | Time values in the XML files have this unit. Supports `seconds` and `milliseconds`. |
| `check_name` | `"Unit Test Results"` | An alternative name for the check result. |
| `comment_title` | same as `check_name` | An alternative name for the pull request comment. |
| `comment_mode` | `update last` | The action posts comments to a pull request that is associated with the commit. Set to `create new` to create a new comment on each commit, `update last` to create only one comment and update it later on, or `off` to not create pull request comments. |
| `hide_comments` | `"all but latest"` | Configures which earlier comments in a pull request are hidden by the action: `"orphaned commits"` hides comments for removed commits, `"all but latest"` hides all comments but the latest, `"off"` disables hiding. |
| `github_token` | `${{github.token}}` | An alternative GitHub token, other than the default provided by the GitHub Actions runner. |
| `github_retries` | `10` | Requests to the GitHub API are retried this number of times. The value must be a positive integer or zero. |
| `seconds_between_github_reads` | `0.25` | Sets the number of seconds the action waits between concurrent read requests to the GitHub API. |
| `seconds_between_github_writes` | `2.0` | Sets the number of seconds the action waits between concurrent write requests to the GitHub API. |
| `commit` | `${{env.GITHUB_SHA}}` | An alternative commit SHA to which test results are published. The `push` and `pull_request` events are handled, but for other workflow events `GITHUB_SHA` may refer to different kinds of commits. See the GitHub Workflow documentation for details. |
| `json_file` | no file | Results are written to this JSON file. |
| `fail_on` | `"test failures"` | Configures the state of the created test result check run. With `"test failures"` it fails if any test fails or test errors occur. It never fails when set to `"nothing"`, and fails only on errors when set to `"errors"`. |
| `pull_request_build` | `"merge"` | GitHub builds a merge commit, which combines the commit and the target branch. If unit tests ran on the actual pushed commit, then set this to `"commit"`. |
| `event_file` | `${{env.GITHUB_EVENT_PATH}}` | An alternative event file to use. Useful to replace a `workflow_run` event file with the actual source event file. |
| `event_name` | `${{env.GITHUB_EVENT_NAME}}` | An alternative event name to use. Useful to replace a `workflow_run` event name with the actual source event name: `${{ github.event.workflow_run.event }}`. |
| `test_changes_limit` | `10` | Limits the number of removed or skipped tests listed on pull request comments. This can be disabled with a value of `0`. |
| `report_individual_runs` | `false` | Individual runs of the same test may see different failures. Reports all individual failures when set to `true`, and only the first failure otherwise. |
| `deduplicate_classes_by_file_name` | `false` | De-duplicates classes with the same name by their file name when set to `true`, combines test results for those classes otherwise. |
| `ignore_runs` | `false` | Does not process test run information by ignoring `<testcase>` elements in the XML files, which is useful for very large XML files. This disables any check run annotations. |
| `compare_to_earlier_commit` | `true` | Test results are compared to results of earlier commits to show changes: `false` disables comparison, `true` compares across commits. |
| `check_run_annotations` | `all tests, skipped tests` | Adds additional information to the check run (comma-separated list): `all tests` lists all found tests, `skipped tests` lists all skipped tests, `none` adds no extra annotations at all. |
| `check_run_annotations_branch` | default branch | Adds check run annotations only on the given branches. If not given, this defaults to the default branch of your repository, e.g. `main` or `master`. A comma-separated list of branch names is allowed; an asterisk `"*"` matches all branches. Example: `main, master, branch_one` |
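The three `fail_on` modes in the table above amount to a small decision function. The following Python sketch applies it to a dictionary of counts whose field names (`tests_fail`, `tests_error`, `runs_fail`, `runs_error`) are borrowed from the action's JSON output. It assumes "errors" means test errors and "failures" means failed tests or runs; this is a simplification, as the real action may also take other error kinds (such as file parse errors) into account. `check_conclusion` is a hypothetical name.

```python
from typing import Mapping


def check_conclusion(stats: Mapping[str, int], fail_on: str = "test failures") -> str:
    """Map result counts to a check run conclusion for the given fail_on mode."""
    has_errors = stats.get("tests_error", 0) > 0 or stats.get("runs_error", 0) > 0
    has_failures = stats.get("tests_fail", 0) > 0 or stats.get("runs_fail", 0) > 0
    if fail_on == "nothing":
        return "success"  # never fails
    if fail_on == "errors":
        return "failure" if has_errors else "success"  # fails only on errors
    if fail_on == "test failures":
        return "failure" if has_errors or has_failures else "success"
    raise ValueError(f"unknown fail_on value: {fail_on!r}")
```

Note that this only affects the published check run: the workflow step itself does not fail, so a failing test step should still be what fails the job.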

Pull request comments highlight removal of tests or tests that the pull request moves into skip state. Those removed or skipped tests are added as a list, which is limited in length by `test_changes_limit`, which defaults to `10`. Listing these tests can be disabled entirely by setting this limit to `0`. This feature requires `check_run_annotations` to contain `all tests` in order to detect test addition and removal, and `skipped tests` to detect new skipped and un-skipped tests, as well as `check_run_annotations_branch` to contain your default branch.
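A minimal Python sketch of how removed and newly skipped tests could be derived and truncated by `test_changes_limit`. It assumes the names of all tests and of skipped tests from the earlier commit and the current commit are available as sets (presumably the information the `all tests` and `skipped tests` annotations provide); the function `listed_test_changes` and its parameters are hypothetical.

```python
from typing import List, Set, Tuple


def listed_test_changes(
    before_all: Set[str],
    after_all: Set[str],
    before_skipped: Set[str],
    after_skipped: Set[str],
    test_changes_limit: int = 10,
) -> Tuple[List[str], List[str]]:
    """Return (removed tests, newly skipped tests), each cut to test_changes_limit."""
    if test_changes_limit == 0:
        return [], []  # a limit of 0 disables the listing entirely
    removed = sorted(before_all - after_all)
    # tests that existed before and were not skipped, but are skipped now
    newly_skipped = sorted((after_skipped - before_skipped) & before_all)
    return removed[:test_changes_limit], newly_skipped[:test_changes_limit]
```

A brand-new test that is skipped from the start is not listed as "moved into skip state" here, because it did not exist in the earlier commit.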

The gathered test information is accessible as JSON. The `json` output of the action can be accessed through the expression `steps.<id>.outputs.json`.

```yaml
- name: Publish Unit Test Results
  uses: EnricoMi/publish-unit-test-result-action@v1
  id: test-results
  if: always()
  with:
    files: "test-results/**/*.xml"

- name: Conclusion
  run: echo "Conclusion is ${{ fromJSON( steps.test-results.outputs.json ).conclusion }}"
```

Here is an example JSON:

```json
{
  "title": "4 parse errors, 4 errors, 23 fail, 18 skipped, 227 pass in 39m 12s",
  "summary": " 24 files ±0 4 errors 21 suites ±0 39m 12s [:stopwatch:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"duration of all tests\") ±0s\n272 tests ±0 227 [:heavy_check_mark:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"passed tests\") ±0 18 [:zzz:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"skipped / disabled tests\") ±0 23 [:x:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"failed tests\") ±0 4 [:fire:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"test errors\") ±0 \n437 runs ±0 354 [:heavy_check_mark:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"passed tests\") ±0 53 [:zzz:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"skipped / disabled tests\") ±0 25 [:x:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"failed tests\") ±0 5 [:fire:](https://github.com/EnricoMi/publish-unit-test-result-action/blob/v1.20/README.md#the-symbols \"test errors\") ±0 \n\nResults for commit 11c02e56. ± Comparison against earlier commit d8ce4b6c.\n",
  "conclusion": "success",
  "stats": {
    "files": 24,
    "errors": 4,
    "suites": 21,
    "duration": 2352,
    "tests": 272,
    "tests_succ": 227,
    "tests_skip": 18,
    "tests_fail": 23,
    "tests_error": 4,
    "runs": 437,
    "runs_succ": 354,
    "runs_skip": 53,
    "runs_fail": 25,
    "runs_error": 5,
    "commit": "11c02e561e0eb51ee90f1c744c0ca7f306f1f5f9"
  },
  "stats_with_delta": {
    "files": {
      "number": 24,
      "delta": 0
    },
    …,
    "commit": "11c02e561e0eb51ee90f1c744c0ca7f306f1f5f9",
    "reference_type": "earlier",
    "reference_commit": "d8ce4b6c62ebfafe1890c55bf7ea30058ebf77f2"
  },
  "annotations": 31
}
```

The optional `json_file` option configures a file to which extended JSON information is written.

Compared to the output above, `errors` and `annotations` contain more information than just the number of errors and annotations, respectively:

```json
{
  …,
  "stats": {
    …,
    "errors": [
      {
        "file": "test-files/empty.xml",
        "message": "File is empty.",
        "line": null,
        "column": null
      }
    ],
    …
  },
  …,
  "annotations": [
    {
      "path": "test/test.py",
      "start_line": 819,
      "end_line": 819,
      "annotation_level": "warning",
      "message": "test-files/junit.fail.xml",
      "title": "1 out of 3 runs failed: test_events (test.Tests)",
      "raw_details": "self = <test.Tests testMethod=test_events>\n\n def test_events(self):\n > self.do_test_events(3)\n\n test.py:821:\n _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _\n test.py:836: in do_test_events\n self.do_test_rsh(command, 143, events=events)\n test.py:852: in do_test_rsh\n self.assertEqual(expected_result, res)\n E AssertionError: 143 != 0\n "
    }
  ]
}
```

In a scenario where your unit tests run multiple times in different environments (e.g. a strategy matrix), the action should run only once over all test results. For this, put the action into a separate job that depends on all your test environments. Those jobs need to upload the test results as artifacts, which are then all downloaded by your publish job.

```yaml
name: CI

on: [push]

jobs:
  build-and-test:
    name: Build and Test (Python ${{ matrix.python-version }})
    runs-on: ubuntu-latest

    strategy:
      fail-fast: false
      matrix:
        python-version: [3.6, 3.7, 3.8]

    steps:
      - name: Checkout
        uses: actions/checkout@v2

      - name: Setup Python ${{ matrix.python-version }}
        uses: actions/setup-python@v2
        with:
          python-version: ${{ matrix.python-version }}

      - name: PyTest
        run: python -m pytest test --junit-xml pytest.xml

      - name: Upload Unit Test Results
        if: always()
        uses: actions/upload-artifact@v2
        with:
          name: Unit Test Results (Python ${{ matrix.python-version }})
          path: pytest.xml

  publish-test-results:
    name: "Publish Unit Tests Results"
    needs: build-and-test
    runs-on: ubuntu-latest
    if: always()

    steps:
      - name: Download Artifacts
        uses: actions/download-artifact@v2
        with:
          path: artifacts

      - name: Publish Unit Test Results
        uses: EnricoMi/publish-unit-test-result-action@v1
        with:
          files: "artifacts/**/*.xml"
```

Getting unit test results of pull requests created by Dependabot or by contributors from fork repositories requires some additional setup. Without this, the action will fail with the "Resource not accessible by integration" error for those situations.

In this setup, your CI workflow does not need to publish unit test results anymore as they are always published from a separate workflow.

- Your CI workflow has to upload the GitHub event file and unit test result files.
- Set up an additional workflow on `workflow_run` events, which starts on completion of the CI workflow, downloads the event file and the unit test result files, and runs this action on them. This workflow publishes the unit test results for pull requests from fork repositories and Dependabot, as well as all "ordinary" runs of your CI workflow.

Add the following job to your CI workflow to upload the event file as an artifact:

```yaml
event_file:
  name: "Event File"
  runs-on: ubuntu-latest
  steps:
    - name: Upload
      uses: actions/upload-artifact@v2
      with:
        name: Event File
        path: ${{ github.event_path }}
```

Add the following action step to your CI workflow to upload unit test results as artifacts. Adjust the value of `path` to fit your setup:

```yaml
- name: Upload Test Results
  if: always()
  uses: actions/upload-artifact@v2
  with:
    name: Unit Test Results
    path: |
      test-results/*.xml
```

If you run tests in a strategy matrix, make the artifact name unique for each job, e.g.:

```yaml
with:
  name: Unit Test Results (${{ matrix.python-version }})
  path: …
```

Add the following workflow that publishes unit test results. It downloads and extracts all artifacts into `artifacts/ARTIFACT_NAME/`, where `ARTIFACT_NAME` will be `Unit Test Results` when set up as above, or `Unit Test Results (…)` when run in a strategy matrix. It then runs the action on files matching `artifacts/**/*.xml`. Change the `files` pattern to match the path of your unit test artifacts if it does not work for you. The publish action uses the event file of the CI workflow. Also adjust the value of `workflows` (here `"CI"`) to fit your setup:

```yaml
name: Unit Test Results

on:
  workflow_run:
    workflows: ["CI"]
    types:
      - completed

jobs:
  unit-test-results:
    name: Unit Test Results
    runs-on: ubuntu-latest
    if: github.event.workflow_run.conclusion != 'skipped'

    steps:
      - name: Download and Extract Artifacts
        env:
          GITHUB_TOKEN: ${{secrets.GITHUB_TOKEN}}
        run: |
          mkdir -p artifacts && cd artifacts

          artifacts_url=${{ github.event.workflow_run.artifacts_url }}

          gh api "$artifacts_url" -q '.artifacts[] | [.name, .archive_download_url] | @tsv' | while read -r artifact
          do
            IFS=$'\t' read -r name url <<< "$artifact"
            gh api "$url" > "$name.zip"
            unzip -d "$name" "$name.zip"
          done

      - name: Publish Unit Test Results
        uses: EnricoMi/publish-unit-test-result-action@v1
        with:
          commit: ${{ github.event.workflow_run.head_sha }}
          event_file: artifacts/Event File/event.json
          event_name: ${{ github.event.workflow_run.event }}
          files: "artifacts/**/*.xml"
```

Note: Running this action on `pull_request_target` events is dangerous if combined with code checkout and code execution.
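The download step in the workflow above can be mirrored in Python to see where files end up: each artifact listed in the workflow run's artifacts API response is unzipped into `artifacts/<artifact name>/`, which is why the event file is found at `artifacts/Event File/event.json`. In this sketch, `extract_artifacts` is a hypothetical helper, the `fetch` callable is a hypothetical stand-in for `gh api "$url"`, and only the `name` and `archive_download_url` fields of the response are used, as in the shell loop.

```python
import io
import json
import pathlib
import zipfile
from typing import Callable, List


def extract_artifacts(listing_json: str, fetch: Callable[[str], bytes], dest: str = "artifacts") -> List[pathlib.Path]:
    """Unzip every listed artifact into dest/<artifact name>/ and return those directories."""
    extracted = []
    for artifact in json.loads(listing_json)["artifacts"]:
        target = pathlib.Path(dest, artifact["name"])  # e.g. artifacts/Event File
        target.mkdir(parents=True, exist_ok=True)
        zip_bytes = fetch(artifact["archive_download_url"])  # the shell loop uses gh api here
        with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
            archive.extractall(target)
        extracted.append(target)
    return extracted
```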

Running this action as a composite action allows running it on various operating systems, as it does not require Docker. The composite action, however, requires a Python3 environment to be set up on the action runner. All GitHub-hosted runners (Ubuntu, Windows Server and macOS) provide a suitable Python3 environment out-of-the-box.

Self-hosted runners may require setting up a Python environment first:

```yaml
- name: Setup Python
  uses: actions/setup-python@v2
  with:
    python-version: 3.8
```

Note that the composite action modifies this Python environment by installing dependency packages. If this conflicts with actions that later run Python in the same workflow (which is a rare case), it is recommended to run this action as the last step in your workflow, or to run it in an isolated workflow. Running it in an isolated workflow is similar to the workflow shown above for use with a matrix strategy.

To run the composite action in an isolated workflow, your CI workflow should upload all test result XML files:

```yaml
build-and-test:
  name: "Build and Test"
  runs-on: macos-latest

  steps:
    - …
    - name: Upload Unit Test Results
      if: always()
      uses: actions/upload-artifact@v2
      with:
        name: Unit Test Results
        path: "test-results/**/*.xml"
```

Your dedicated publish job then downloads these files and runs the action there:

```yaml
publish-test-results:
  name: "Publish Unit Tests Results"
  needs: build-and-test
  runs-on: windows-latest
  # the build-and-test job might be skipped, we don't need to run this job then
  if: success() || failure()

  steps:
    - name: Download Artifacts
      uses: actions/download-artifact@v2
      with:
        path: artifacts

    - name: Publish Unit Test Results
      uses: EnricoMi/publish-unit-test-result-action/composite@v1
      with:
        files: "artifacts/**/*.xml"
```

In some environments, the composite action startup can be slow due to the installation of Python dependencies. This is usually the case for Windows runners (in this example 35 seconds startup time):

```
Mon, 03 May 2021 11:57:00 GMT ⏵ Run ./composite
Mon, 03 May 2021 11:57:00 GMT ⏵ Check for Python3
Mon, 03 May 2021 11:57:00 GMT ⏵ Install Python dependencies
Mon, 03 May 2021 11:57:35 GMT ⏵ Publish Unit Test Results
```

This can be improved by caching the PIP cache directory. If you see the following warning in the composite action output, then installing the `wheel` package can also be beneficial (see further down):

```
Using legacy 'setup.py install' for …, since package 'wheel' is not installed.
```

You can cache files downloaded and built by PIP using the `actions/cache` action, and conditionally install the `wheel` package as follows:

```yaml
- name: Cache PIP Packages
  uses: actions/cache@v2
  id: cache
  with:
    path: ~\AppData\Local\pip\Cache
    key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt', 'composite/action.yml') }}
    restore-keys: |
      ${{ runner.os }}-pip-

# only needed if you see this warning in action log output otherwise:
# Using legacy 'setup.py install' for …, since package 'wheel' is not installed.
- name: Install package wheel
  # only needed on cache miss
  if: steps.cache.outputs.cache-hit != 'true'
  run: python3 -m pip install wheel

- name: Publish Unit Test Results
  uses: EnricoMi/publish-unit-test-result-action/composite@v1
  …
```

Use the correct `path:`, depending on your action runner's OS:

- macOS: `~/Library/Caches/pip`
- Windows: `~\AppData\Local\pip\Cache`
- Ubuntu: `~/.cache/pip`

With a cache populated by an earlier run, the startup time improves (in this example down to 11 seconds):

```
Mon, 03 May 2021 16:00:00 GMT ⏵ Run ./composite
Mon, 03 May 2021 16:00:00 GMT ⏵ Check for Python3
Mon, 03 May 2021 16:00:00 GMT ⏵ Install Python dependencies
Mon, 03 May 2021 16:00:11 GMT ⏵ Publish Unit Test Results
```
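The startup times quoted for these two logs are the difference between the first timestamp and the timestamp of the `Publish Unit Test Results` line. A small Python sketch that computes this from log lines in the format shown, assuming each line has the shape `<timestamp> ⏵ <step name>`; `startup_seconds` is a hypothetical helper, not part of the action.

```python
from datetime import datetime
from typing import List

TIMESTAMP_FORMAT = "%a, %d %b %Y %H:%M:%S GMT"  # e.g. "Mon, 03 May 2021 11:57:00 GMT"


def startup_seconds(log_lines: List[str]) -> float:
    """Seconds between the first log line and the "Publish Unit Test Results" line."""
    def timestamp(line: str) -> datetime:
        # split off the step name after the "⏵" separator
        return datetime.strptime(line.split(" ⏵ ", 1)[0], TIMESTAMP_FORMAT)

    publish = next(line for line in log_lines if line.endswith("⏵ Publish Unit Test Results"))
    return (timestamp(publish) - timestamp(log_lines[0])).total_seconds()
```

Applied to the two logs above, this yields 35 seconds without a populated cache and 11 seconds with one.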