This repository is deprecated. See the trickster library and the paper "Evading classifiers in discrete domains with provable optimality guarantees" for a more mature evolution of this approach to generating adversarial examples.

This is a proof of concept aiming at producing "imperceptible" adversarial examples for text classifiers.

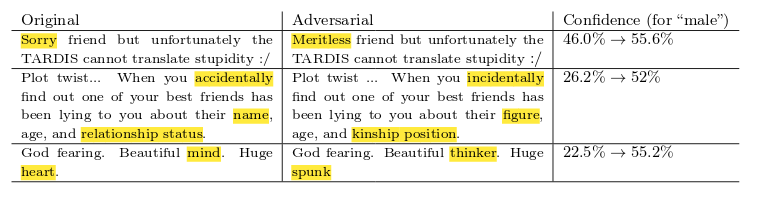

For instance, this are some adversarial examples produced by this code for a classifier of a tweet author's gender based on the tweet's text:

You need Python 3 and any system dependencies required by:

- Keras

- NLTK

- SpaCy

Install the Python requirements:

pip install -r requirements.txt

- SpaCy English language model:

python -m spacy download en

- NLTK datasets (a prompt will appear upon running paraphrase.py)

To train using the default parameters, simply run:

python run_training.py

By default, the script looks for the CSV data set at ./data/twitter_gender_data.csv and saves the model weights to ./data/model.dat.

The model should attain about 66% accuracy on the validation data set for gender recognition.

This model uses the Kaggle Twitter User Gender Classification data.

To run the adversarial crafting script:

python run_demo.py

The success rate for crafting adversarial examples should be about 17%.

By default, the script writes the crafted examples to ./data/adversarial_texts.csv.
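One plausible reading of the success-rate figure above is the fraction of input texts for which at least one candidate rewrite changes the classifier's prediction. The sketch below assumes that definition, which this README does not state explicitly. `success_rate`, `toy_classifier`, and `toy_candidates` are hypothetical names used for illustration only; they are not the repository's Keras model or its paraphrasing function.

```python
def success_rate(texts, classify, candidates):
    """Fraction of texts for which some candidate rewrite flips the label.

    Assumed definition, for illustration only.
    """
    if not texts:
        return 0.0
    successes = 0
    for text in texts:
        original_label = classify(text)
        # An attempt counts as a success if any candidate changes the prediction.
        if any(classify(c) != original_label for c in candidates(text)):
            successes += 1
    return successes / len(texts)


def toy_classifier(text):
    # Hypothetical rule-based stand-in for a trained model:
    # label 1 if the text contains the word "awesome", else 0.
    return int("awesome" in text.split())


def toy_candidates(text):
    # Hypothetical stand-in for a paraphraser: swap one word for a synonym.
    if "awesome" not in text.split():
        return []
    return [text.replace("awesome", "amazing")]


if __name__ == "__main__":
    sample = ["awesome game", "boring game", "nice day", "so awesome"]
    print(success_rate(sample, toy_classifier, toy_candidates))
```

With a real model, `classify` would wrap the trained classifier's prediction and `candidates` would wrap the paraphrase generator.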

The paraphrasing module (paraphrase.py) is fairly reusable, though not especially useful in practice. It provides a function that "paraphrases" a text by replacing some words with their WordNet synonyms, ranking the candidate synonyms by GloVe similarity between each synonym and the original context window. It relies on SpaCy and NLTK.
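The ranking idea described above can be sketched in a few lines. This is a minimal illustration, not the code in this repository: a small hand-written synonym table stands in for WordNet, three-dimensional toy vectors stand in for GloVe, and the context window is assumed to be represented by the mean of its word vectors. All names here (`TOY_SYNONYMS`, `TOY_VECTORS`, `rank_synonyms`, `paraphrase`) are hypothetical.

```python
import math

# Hypothetical stand-in for WordNet synonym lookups.
TOY_SYNONYMS = {
    "happy": ["felicitous", "glad"],
}

# Hypothetical stand-in for GloVe word vectors.
TOY_VECTORS = {
    "i": (0.1, 0.1, 0.1),
    "am": (0.1, 0.2, 0.1),
    "happy": (0.9, 0.1, 0.0),
    "glad": (0.8, 0.2, 0.0),
    "felicitous": (0.2, 0.1, 0.9),
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_vector(words):
    """Average the vectors of the known words; None if no word is known."""
    vectors = [TOY_VECTORS[w] for w in words if w in TOY_VECTORS]
    if not vectors:
        return None
    return tuple(sum(dim) / len(vectors) for dim in zip(*vectors))


def rank_synonyms(tokens, index, window=2):
    """Sort synonyms of tokens[index] by similarity to its context window."""
    context = tokens[max(0, index - window): index + window + 1]
    context_vec = mean_vector(context)
    candidates = [s for s in TOY_SYNONYMS.get(tokens[index], []) if s in TOY_VECTORS]
    if context_vec is None:
        return candidates
    return sorted(candidates,
                  key=lambda s: cosine(TOY_VECTORS[s], context_vec),
                  reverse=True)


def paraphrase(text, window=2):
    """Replace each word that has synonyms with its best-ranked synonym."""
    tokens = text.lower().split()
    result = list(tokens)
    for i in range(len(tokens)):
        ranked = rank_synonyms(tokens, i, window)
        if ranked:
            result[i] = ranked[0]
    return " ".join(result)


if __name__ == "__main__":
    print(paraphrase("I am happy"))
```

Sorting by similarity to the surrounding window favors synonyms that fit the local context, which is what keeps the substitution close to "imperceptible". A real implementation would also need proper tokenization and part-of-speech handling, which this sketch omits.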

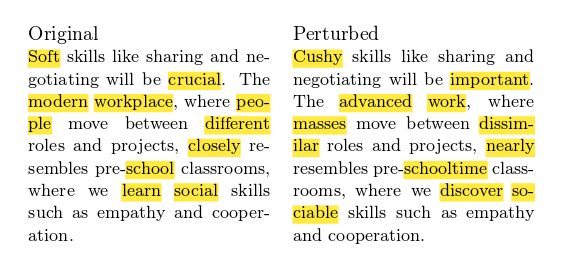

Example of paraphrase:

Please use the Zenodo link to cite textfool. Note that this work is neither published nor peer-reviewed. textfool has no relationship to "Deep Text Classification Can be Fooled." by B. Liang, H. Li, M. Su, P. Bian, X. Li, and W. Shi.