This repository contains the code to perform online Few shot Learning with a webcam on FPGA using the Tensil framework on the PYNQ-Z1. It also contains the code to evaluate the performance of the network on the dataset cifar-10. Models and algorithms are based on the EASY paper.

There are two steps in the demonstration :

- first you need to take a few shots of the classes you want to recognize

- then you can start the inference

Simple as that !

Button 0: take a shot with current class

Button 1: switch to the next class

Button 2: start inference

Button 3: reset the demo

- PYNQ-Z1

- Webcam USB

- HDMI screen and cable

- Ethernet cable

- SD card (at least 8GB)

- Micro-USB cable

- Download the PYNQ-Z1 v2.7 image and flash it on the SD card and get started.

- Install tensil tcu on the PYNQ: Start by cloning the Tensil GitHub repository to your work station and then copy

drivers/tcu_pynqto/home/xilinx/tcu_pynqonto your board.git clone git@github.com:tensil-ai/tensil.git scp -r tensil/drivers/tcu_pynq xilinx@192.168.2.99:

- Copy the hardware (bitstream & metadata) and the tensil model files on the PYNQ. Demo files are available on this link.

- Clone or copy this repository on the PYNQ.

-

Connect the PYNQ to the screen and the webcam

-

Launch the demo as sudo with environment variables set:

sudo -E python3 main.py --button-keyboard button --hdmi-display tensil --path_tmodel /home/xilinx/resnet12_32_32_32_onnx_custom_perf.tmodel --path_bit /home/xilinx/design.bit

-

The demo should be running now on the HDMI screen. You can use the buttons on the PYNQ to interact with the demo as described above.

It is also possible to run the demo on your computer using pytorch. Example weights are also available on this link under the name cifar.pt1. In order to run the demo using pytorch:

python3 main.py pytorch --device-pytorch cpu --path-pytorch-weight cifar.pt1Other backbones examples and weights are available on the EASY repository: https://github.com/ybendou/easy.

The inputs are the following: {1-4} to register shots for classes {0-3}, i to start inference, r to reset the demo.

A repository is available to train a model with pytorch: https://github.com/antoine-lavrard/brain-train/tree/few_shot_demo . It is possible to train a model from scratch.

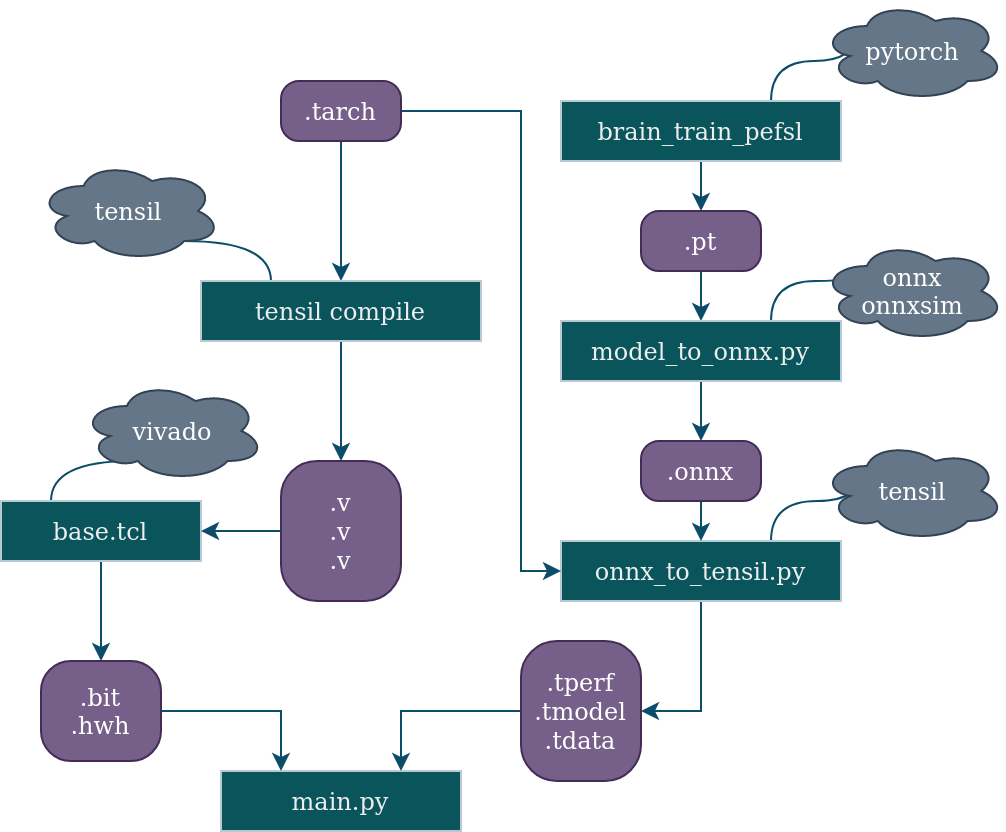

The script used to convert the model to onnx is model_to_onnx.py. Examples to launch the script are in the file. In order to be exported with this script, the networks must be implemented in the demo. Look at backbone_loader/backbone_pytorch/model.py for a list of supported models. Thus, for the aforementioned models:

python3 model_to_onnx.py --input-resolution 32 --save-name "small-strides-resnet9" --model-type "brain_resnet9_tiny_strided" --model-specification "weights/miniimagenet_resnet9s_32x32.pt" --weight-description "weight retrained on miniimagenet using image size 32"Weights available on this link.

Once you generated the onnx file for your model, you can generate the tensil model using the script onnx_to_tensil.py.

usage: onnx_to_tensil.py [-h] [--onnx-path ONNX_PATH] [--arch-path ARCH_PATH]

[--output-dir OUTPUT_DIR] [--onnx-output ONNX_OUTPUT]

options:

-h, --help show this help message and exit

--onnx-path ONNX_PATH

path to onnx file

--arch-path ARCH_PATH

path to tensil architecture file .tarch

--output-dir OUTPUT_DIR

path to script output directory

--onnx-output ONNX_OUTPUT

name of the onnx output layer

The project has been created with Vivado 2020.2. The project is available in the folder vivado_project. The project is configured for the PYNQ-Z1 board. The board files can be found in the directory vivado_project/pynq-z1. Add them to Vivado by copying it to the directory Vivado/2020.2/data/boards/board_files.

Use the tcl script vivado_project/base.tcl to generate the project.

vivado -mode batch -source base.tclYou can then open the project with Vivado and generate the bitstream.

If you want to modify the tensil architecture, replace the verilog files that are in the vivado_project/base/sources_1/imports/tensil folder with the ones that you generate with tensil. Then, regenerate the project with the tcl script.

The output files that you will need after the bitstream generation are the following:

vivado_project/base/base.runs/impl_1/base_wrapper.bitbase.gen/base/sources_1/bd/base/hw_handoff/base.hwh

Rename them to respectively to design.bit and design.hwh and copy them to the PYNQ.

It is possible to test the performance of the model on cifar 10 on PYNQ (only 32x32 networks). Download CIFAR 10 test set (cifar-10-batches-py/test_batch) and run the following command :

sudo -E python3 few_shot_evaluation.py --dataset-path /home/xilinx/cifar-10-batches-py/test_batch tensil --path_tmodel /home/xilinx/resnet12_32_32_32_onnx_custom_perf.tmodel --path_bit /home/xilinx/design.bit

You may have problem setting up the hdmi output/ input from camera, and want to verify that the demonstration is running well. In order to do that, setup a video simulation of the demo :

- download a video and put it in this repo.

- put reference images inside a folder with the folowing structure : -folder -class1_name -class2_name

- add the path as argument when you call the function

exemple :

sudo -E python3 main.py --no-display --use-saved-sample --path_shots_video data/catvsdog --camera-specification catvsdog.mp4 tensil --path_tmodel /home/xilinx/resnet12_32_32_32_onnx_custom_perf.tmodel --path_bit /home/xilinx/design.bitGet the argument specific to main.py :

python3 main.py --helpGet the argument specific to tensil:

python3 main.py tensil --help- Sometimes there is a bug with memory allocation (an error is raised). We are investigating it. For now if it happens, just reset the PYNQ.

- In the PYNQ, always launch the scripts while beeing authentify as root

- Somethimes PYNQ need to be reset between executions of the program in order to use the hdmi

- when launching the model, if the tarch of the model does not correspond to the accelerator, the scripts fail silently.

- the class id must be sequentialy set (first the 0, then 1, ect...)

- Should be at least enough elements to form queries + n_shots for the evaluation

- the current implementation of knn expect an even number of samples for every class