In this project, we try to help one music streaming startup, Sparkify, to move their user base and song database processes on to the cloud. Specifically, I build an ETL pipeline that extracts their data from AWS S3 (data storage), stages tables on AWS Redshift (data warehouse with columnar storage), and execute SQL statements that create the analytics tables from these staging tables.

Datasets used in this project are provided in two public S3 buckets. One bucket contains info about songs and artists, the second bucket has info concerning actions done by users (which song are listening, etc.. ). The objects contained in both buckets are JSON files.

The Redshift service is where data will be ingested and transformed, in fact though COPY command we will access to the JSON files inside the buckets and copy their content on our staging tables.

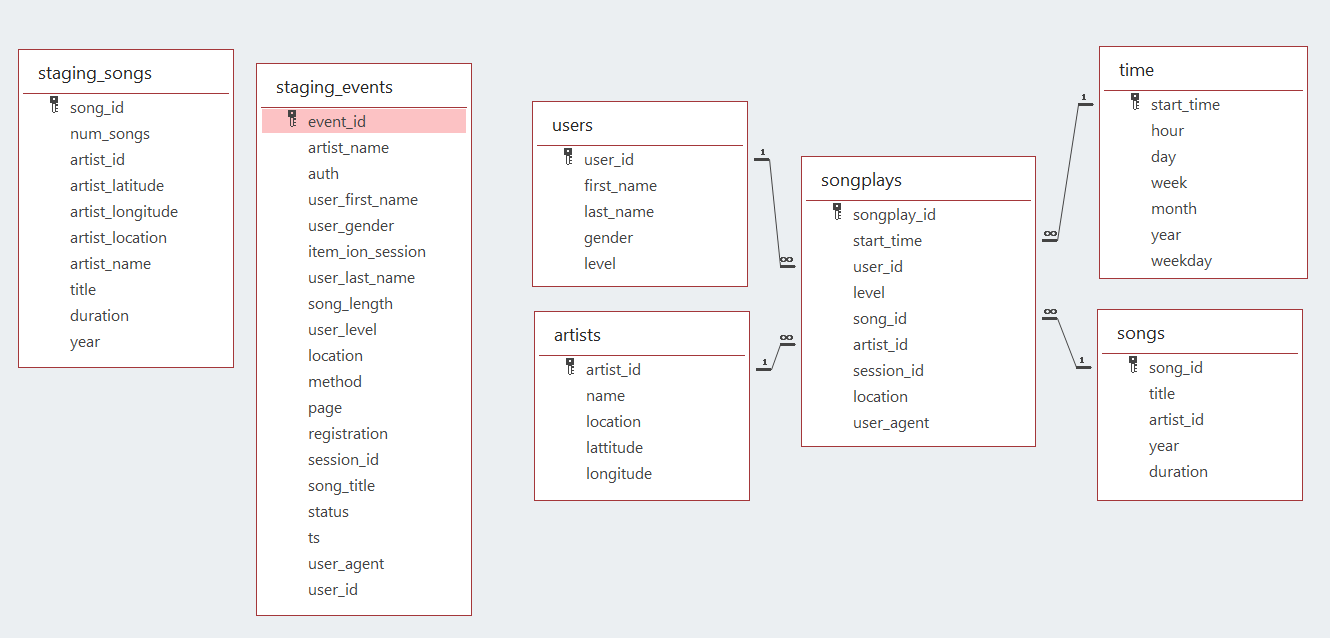

We have two staging tables which copy the JSON file inside the S3 buckets.

- staging_songs - info about songs and artists

- staging_events - actions done by users (which song are listening, etc.. )

I createa a star schema optimized for queries on song play analysis. This includes the following tables.

- songplays - records in event data associated with song plays i.e. records with page

NextSong

- users - users in the app

- songs - songs in music database

- artists - artists in music database

- time - timestamps of records in songplays broken down into specific units

The database schema is shown as follows

- Create a new

IAM userin your AWS account - Give it AdministratorAccess and Attach policies

- Use access key and secret key to create clients for

EC2,S3,IAM, andRedshift. - Create an

IAM Rolethat makesRedshiftable to accessS3 bucket(ReadOnly) - Create a

RedShift Clusterand get theDWH_ENDPOIN(Host address)andDWH_ROLE_ARNand fill the config file.

- Created tables to store the data from

S3 buckets. - Loading the data from

S3 bucketsto staging tables in theRedshift Cluster. - Inserted data into fact and dimension tables from the staging tables.

create_tables.py- This script will drop old tables (if exist) ad re-create new tables.etl.py- This script executes the queries that extractJSONdata from theS3 bucketand ingest them toRedshift.sql_queries.py- This file contains variables with SQL statement in String formats, partitioned byCREATE,DROP,COPYandINSERTstatement.dhw.cfg- Configuration file used that contains info aboutRedshift,IAMandS3

-

Create tables by running

create_tables.py. -

Execute ETL process by running

etl.py.