Disaster_Response_Pipeline

Udacity Data Science Nanodegree, project 2

Table of contents

- Libraries used

- Project Inspiration

- File Descriptions

- How to Run

- Insights

- Licensing, Authors, and Acknowledgements

Libraries used

Python 3. Dependencies: json, plotly, numpy, pandas, nltk, flask, sklearn, sqlalchemy, os, sys, re, pickle, argparse

Project Inspiration

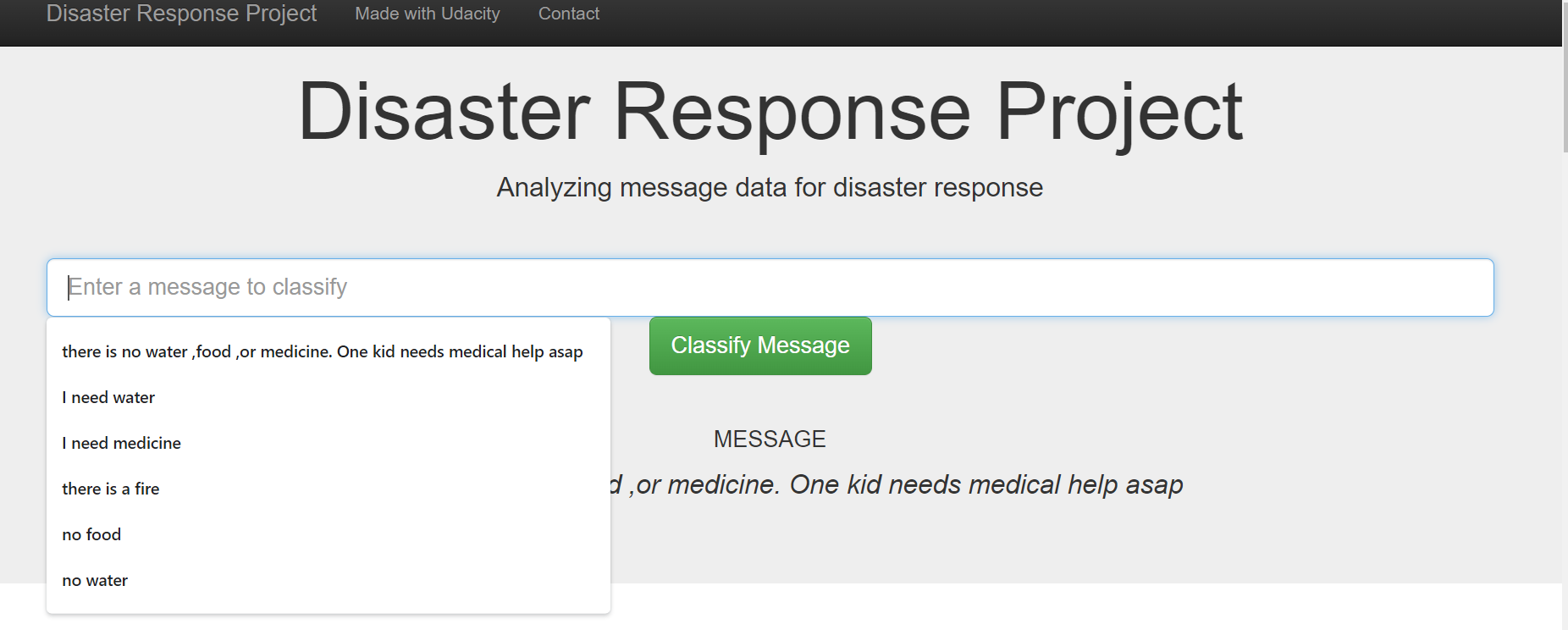

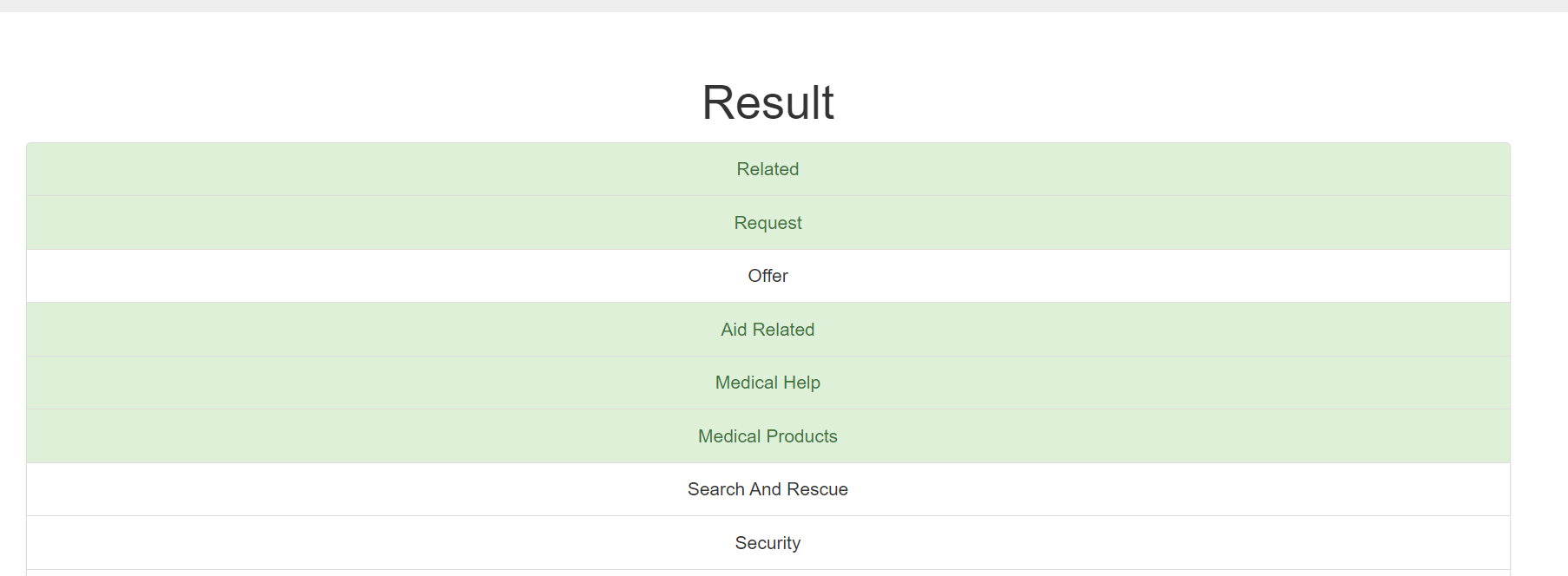

Apply data engineering skills to expand your opportunities and potential as a data scientist. In this project I analyze disaster data from Figure Eight to build a model for an API that classifies disaster messages. The project includes a web app where an emergency worker can enter a new message and get classification results in several categories.

File Descriptions

app : the template folder holds master.html (main page of the web app) and go.html (classification result page of the web app); run.py is the Flask file that runs the app

data : data files; the original data are in CSV form, and the database files hold the cleaned data

models : the saved model used by the web app, plus train_classifier.py with the details of the ML pipeline

asset : web app screenshot

ETL_Pipeline_Prepation.ipynb : extract, transform, and load the data. Prepares the data for ML analysis and saves it as a database instead of CSV.
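As a rough illustration of the extract, transform, and load steps, the sketch below uses only the standard library (`csv`, `sqlite3`) rather than the pandas and sqlalchemy stack listed under Libraries used. The column names (`id`, `message`, `categories`), the `label-0/1` semicolon format, and the `messages` table name are assumptions made for the example and may not match the notebook.

```python
import csv
import io
import sqlite3


def parse_categories(raw):
    """Turn 'related-1;request-0' into {'related': 1, 'request': 0}."""
    labels = {}
    for item in raw.split(";"):
        name, _, value = item.rpartition("-")
        labels[name] = int(value)
    return labels


def load_rows(messages_csv, categories_csv):
    """Join the two CSV sources on 'id'; a repeated id keeps one row only."""
    messages = {row["id"]: row["message"] for row in csv.DictReader(messages_csv)}
    rows = {}
    for row in csv.DictReader(categories_csv):
        if row["id"] in messages:
            rows[row["id"]] = (messages[row["id"]], parse_categories(row["categories"]))
    return rows


def save_rows(rows, connection):
    """Write the cleaned rows to a SQLite table, one integer column per label."""
    label_names = sorted({name for _, labels in rows.values() for name in labels})
    columns = ", ".join(f'"{name}" INTEGER' for name in label_names)
    connection.execute(
        f"CREATE TABLE messages (id TEXT PRIMARY KEY, message TEXT, {columns})"
    )
    placeholders = ", ".join("?" for _ in range(len(label_names) + 2))
    for row_id, (message, labels) in rows.items():
        values = [row_id, message] + [labels.get(name, 0) for name in label_names]
        connection.execute(f"INSERT INTO messages VALUES ({placeholders})", values)
    connection.commit()


# Tiny in-memory stand-ins for the real CSV files (made-up rows).
messages_csv = io.StringIO("id,message\n1,We need water\n2,Road is blocked\n")
categories_csv = io.StringIO(
    "id,categories\n1,water-1;roads-0\n2,water-0;roads-1\n1,water-1;roads-0\n"
)

connection = sqlite3.connect(":memory:")
rows = load_rows(messages_csv, categories_csv)
save_rows(rows, connection)
stored = connection.execute(
    "SELECT id, message, roads, water FROM messages ORDER BY id"
).fetchall()
```

Storing one integer column per category keeps the table easy to query and to feed into a multi-label classifier later.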

ML_Pipeline_Prepation.ipynb : several time-consuming ML algorithm runs. After considering running time, I chose AdaBoost instead of random forest.
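A minimal sketch of how such a running-time comparison might look with scikit-learn follows. The toy messages, the two label columns, the TF-IDF features, and the small `n_estimators` values are assumptions for illustration; the notebook's actual pipeline and settings may differ, and timings on six messages say nothing about the real dataset.

```python
import time

import numpy as np
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.multioutput import MultiOutputClassifier
from sklearn.pipeline import Pipeline

# Made-up training data: two label columns (water, roads), both classes present.
messages = [
    "we need water",
    "no drinking water here",
    "the road is blocked",
    "bridge and road destroyed",
    "water and road both gone",
    "everything is fine",
]
labels = np.array([[1, 0], [1, 0], [0, 1], [0, 1], [1, 1], [0, 0]])

candidates = {
    "random_forest": RandomForestClassifier(n_estimators=10, random_state=0),
    "adaboost": AdaBoostClassifier(n_estimators=10, random_state=0),
}

fit_seconds = {}
predictions = {}
for name, estimator in candidates.items():
    # One classifier per category, wrapped behind a shared text vectorizer.
    model = Pipeline([
        ("tfidf", TfidfVectorizer()),
        ("clf", MultiOutputClassifier(estimator)),
    ])
    start = time.perf_counter()
    model.fit(messages, labels)
    fit_seconds[name] = time.perf_counter() - start
    predictions[name] = model.predict(["we are out of water"])
```

Comparing `fit_seconds` across candidates on a representative sample is one way to weigh training cost against accuracy before committing to a model.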

all.plk : all the ML models I saved: 2 random forest models and 2 AdaBoost models
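Several models can be kept in a single pickle file by storing them in one container. The sketch below assumes a dictionary keyed by model name and uses plain dictionaries as stand-ins for trained estimators; the key names and the temporary file name are hypothetical, and the layout of the actual file is not documented here.

```python
import os
import pickle
import tempfile

# Stand-ins for trained estimators; any picklable object is handled the same way.
models = {
    "random_forest_1": {"kind": "random forest", "n_estimators": 10},
    "random_forest_2": {"kind": "random forest", "n_estimators": 50},
    "adaboost_1": {"kind": "AdaBoost", "n_estimators": 10},
    "adaboost_2": {"kind": "AdaBoost", "n_estimators": 50},
}

# Hypothetical file name inside a throwaway directory.
path = os.path.join(tempfile.mkdtemp(), "all_models.pkl")

with open(path, "wb") as handle:
    pickle.dump(models, handle)

with open(path, "rb") as handle:
    restored = pickle.load(handle)
```

Only unpickle files you trust, since loading a pickle can execute arbitrary code.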

How to Run

- First run the ETL (Extract, Transform, and Load) pipeline to clean the data and store it in a database, then run the ML pipeline to train the model and save it as a pickle file

python data/process_data.py --messages_filename data/disaster_messages.csv --categories_filename data/disaster_categories.csv --database_filename data/DisasterResponse.db

python models/train_classifier.py --database_filename data/DisasterResponse.db --model_pickle_filename models/classifier.pkl
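For readers curious how flags like these are typically handled, here is a hedged sketch using `argparse`. The flag names come from the commands above; the help texts, the `required=True` choice, and the `build_parser` helper are assumptions, and the real `process_data.py` may define defaults or behave differently.

```python
import argparse


def build_parser():
    """Build a parser for the three ETL flags used in the command above."""
    parser = argparse.ArgumentParser(description="ETL step for the disaster data")
    parser.add_argument("--messages_filename", required=True,
                        help="CSV file with the raw messages")
    parser.add_argument("--categories_filename", required=True,
                        help="CSV file with the message categories")
    parser.add_argument("--database_filename", required=True,
                        help="database file to write the cleaned data to")
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch runs anywhere.
args = build_parser().parse_args([
    "--messages_filename", "data/disaster_messages.csv",
    "--categories_filename", "data/disaster_categories.csv",
    "--database_filename", "data/DisasterResponse.db",
])
```

Named flags make the order of the file paths irrelevant, which helps when a command is long enough to wrap across lines.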

- Then start the web app

Run `python run.py`. If that does not work, run `cd app` and then `python run.py`. Open another terminal and type `env|grep WORK`; this prints your space ID, which starts with view followed by some characters. Now open a browser window and go to https://viewa7a4999b-3001.udacity-student-workspaces.com, replacing the whole viewa7a4999b with your own space ID. In my case the space ID was view6914b2f4. Press Enter and the app should load.
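The URL pattern described above can be captured in a small helper. This is a sketch only: `workspace_url` and `find_space_id` are hypothetical names, the port 3001 is taken from the example URL, and the environment variable name `WORKSPACEID` in the demo is an assumption about what `env|grep WORK` prints.

```python
def find_space_id(environ):
    """Return the first value of a WORK* variable that looks like a space ID."""
    for key, value in environ.items():
        if "WORK" in key and value.startswith("view"):
            return value
    return None


def workspace_url(space_id, port=3001):
    """Build the workspace URL from a space ID such as 'view6914b2f4'."""
    return f"https://{space_id}-{port}.udacity-student-workspaces.com"


# Fake environment for the demo; in a real workspace pass os.environ instead.
fake_environ = {"HOME": "/home/workspace", "WORKSPACEID": "view6914b2f4"}
space_id = find_space_id(fake_environ)
url = workspace_url(space_id)
```

With the space ID from this README, `url` is the address to paste into the browser.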

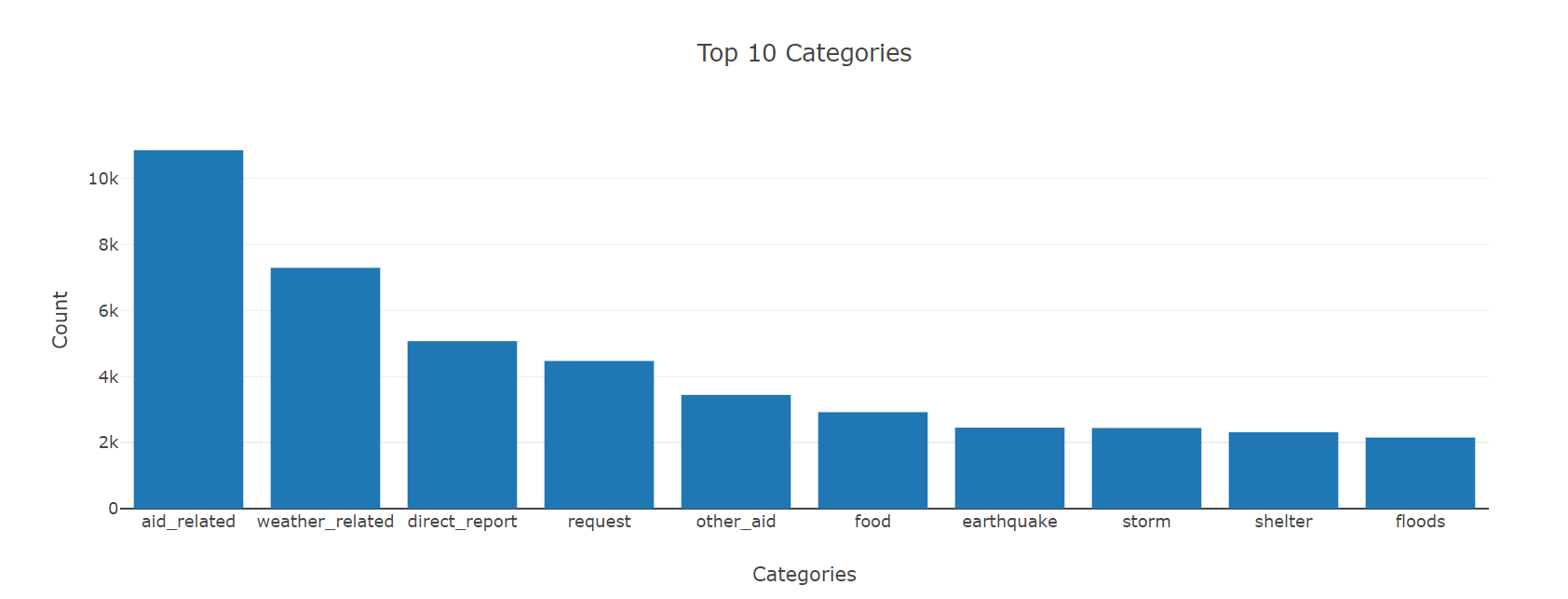

Insights

Result web app here.

Licensing, Authors, and Acknowledgements

Data : Figure Eight data here