The objective of this project is to design a resume rater that should be able to "rate" an applicant based on the contents of the resume. Several approaches were considered, including both supervised and unsupervised approaches. Unsupervised approaches were chosen as part of this project instead of supervised ones due to difficulty in deciding how to rank resumes.

The two approaches used were based on prior domain knowledge of how the resume process works. The approaches use keyword matching, with one a fixed keyword matching approach -- with keywords as initial parameters -- and the other a dynamic keyword matching approach, where no parameters nor hyperparameters are needed.

Based on the results, we can see that very strong feature engineering is required, and that both are able to somehow rate resumes quite well. The fixed method is more robust, and is more in-line with how humans select resumes, while the dynamic method learns the underlying keywords from the entire dataset, with the assumption that the dataset d is generated by some hidden keywords k i.e. P(D=d|K=k) and we find P(K=k|D=d) that can be used to determine the matching keywords for each data sample. The dynamic method though suffers from high variance due to its use of Gibb's Sampling, which can be minimized by ensembling.

The project also has a information extraction component to it. There are both machine learning classification and rule-based prediction approaches that can be used. But due to the time required and high bias of labelling the datasets myself (if time permits)**, the latter approach is used. Before the rule-based approach was decided, a pre-trained general Named Entity Recognition (NER) model from SpaCy[2] was used to perform entity detection. However, it has very low recall (relevant info undetected). A rule-based approach has much better recall. However, it suffers from low precision (irrelevant info captured) in resumes that are sophisticatedly designed, and a few pre-processing techniques have to be applied before applying the algorithms.

** A labelled dataset of a few hundred samples is needed for a specific sector/country. For each dataset, name entities have to be labelled with pre-defined tags. See [1] for how the entities are labelled

Before a resume is crafted, usually a job description will be posted, and then the applicant will (likely) tailor their resume to suit the job description. Depending on the size of the company, the HR will then filter the resume based on keywords that they expect to see, with keywords being either general to the company or provided by the recruitment evaluators (typically the project team). The resume lifecycle thus involves a tacit game between the candidate and the company, and the resume rater is modelled after this to-and-fro tacit interaction through reverse engineering.

The way HR filters resumes is also modelled. The modelling assumptions used are that (1) HR first matches the document based on keywords provided, and then (2) scans the document further to see whether the words are concise and not deviating much from each other. To account for experience range, it is assumed that HR does not look at how different a person's previous roles are from one another, but (3) how different a person's previous roles are to other resumes they have seen.

The first thing to do is determine the expected keywords that the human resume rater would expect to see (our ground truth), and then rate the resumes based on the expected keywords. There are two ways to determine keywords:

- by supplying them

- by learning (reverse-engineering) the keywords based on the documents themselves, with the assumption that the documents are generated based on those keywords

For 1, we only need to supply the keywords and the rater rates based on the similarity of the document to the keywords.

For 2, it is as though we are trying to figure out what the keywords the candidate expects the company to see are (for a given global space of keywords K and documents D, the candidate is trying to do d' = argmax(d, k1..kn) P(D|K), where d' is the desired document, and k1 to kn are keywords belonging to K).

We then use Latent Dirichlet Allocation (LDA), a generative model that assumes that each document d is a mixture of a small number of topics z and that each word in the document is attributed to one of the document's topics[3]. In simpler terms, it assumes d is generated by keywords from a mixture of topics Z.

It computes:

P(Z|D=d), the probability that for eachzinZ,dis generated

e.g. CV of Ben Lee: [z_1: 0.2, z_2: 0.3, z_3: 0.1, z_4: 0.4]

P(K|Z), the probability that a wordkofKis picked from topiczofZ

e.g. z_1: [computer: 0.3, engineering: 0.2, leadership: 0.1, software: 0.05, ...] == 1

z_2: [finance: 0.33, quantitative: 0.21, leadership: 0.1, asset: 0.08, ...] == 1

z_3: [management: 0.12, general: 0.1, report: 0.07, lead: 0.05, ...] == 1

z_4: [human: 0.22, resource: 0.21, recruitment: 0.1, award: 0.08, ...] == 1

We then model this as a Bayesian Network and then find the keywords using the below formula:

where P(K|D=d) is the probability that for each k in K, d is generated, and nargmax is the argmax for the top n keywords. We factorize P(K|ZD) ~= P(K|Z) since the probability of K is only directly conditioned on Z. Since P(Z|D=d) and P(K=k|Z=z) are provided by LDA, we can compute P(K|D=d) easily for all k. All we need to do is select n to specify how many keywords we want for each document d.

Example of nargmax P(K|D=d):

CV of Ben Lee: [finance: 0.3, engineering: 0.25, algorithms: 0.1, managed: 0.055, applied: 0.044, ...] == 1

Top 3 Keywords: finance, engineering, algorithm

With keywords determined (supervised) or found (unsupervised), we then use matching strategies to match keywords to the document to fulfil (1) in Domain Knowledge, as well as matching words within the document to fulfil (2) in Domain Knowledge.

- Keyword Match (KM): Look for match in keywords (Familiarity of job)

-

For each keyword, get the mean of the n'th (for n = 10..100, factor of 10) largest correlation values of the keyword vector to the words in the document, and then plot the regression of the 8-degree** polynomial of the means and get the intercept

-

For the supervised strategy, since the keywords have no weights attached to them, we average the intercepts for each keyword to get the

KMscore -

For the unsupervised strategy, since the probabiltiies of the keywords can be derived from the LDA model, we can use them as weight coefficients for each intercept in a linear combination to get the

KMscore. The weights first have to be normalized such that they sum to 1 e.g.Before: weights: z1:0.2 * {apple: 0.1, bear: 0.1, charlie: 0.1} => {apple: 0.02, bear: 0.02, charlie: 0.02} z2:0.1 * {dog: 0.1, elephant: 0.2, finger: 0.2} => {dog: 0.01, elephant: 0.02, finger: 0.02} z3:0.2 * {goat: 0.1, happy: 0.1, indigo: 0.2} => {goat: 0.02, happy: 0.02, indigo: 0.04} Sum != 1 After: weights: z1:0.4 * {apple: 0.33, bear: 0.33, charlie: 0.33} => {apple: 0.133, bear: 0.133, charlie: 0.133} z2:0.2 * {dog: 0.2, elephant: 0.4, finger: 0.4} => {dog: 0.04, elephant: 0.08, finger: 0.08} z3:0.4 * {goat: 0.25, happy: 0.25, indigo: 0.5} => {goat: 0.1, happy: 0.1, indigo: 0.2} Sum == 1

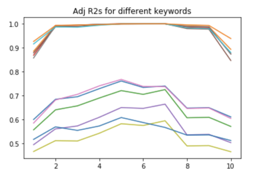

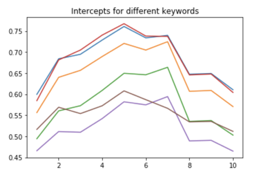

** Most intercepts plateau at 7-degree, where adjusted R2 is max. However, at 7-d, intercept ratio between keywords are very low, which means words similar to the keywords and not so similar are hard to differentiate. Also, there is a steep drop in intercept value from 7-d to 8-d. As 8-d difference is similar to 1-d, with much higher adj R2, we can get a more accurate keyword dissimilarity using 8-d.

Can see that most adj R2s plateau at 7-d

Can see that most adj R2s plateau at 7-d

Steep drop of intercept from 7-d to 8-d

Steep drop of intercept from 7-d to 8-d

regression of mean of top

regression of mean of top n correlations for a particular keyword

- Within Match (WM): Look for match within words matched by keywords (Conciseness of experience)

- For each keyword, get the mean of the correlations amongst the n'th (for n = 10..100, factor of 10) largest correlated word vectors wr.t. the keyword vector less the keyword vector to itself (since corr=1), and then plot the regression of the 8-degree** polynomial of the means and get the intercept

- For the supervised strategy, since the keywords have no weights attached to them, we average the intercepts for each keyword to get the

WMscore - For the unsupervised strategy, since the probabiltiies of the keywords can be derived from the LDA model, we can use them as weight coefficients for each intercept in a linear combination to get the

WMscore. The weights first have to be normalized such that they sum to 1.

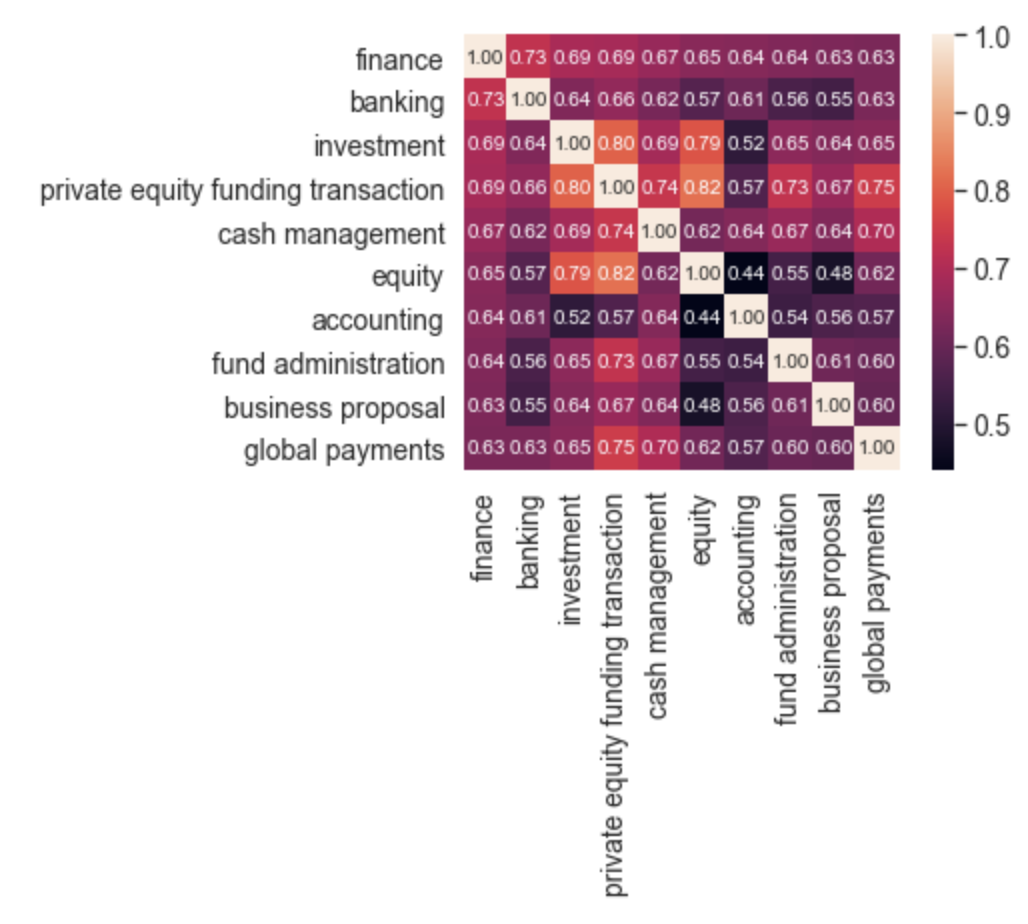

Illustration with "finance" as the keyword. The first row are the correlations w.r.t to the keyword, which are used for KM. The upper triangle less the first row and the diagonal are the within correlations of words w.r.t to the keyword. Since the correlation matrix is symmetric, the lower triangle can be ignored.

For the final rating score, we have final_score = KM * WM. We then take the mean and std of the model and then give a rating score based on how much final_score is away from the mean. The formula is given by:

diff = (final_score - mean)/sd

rating = min(10, max(0, 5 + diff))

For example, if final_score is 0.4, mean is 0.3, and sd is 0.05, then rating is rating = 5 + (0.4-0.3)/0.05 = 7. We have a min and max to cap the scores between 0 and 10, and it makes reasonable sense since we do not really expect scores to be more than 5 standard deviations away from the mean.

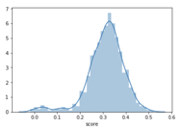

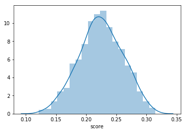

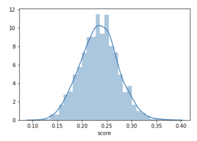

We can use this formula because the scores are pretty normally distributed. Low intra-model variance also helps with justifying the use of the score.

For a fixed Keywords-trained model

For a fixed Keywords-trained model

For an LDA-trained model

For an LDA-trained model

Another LDA-trained model

Another LDA-trained model

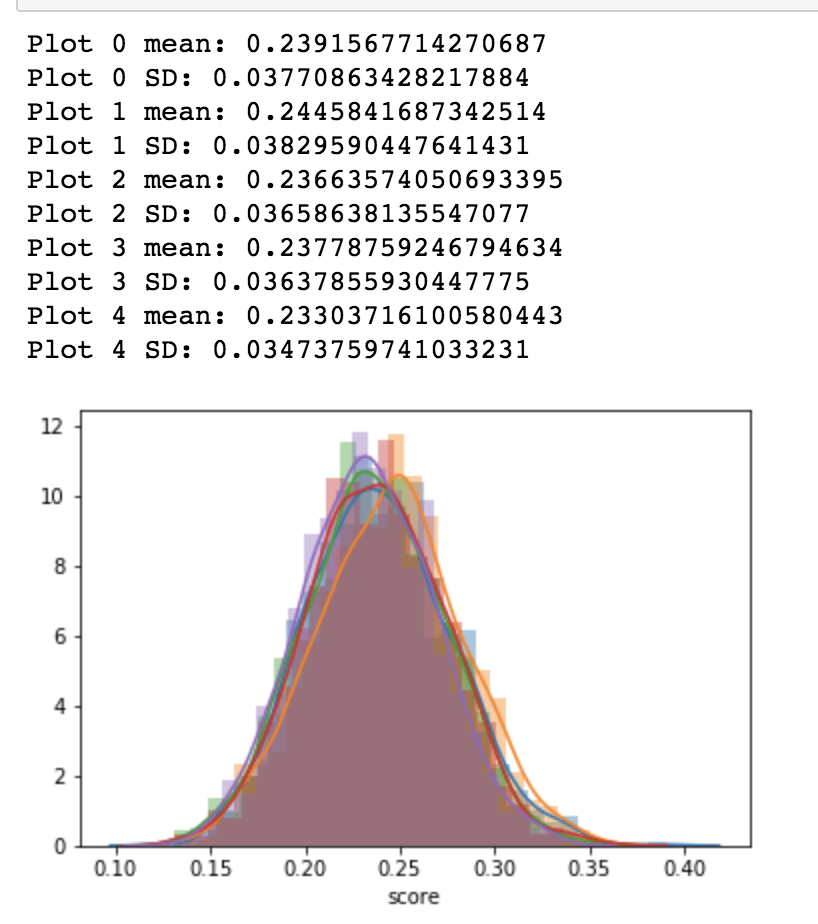

As the LDA model suffers from high variance due to its use of Gibb's Sampling, a way to minimize variance is to generate ratings across multiple models and then getting the average. From tests done on multiple models, the ratings given from each model is pretty consistent i.e. small intra-model variance, which suggests that the LDA model approach is useful and that we do not need to average on too many models to minimize variance (Central Limit Theorem).

Plots of 5 different LDA-trained models on the same dataset. The mean and standard deviations have low variance and all 5 models are pretty similar in shape to each other.

Plots of 5 different LDA-trained models on the same dataset. The mean and standard deviations have low variance and all 5 models are pretty similar in shape to each other.

We can also model uniqueness (3 in Domain Knowledge) by using the topic distributions of each resume document and comparing the dissimilarity. However, due to its high instability, it is not incorporated into the model until proper function modelling is done.

Between Divergence (BD): Look for diversity amongst candidates (Uniqueness)

- For each candidate, get the topic probability distributions (default=20 for our LDA model)

- Compare the distribution with the distributions of other candidates using Jensen-Shannon divergence[4], a symmetric and finite version of the Kullback-Leibler divergence.

- We then get the mean of the JS divergences to get the

BDscore

A naive implementation of incorporating the BD score is by dividing the BD score with KM*WM, since BD is inversely proportional to uniqueness. However, that strategy distorts the important of KM*WM, making the ratings seem no different from random. Another strategy could be having it as a separate score or adding a function of BD to the score i.e. final_score = KM*WM + f(BD). Due to the sophisticated of this feature, it is not incorporated into the model as of now.

For information extraction, a rule-based prediction approach is used due to reasons mentioned in the Introduction. The rules-based approach does pretty well for standard resumes, but suffers from low precision on sophisticatedly-designed resumes (irrelevant info captured). False positives are minimized by first detecting category boxes (Like "Work Experience" and "Education") and then performing relevant extractions.

- For a good company and name detection algorithm, a Named Entity Recognition (NER) model has to be trained on a large dataset containing many different CVs well-labelled for it to generalize well.

- Since labelling takes a lot of time, and since there are no well pre-trained NER models out there, non-AI methods are used

- Some CVs have very complicated document formatting (very hard to parse)

- Some people have names that SpaCy's NER model has not learnt before, so it cannot detect his/her name

- Some CVs have company names that are very different from modern companies e.g. (Usaha Tegas Sdn Bhd), thus the NER model cannot detect it

- Some CVs have names and company names that are of different languages to what the NER model could detect

- Some CVs have their job duration in front of their job description instead of behind, which was initially not detected

- Sometimes the pdf parser parses a list dot as the letter o, which renders the detection algorithm not being able to detect that the line is a list item

- Using a non-AI approach, it's not possible to detect anything if the text converted is screwed up e.g. all the titles are all the way below the text

- For docx documents, list bullets cannot be extracted with

python-docx, so for now a lot of irrelevant information will be shown until a custom docx parser is built

src/data/test/Dong Xing_Catherine Zhang_Equity Research Intern.pdf

Name: ZHANG Xiaoyu Catherine

Email: zhangxy923@126.com

Number: +852 54871558

City/Country: China

Work Experience:

6 years 4 months

- Dongxing Securities Shenzhen, China

- Equity Research Intern, Computer Team Jan 2017 - Now

- AI and Internet+. Collect and analyze industry and firms’ information; get financial data by Wind and Bloomberg).

- Roland Berger Strategy Consultants Shanghai, China

- Part-time Assistant, Financial Services Department Sep 2016 - Nov 2016

- Bank of China Beijing, China

- Clients Manager, Individual Financial Department Sep 2012 - Jun 2016

- PROJECT EXPERIENCE

- ABT) Hong Kong

- Team Leader Mar 2017 - Apr 2017

Education:

5 years 1 months

- City University of Hong Kong Hong Kong

- Master of Science in Financial Services Aug 2016 - Nov 2017

- Investment, Derivatives and Risk Management, Corporate Finance, Financial Statement Analysis,

- Fixed Income Securities, Credit Risk Management.

- Capital University of Economics and Business Beijing, China

- Bachelor of Science in Finance Sep 2008 - Jun 2012

- RenMin Scholarship, 7 times (Top 10%).

- Investment Banking, Securities Investment Fund, Financial Model Design, Accounting, Economic Law.

- The University of Hong Kong Hong Kong

- Exchange Student Jul 2011- Aug 2011

- Financial Analysis, Marketing Management, Writing.

Skills:

Security, Finance, Writing, Analysis, Accounting, Research, Marketing, Email, Economics,

Strategy, Excel, Powerpoint, Financial analysis, Presentation, Design, Technical,

Cfa, Banking, Reports, Due diligence, Word, Mobile, Risk management, Analyze,

Ai, Data analysis, Microsoft office, Modeling

----------

Rating: 9

src/data/test/Brawn Capital_Minori Yoshida_FM.docx

Name: Minori Yoshida Yan Wo Yuet Building

Email: y.minori512@gmail.com

Number: +852-9544-5637

City/Country: Hong Kong

Work Experience:

6 years 4 months

- Brawn Capital Limited, Central, Hong Kong

- August 2016 Present

- Finance Manager

- Manage financials of project finance working closely with developer and asset management company in Japan

- Establish and improve internal financial processes of both project and corporate

- Manage budget, financial reports and tax flings both in Hong Kong and Japan

- KPMG AZSA LLC, Tokyo, Japan February 2012 February 2015

- Audit Practice

- Perform annual financial audits, quarterly reviews under J-GAAP and internal control audit under J-SOX for audit engagements of various clients including one of the biggest Japanese public companies listed in Tokyo Stock Exchange

- Perform reporting package reviews under IFRS and US-GAAP for various clients who have overseas parent companies

- Consult on management accounting issues and deliver solutions.

- Identify and communicate accounting and auditing matters to managers and partners

Education:

3 years 11 months

- Meiji University Bachelor of Business Administration April 2007 March 2011

- Department of Accounting

Skills:

Sales, Budget, Analysis, Auditing, Process, Tax, Administration, Reports, Gaap, Budgeting,

Financial analysis, English, Reporting, Finance, Modeling, Accounting, Financial reports,

Consulting, Journal entries, Flower, Communication, Audit, Word, Relationship management,

Excel

----------

Rating: 8

In conclusion, for a general resume rater, such an approach could work well as a first-pass test. However, for a more robust rater that can achieve high true positives (ratings similar to what HR would give or translates to actually hiring good fits), a more supervised approach should be taken.

For information extraction, a Named Entity Recognition approach would work much better than a rule-base approach. However, the only challenge is knowing what labels to label and labelling the labels correctly, as well as getting a dataset suitable for the company. A big company with many employees would benefit very well with such an approach. However, for smaller companies, rule-based approach still works best given its high recall (ensuring relevant information is captured).

Use Python3

pip3 install -r requirements.txt

python3 pre_requisites.py

Test run

python3 main.py --type fixed "src/data/test/Dong Xing_Catherine Zhang_Equity Research Intern.pdf" --model_name model

Test resumes can be found in src/data/test

For the fixed keyword implementation, the pre-trained model is trained on the keywords:

[fund, asset, investment, accounting, trust, strategy]

To use it, type:

python3 main.py path/to/resume

or, long-form,

python3 main.py --type fixed path/to/resume

for specific model (no default):

python3 main.py --type fixed path/to/resume --model_name model

or using model path (no default):

python3 main.py --type fixed path/to/resume --model_path path/to/model

Resume should be pdf or docx format

Pre-trained model: src/models/model_fixed/model.json

Pre-trained model_name: model

To use the LDA-generated keyword implementation:

python3 main.py --type lda path/to/resume

for specific model:

python3 main.py --type lda path/to/resume --model_name model

or using model path (no default):

python3 main.py --type lda path/to/resume --model_path path/to/model

Resume should be pdf or docx format

Pre-trained model: src/models/model_lda/model.json

Pre-trained model name: model

Training resumes can be found in src/data/train (size=1468)

or for a smaller dataset (size=30), src/data/sample_train

For the fixed keyword implementation:

python3 main.py --train path/of/training_directory --type fixed --keywords fund asset investment accounting trust strategy --model_name abc_model

Model and keywords will be saved in src/models/model_fixed

For the LDA-generated keyword implementation:

python3 main.py --train path/of/training_directory --type lda --model_name abc_model

Model and LDA model will be saved in src/models/model_lda

[1] https://github.com/DataTurks-Engg/Entity-Recognition-In-Resumes-SpaCy

[2] https://spacy.io/usage/linguistic-features#named-entities

[3] https://en.wikipedia.org/wiki/Latent_Dirichlet_allocation

[4] https://en.wikipedia.org/wiki/Jensen%E2%80%93Shannon_divergence