- The main objective is to run 🦙 Ollama and 🐬 TinyDolphin on a Raspberry Pi 5 with 🐳 Docker Compose.

- The stack provides development environments to experiment with Ollama and 🦜🔗 LangChain without installing anything:

  - Python dev environment (available)

  - JavaScript dev environment (available)

- The compose file uses the `include` feature, so you need Docker Compose 2.21.0 or later.
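To check the Compose requirement before cloning, you can compare the output of `docker compose version --short` with 2.21.0. The helper below is a hypothetical sketch, not part of the repository; it assumes the short version string looks like `2.24.5`, possibly with a leading `v` or a suffix such as `-desktop.1`.

```python
import re
import subprocess

# Minimum Docker Compose version this README asks for (the stack relies on `include`).
MIN_COMPOSE = (2, 21, 0)


def parse_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from strings like '2.24.5', 'v2.21.0' or '2.23.0-desktop.1'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def supports_include(version_text: str) -> bool:
    """Return True when the version is 2.21.0 or newer (tuples compare element by element)."""
    return parse_version(version_text) >= MIN_COMPOSE


def installed_compose_version() -> str:
    """Run `docker compose version --short` and return its output, e.g. '2.24.5'."""
    result = subprocess.run(
        ["docker", "compose", "version", "--short"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()
```

On the Pi, `supports_include(installed_compose_version())` should return `True` before you start any profile.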

```bash
git clone https://github.com/bots-garden/pi-genai-stack.git
cd pi-genai-stack
```

On startup, Pi GenAI Stack downloads 7 models:

- TinyDolphin

- TinyLlama + TinyLlama Chat

- DeepSeek Coder + DeepSeek Coder Instruct

- Gemma + Gemma Instruct

All the demo samples then use one of these models.
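Once the downloads finish, you can check that the models are present by reading the JSON returned by Ollama's `/api/tags` endpoint (queried with curl later in this README). This is a minimal sketch: the tag names in `EXPECTED_MODELS` are assumptions and may not match the exact tags the stack pulls, and `fetch_tags` defaults to Ollama's usual port 11434 on `localhost`.

```python
import json
from urllib.request import urlopen

# Hypothetical tags for the 7 models listed above; check the stack's files for the real ones.
EXPECTED_MODELS = [
    "tinydolphin",
    "tinyllama",
    "tinyllama:chat",
    "deepseek-coder",
    "deepseek-coder:instruct",
    "gemma:2b",
    "gemma:2b-instruct",
]


def normalize(name: str) -> str:
    """Ollama lists untagged models as 'name:latest'; treat both spellings alike."""
    return name if ":" in name else f"{name}:latest"


def missing_models(tags: dict, expected: list[str]) -> list[str]:
    """Return the expected models that do not appear in an /api/tags response."""
    installed = {normalize(model["name"]) for model in tags.get("models", [])}
    return [name for name in expected if normalize(name) not in installed]


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """GET /api/tags and decode the JSON body (needs a running Ollama server)."""
    with urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return json.load(response)
```

For example, `missing_models(fetch_tags("http://hal.local:11434"), EXPECTED_MODELS)` returns an empty list when every expected model is installed.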

Start the stack with the demo:

```bash
docker compose --profile demo up
```

Open an interactive shell in the Python demo container:

```bash
docker exec --workdir /python-demo -it python-demo /bin/bash
```

Run the Python files:

```bash
python3 1-give-me-a-dockerfile.py
# or
python3 2-tell-me-more-about-docker-and-wasm.py
```

Start the stack with the Python dev environment.

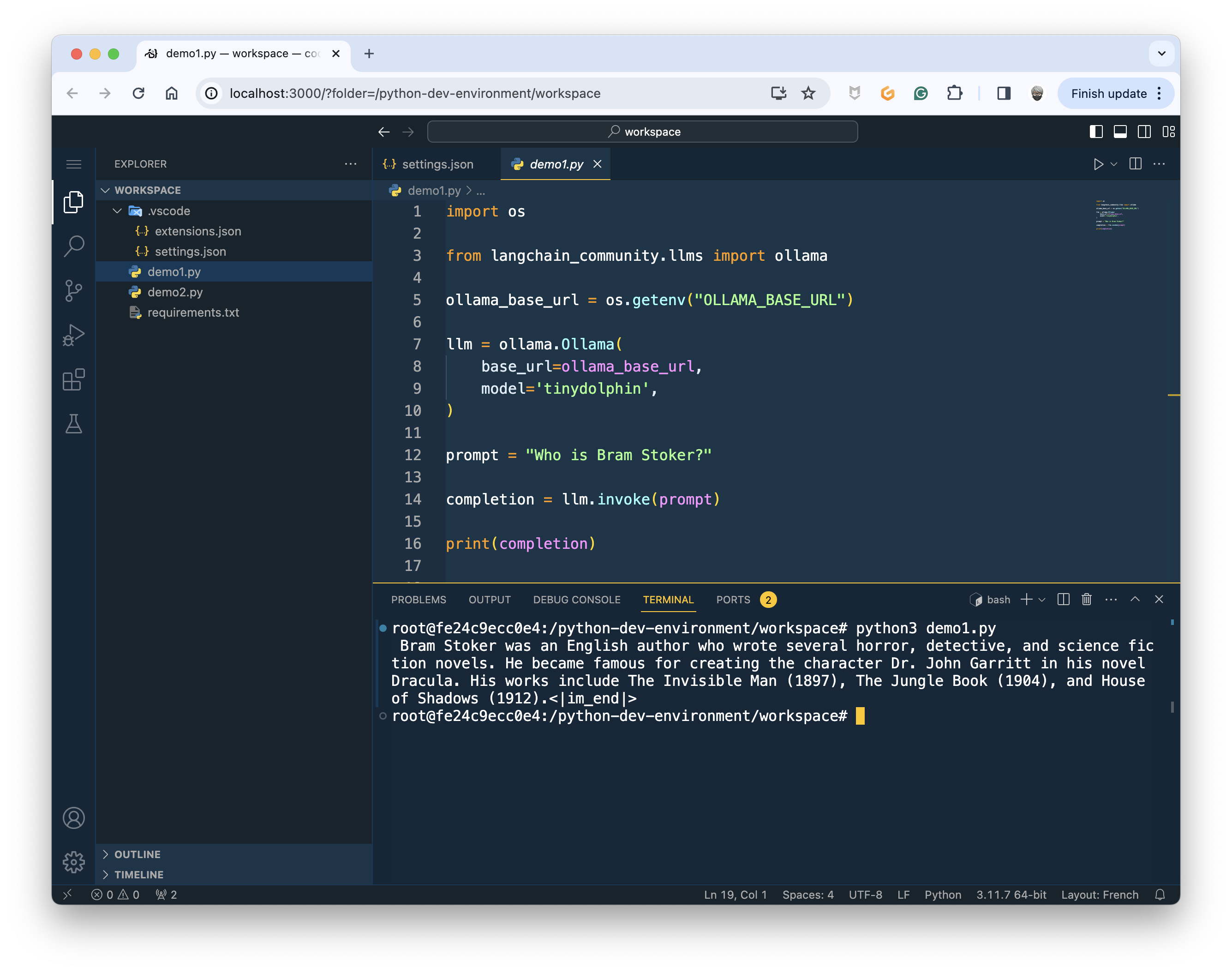

```bash
docker compose --profile python up
```

The Python dev environment is a Web IDE based on Coder's code-server with the Python runtime and tools. It runs as a Docker Compose service, and you can open the IDE at http://localhost:3000.

To use it remotely (to connect to the Web IDE running on the Pi from your workstation), use the DNS name of your Pi or its IP address, for example http://hal.local:3000 (where `hal.local` is the DNS name of my Pi).

Start the stack with the JavaScript dev environment:

```bash
docker compose --profile javascript up
```

The JavaScript dev environment is a Web IDE based on Coder's code-server with the Node.js runtime and tools. It runs as a Docker Compose service, and you can open the IDE at http://localhost:3001.

To use it remotely (to connect to the Web IDE running on the Pi from your workstation), use the DNS name of your Pi or its IP address, for example http://hal.local:3001 (where `hal.local` is the DNS name of my Pi).
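If the browser cannot reach one of the IDEs, a quick TCP check from your workstation tells you whether the port is open on the Pi. This is a minimal standard-library sketch; `IDE_PORTS` only restates the ports given above, and `hal.local` stands for your own Pi's hostname or IP address.

```python
import socket

# Ports published by the two dev-environment profiles (as stated in this README).
IDE_PORTS = {"python": 3000, "javascript": 3001}


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


def ide_urls(host: str) -> dict[str, str]:
    """Build the Web IDE URLs for a given Pi hostname or IP address."""
    return {name: f"http://{host}:{port}" for name, port in IDE_PORTS.items()}
```

For example, `port_is_open("hal.local", IDE_PORTS["python"])` should return `True` once `docker compose --profile python up` is running.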

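You can also query Ollama directly through its REST API (port 11434):

- The answers take a while to appear because these examples do not use streaming: Ollama sends the response only once it is complete.

- `hal.local` is the DNS name of my Pi.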
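The curl calls below can also be made from Python with only the standard library. This sketch is not the stack's own code (its demos use LangChain); it builds the same `/api/generate` request and shows how a streamed answer would be read: with `"stream": true`, Ollama sends one JSON object per line, each carrying a `response` fragment, and the last one has `"done": true`. `OLLAMA_URL` is an assumption based on the `hal.local` hostname used here.

```python
import json
from collections.abc import Iterable, Iterator
from urllib.request import Request, urlopen

OLLAMA_URL = "http://hal.local:11434"  # assumption: replace with your Pi's name or IP


def generate_request(prompt: str, model: str = "tinydolphin", stream: bool = False) -> Request:
    """Build a POST /api/generate request equivalent to the curl example."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": stream}).encode("utf-8")
    return Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def iter_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text fragments of a streamed (newline-delimited JSON) response."""
    for line in lines:
        if not line.strip():
            continue  # ignore blank lines, if any
        chunk = json.loads(line)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the answer


def ask(prompt: str) -> None:
    """Print the answer as it arrives instead of waiting for the whole text."""
    with urlopen(generate_request(prompt, stream=True)) as response:
        # Iterating over the HTTP response yields the body line by line.
        for fragment in iter_fragments(response):
            print(fragment, end="", flush=True)
    print()
```

Calling `ask("Explain simply what is WebAssembly")` prints the first words almost immediately, which makes the Pi feel much more responsive than the non-streaming call.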

```bash
curl http://hal.local:11434/api/generate -d '{
  "model": "tinydolphin",
  "prompt": "Explain simply what is WebAssembly",
  "stream": false
}'
```

Or try the OpenAI-compatible chat completions endpoint:

```bash
curl http://hal.local:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "tinydolphin",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
```

List the models available locally:

```bash
curl http://hal.local:11434/api/tags
```

Pull (download) a new model:

```bash
curl http://hal.local:11434/api/pull -d '{
  "name": "phi"
}'
```

✋ llama2 is too big for a Pi; if you pulled it, delete it:

```bash
curl -X DELETE http://hal.local:11434/api/delete -d '{
  "name": "llama2"
}'
```

Further reading:

- Host Ollama and TinyDolphin LLM on a Pi5 with Docker Compose: Run Ollama on a Pi5

- First Steps with LangChain and the Python toolkit: Ollama on my Pi5: The Python dev environment

- Prompts and Chains with Ollama and LangChain

- Make a GenAI Web app in less than 40 lines of code

- Make a GenAI Conversational Chatbot with memory

- Create a GenAI Rust Teacher

- Let's talk with a GenAI French cook