TomoDRGN: analyzing structural heterogeneity in cryo-electron sub-tomograms

CryoDRGN has proven a powerful deep learning method for heterogeneity analysis in single particle cryo-EM. In particular, the method models a continuous distribution over 3D structures by using a Variational Auto-Encoder (VAE) based architecture to generate a reconstruction voxel-by-voxel once given a fixed coordinate from a continuous learned latent space.

TomoDRGN extends the cryoDRGN framework to cryo-ET by learning heterogeneity from datasets in which each particle is sampled by multiple projection images at different stage tilt angles. For cryo-ET samples imaging particles in situ, tomoDRGN therefore enables continuous heterogeneity analysis at a single particle level within the native cellular environment. This new type of input necessitates modification of the cryoDRGN architecture, enables tomography-specific processing opportunities (e.g. dose weighting for loss weighting and efficient voxel subset evaluation during training), and benefits from tomography-specific interactive visualizations.

Installation / dependencies:

Setting up a new tomoDRGN environment:

# Create conda environment

conda create --name tomodrgn "python>=3.7"

conda activate tomodrgn

# Install dependencies

conda install "pytorch-gpu>=1.11.0" "cudatoolkit>=11.0" -c pytorch

conda install pandas seaborn scikit-learn

conda install umap-learn notebook -c conda-forge

pip install "ipyvolume>=0.6.0" "pythreejs>=2.4.2"

# Clone source code and install

git clone https://github.com/bpowell122/tomodrgn.git

cd tomodrgn

pip install .

Optional: verify code+dependency functionality on your system

cd tomodrgn/testing

python ./quicktest.py # ~1 minute, tests train_vae and analyze

python ./unittest.py # ~30 minutes, tests all commands with multiple options (except jupyter notebooks)

Example usage

Below are minimal examples of various common tomoDRGN commands. By design, syntax parallels cryoDRGN's syntax where possible. All commands require initialization of the conda environment: conda activate tomodrgn

-

Train a decoder-only network to learn a homogeneous structure:

tomodrgn train_nn particles_imageseries.star --outdir 01_nn_particles --num-epochs 30Click to see all options:

tomodrgn train_nn --helpusage: tomodrgn train_nn [-h] -o OUTDIR [--load WEIGHTS.PKL] [--checkpoint CHECKPOINT] [--log-interval LOG_INTERVAL] [-v] [--seed SEED] [--ind IND] [--uninvert-data] [--no-window] [--window-r WINDOW_R] [--datadir DATADIR] [--lazy] [--Apix APIX] [--recon-tilt-weight] [--recon-dose-weight] [--dose-override DOSE_OVERRIDE] [--l-dose-mask] [--sample-ntilts SAMPLE_NTILTS] [--sequential-tilt-sampling] [-n NUM_EPOCHS] [-b BATCH_SIZE] [--wd WD] [--lr LR] [--norm NORM NORM] [--no-amp] [--multigpu] [--layers LAYERS] [--dim DIM] [--l-extent L_EXTENT] [--pe-type {geom_ft,geom_full,geom_lowf,geom_nohighf,linear_lowf,gaussian,none}] [--pe-dim PE_DIM] [--activation {relu,leaky_relu}] [--feat-sigma FEAT_SIGMA] [--num-workers NUM_WORKERS] [--prefetch-factor PREFETCH_FACTOR] [--persistent-workers] [--pin-memory] particles Train a NN to model a 3D density map given 2D images from a tilt series with consensus pose assignments positional arguments: particles Input particles_imageseries.star options: -h, --help show this help message and exit -o OUTDIR, --outdir OUTDIR Output directory to save model (default: None) --load WEIGHTS.PKL Initialize training from a checkpoint (default: None) --checkpoint CHECKPOINT Checkpointing interval in N_EPOCHS (default: 1) --log-interval LOG_INTERVAL Logging interval in N_PTCLS (default: 200) -v, --verbose Increases verbosity (default: False) --seed SEED Random seed (default: 74161) Dataset loading: --ind IND Filter particle stack by these indices (default: None) --uninvert-data Do not invert data sign (default: True) --no-window Turn off real space windowing of dataset (default: True) --window-r WINDOW_R Windowing radius (default: 0.85) --datadir DATADIR Path prefix to particle stack if loading relative paths from a .star or .cs file (default: None) --lazy Lazy loading if full dataset is too large to fit in memory (default: False) --Apix APIX Override A/px from input starfile; useful if starfile does not have _rlnDetectorPixelSize col (default: 1.0) --recon-tilt-weight Weight reconstruction loss by cosine(tilt_angle) (default: False) --recon-dose-weight Weight reconstruction loss per tilt per pixel by dose dependent amplitude attenuation (default: False) --dose-override DOSE_OVERRIDE Manually specify dose in e- / A2 / tilt (default: None) --l-dose-mask Do not train on frequencies exposed to > 2.5x critical dose. Training lattice is intersection of this with --l-extent (default: False) --sample-ntilts SAMPLE_NTILTS Number of tilts to sample from each particle per epoch. Default: min(ntilts) from dataset (default: None) --sequential-tilt-sampling Supply particle images of one particle to encoder in starfile order (default: False) Training parameters: -n NUM_EPOCHS, --num-epochs NUM_EPOCHS Number of training epochs (default: 20) -b BATCH_SIZE, --batch-size BATCH_SIZE Minibatch size (default: 1) --wd WD Weight decay in Adam optimizer (default: 0) --lr LR Learning rate in Adam optimizer. Should co-scale linearly with batch size. (default: 0.0002) --norm NORM NORM Data normalization as shift, 1/scale (default: mean, std of dataset) (default: None) --no-amp Disable use of mixed-precision training (default: False) --multigpu Parallelize training across all detected GPUs. Specify GPUs i,j via `export CUDA_VISIBLE_DEVICES=i,j` before tomodrgn train_vae (default: False) Network Architecture: --layers LAYERS Number of hidden layers (default: 3) --dim DIM Number of nodes in hidden layers (default: 256) --l-extent L_EXTENT Coordinate lattice size (if not using positional encoding) (default: 0.5) --pe-type {geom_ft,geom_full,geom_lowf,geom_nohighf,linear_lowf,gaussian,none} Type of positional encoding (default: gaussian) --pe-dim PE_DIM Num sinusoid features in positional encoding (default: D/2) (default: None) --activation {relu,leaky_relu} Activation (default: relu) --feat-sigma FEAT_SIGMA Scale for random Gaussian features (default: 0.5) Dataloader arguments: --num-workers NUM_WORKERS Number of workers to use when batching particles for training. Has moderate impact on epoch time (default: 0) --prefetch-factor PREFETCH_FACTOR Number of particles to prefetch per worker for training. Has moderate impact on epoch time (default: 2) --persistent-workers Whether to persist workers after dataset has been fully consumed. Has minimal impact on run time (default: False) --pin-memory Whether to use pinned memory for dataloader. Has large impact on epoch time. Recommended. (default: False) -

Assess convergence of a decoder-only network relative to an external volume:

tomodrgn convergence_nn 01_nn_particles sta_reference_volume.mrcClick to see all options:

tomodrgn convergence_nn --helpusage: tomodrgn convergence_nn [-h] [--max-epoch MAX_EPOCH] [--include-dc] [--fsc-mask {none,sphere,tight,soft}] training_directory reference_volume Assess convergence of a decoder-only network relative to an external volume by FSC positional arguments: training_directory train_nn directory containing reconstruct.N.mrc reference_volume volume against which to calculate FSC options: -h, --help show this help message and exit --max-epoch MAX_EPOCH Maximum epoch for which to calculate FSCs (default: None) --include-dc Include FSC calculation for DC component, default False because DC component default excluded during training (default: False) --fsc-mask {none,sphere,tight,soft} Type of mask applied to volumes before calculating FSC (default: soft) -

Train a VAE to simultaneously learn a latent heterogeneity landscape and a volume-generative decoder:

tomodrgn train_vae particles_imageseries.star --zdim 8 --outdir 02_vae_z8 --num-epochs 30Click to see all options:

tomodrgn train_vae --helpusage: tomodrgn train_vae [-h] -o OUTDIR --zdim ZDIM [--load WEIGHTS.PKL] [--checkpoint CHECKPOINT] [--log-interval LOG_INTERVAL] [-v] [--seed SEED] [--pose POSE] [--ctf CTF] [--ind PKL] [--uninvert-data] [--no-window] [--window-r WINDOW_R] [--datadir DATADIR] [--lazy] [--Apix APIX] [--recon-tilt-weight] [--recon-dose-weight] [--dose-override DOSE_OVERRIDE] [--l-dose-mask] [--sample-ntilts SAMPLE_NTILTS] [--sequential-tilt-sampling] [-n NUM_EPOCHS] [-b BATCH_SIZE] [--wd WD] [--lr LR] [--beta BETA] [--beta-control BETA_CONTROL] [--norm NORM NORM] [--no-amp] [--multigpu] [--enc-layers-A QLAYERSA] [--enc-dim-A QDIMA] [--out-dim-A OUT_DIM_A] [--enc-layers-B QLAYERSB] [--enc-dim-B QDIMB] [--enc-mask ENC_MASK] [--pooling-function {concatenate,max,mean,median,set_encoder}] [--num-seeds NUM_SEEDS] [--num-heads NUM_HEADS] [--layer-norm] [--dec-layers PLAYERS] [--dec-dim PDIM] [--l-extent L_EXTENT] [--pe-type {geom_ft,geom_full,geom_lowf,geom_nohighf,linear_lowf,gaussian,none}] [--feat-sigma FEAT_SIGMA] [--pe-dim PE_DIM] [--activation {relu,leaky_relu}] [--num-workers NUM_WORKERS] [--prefetch-factor PREFETCH_FACTOR] [--persistent-workers] [--pin-memory] particles Train a VAE for heterogeneous reconstruction with known pose for tomography data positional arguments: particles Input particles (.mrcs, .star, .cs, or .txt) options: -h, --help show this help message and exit -o OUTDIR, --outdir OUTDIR Output directory to save model (default: None) --zdim ZDIM Dimension of latent variable (default: None) --load WEIGHTS.PKL Initialize training from a checkpoint (default: None) --checkpoint CHECKPOINT Checkpointing interval in N_EPOCHS (default: 1) --log-interval LOG_INTERVAL Logging interval in N_PTCLS (default: 200) -v, --verbose Increases verbosity (default: False) --seed SEED Random seed (default: 18828) --pose POSE Optionally override star file poses with cryodrgn-format pose.pkl (default: None) --ctf CTF Optionally override star file CTF with cryodrgn-format ctf.pkl (default: None) Dataset loading: --ind PKL Filter particle stack by these indices (default: None) --uninvert-data Do not invert data sign (default: True) --no-window Turn off real space windowing of dataset (default: True) --window-r WINDOW_R Windowing radius (default: 0.85) --datadir DATADIR Path prefix to particle stack if loading relative paths from a .star or .cs file (default: None) --lazy Lazy loading if full dataset is too large to fit in memory (Should copy dataset to SSD) (default: False) --Apix APIX Override A/px from input starfile; useful if starfile does not have _rlnDetectorPixelSize col (default: 1.0) --recon-tilt-weight Weight reconstruction loss by cosine(tilt_angle) (default: False) --recon-dose-weight Weight reconstruction loss per tilt per pixel by dose dependent amplitude attenuation (default: False) --dose-override DOSE_OVERRIDE Manually specify dose in e- / A2 / tilt (default: None) --l-dose-mask Do not train on frequencies exposed to > 2.5x critical dose. Training lattice is intersection of this with --l-extent (default: False) --sample-ntilts SAMPLE_NTILTS Number of tilts to sample from each particle per epoch. Default: min(ntilts) from dataset (default: None) --sequential-tilt-sampling Supply particle images of one particle to encoder in starfile order (default: False) Training parameters: -n NUM_EPOCHS, --num-epochs NUM_EPOCHS Number of training epochs (default: 20) -b BATCH_SIZE, --batch-size BATCH_SIZE Minibatch size (default: 1) --wd WD Weight decay in Adam optimizer (default: 0) --lr LR Learning rate in Adam optimizer. Should co-scale linearly with batch size. (default: 0.0002) --beta BETA Choice of beta schedule or a constant for KLD weight (default: None) --beta-control BETA_CONTROL KL-Controlled VAE gamma. Beta is KL target (default: None) --norm NORM NORM Data normalization as shift, 1/scale (default: 0, std of dataset) (default: None) --no-amp Disable use of mixed-precision training (default: False) --multigpu Parallelize training across all detected GPUs (default: False) Encoder Network: --enc-layers-A QLAYERSA Number of hidden layers for each tilt (default: 3) --enc-dim-A QDIMA Number of nodes in hidden layers for each tilt (default: 256) --out-dim-A OUT_DIM_A Number of nodes in output layer of encA == ntilts * number of nodes input to encB (default: 128) --enc-layers-B QLAYERSB Number of hidden layers encoding merged tilts (default: 3) --enc-dim-B QDIMB Number of nodes in hidden layers encoding merged tilts (default: 512) --enc-mask ENC_MASK Circular mask of image for encoder (default: D/2; -1 for no mask) (default: None) --pooling-function {concatenate,max,mean,median,set_encoder} Function used to pool features along ntilts dimension after encA (default: concatenate) Set transformer args: --num-seeds NUM_SEEDS number of seeds for PMA (default: 1) --num-heads NUM_HEADS number of heads for multi head attention blocks (default: 4) --layer-norm whether to apply layer normalization in the set transformer block (default: False) Decoder Network: --dec-layers PLAYERS Number of hidden layers (default: 3) --dec-dim PDIM Number of nodes in hidden layers (default: 256) --l-extent L_EXTENT Coordinate lattice size (if not using positional encoding) (default: 0.5) --pe-type {geom_ft,geom_full,geom_lowf,geom_nohighf,linear_lowf,gaussian,none} Type of positional encoding (default: gaussian) --feat-sigma FEAT_SIGMA Scale for random Gaussian features (default: 0.5) --pe-dim PE_DIM Num features in positional encoding (default: None) --activation {relu,leaky_relu} Activation (default: relu) Dataloader arguments: --num-workers NUM_WORKERS Number of workers to use when batching particles for training. Has moderate impact on epoch time (default: 0) --prefetch-factor PREFETCH_FACTOR Number of particles to prefetch per worker for training. Has moderate impact on epoch time (default: 2) --persistent-workers Whether to persist workers after dataset has been fully consumed. Has minimal impact on run time (default: False) --pin-memory Whether to use pinned memory for dataloader. Has large impact on epoch time. Recommended. (default: False) -

Assess convergence of a VAE model after 30 epochs training using internal / self-consistency heuristics:

tomodrgn convergence_vae 02_vae_z8 29Click to see all options:

tomodrgn convergence_vae --helpusage: tomodrgn convergence_vae [-h] [-o OUTDIR] [--epoch-interval EPOCH_INTERVAL] [--force-umap-cpu] [--subset SUBSET] [--random-seed RANDOM_SEED] [--random-state RANDOM_STATE] [--n-epochs-umap N_EPOCHS_UMAP] [--skip-umap] [--n-bins N_BINS] [--smooth SMOOTH] [--smooth-width SMOOTH_WIDTH] [--pruned-maxima PRUNED_MAXIMA] [--radius RADIUS] [--final-maxima FINAL_MAXIMA] [--Apix APIX] [--flip] [--invert] [-d DOWNSAMPLE] [--cuda CUDA] [--skip-volgen] [--ground-truth GROUND_TRUTH [GROUND_TRUTH ...]] [--max-threads MAX_THREADS] [--thresh THRESH] [--dilate DILATE] [--dist DIST] workdir epoch Assess convergence and training dynamics of a heterogeneous VAE network positional arguments: workdir Directory with tomoDRGN results epoch Latest epoch number N to analyze convergence (0-based indexing, corresponding to z.N.pkl, weights.N.pkl), "latest" for last detected epoch options: -h, --help show this help message and exit -o OUTDIR, --outdir OUTDIR Output directory for convergence analysis results (default: [workdir]/convergence.[epoch]) (default: None) --epoch-interval EPOCH_INTERVAL Interval of epochs between calculating most convergence heuristics (default: 5) UMAP calculation arguments: --force-umap-cpu Override default UMAP GPU-bound implementation via cuML to use umap-learn library instead (default: False) --subset SUBSET Max number of particles to be used for UMAP calculations. 'None' means use all ptcls (default: 50000) --random-seed RANDOM_SEED Manually specify the seed used for selection of subset particles (default: None) --random-state RANDOM_STATE Random state seed used by UMAP for reproducibility at slight cost of performance (default 42, None means slightly faster but non-reproducible) (default: 42) --n-epochs-umap N_EPOCHS_UMAP Number of epochs to train the UMAP embedding via cuML for a given z.pkl, as described in the cuml.UMAP documentation (default: 25000) --skip-umap Skip UMAP embedding. Requires that UMAP be precomputed for downstream calcs. Useful for tweaking volume generation settings. (default: False) Sketching UMAP via local maxima arguments: --n-bins N_BINS the number of bins along UMAP1 and UMAP2 (default: 30) --smooth SMOOTH smooth the 2D histogram before identifying local maxima (default: True) --smooth-width SMOOTH_WIDTH width of gaussian kernel for smoothing 2D histogram expressed as multiple of one bin's width (default: 1.0) --pruned-maxima PRUNED_MAXIMA prune poorly-separated maxima until this many maxima remain (default: 12) --radius RADIUS distance at which two maxima are considered poorly-separated and are candidates for pruning (euclidean distance in bin-space) (default: 5.0) --final-maxima FINAL_MAXIMA select this many local maxima, sorted by highest bin count after pruning, for which to generate volumes (default: 10) Volume generation arguments: --Apix APIX A/pix of output volume (default: 1.0) --flip Flip handedness of output volume (default: False) --invert Invert contrast of output volume (default: False) -d DOWNSAMPLE, --downsample DOWNSAMPLE Downsample volumes to this box size (pixels). Recommended for boxes > 250-300px (default: None) --cuda CUDA Specify cuda device for volume generation (default: None) --skip-volgen Skip volume generation. Requires that volumes already exist for downstream CC + FSC calcs (default: False) --ground-truth GROUND_TRUTH [GROUND_TRUTH ...] Relative path containing wildcards to ground_truth_vols*.mrc for map-map CC calcs (default: None) Mask generation arguments: --max-threads MAX_THREADS Max number of threads used to parallelize mask generation (default: 8) --thresh THRESH Float, isosurface at which to threshold mask; default None uses 50th percentile (default: None) --dilate DILATE Number of voxels to dilate thresholded isosurface outwards from mask boundary (default: 3) --dist DIST Number of voxels over which to apply a soft cosine falling edge from dilated mask boundary (default: 10) -

Run standard analysis of a VAE model after 30 epochs training (PCA and UMAP of latent space, volume generation along PC's, k-means sampling of latent space and generation of corresponding volumes):

tomodrgn analyze 02_vae_z8 29 --Apix 3.5Click to see all options:

tomodrgn analyze --helpusage: tomodrgn analyze [-h] [--device DEVICE] [-o OUTDIR] [--skip-vol] [--skip-umap] [--Apix APIX] [--flip] [-d DOWNSAMPLE] [--pc PC] [--pc-ondata] [--ksample KSAMPLE] [--invert] workdir epoch Visualize latent space and generate volumes positional arguments: workdir Directory with tomoDRGN results epoch Epoch number N to analyze (0-based indexing, corresponding to z.N.pkl, weights.N.pkl) options: -h, --help show this help message and exit --device DEVICE Optionally specify CUDA device (default: None) -o OUTDIR, --outdir OUTDIR Output directory for analysis results (default: [workdir]/analyze.[epoch]) (default: None) --skip-vol Skip generation of volumes (default: False) --skip-umap Skip running UMAP (default: False) Extra arguments for volume generation: --Apix APIX Pixel size to add to .mrc header (default: 1 A/pix) --flip Flip handedness of output volumes (default: False) -d DOWNSAMPLE, --downsample DOWNSAMPLE Downsample volumes to this box size (pixels) (default: None) --pc PC Number of principal component traversals to generate (default: 2) --pc-ondata Find closest on-data latent point to each PC percentile (default: False) --ksample KSAMPLE Number of kmeans samples to generate (default: 20) --invert Invert contrast of output volumes (default: False) -

Interactive analysis of a VAE model: run

jupyter notebookto open02_vae_z8/analyze.29/tomoDRGN_viz+filt_template.ipynbgenerated bytomodrgn analyze. Note: certain widget features used in the notebook are only available in jupyter notebook, not jupyter lab. Click here for an example of one type of interactive visualization.Click to see two ways to run jupyter notebook

To run a local instance of Jupyter Notebook (e.g. you are viewing a monitor directly connected to a computer with direct access to the filesystem containing 02_vae_z8_box256 and the tomodrgn conda environment):jupyter notebookTo run a remote instance of Jupyter Notebook (e.g. you are viewing a monitor NOT connected to a computer with direct access to the filesystem containing 02_vae_z8_box256 and the tomodrgn conda environment, perhaps having run tomodrgn on a remote cluster):

# In one terminal window, set up port forwarding to your local machine ssh -t -t username@cluster-head-node -L 8888:localhost:8888 ssh active-worker-node -L 8888:localhost:8888 # In a second terminal window connected to your remote system, launch the notebook jupyter notebook --no-browser --port 8888 -

Filter a star file (imageseries or volumeseries) by particle indices identified and written by

tomoDRGN_viz+filt_template.ipynb:tomodrgn filter_star particles.star --ind ind.pkl --outstar particles_filt.starClick to see all options:

tomodrgn filter_star --helpusage: tomodrgn filter_star [-h] [--tomo-id-col TOMO_ID_COL] [--ptcl-id-col PTCL_ID_COL] [--starfile-source {warp,relion31}] [--ind IND] [--ind-type {particle,image}] [--tomogram TOMOGRAM] [--action {keep,drop}] -o O input Filter a .star file generated by Warp subtomogram export positional arguments: input Input .star file options: -h, --help show this help message and exit --tomo-id-col TOMO_ID_COL Name of column in input starfile with unique values per tomogram (default: _rlnMicrographName) --ptcl-id-col PTCL_ID_COL Name of column in input starfile with unique values per particle, if `index` then each row is treated as a unique particle (default: _rlnGroupName) --starfile-source {warp,relion31} Software that created starfile; used to identify particles data block (default: warp) --ind IND selected indices array (.pkl) (default: None) --ind-type {particle,image} use indices to filter by particle or by individual image (default: particle) --tomogram TOMOGRAM optionally select by individual tomogram name (if `all` then writes individual star files per tomogram (default: None) --action {keep,drop} keep or remove particles associated with ind.pkl (default: keep) -o O Output .star file (default: None) -

Generate 3-D volumes from (per-particle) unique positions in latent space:

tomodrgn eval_vol 02_vae_z8/weights.pkl --config 02_vae_z8/config.pkl -o 02_vae_z8/tomogram_vols --zfile 02_vae_z8/z.pkl --downsample 64. This command can benefit from per-tomomgramz.pklfiltering withintomoDRGN_viz+filt_template.ipynbto create volumes for all particles associated with a particular tomogram.Click to see all options:

tomodrgn eval_vol --helpusage: tomodrgn eval_vol [-h] [-w WEIGHTS] -c CONFIG -o O [--prefix PREFIX] [--no-amp] [-b BATCH_SIZE] [--multigpu] [-v] [-z [Z ...]] [--z-start [Z_START ...]] [--z-end [Z_END ...]] [-n N] [--zfile ZFILE] [--Apix APIX] [--flip] [--invert] [-d DOWNSAMPLE] Evaluate the decoder at specified values of z options: -h, --help show this help message and exit -w WEIGHTS, --weights WEIGHTS Model weights from train_vae (default: None) -c CONFIG, --config CONFIG config.pkl file from train_vae (default: None) -o O Output .mrc or directory (default: None) --prefix PREFIX Prefix when writing out multiple .mrc files (default: vol_) --no-amp Disable use of mixed-precision training (default: False) -b BATCH_SIZE, --batch-size BATCH_SIZE Batch size to parallelize volume generation (32-64 works well for box64 volumes) (default: 1) --multigpu Parallelize training across all detected GPUs. Specify GPUs i,j via `export CUDA_VISIBLE_DEVICES=i,j` (default: False) -v, --verbose Increases verbosity (default: False) Specify z values: -z [Z ...] Specify one z-value (default: None) --z-start [Z_START ...] Specify a starting z-value (default: None) --z-end [Z_END ...] Specify an ending z-value (default: None) -n N Number of structures between [z_start, z_end] (default: 10) --zfile ZFILE Text/.pkl file with z-values to evaluate (default: None) Volume arguments: --Apix APIX Pixel size to add to output .mrc header (default: 1) --flip Flip handedness of output volume (default: False) --invert Invert contrast of output volume (default: False) -d DOWNSAMPLE, --downsample DOWNSAMPLE Downsample volumes to this box size (pixels) (default: None) -

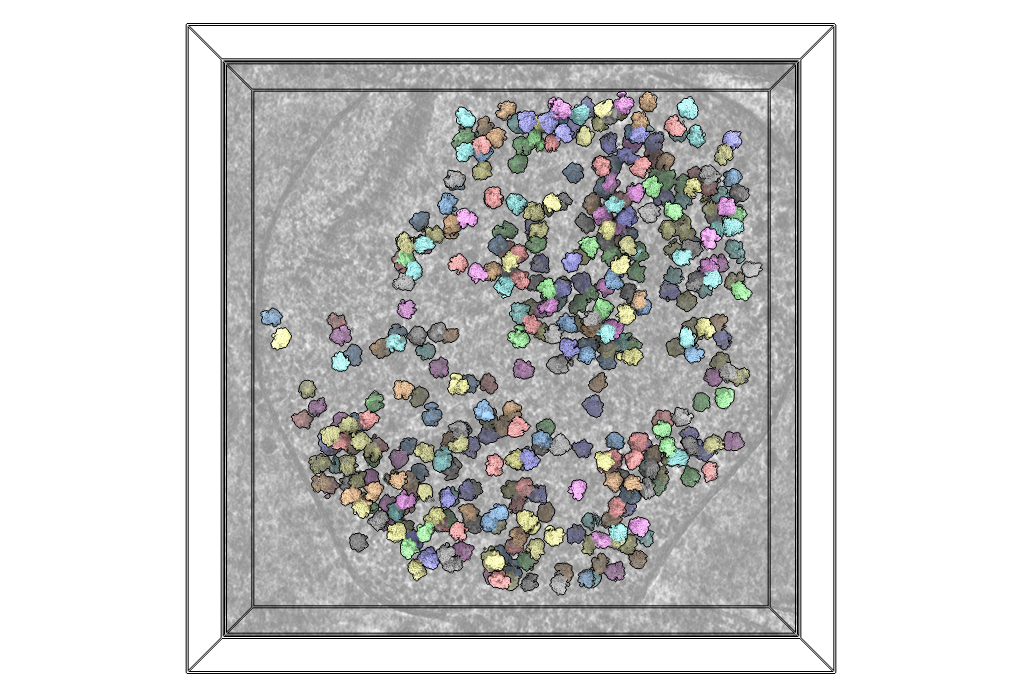

Write a ChimeraX script to place tomoDRGN-generated individual particle volumes in the spatial context of a chosen tomogram with optional color mapping:

tomodrgn subtomo2chimerax particles_volumeseries.star -o mytomogram_tomodrgnvols.cxs --tomoname mytomogram.tomostar --vols-dir 02_vae_z8/tomogram_vols --coloring-labels 02_vae_z8/analyze.29/kmeans20/labels.pklClick to see all options:

tomodrgn subtomo2chimerax --helpusage: tomodrgn subtomo2chimerax [-h] -o OUTFILE [--tomoname TOMONAME] [--vols-dir VOLS_DIR] [--vol-path VOL_PATH] [--ind IND] [--tomo-id-col-override TOMO_ID_COL_OVERRIDE] [--star-apix-override STAR_APIX_OVERRIDE] [--vols-apix-override VOLS_APIX_OVERRIDE] [--vols-render-level VOLS_RENDER_LEVEL] [--coloring-labels COLORING_LABELS] [--matplotlib-colormap MATPLOTLIB_COLORMAP] starfile Create a .cxs script for ChimeraX to arrange uniquely generated tomoDRGN volumes into tomogram with optional label-based color mapping Adapted from relionsubtomo2ChimeraX.py, written by Huy Bui, McGill University, doi: https://doi.org/10.5281/zenodo.6820119 positional arguments: starfile Input particle_volumeseries starfile from Warp subtomogram export options: -h, --help show this help message and exit -o OUTFILE, --outfile OUTFILE Output .cxc script to be opened in ChimeraX (default: None) --tomoname TOMONAME Name of tomogram in input starfile for which to display tomoDRGN vols in ChimeraX (default: None) --vols-dir VOLS_DIR Path to tomoDRGN reconstructed volumes (default: None) --vol-path VOL_PATH Path to single consensus volume (instead of tomoDRGN volumes) (default: None) --ind IND Ind.pkl used in training run that produced volumes in vols-dir (if applicable) (default: None) --tomo-id-col-override TOMO_ID_COL_OVERRIDE Name of column in input starfile to filter by --tomoname (default: None) --star-apix-override STAR_APIX_OVERRIDE Override pixel size of input particle_volumeseries starfile (A/px) (default: None) --vols-apix-override VOLS_APIX_OVERRIDE Override pixel size of tomoDRGN-reconstructed particle volumes (A/px) (default: None) ChimeraX rendering options: --vols-render-level VOLS_RENDER_LEVEL Isosurface level to render all tomoDRGN reconstructed volumes in ChimeraX (default: 0.7) --coloring-labels COLORING_LABELS labels.pkl file used to assign colors to each volume (typically kmeans labels.pkl (default: None) --matplotlib-colormap MATPLOTLIB_COLORMAP Colormap to apply to --coloring-labels (default = ChimeraX color scheme per label value) from https://matplotlib.org/stable/tutorials/colors/colormaps.html (e.g. tab10) (default: None) -

Generate latent embeddings for images using a pretrained model:

tomodrgn eval_images particles_imageseries_new.star 02_vae_z8/weights.pkl -c 02_vae_z8/config.pkl --out-z 02_vae_z8/eval_images/z.pklClick to see all options:

tomodrgn eval_images --helpusage: tomodrgn eval_images [-h] [-w WEIGHTS] -c CONFIG --out-z PKL [--log-interval LOG_INTERVAL] [-b BATCH_SIZE] [-v] [--no-amp] [--datadir DATADIR] [--ind IND] [--lazy] [--uninvert-data] [--sequential-tilt-sampling] [--num-workers NUM_WORKERS] [--prefetch-factor PREFETCH_FACTOR] [--persistent-workers] [--pin-memory] particles Evaluate z for a stack of images using the config.pkl and weights.pkl of a pretrained model positional arguments: particles Input particles (.mrcs, .star, .cs, or .txt) options: -h, --help show this help message and exit -w WEIGHTS, --weights WEIGHTS Model weights (default: None) -c CONFIG, --config CONFIG config.pkl file from train_vae (default: None) --out-z PKL Output pickle for z (default: None) --log-interval LOG_INTERVAL Logging interval in N_IMGS (default: 1000) -b BATCH_SIZE, --batch-size BATCH_SIZE Minibatch size (default: 64) -v, --verbose Increases verbosity (default: False) --no-amp Disable use of automatic mixed precision (default: False) Override configuration values: --datadir DATADIR Path prefix to particle stack if loading relative paths from a .star or .cs file (default: None) --ind IND Filter particle stack by these indices (default: None) --lazy Lazy loading if full dataset is too large to fit in memory (default: False) --uninvert-data Do not invert data sign (default: True) --sequential-tilt-sampling Supply particle images of one particle to encoder in starfile order (default: False) Dataloader arguments: --num-workers NUM_WORKERS Number of workers to use when batching particles for training. Has moderate impact on epoch time (default: 0) --prefetch-factor PREFETCH_FACTOR Number of particles to prefetch per worker for training. Has moderate impact on epoch time (default: 2) --persistent-workers Whether to persist workers after dataset has been fully consumed. Has minimal impact on run time (default: False) --pin-memory Whether to use pinned memory for dataloader. Has large impact on epoch time. Recommended. (default: False) -

Find particle indices and latent embeddings most directly connecting chosen "anchor" particles in latent space:

tomodrgn graph_traversal 02_vae_z8/z.pkl --anchors 137 10 20 -o 02_vae_z8/graph_traversal/path.txt --out-z 02_vae_z8/graph_traversal/z.path.txtClick to see all options:

tomodrgn graph_traversal --helpusage: tomodrgn graph_traversal [-h] --anchors ANCHORS [ANCHORS ...] [--max-neighbors MAX_NEIGHBORS] [--avg-neighbors AVG_NEIGHBORS] [--batch-size BATCH_SIZE] [--max-images MAX_IMAGES] -o O --out-z OUT_Z data Find shortest path along nearest neighbor graph positional arguments: data Input z.pkl embeddings options: -h, --help show this help message and exit --anchors ANCHORS [ANCHORS ...] Index of anchor points (default: None) --max-neighbors MAX_NEIGHBORS --avg-neighbors AVG_NEIGHBORS --batch-size BATCH_SIZE --max-images MAX_IMAGES -o O Output .txt or .pkl file for path indices (default: None) --out-z OUT_Z Output .txt or .pkl file for path z-values (default: None)

Training requirements and limitations

TomoDRGN model training requires as inputs:

- a .mrcs stack containing the 2D pixel array of each particle extracted from each stage tilt micrograph, and

- a corresponding RELION3.0-format .star file describing pose and CTF parameters for each particle's tilt image as derived from traditional subtomogram averaging.

At the moment, the only tested workflow to generate these inputs is Warp+IMOD tomogram generation, STA using RELION 3.1 (and optionally M), followed by subtomogram extraction as image series in Warp (or M) (making use of the excellent dynamo2warp tools from Alister Burt). We are actively seeking to expand the range of validated workflows; please let us know if you are exploring a novel approach.

Additional details about required inputs for train_vae

TomoDRGN requires a number of specific metadata values to be supplied in the star file (a sample star file is provided at tomodrgn/testing/data/10076_both_32_sim.star). Users testing upstream workflows that do not conclude with 2D particle extraction in Warp/M should ensure their RELION 3.0 format star files contain the following headers:

_rlnGroupName: used to identify multiple tilt images associated with the same particle, with formattomogramID_particleIDas used in Warp (e.g.001_000004identifies particle 5 from tomogram 2)_rlnCtfScalefactor: used to calculate stage tilt of each image, equal tocosine(stage_tilt_radians)as defined in Warp_rlnCtfBfactor: used to calculate cumulative dose imparted to each image, equal todose_e-_per_A2 * -4as defined in Warp_rlnImageName: used to load image data'_rlnDetectorPixelSize','_rlnDefocusU', '_rlnDefocusV', '_rlnDefocusAngle', '_rlnVoltage', '_rlnSphericalAberration', '_rlnAmplitudeContrast', '_rlnPhaseShift': used to calculate the CTF and optimal dose_rlnAngleRot, _rlnAngleTilt, _rlnAnglePsi: used to calculate pose as defined in RELION_rlnOriginX, _rlnOriginY: used to calculate pose as defined in RELION (optional, Warp re-centers upon particle extraction and therefore does not have these headers by default)- tomoDRGN ignores but maintains all other headers, notably allowing interactive post-training analysis to probe per-particle correlations with user-defined metadata

Changelog

-

v0.2.2

- Changes

- Subtomo2chimerax now writes a text file summarizing which models are associated with each label and color

- Made jupyter notebook interactive particle viewer UI layout more flexible

- Bugfixes

- Fixed several jupyter notebook bugs including reindexing prefiltered indices when saving new subset indices, reduced memory usage, interactive particle viewer colormap generation and assignment

- Fixed FSC calculation to properly handle Nyquist bin

- Fixed subtomo2chimerax alignment of coloring labels with volume indices

- Changes

-

v0.2.1

- New features

- Added

--dose-overrideoption when loading images to manually specify dose in e-/A2/image - Added

--starfile-sourceoption tofilter_star.pyto allow filtering RELION3.1-format star files from RELION - Added

--write-tiltseries-starfileoption todownsample.pyto write RELION3.0-format star file to downsampled images - Added multiple options for pooling tilt images between

train_vae.pyencoder A and encoder B per particle - Exposed pytorch dataloader options to user in

train_vae.py,train_nn.py,eval_images.py convergence_nn.pysaves FSCs calculated per epoch to pkl file- Added

--batch-sizetoeval_vol.pycalling parallelizedmodels.FTPositionalDecoder.gen_volume_batchfor accelerated volume generation - Added

--vol-pathtosubtomo2chimerax.pyallowing use of consensus reconstruction instead of unique tomodrgn volumes - Added new

quicktest.pyandunittest.pyinterface - Added

loadmethod usingconfig.pklfile to easily createdataset.TiltSeriesMRCDataobject

- Added

- Changes

- Faster dataset loading with improved file I/O

- Dataset preprocessing refactored to numpy arrays

- Decreased memory utilization in

dataset.TiltSeriesMRCDatapreprocessing - Decreased memory utilization in

starfile.TiltSeriesStarfileinitialization - Decreased memory utilization by gaussian positional encoding

- Changed default positional encoding to

gaussian - Changed default latent dimensionality to

--zdim 128 train_vae.pychecks for NaN/inf during checkpoint evaluationutils.calc_fscallows input volumes to be either paths to .mrc files or direct numpy arrays- New plots in

convergence_vae.pyincluding all loss types, all-to-all volumetric correlation coefficients among tomodrgn (and optionally ground truth) volumes - Changed default

subtomo2chimerax.pycolor map to ChimeraX color scheme - Added requirement for pytorch>=1.11

- Bugfixes

- Fixed syntax of internal calls to

eval_vol.py - Fixed dataset index filtering of pre-filtered datasets

- Added assert for convergence scripts that requisite input files exist

- Fixed bug where

config.pklcontaining CUDA tensors could not be loaded on cpu - Fixed bug where precalculated

normwould be ignored when loading dataset fromconfig.pklwith--lazy

- Fixed syntax of internal calls to

- New features

-

v0.2.0

- Features

- added support for datasets with variable tilts per tomogram (tilt counts/schemes/etc)

- new scripts

subtomo2chimeraxandfilter_star - additional features and checks in

tomoDRGN_viz+filt.ipynb eval_imagessupportsz.pkloutput;eval_volsupportsz.pklinput- new tests

unittest.shandquicktest.sh - validated compatibility with python 3.7 - 3.10 and pytorch 1.8 - 1.12

- Changes

- refactored the majority of codebase for explicit internal tomoDRGN compatibility and some performance improvements

- updated tomoDRGN install requirements and changed to

pip-based installation --ampis now enabled by default and can be disabled with--no-amp--do-dose-weighting,--do-tilt-weighting,--dose-maskhave been renamed to--recon-dose-weight,--recon-tilt-weight,--l-dose-mask, respectivelytomodrgn downsamplenow benefits from pytorch-based GPU acceleration- updated training hyperparameter defaults

- (many) various bugfixes

- Features

-

v0.1.0

- initial tomoDRGN release

Relevant literature

- Powell, B.M., Davis, J.H. Learning structural heterogeneity from cryo-electron sub-tomograms with tomoDRGN. bioRxiv

- Zhong, E.D., Bepler, T., Berger, B. & Davis, J.H. CryoDRGN: Reconstruction of Heterogeneous cryo-EM Structures Using Neural Networks. Nature Methods, doi:10.1038/s41592-020-01049-4 (2021)

- Kinman, L.F., Powell, B.M., Zhong, E.D. et al. Uncovering structural ensembles from single-particle cryo-EM data using cryoDRGN. Nat Protoc 18, 319–339 (2023). https://doi.org/10.1038/s41596-022-00763-x

- Sun, J., Kinman, L., Jahagirdar, D., Ortega, J., Davis. J. KsgA facilitates ribosomal small subunit maturation by proofreading a key structural lesion. bioRxiv, doi:10.1101/2022.07.13.499473 (2022)

Contact

Please reach out with bug reports, feature requests, etc to bmp[at]mit[dot]edu.