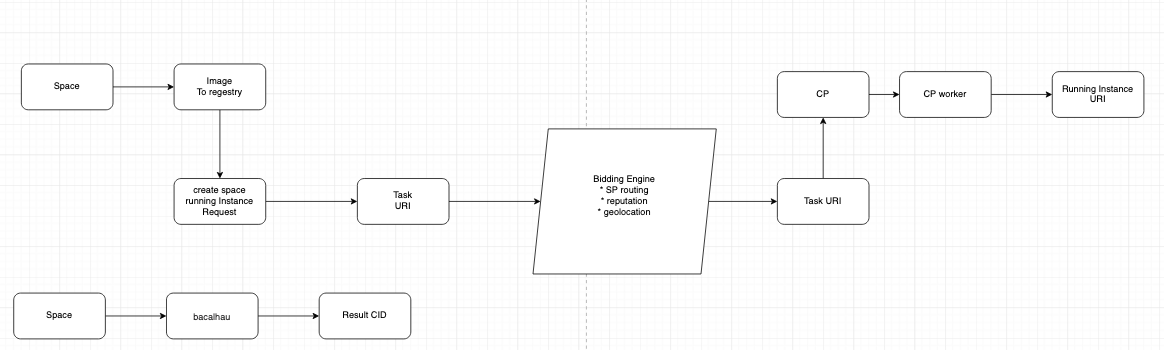

The build runs as a pipeline, and a task moves from one state to the next as each build step completes.

Standard build process:

- Webhook trigger

- Task creation

- Task details from CID

- Download the Space

- Make build

- Push to the remote docker hub

- Clean up

  - build file

  - local cache

  - images
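The steps above can be read as an ordered set of states. The following is a minimal sketch of that idea, not the project's actual implementation: the `BuildState` names, `next_state`, and `run_pipeline` are hypothetical and chosen only to mirror the list.

```python
from enum import Enum
from typing import List, Optional


class BuildState(Enum):
    """Hypothetical state names, one per step in the standard build process."""
    WEBHOOK_TRIGGERED = 1
    TASK_CREATED = 2
    TASK_DETAILS_FETCHED = 3   # task details from CID
    SPACE_DOWNLOADED = 4
    IMAGE_BUILT = 5
    IMAGE_PUSHED = 6           # pushed to the remote Docker Hub
    CLEANED_UP = 7             # build file, local cache, images removed


def next_state(state: BuildState) -> Optional[BuildState]:
    """Return the state that follows `state`, or None after the final step."""
    members = list(BuildState)  # Enum iteration preserves definition order
    index = members.index(state)
    return members[index + 1] if index + 1 < len(members) else None


def run_pipeline() -> List[str]:
    """Walk the states in order and return the names that were visited."""
    visited = []
    state: Optional[BuildState] = BuildState.WEBHOOK_TRIGGERED
    while state is not None:
        visited.append(state.name)
        state = next_state(state)
    return visited
```

Calling `run_pipeline()` returns the state names in the same order as the list. A real pipeline would also need failure handling, which this sketch leaves out.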

Install and Start Redis

docker run -d --name computing_provider -p 6379:6379 redis/redis-stack-server:latest

Computing provider

git clone https://github.com/lagrangedao/computing-provider

cp .env_sample .env

For variables in the .env file

MCS_API_KEY=<From multichain.storage>

MCS_ACCESS_TOKEN=<From multichain.storage>

MCS_BUCKET=<Your bucket name in multichain.storage>

FILE_CACHE_PATH=<Local folder for storing cached files, e.g. temp_file>

OUR_DOCKER_USERNAME=<Your Docker Hub username>

OUR_DOCKER_PASSWORD=<Your Docker Hub password>

OUR_DOCKER_EMAIL=<Your Docker Hub email>

Set up the config file

cp config/config_template.toml config/config.toml

For values in config.toml

api_url = "https://api.lagrangedao.org"

computing_provider_name = <Your computing provider name>

multi_address = "/ip4/<Your public ip>/tcp/<your port>"

lagrange_key = "xxxxx" <See the steps below for how to get it>

How to get lagrange_key

- Go to lagrangedao.org and connect your wallet

- Open https://lagrangedao.org/personal_center/setting/tokens, create a new token
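Before starting the node, the values in config.toml can be sanity-checked. The sketch below is illustrative only: `REQUIRED_KEYS`, `parse_multi_address`, and `missing_keys` are hypothetical helpers, not part of the computing-provider code base, and the check assumes `multi_address` follows the `/ip4/<ip>/tcp/<port>` form shown above.

```python
import ipaddress
from typing import Dict, List, Tuple

# Keys listed in this guide; the real template may contain more (assumption).
REQUIRED_KEYS = ("api_url", "computing_provider_name", "multi_address", "lagrange_key")


def parse_multi_address(value: str) -> Tuple[str, int]:
    """Split "/ip4/<ip>/tcp/<port>" into (ip, port); raise ValueError if malformed."""
    parts = value.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "ip4" or parts[2] != "tcp":
        raise ValueError(f"expected /ip4/<ip>/tcp/<port>, got {value!r}")
    ip = ipaddress.IPv4Address(parts[1])  # raises a ValueError subclass on a bad address
    port = int(parts[3])                  # raises ValueError on a non-numeric port
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return str(ip), port


def missing_keys(config: Dict[str, str]) -> List[str]:
    """Return the required keys that are absent or empty in `config`."""
    return [key for key in REQUIRED_KEYS if not config.get(key)]
```

On Python 3.11 or later, a dict of this shape can be obtained with `tomllib.load` on the opened config file, assuming the keys sit at the top level of the file rather than inside a table.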

Start the CP Node

sudo apt install -y gunicorn

pip install -r requirements.txt

gunicorn -c "python:config.gunicorn" --reload "computing_provider.app:create_app()"

You will see the following output if the node starts properly:

[2023-04-17 00:28:09 -0400] [916988] [INFO] Starting gunicorn 20.1.0

[2023-04-17 00:28:09 -0400] [916988] [INFO] Listening at: http://0.0.0.0:8000 (916988)

[2023-04-17 00:28:09 -0400] [916988] [INFO] Using worker: sync

[2023-04-17 00:28:09 -0400] [916989] [INFO] Booting worker with pid: 916989

[2023-04-17 00:28:09,602] INFO in node_service: Found key in .swan_node/private_key

[2023-04-17 00:28:09,604] INFO in boot: Node started: 0x601e25ab158ba1f7

Start a CP worker

sudo apt install -y python-celery-common

celery --app computing_provider.computing_worker.celery_app worker --loglevel "${CELERY_LOG_LEVEL:-INFO}"

Build all services:

docker-compose build

Start all services:

docker-compose up

Stop all services:

docker-compose stop