Cloud NeRF

This is the official implementation of Neural Radiance Fields with Points Cloud Latent Representation. [Paper][Project Page]

Instruction

- Download the dataset and arrange it as described in the Data section.

- We provide a Docker image of our environment for best reproducibility.

- The scene optimization scripts are described in the Train & Evaluation section.

Data

- We evaluate our framework on the forward-facing LLFF dataset, available on Google Drive.

- You also need to download the pre-trained MVS depth estimates, available on Google Drive.

- Our data folder is structured as follows:

├── datasets
│   ├── nerf_llff_data
│   │   ├── fern
│   │   │   ├── depths
│   │   │   ├── images
│   │   │   ├── images_4
│   │   │   ├── sparse
│   │   │   ├── colmap_depth.npy
│   │   │   ├── poses_bounds.npy
│   │   │   └── ...
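Before training, it can help to confirm that each scene folder matches the layout above. The following is a minimal sketch with hypothetical helper names (`missing_scene_entries`, `split_llff_row`) that are not part of this repo. It only checks the entries listed in the tree, and it assumes `poses_bounds.npy` follows the standard LLFF format of one 17-value row per image: a row-major 3x5 camera matrix (rotation, translation, and a height/width/focal column) followed by the near and far depth bounds.

```python
from pathlib import Path

# Entries expected inside each scene folder, taken from the tree above.
# Other files (the "..." entry) are not checked.
EXPECTED_DIRS = ("depths", "images", "images_4", "sparse")
EXPECTED_FILES = ("colmap_depth.npy", "poses_bounds.npy")


def missing_scene_entries(scene_dir):
    """Hypothetical helper: list expected entries missing from a scene folder."""
    scene = Path(scene_dir)
    missing = [d for d in EXPECTED_DIRS if not (scene / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (scene / f).is_file()]
    return missing


def split_llff_row(row):
    """Hypothetical helper: split one 17-value poses_bounds row.

    Assumes the standard LLFF layout: the first 15 values form a row-major
    3x5 matrix, the last 2 values are the (near, far) depth bounds.
    """
    if len(row) != 17:
        raise ValueError(f"expected 17 values, got {len(row)}")
    pose = [list(row[i * 5:(i + 1) * 5]) for i in range(3)]
    near, far = row[15], row[16]
    return pose, (near, far)


if __name__ == "__main__":
    import sys

    # Default path matches the container mount used in the Docker section.
    scene = sys.argv[1] if len(sys.argv) > 1 else "/workspace/datasets/nerf_llff_data/fern"
    missing = missing_scene_entries(scene)
    print("OK" if not missing else f"missing: {missing}")
```

For example, running the script with a scene path such as `/workspace/datasets/nerf_llff_data/fern` prints `OK` when all listed entries are present, or the names of the missing ones otherwise.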

Docker

- We provide the Docker image of our environment on Docker Hub.

- To create a Docker container from the image, run the following command:

docker run \
  --name ${CONTAINER_NAME} \
  --gpus all \
  --mount type=bind,source="${PATH_TO_SOURCE}",target="/workspace/source" \
  --mount type=bind,source="${PATH_TO_DATASETS}",target="/workspace/datasets/" \
  --shm-size=16GB \
  -it ${IMAGE_NAME}

Train & Evaluation

- To train from scratch, run the following command:

CUDA_VISIBLE_DEVICES=1 python train.py \
  --dataset_name llff \
  --root_dir /workspace/datasets/nerf_llff_data/${SCENE_NAME}/ \
  --N_importance 64 \
  --N_sample 64 \
  --img_wh 1008 756 \
  --num_epochs 10 \
  --batch_size 4096 \
  --optimizer adam \
  --lr 5e-3 \
  --lr_scheduler steplr \
  --decay_step 2 4 6 8 \
  --decay_gamma 0.5 \
  --exp_name ${EXP_NAME}

- To evaluate a checkpoint, run the following command:

CUDA_VISIBLE_DEVICES=1 python eval.py \
  --dataset_name llff \
  --root_dir /workspace/datasets/nerf_llff_data/${SCENE_NAME}/ \
  --N_importance 64 \
  --N_sample 64 \
  --img_wh 1008 756 \
  --weight_path ${PATH_TO_CHECKPOINT} \
  --split val
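The training flags above fix a learning-rate schedule and a per-batch sampling budget. The sketch below works out what they imply, under two assumptions not confirmed by this README: that `steplr` behaves like PyTorch's `MultiStepLR` (milestones from `--decay_step`, factor `--decay_gamma`, stepped once per epoch), as in nerf_pl, and that sampling follows the standard NeRF coarse/fine scheme. `steplr_lr` and `points_per_batch` are hypothetical helpers, not part of this repo.

```python
def steplr_lr(epoch, base_lr=5e-3, decay_steps=(2, 4, 6, 8), gamma=0.5):
    """Learning rate during a 0-indexed epoch, assuming MultiStepLR semantics:
    the base rate is multiplied by gamma once per milestone <= epoch."""
    n_decays = sum(1 for m in decay_steps if m <= epoch)
    return base_lr * gamma ** n_decays


def points_per_batch(batch_size=4096, n_samples=64, n_importance=64):
    """Network queries per batch, assuming standard NeRF hierarchical sampling:
    the coarse pass evaluates n_samples points per ray and the fine pass
    evaluates n_samples + n_importance points per ray."""
    coarse = batch_size * n_samples
    fine = batch_size * (n_samples + n_importance)
    return coarse, fine


if __name__ == "__main__":
    for epoch in range(10):  # --num_epochs 10
        print(f"epoch {epoch}: lr = {steplr_lr(epoch):.4g}")
    print("coarse/fine queries per batch:", points_per_batch())
```

Under these assumptions the rate halves at epochs 2, 4, 6 and 8, ending at 3.125e-4 for the last two epochs, and each 4096-ray batch issues 262,144 coarse and 524,288 fine point queries.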

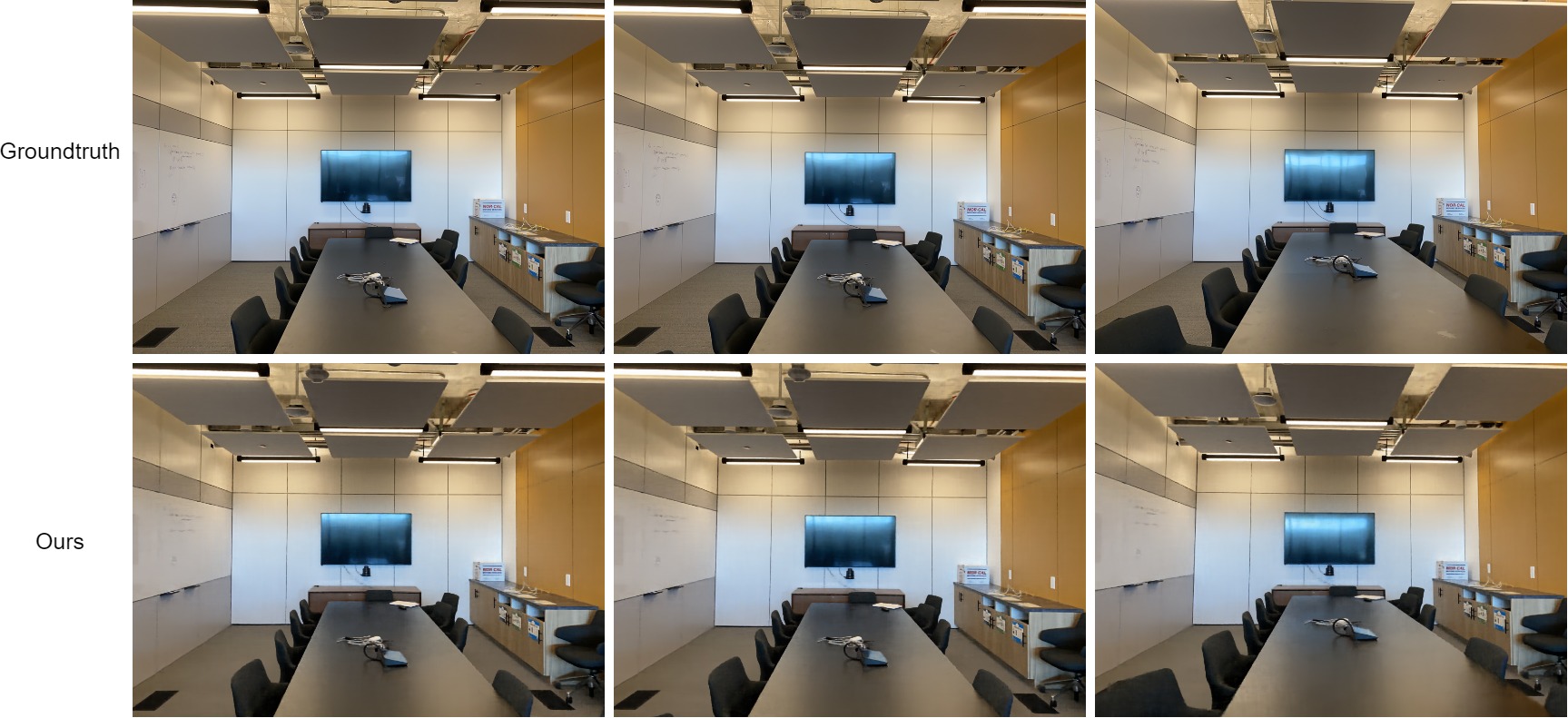

Visualization

- Visualization of the Fern scene

Acknowledgement

Our repo is based on nerf, nerf_pl, DCCDIF, and Pointnet2_PyTorch.