Contributors

This repository analyzes and compares video object detection models. It examines their design choices, differences, and performance, along with the challenges specific to detecting objects in video. We reviewed and implemented the papers listed below.

- The final report can be found here

- The slides (PPT) used in the video can be found here

- The YouTube video can be found here

Install the ImageAI library with pip before running the scripts:

pip install imageai

The RetinaNet, YOLOV, and TinyYOLOV weights can be downloaded from the official repository here.

Change into the Models directory:

cd Models

Run FirstVideoObjectDetection.py to detect objects in a video file, replacing file_name with the path to your video:

python FirstVideoObjectDetection.py file_name
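For orientation, here is a minimal sketch of how a script like FirstVideoObjectDetection.py could take file_name from the command line and run ImageAI's VideoObjectDetection on it. It is not the repository's script: the helper names and the weights filename yolov3.pt are assumptions, and the ImageAI calls follow the library's documented VideoObjectDetection usage (setModelTypeAsYOLOv3, setModelPath, loadModel, detectObjectsFromVideo).

```python
import sys
from pathlib import Path


def resolve_video_path(argv=None):
    """Return the video path given as the first CLI argument (file_name).

    Hypothetical helper: exits with a message if the argument is missing
    or does not point to an existing file.
    """
    argv = sys.argv if argv is None else argv
    if len(argv) < 2:
        raise SystemExit("usage: python FirstVideoObjectDetection.py file_name")
    path = Path(argv[1]).expanduser().resolve()
    if not path.is_file():
        raise SystemExit(f"video file not found: {path}")
    return path


def detect_video(video_path, weights_path="yolov3.pt"):
    """Run ImageAI video detection; 'yolov3.pt' is an assumed weights filename."""
    # Imported lazily so the argument handling above works without ImageAI.
    from imageai.Detection import VideoObjectDetection

    detector = VideoObjectDetection()
    detector.setModelTypeAsYOLOv3()
    detector.setModelPath(weights_path)
    detector.loadModel()
    # As in ImageAI's examples, output_file_path is given without an extension.
    return detector.detectObjectsFromVideo(
        input_file_path=str(video_path),
        output_file_path=str(video_path.with_name(video_path.stem + "_detected")),
        frames_per_second=20,
        log_progress=True,
    )
```

Saved as a script, it would end with `print(detect_video(resolve_video_path()))` under an `if __name__ == "__main__":` guard. Keeping path validation separate from detection lets a bad file_name fail fast, before the model weights are loaded.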

Show real-time detection statistics for a video file:

python gui.py file_name
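Real-time statistics of this kind come down to aggregating per-frame detections. The sketch below is not the repository's gui.py. It assumes detections arrive in the shape ImageAI passes to the per_frame_function callback of detectObjectsFromVideo, namely (frame_number, output_array, output_count), where output_array is a list of dicts with "name" and "percentage_probability" keys and output_count maps class names to counts. The DetectionStats class is hypothetical.

```python
from collections import Counter, defaultdict


class DetectionStats:
    """Hypothetical running statistics over per-frame detection results."""

    def __init__(self):
        self.frames = 0
        self.totals = Counter()               # total detections per class
        self.confidences = defaultdict(list)  # confidence scores per class

    def update(self, frame_number, output_array, output_count):
        """Same argument order as ImageAI's per_frame_function callback."""
        self.frames += 1
        self.totals.update(output_count)
        for det in output_array:
            self.confidences[det["name"]].append(det["percentage_probability"])

    def mean_per_frame(self, name):
        return self.totals[name] / self.frames if self.frames else 0.0

    def mean_confidence(self, name):
        scores = self.confidences.get(name, [])
        return sum(scores) / len(scores) if scores else 0.0

    def summary(self):
        """Per-class totals, average count per frame, and mean confidence."""
        return {
            name: {
                "total": count,
                "per_frame": round(self.mean_per_frame(name), 2),
                "mean_conf": round(self.mean_confidence(name), 2),
            }
            for name, count in self.totals.most_common()
        }


if __name__ == "__main__":
    stats = DetectionStats()
    stats.update(1, [{"name": "car", "percentage_probability": 90.0},
                     {"name": "person", "percentage_probability": 70.0}],
                 {"car": 1, "person": 1})
    stats.update(2, [{"name": "car", "percentage_probability": 80.0}], {"car": 1})
    print(stats.summary())
    # {'car': {'total': 2, 'per_frame': 1.0, 'mean_conf': 85.0},
    #  'person': {'total': 1, 'per_frame': 0.5, 'mean_conf': 70.0}}
```

To feed it live results, pass stats.update as the per_frame_function argument of detectObjectsFromVideo; a GUI can then redraw from summary() at whatever rate it refreshes.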

Run the selected model for real-time detection on camera input:

python CamDetect.py
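Selecting a model at run time mostly means calling the matching ImageAI setter before loading the weights. This sketch assumes the README's YOLOV and TinyYOLOV correspond to ImageAI's YOLOv3 and TinyYOLOv3 model types; the MODEL_SETTERS table, the configure_detector and run_camera helpers, and the weights filename tiny-yolov3.pt are hypothetical and not taken from CamDetect.py. Camera frames reach the detector through detectObjectsFromVideo's camera_input argument, using an OpenCV VideoCapture.

```python
# Hypothetical mapping from a model name to ImageAI's model-type setters.
MODEL_SETTERS = {
    "retinanet": "setModelTypeAsRetinaNet",
    "yolov3": "setModelTypeAsYOLOv3",
    "tinyyolov3": "setModelTypeAsTinyYOLOv3",
}


def configure_detector(detector, model_name, weights_path):
    """Set the model type, point the detector at its weights, and load it."""
    try:
        setter = MODEL_SETTERS[model_name.lower()]
    except KeyError:
        raise ValueError(
            f"unknown model {model_name!r}; choose from {sorted(MODEL_SETTERS)}"
        ) from None
    getattr(detector, setter)()
    detector.setModelPath(weights_path)
    detector.loadModel()
    return detector


def run_camera(model_name="tinyyolov3", weights_path="tiny-yolov3.pt"):
    """Detect objects on the default webcam; the weights filename is assumed."""
    # Lazy imports: OpenCV and ImageAI are only needed for live capture.
    import cv2
    from imageai.Detection import VideoObjectDetection

    camera = cv2.VideoCapture(0)  # device 0 is usually the built-in webcam
    detector = configure_detector(VideoObjectDetection(), model_name, weights_path)
    try:
        detector.detectObjectsFromVideo(
            camera_input=camera,
            output_file_path="camera_detected",
            frames_per_second=20,
            log_progress=True,
        )
    finally:
        camera.release()
```

Calling run_camera("retinanet", path_to_weights) would switch models without touching the capture code. TinyYOLOv3 is the default here only because lighter models are the usual choice when frame rate matters.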

To install the VSTAM object detector, clone its repository and install Detectron2:

git clone https://github.com/Malik1998/VSTAM.git

python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'
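Once the installs finish, a quick way to confirm the dependencies are importable is to look them up with importlib. This is a small convenience sketch, not part of VSTAM or this repository; it checks the import names imageai and detectron2, and the helper name missing_packages is hypothetical.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level import names not found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["imageai", "detectron2"])
    if missing:
        print("missing:", ", ".join(missing))
    else:
        print("all dependencies found")
```

find_spec only locates the package without executing it, so the check stays fast even for heavy libraries like Detectron2.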

Run the VSTAM evaluation on an example image (from the repository root, since the path starts with Models/):

python Models/vstam/evaluate.py

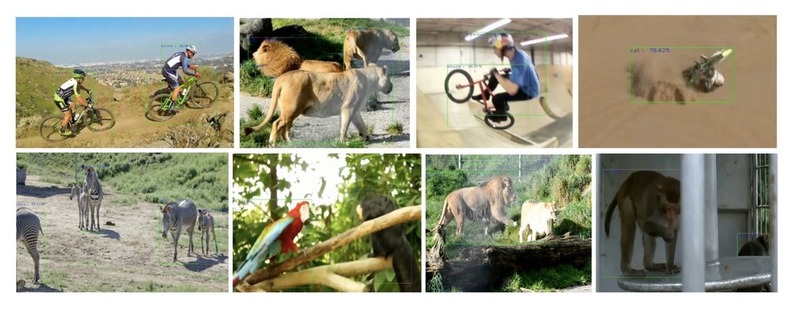

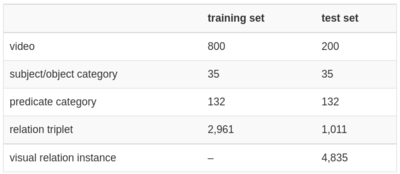

We used the ImageNet-VidVRD video visual relation dataset.

It contains 1,000 videos from ILSVRC2016-VID, split into 800 training and 200 test videos. The dataset covers 35 subject/object categories and 132 predicate categories. It was annotated by ten people and includes object trajectory and relation annotations.
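Each relation annotation links a subject trajectory and an object trajectory through a predicate over a frame interval. The sketch below turns one video's annotation into (subject, predicate, object) triplets. The field names (subject/objects, tid, category, relation_instances, subject_tid, object_tid, predicate, begin_fid, end_fid) are assumed to follow the JSON files released with ImageNet-VidVRD; check them against the actual files before relying on this. The sample annotation is made up.

```python
import json


def relation_triplets(annotation):
    """Return (subject, predicate, object, begin_fid, end_fid) tuples for one video."""
    # Map each trajectory id to its object category.
    categories = {obj["tid"]: obj["category"] for obj in annotation["subject/objects"]}
    return [
        (
            categories[rel["subject_tid"]],
            rel["predicate"],
            categories[rel["object_tid"]],
            rel["begin_fid"],
            rel["end_fid"],
        )
        for rel in annotation["relation_instances"]
    ]


if __name__ == "__main__":
    # Made-up annotation for illustration; real files also carry per-frame trajectories.
    sample = json.loads("""{
        "video_id": "example",
        "subject/objects": [{"tid": 0, "category": "dog"}, {"tid": 1, "category": "person"}],
        "relation_instances": [
            {"subject_tid": 0, "object_tid": 1, "predicate": "behind",
             "begin_fid": 0, "end_fid": 30}
        ]
    }""")
    for triplet in relation_triplets(sample):
        print(triplet)  # ('dog', 'behind', 'person', 0, 30)
```

Resolving trajectory ids to categories once per video keeps the relation loop a simple lookup, which matters when iterating over all 1,000 videos.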

Papers we reviewed and implemented:

- Mask R-CNN

- Temporal ROI Align for Video Object Recognition

- RetinaNet: Focal Loss for Dense Object Detection

- BoxMask

- YOLOV: Making Still Image Object Detectors Great at Video Object Detection

- TransVOD

- VSTAM: Video Sparse Transformer With Attention-Guided Memory for Video Object Detection

@inproceedings{shang2017video,
  author={Shang, Xindi and Ren, Tongwei and Guo, Jingfan and Zhang, Hanwang and Chua, Tat-Seng},
  title={Video Visual Relation Detection},
  booktitle={ACM International Conference on Multimedia},
  address={Mountain View, CA, USA},
  month={October},
  year={2017}
}