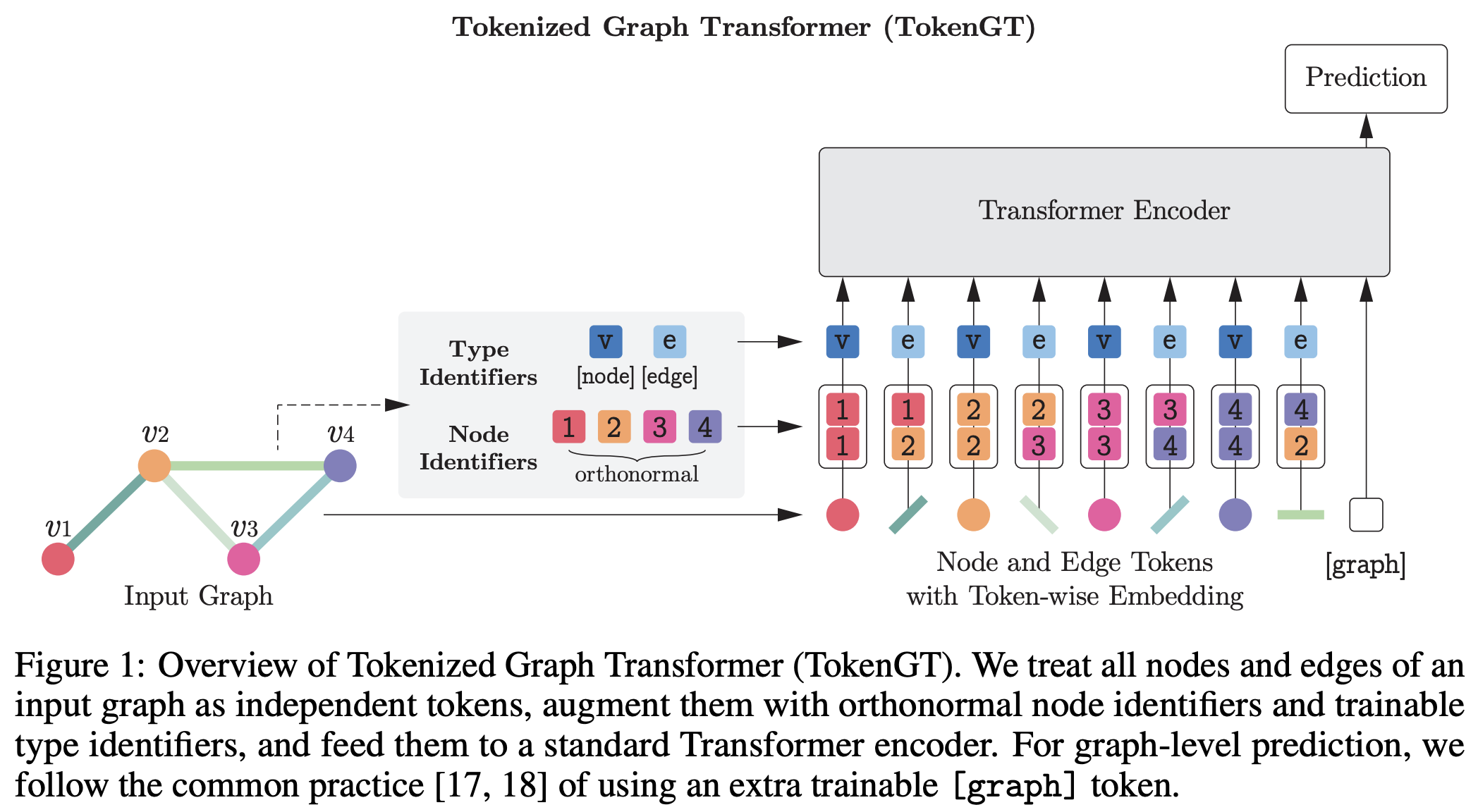

Pure Transformers are Powerful Graph Learners

Jinwoo Kim, Tien Dat Nguyen, Seonwoo Min, Sungjun Cho, Moontae Lee, Honglak Lee, Seunghoon Hong

arXiv Preprint

Using the provided Docker image (recommended)

docker pull jw9730/tokengt:latest

docker run -it --gpus=all --ipc=host --name=tokengt -v /home:/home jw9730/tokengt:latest bash

# upon completion, you should be at /tokengt inside the container

Using the provided Dockerfile

git clone --recursive https://github.com/jw9730/tokengt.git /tokengt

cd /tokengt

docker build --no-cache --tag tokengt:latest .

docker run -it --gpus all --ipc=host --name=tokengt -v /home:/home tokengt:latest bash

# upon completion, you should be at /tokengt inside the container

Using pip

sudo apt-get update

sudo apt-get install python3.9

git clone --recursive https://github.com/jw9730/tokengt.git tokengt

cd tokengt

bash install.sh

PCQM4Mv2 large-scale graph regression

cd large-scale-regression/scripts

# TokenGT (ORF)

bash pcqv2-orf.sh

# TokenGT (Lap)

bash pcqv2-lap.sh

# TokenGT (Lap) + Performer

bash pcqv2-lap-performer-finetune.sh

# TokenGT (ablated)

bash pcqv2-ablated.sh

# Attention distance plot for TokenGT (ORF)

bash visualize-pcqv2-orf.sh

# Attention distance plot for TokenGT (Lap)

bash visualize-pcqv2-lap.sh

We provide checkpoints of TokenGT (ORF) and TokenGT (Lap), both trained on PCQM4Mv2.

Please download ckpts.zip from this link.

Then, unzip ckpts.zip and place the resulting ckpts folder in the large-scale-regression/scripts directory, so that each trained checkpoint is located at large-scale-regression/scripts/ckpts/pcqv2-tokengt-[NODE_IDENTIFIER]-trained/checkpoint_best.pt.

After that, you can resume training from these checkpoints by adding the option --pretrained-model-name pcqv2-tokengt-[NODE_IDENTIFIER]-trained to the corresponding training script.
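The checkpoint layout and resume option above can be checked with a few shell helpers. This is a minimal sketch, not part of the TokenGT repository: the function names (ckpt_name, ckpt_path, resume_option, check_ckpts) are hypothetical, and it assumes [NODE_IDENTIFIER] takes the values orf and lap, mirroring the script names pcqv2-orf.sh and pcqv2-lap.sh.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not shipped with TokenGT) for checking the checkpoint
# layout described above. Run them from the repository root.

# Directory the unzipped "ckpts" folder is expected to live in.
SCRIPTS_DIR="large-scale-regression/scripts"

# Name of a trained run; $1 is the node identifier (assumed: "orf" or "lap").
ckpt_name() {
  printf '%s\n' "pcqv2-tokengt-$1-trained"
}

# Expected location of the best checkpoint for node identifier $1.
ckpt_path() {
  printf '%s\n' "${SCRIPTS_DIR}/ckpts/$(ckpt_name "$1")/checkpoint_best.pt"
}

# Option to add to a training script so it starts from that checkpoint.
resume_option() {
  printf '%s\n' "--pretrained-model-name $(ckpt_name "$1")"
}

# Report which checkpoints are in place; returns 1 if any of them is missing.
check_ckpts() {
  local id status=0
  for id in "$@"; do
    if [ -f "$(ckpt_path "$id")" ]; then
      printf 'found:   %s\n' "$(ckpt_path "$id")"
    else
      printf 'missing: %s\n' "$(ckpt_path "$id")" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, after unzipping with unzip ckpts.zip -d large-scale-regression/scripts (assuming the archive contains a top-level ckpts folder), check_ckpts orf lap should report both files as found, and resume_option orf prints the option to add to pcqv2-orf.sh.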

Our implementation uses code from the following repositories:

- Performer for FAVOR+ attention kernel

- Graph Transformer, SignNet, and SAN for Laplacian eigenvectors

- Graphormer for PCQM4Mv2 experiment pipeline

- timm for stochastic depth regularization

If you find our work useful, please consider citing it:

@article{kim2021transformers,
  author  = {Jinwoo Kim and Tien Dat Nguyen and Seonwoo Min and Sungjun Cho and Moontae Lee and Honglak Lee and Seunghoon Hong},
  title   = {Pure Transformers are Powerful Graph Learners},
  journal = {arXiv},
  volume  = {abs/2207.02505},
  year    = {2022},
  url     = {https://arxiv.org/abs/2207.02505}
}