This extension is meant to be used with Raycast to access LLMs through each provider's own client. Currently supported providers include:

- OpenAI

- Anthropic

- Groq

- Perplexity

- Google DeepMind (discontinued)

The main advantages are faster interaction with the LLM, better privacy (in most cases, companies don't collect data from API interactions), and lower cost while still using the latest models.

Below is a quick list of some limitations of the current version.

The extension is currently meant for personal use, so it doesn't have a consumer-friendly onboarding process. All API keys are stored in a TypeScript object exported from src/enums/index.tsx, in this format:

```typescript
// src/enums/index.tsx
// Paste each provider's API key between the quotes.
export const API_KEYS = {
  ANTHROPIC: '',
  OPENAI: '',
  GROQ: '',
  DEEPMIND: '',
  PERPLEXITY: '',
}
```
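Because the keys live in a plain object, one way to catch a missing key before any request is sent is a small typed lookup. This is a minimal sketch, not the extension's actual code: `Provider` and `getApiKey` are hypothetical names, and the object is re-declared so the snippet is self-contained.

```typescript
// Same shape as API_KEYS in src/enums/index.tsx (values left empty here).
export const API_KEYS = {
  ANTHROPIC: "",
  OPENAI: "",
  GROQ: "",
  DEEPMIND: "",
  PERPLEXITY: "",
};

// Union of provider names derived from the object's keys:
// "ANTHROPIC" | "OPENAI" | "GROQ" | "DEEPMIND" | "PERPLEXITY".
export type Provider = keyof typeof API_KEYS;

// Hypothetical helper: returns the trimmed key for a provider, or throws a
// readable error if the key was never filled in.
export function getApiKey(
  provider: Provider,
  keys: Record<Provider, string> = API_KEYS,
): string {
  const key = keys[provider].trim();
  if (key === "") {
    throw new Error(`No API key set for ${provider} in src/enums/index.tsx`);
  }
  return key;
}
```

Deriving `Provider` from `keyof typeof API_KEYS` means a typo such as `getApiKey("OPEN_AI")` is rejected at compile time, and adding a provider to the object automatically extends the union.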

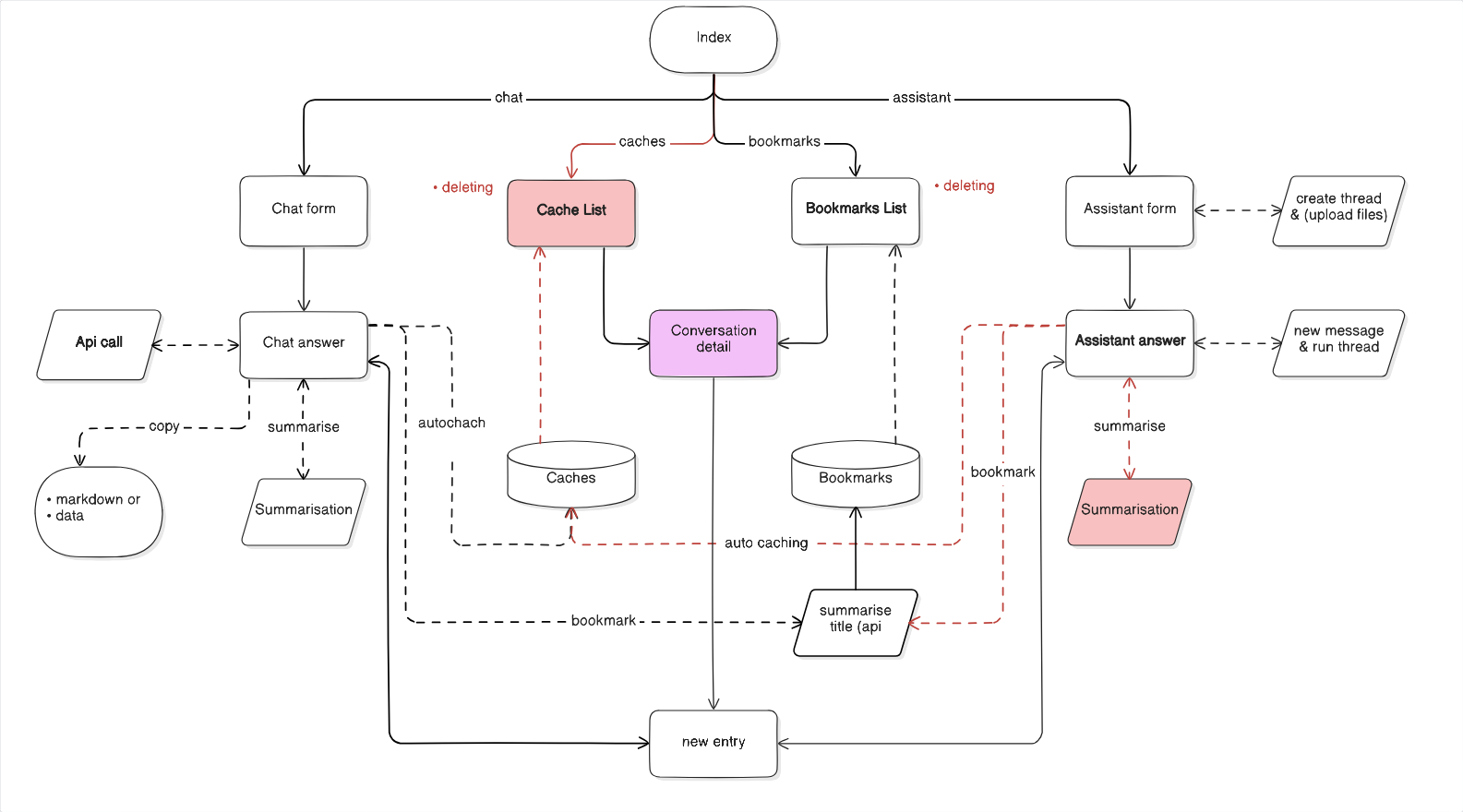

File uploads are now supported in the latest version through OpenAI's Assistants API (Beta), but the experience is not as seamless as in the providers' own apps (at least within Raycast). Long chats have the same problem: although the latest chats and assistant interactions are saved in Raycast's cache, continuing or recalling past conversations isn't as easy.

I couldn't figure out how to use Google's client to access its servers. I suspect the cause is that it declares `fetch` differently from other companies' clients. Hopefully Google changes this, or I find a workaround in the future.

Voice-to-text and text-to-voice aren't currently supported (a Raycast limitation).

Raycast renders responses using CommonMark, which doesn't support math/LaTeX rendering.
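One possible workaround, sketched here under the assumption that model responses use `$$...$$` for display math, is to wrap each math block in a fenced code block before rendering, so Raycast shows the LaTeX source verbatim instead of reinterpreting characters like `*` and `_` as Markdown. `fenceDisplayMath` is a hypothetical helper, not part of the extension, and inline `$...$` math is left untouched.

```typescript
// Three backticks, built at runtime so this snippet can itself sit inside a
// Markdown code fence without closing it early.
const FENCE = "`".repeat(3);

// Hypothetical helper: replaces every $$...$$ block (which may span lines)
// with a ```latex fenced code block containing the trimmed LaTeX source.
export function fenceDisplayMath(markdown: string): string {
  return markdown.replace(/\$\$([\s\S]+?)\$\$/g, (_match: string, body: string) => {
    // Leading/trailing newlines ensure the fence starts and ends on its own line.
    return "\n" + FENCE + "latex\n" + body.trim() + "\n" + FENCE + "\n";
  });
}
```

The non-greedy `+?` makes each `$$` pair close at the nearest following `$$`, so two separate math blocks in one response are not merged into a single fence.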

Still improving and adding new features.

Red sections need additional work.