This repository is the official implementation of Long-CLIP.

Long-CLIP: Unlocking the Long-Text Capability of CLIP

Beichen Zhang, Pan Zhang, Xiaoyi Dong, Yuhang Zang, Jiaqi Wang

- 🔥 Long input length: Increases the maximum input length of CLIP from 77 to 248 tokens.

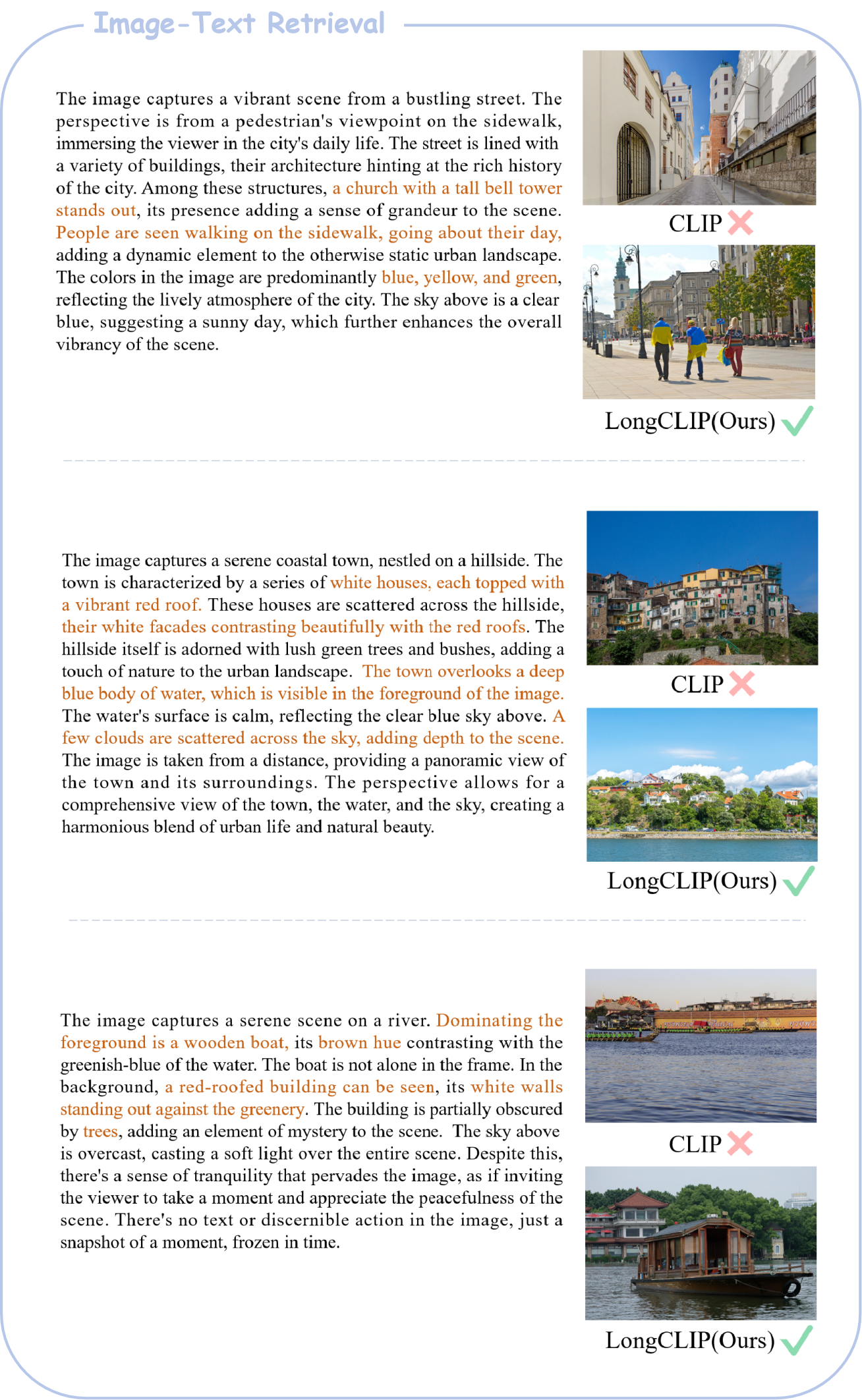

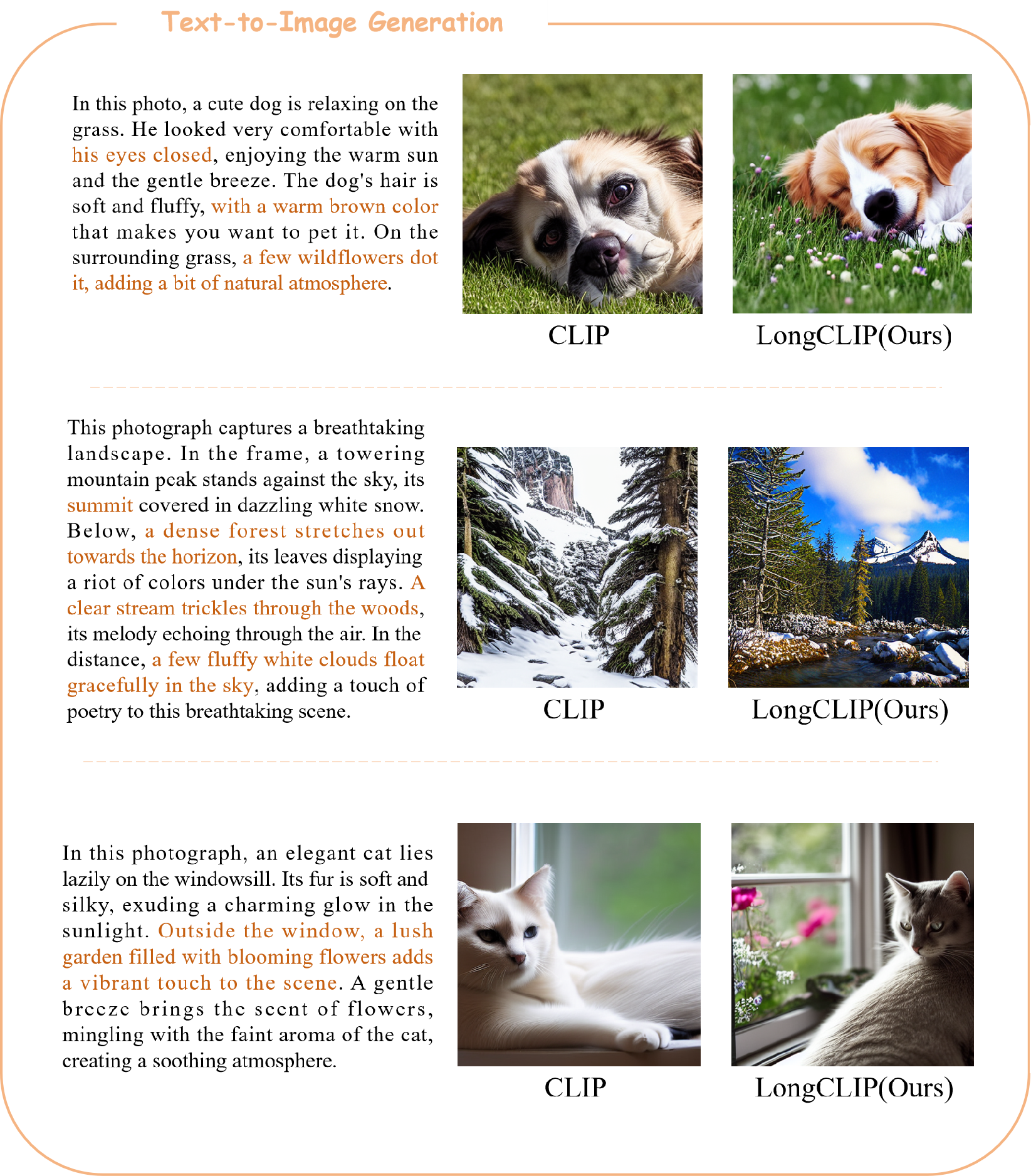

- 🔥 Strong performance: Improves R@5 by 20% on long-caption text-image retrieval and by 6% on traditional text-image retrieval.

- 🔥 Plug-and-play: Can be directly applied to any work that requires long-text capability.
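The jump from 77 to 248 positions can be understood as stretching CLIP's learned positional-embedding table. The Long-CLIP paper describes keeping the first 20 positions unchanged and interpolating the remaining 57 by a factor of 4, which gives 20 + 57 × 4 = 248. The plain-Python sketch below illustrates that idea on a toy table. The helper name `stretch_positional_embedding`, the linear interpolation, and the extrapolation used for the last few positions are assumptions for illustration, not the repository's implementation.

```python
from typing import List

Vector = List[float]


def stretch_positional_embedding(
    pos_emb: List[Vector], keep_len: int = 20, ratio: int = 4
) -> List[Vector]:
    """Hypothetical helper: sketch of positional-embedding stretching (not the repo's code).

    The first `keep_len` rows are copied unchanged. The remaining rows are
    linearly interpolated so that each original position spans `ratio` new
    positions; positions past the last original row are linearly
    extrapolated from the final two rows.
    """
    tail = pos_emb[keep_len:]  # rows that get stretched
    tail_len = len(tail)
    if tail_len < 2:
        raise ValueError("need at least two rows after keep_len to interpolate")

    stretched = [list(row) for row in pos_emb[:keep_len]]
    for j in range(tail_len * ratio):
        t = j / ratio  # fractional position inside the original tail
        i = int(t)
        frac = t - i
        if i + 1 < tail_len:
            lo, hi = tail[i], tail[i + 1]
            row = [(1 - frac) * a + frac * b for a, b in zip(lo, hi)]
        else:
            # Beyond the last original row: continue along the final step.
            prev, last = tail[i - 1], tail[i]
            row = [b + frac * (b - a) for a, b in zip(prev, last)]
        stretched.append(row)
    return stretched


# Toy table with CLIP's 77 text positions; row k encodes position k.
clip_pos = [[float(k), 0.0] for k in range(77)]
long_pos = stretch_positional_embedding(clip_pos)
print(len(long_pos))  # 20 + 57 * 4 = 248
```

Keeping the first positions intact is meant to preserve what the model learned for short prompts, while the stretched tail gives room for longer captions.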

🚀 [2024/4/1] The training code is released!

🚀 [2024/3/25] The inference code and models (LongCLIP-B and LongCLIP-L) are released!

🚀 [2024/3/25] The paper is released!

- Training code for Long-CLIP based on OpenAI-CLIP

- Evaluation code for Long-CLIP

  - Evaluation code for zero-shot classification and text-image retrieval tasks

- Usage example of Long-CLIP

- Checkpoints of Long-CLIP

Our model is based on CLIP, so please set up the environment required by CLIP first.

First, clone our repo from GitHub and enter it:

```shell
git clone https://github.com/beichenzbc/Long-CLIP.git
cd Long-CLIP
```

Then, download the checkpoints of our models LongCLIP-B and/or LongCLIP-L and place them under ./checkpoints.

```python
from model import longclip
import torch
from PIL import Image

device = "cuda" if torch.cuda.is_available() else "cpu"
# Load the LongCLIP-B checkpoint and its matching image preprocessing transform.
model, preprocess = longclip.load("./checkpoints/longclip-B.pt", device=device)

# Tokenize the candidate captions and preprocess the image into a batch of one.
text = longclip.tokenize(["A man is crossing the street with a red car parked nearby.", "A man is driving a car in an urban scene."]).to(device)
image = preprocess(Image.open("./img/demo.png")).unsqueeze(0).to(device)

with torch.no_grad():
    # Image and text embeddings, e.g. for retrieval or downstream use.
    image_features = model.encode_image(image)
    text_features = model.encode_text(text)

    # Image-text similarity logits, normalized into probabilities over the captions.
    logits_per_image, logits_per_text = model(image, text)
    probs = logits_per_image.softmax(dim=-1).cpu().numpy()

print("Label probs:", probs)  # prints: [[0.982 0.01799]]
```

To run zero-shot classification on the ImageNet dataset, prepare the data and then run the following commands from the repository root:

```shell
cd eval/classification/imagenet
python imagenet.py
```

Similarly, run the following commands for the CIFAR datasets:

```shell
cd eval/classification/cifar
python cifar10.py   # CIFAR-10
python cifar100.py  # CIFAR-100
```

To run text-image retrieval on COCO2017 or Flickr30k, prepare the data and then run the following commands:

```shell
cd eval/retrieval
python coco.py       # COCO2017
python flickr30k.py  # Flickr30k
```

Please refer to train/train.md for training details.
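Text-image retrieval results, such as the R@5 figures quoted at the top of this README, are usually reported as Recall@K: the fraction of queries whose correct match appears among the top K ranked candidates. As a reference for reading those numbers, here is a minimal sketch of text-to-image Recall@K computed from a caption-by-image similarity matrix. The function `recall_at_k` is hypothetical and not taken from eval/retrieval; it assumes exactly one correct image per caption. For image-to-text retrieval, where an image has several reference captions, a hit would instead count if any of them lands in the top K (not shown).

```python
from typing import Sequence


def recall_at_k(similarity: Sequence[Sequence[float]],
                ground_truth: Sequence[int], k: int) -> float:
    """Hypothetical helper: fraction of queries whose correct candidate is in the top k.

    similarity[q][c] is the score between query q and candidate c
    (e.g. cosine similarity between a caption embedding and an image embedding).
    ground_truth[q] is the index of the single correct candidate for query q.
    """
    hits = 0
    for scores, target in zip(similarity, ground_truth):
        # Rank candidates by descending similarity and keep the top k indices.
        top_k = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)[:k]
        if target in top_k:
            hits += 1
    return hits / len(ground_truth)


# Toy text-to-image example: 3 captions (rows) scored against 3 images (columns).
sim = [
    [0.9, 0.1, 0.3],  # caption 0: correct image 0 is ranked first
    [0.2, 0.4, 0.5],  # caption 1: correct image 1 is ranked second
    [0.6, 0.3, 0.1],  # caption 2: correct image 2 is ranked last
]
gt = [0, 1, 2]
print(recall_at_k(sim, gt, k=1))  # R@1 = 1/3
print(recall_at_k(sim, gt, k=2))  # R@2 = 2/3
```

Sorting each row is fine for a toy example; for full COCO2017 or Flickr30k matrices, a vectorized top-k over the whole similarity matrix would be the more practical choice.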