project page | arXiv | webvid-data

Repository containing the code, models and data for end-to-end retrieval. WebVid data can be found at https://github.com/m-bain/webvid-dataset.

Setup:

1. Create the conda env: conda env create -f requirements/frozen.yml
2. Create data / experiment folders: mkdir data; mkdir exps. Note that these can just be symlinks to wherever you want to store large data.
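The symlink option in step 2 can be sketched in Python as follows. It assumes a hypothetical storage_root directory on a disk with plenty of space; link_storage_dirs is an illustrative helper, not part of this repository. It creates the real folders under storage_root and places data and exps in the repository as symlinks to them, leaving any existing folder or link untouched.

```python
import os
from pathlib import Path
from typing import Iterable


def link_storage_dirs(repo_root: str, storage_root: str,
                      names: Iterable[str] = ("data", "exps")) -> None:
    """Create each folder in `names` under repo_root as a symlink into storage_root.

    storage_root is a hypothetical directory on a large disk.
    """
    repo = Path(repo_root)
    storage = Path(storage_root)
    for name in names:
        target = storage / name
        # The real directory lives on the big disk.
        target.mkdir(parents=True, exist_ok=True)
        link = repo / name
        if link.exists() or link.is_symlink():
            # Do not overwrite a folder or link that is already there.
            continue
        # Use an absolute target so the link works from any working directory.
        os.symlink(target.resolve(), link, target_is_directory=True)
```

For example, running link_storage_dirs(".", "/mnt/big/frozen-in-time") from the repository root (the storage path is only an illustration) replaces the two mkdir commands. Calling it twice is harmless because existing entries are skipped.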

Training and testing on MSR-VTT:

1. Download the data: wget https://www.robots.ox.ac.uk/~maxbain/frozen-in-time/data/MSRVTT.zip -P data; unzip data/MSRVTT.zip -d data
2. Change num_gpus in the config file to match the number of GPUs you have.
3. Train: python train.py --config configs/msrvtt_4f_i21k.json
4. Test: python test.py --resume exps/models/{EXP_NAME}/{EXP_TIMESTAMP}/model_best.pth

For finetuning a pretrained model, set "load_checkpoint": "PATH_TO_MODEL" in the config file.
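Both config changes mentioned above (num_gpus and load_checkpoint) can also be made from a script. The sketch below assumes only that the config is JSON and that the keys are spelled as in this README. Because it does not assume where they are nested, it searches the whole file and raises an error if a key is never found, which surfaces any mismatch between these names and the actual config. set_config_key and update_config are hypothetical helpers.

```python
import json
from typing import Any, Dict


def set_config_key(node: Any, key: str, value: Any) -> int:
    """Set every occurrence of `key` in a parsed JSON config; return how many were set."""
    count = 0
    if isinstance(node, dict):
        for k in node:
            if k == key:
                node[k] = value  # replacing a value does not resize the dict
                count += 1
            else:
                count += set_config_key(node[k], key, value)
    elif isinstance(node, list):
        for item in node:
            count += set_config_key(item, key, value)
    return count


def update_config(src: str, dst: str, updates: Dict[str, Any]) -> None:
    """Write a copy of the config at src to dst with `updates` applied."""
    with open(src) as f:
        config = json.load(f)
    for key, value in updates.items():
        if set_config_key(config, key, value) == 0:
            raise KeyError(f"{key!r} not found in {src}")
    with open(dst, "w") as f:
        json.dump(config, f, indent=4)
```

For instance, update_config("configs/msrvtt_4f_i21k.json", "configs/msrvtt_4f_i21k_local.json", {"num_gpus": 2, "load_checkpoint": "PATH_TO_MODEL"}) writes a separate, hypothetically named config to pass to train.py, so the original file stays unchanged.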

Pretraining:

1. Download WebVid-2M (see https://github.com/m-bain/webvid-dataset)
2. Download CC-3M (see https://ai.google.com/research/ConceptualCaptions/download)
3. Train: python train.py --config CONFIG_PATH. The different options are:

a. Dataset combinations

i. CC-3M + WebVid2M: configs/cc-webvid2m-pt-i2k.json
ii. WebVid2M: configs/webvid2m-pt-i2k.json

You can add an arbitrary number of image/video datasets for pretraining by adding as many dataloaders as your heart desires to the dataloader list in the config file. Adding more datasets will likely lead to higher downstream performance.
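Adding a dataset to the dataloader list might look like the sketch below. It assumes, hypothetically, that the list sits under a top-level "data_loader" key and that each entry keeps its dataset options under "args"; check these names against the provided configs before relying on it. Cloning an existing entry keeps every field a loader needs, so only the overrides change.

```python
import copy
from typing import Any, Dict


def add_dataloader(config: Dict[str, Any], template_index: int,
                   overrides: Dict[str, Any],
                   list_key: str = "data_loader") -> Dict[str, Any]:
    """Return a copy of config with one extra dataloader cloned from an existing one.

    list_key and the "args" field are hypothetical names for the config layout.
    """
    new_config = copy.deepcopy(config)  # leave the caller's config untouched
    loaders = new_config[list_key]
    if not isinstance(loaders, list):
        raise TypeError(f"{list_key!r} must be a list to hold several datasets")
    entry = copy.deepcopy(loaders[template_index])
    entry.setdefault("args", {}).update(overrides)
    loaders.append(entry)
    return new_config
```

A call such as add_dataloader(config, 0, {"dataset_name": "MyVideos"}) would clone the first loader and point the copy at a new dataset; "dataset_name" and "MyVideos" are illustrative only.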

b. Number of frames

For image datasets, this should always be set to "video_params": {"num_frames": 1, ...}. For video datasets, set it to whatever you want, but note that more frames require more GPU memory.

If, like us, you are not a big company and have limited compute, you will benefit from training with a curriculum on the number of frames. Much of the knowledge can be learned in the 1-frame setting, as we show in the paper. You can then finetune with more frames.
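The frame rule and the curriculum can be sketched as follows: every image loader is pinned to one frame, and each stage of a schedule such as [1, 4] gives the video loaders that many frames. The "data_loader" list, the per-loader "args" / "video_params" layout and the is_image predicate you pass in are all assumptions for illustration; only the "video_params": {"num_frames": ...} setting comes from this README.

```python
import copy
from typing import Any, Callable, Dict, List

Config = Dict[str, Any]


def with_num_frames(config: Config, num_frames: int,
                    is_image: Callable[[Dict[str, Any]], bool],
                    list_key: str = "data_loader") -> Config:
    """Copy config; image loaders get 1 frame, video loaders get num_frames."""
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")
    staged = copy.deepcopy(config)
    for loader in staged[list_key]:
        params = loader.setdefault("args", {}).setdefault("video_params", {})
        params["num_frames"] = 1 if is_image(loader) else num_frames
    return staged


def frame_curriculum(config: Config, schedule: List[int],
                     is_image: Callable[[Dict[str, Any]], bool]) -> List[Config]:
    """One config per stage; train the stages in order, each from the previous checkpoint."""
    if schedule != sorted(schedule):
        raise ValueError("a frame curriculum should not decrease the number of frames")
    return [with_num_frames(config, n, is_image) for n in schedule]
```

The schedule [1, 4] mirrors the idea above of learning most of the knowledge with single frames before finetuning with more; the exact frame counts are your choice and are bounded by GPU memory.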

c. Finetuning

Set "load_checkpoint": "FULL_MODEL_PATH" in the config file. You can then change other experiment parameters, such as num_frames, for example to do curriculum learning.

This repository uses a sacred backbone for logging and tracking experiments, with a neptune front end. It makes life a lot easier. If you want to activate this:

- Create a neptune account.
- Create a project, copy your credentials into train.py and remove the ValueError.
- Set neptune: true in your config files.

If you use this code in your research, please cite:

@misc{bain2021frozen,

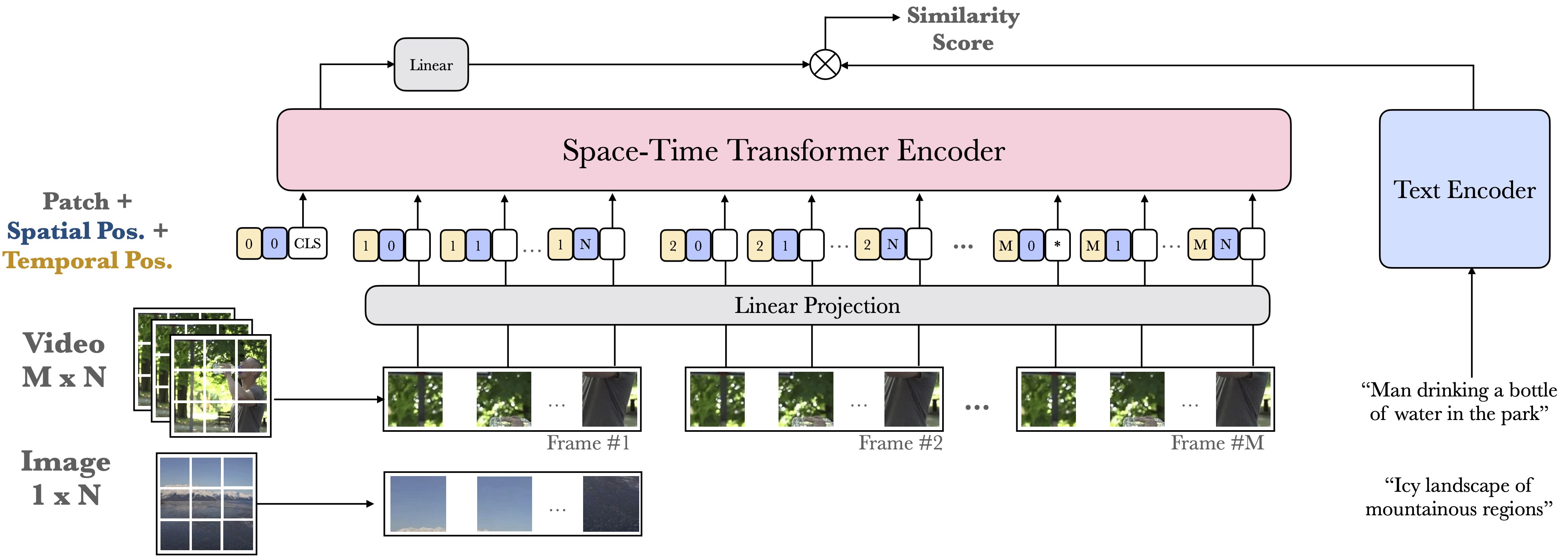

title={Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval},

author={Max Bain and Arsha Nagrani and Gül Varol and Andrew Zisserman},

year={2021},

eprint={2104.00650},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

This code is based on the pytorch-template https://github.com/victoresque/pytorch-template. It also adopts many good practices from Samuel Albanie's https://github.com/albanie/collaborative-experts