The primary objective of this project is to design an autonomous agricultural robot that removes weeds in real time without human involvement. This should help deliver a better, nutrient-rich yield with less manpower than conventional agriculture. The project can also be extended to other farming applications such as ploughing and harvesting, making agriculture more autonomous and improving yields, which in turn could contribute to the country's GDP and to lower farmer suicide rates.

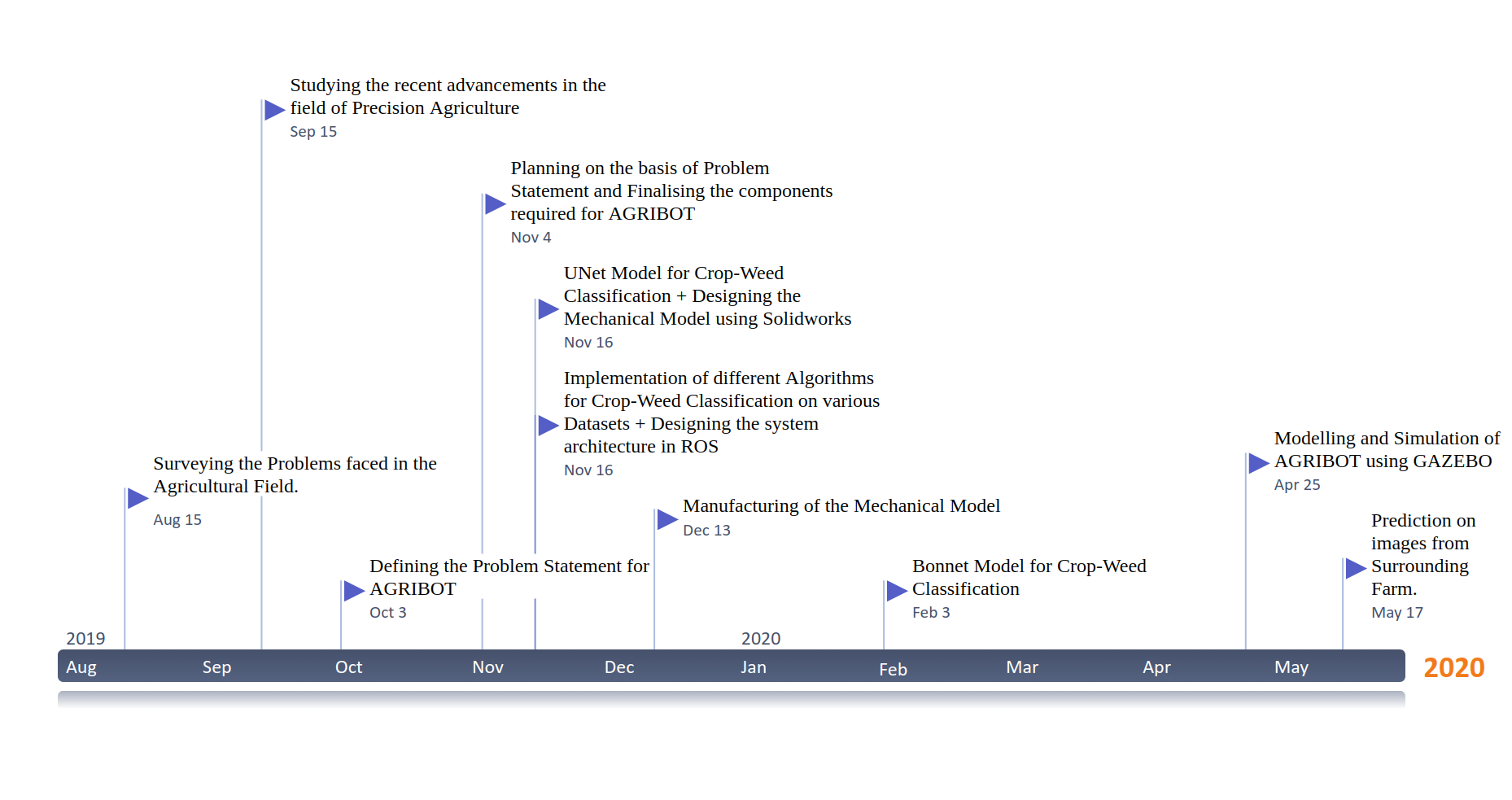

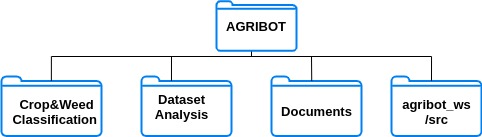

The project is divided into two main parts: Autonomous Navigation and Crop Weed Classification. The main Autonomous-Farm-Robot repository contains all of the documentation and scripts required for the project, broken down into four sections: Classification Model, Dataset Analysis, Documentation, and agribot_ws. We have also created a Wiki to make the project easier to understand.

- Crop_Weed_Classification: Contains the scripts for the classification task.

- agribot_ws: Catkin workspace for navigation and modelling.

- Documents: Documentation of the project. Includes the Project Report and Presentation, Proposal, Research Papers, etc.

- Datasets(Git): Dataset analysis. The Bonn dataset contains samples with missing images, which need to be removed. We have listed the samples that have missing images.

For more details about the project and the implementation of its modules, see the following pages:

- Autonomous Navigation

- Configure Jetson Nano for Remote access

- Crop Weed Classification

- Electronics Components and Sensor Modelling

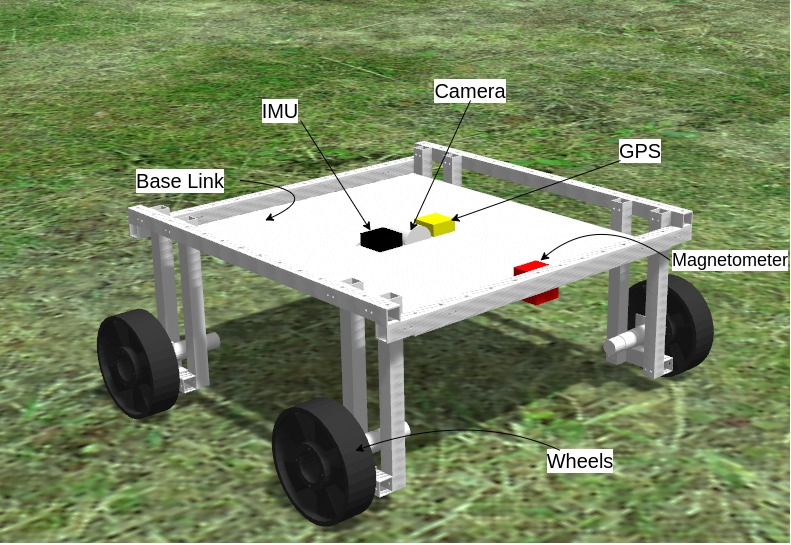

First, we designed a generalized robot structure in SolidWorks 2016, generated a URDF from it, and spawned the model in Gazebo. Crops were modelled as cylinders with plant textures; more detailed crop models could be built with 3D modelling software such as Blender. Other models were taken from the Gazebo model library. At this stage, we use GPS and a magnetometer for autonomous traversal of the field. To reduce sensor noise, we implemented a moving median filter and a one-dimensional Kalman filter. The scripts are in agribot_ws/src/autonomous_drive. For installation of packages and dependencies, visit the Autonomous Navigation wiki page.
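The two noise filters mentioned above can be illustrated with a minimal, standalone sketch. This is not the code from agribot_ws/src/autonomous_drive; the class names `MovingMedian` and `Kalman1D`, the window size, and the variance values are assumptions chosen for illustration, and the Kalman filter assumes a constant-state model for a slowly varying reading.

```python
from collections import deque
from statistics import median


class MovingMedian:
    """Sliding-window median; suppresses isolated spikes in a sensor stream."""

    def __init__(self, window=5):
        self.buffer = deque(maxlen=window)

    def update(self, value):
        self.buffer.append(value)
        return median(self.buffer)


class Kalman1D:
    """Scalar Kalman filter with a constant-state model (illustrative parameters)."""

    def __init__(self, process_var=1e-3, measurement_var=0.5,
                 initial=0.0, initial_var=1.0):
        self.q = process_var      # how much the true value may drift per step
        self.r = measurement_var  # how noisy each measurement is
        self.x = initial          # current state estimate
        self.p = initial_var      # variance of the current estimate

    def update(self, z):
        # Predict: the state is assumed unchanged, so only uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return self.x


if __name__ == "__main__":
    readings = [10.0, 10.2, 9.9, 25.0, 10.1, 10.0, 9.8]  # 25.0 is a spike
    med = MovingMedian(window=3)
    kf = Kalman1D(initial=readings[0])
    for z in readings:
        # Median first to drop outliers, then Kalman to smooth what remains.
        print(round(kf.update(med.update(z)), 3))
```

Chaining the median before the Kalman filter is one possible arrangement: the median removes outliers that would otherwise pull the Kalman estimate, while the Kalman step smooths the remaining jitter.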

Note:

- Left window: Mapviz. Right window: Gazebo.

- Green points are the end points of the rows in the field; the blue line is the traced trajectory of AGRIBOT.

- The image window shows the camera feed, which is passed directly to the classification model.
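As a rough illustration of how a GPS fix and a magnetometer heading can be combined to steer toward a row end point, the sketch below computes the bearing to a waypoint and the signed turn needed to face it. It is an assumption about the general technique, not the project's navigation code; `bearing_to_waypoint` and `heading_error` are hypothetical helper names, and the heading is assumed to be in degrees clockwise from north.

```python
import math


def bearing_to_waypoint(lat, lon, wp_lat, wp_lon):
    """Initial great-circle bearing to the waypoint, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat), math.radians(wp_lat)
    dlon = math.radians(wp_lon - lon)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def heading_error(target_bearing, heading):
    """Signed smallest turn from heading to target, in [-180, 180).

    Positive means turn clockwise, negative means turn counter-clockwise.
    """
    return (target_bearing - heading + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    # Hypothetical coordinates: robot position and the next row end point.
    target = bearing_to_waypoint(23.0000, 72.0000, 23.0001, 72.0000)
    print(heading_error(target, heading=350.0))
```

A controller could feed the heading error into a proportional steering command and switch to the next end point once the GPS distance to the current one falls below a threshold.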

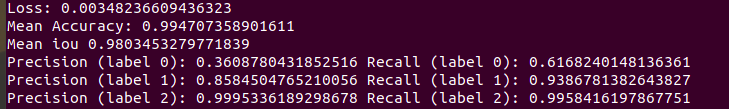

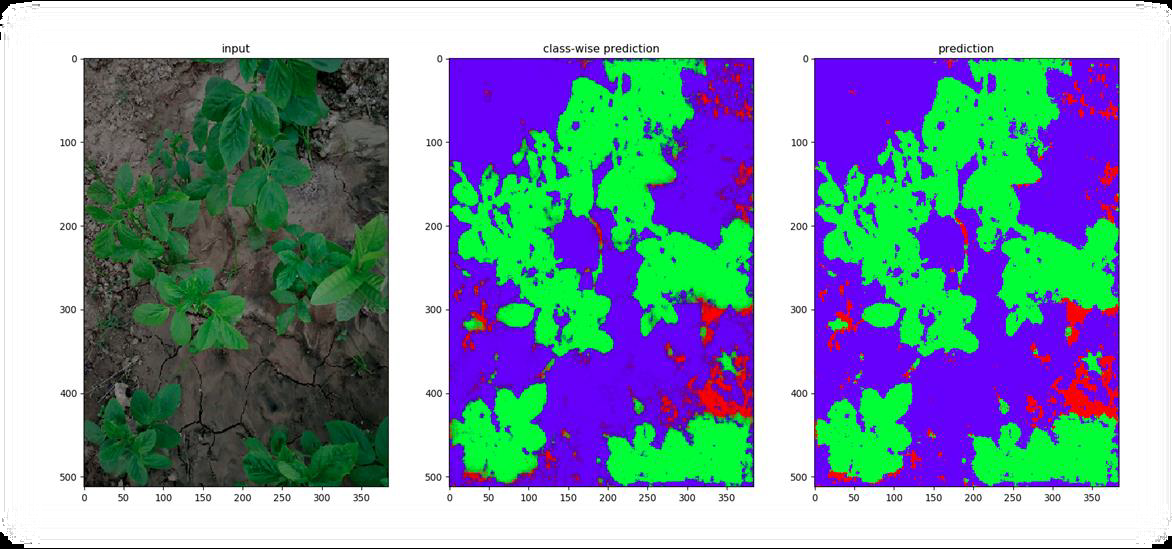

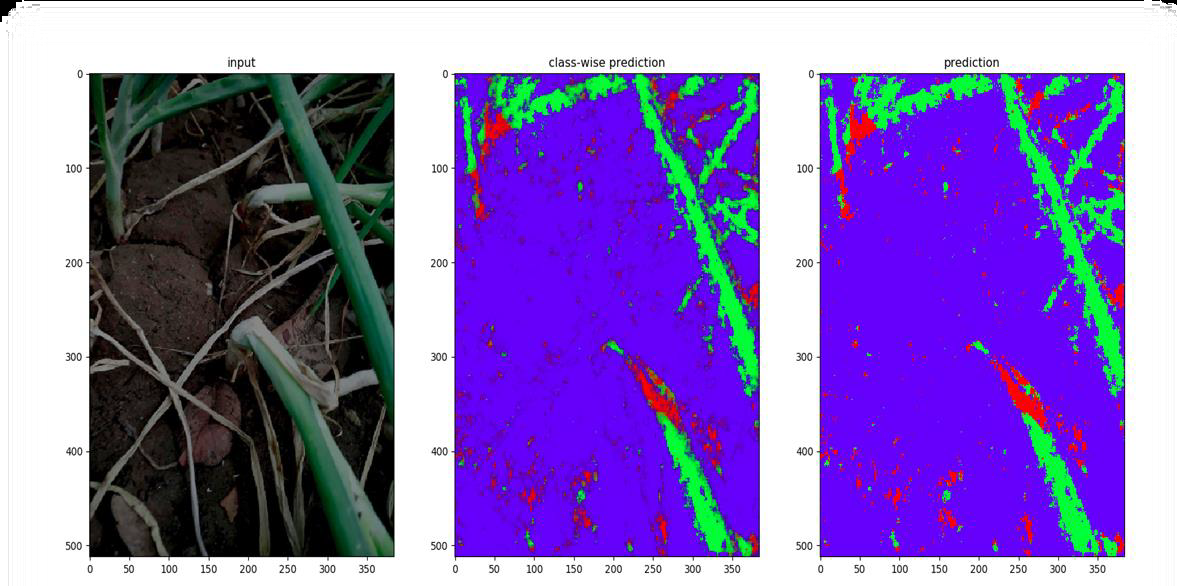

We trained and tested two models, UNet and Bonnet, on two datasets: CWFID and Bonn. The Bonnet architecture by PRBonn (Photogrammetry & Robotics Lab at the University of Bonn) can be viewed here. Both architectures are implemented in Crop_Weed_Classification/model.py. Bonnet performed better than UNet and was suitable for real-time deployment because it has approximately 100x fewer parameters. Hence, Bonnet was selected as the final classification model.

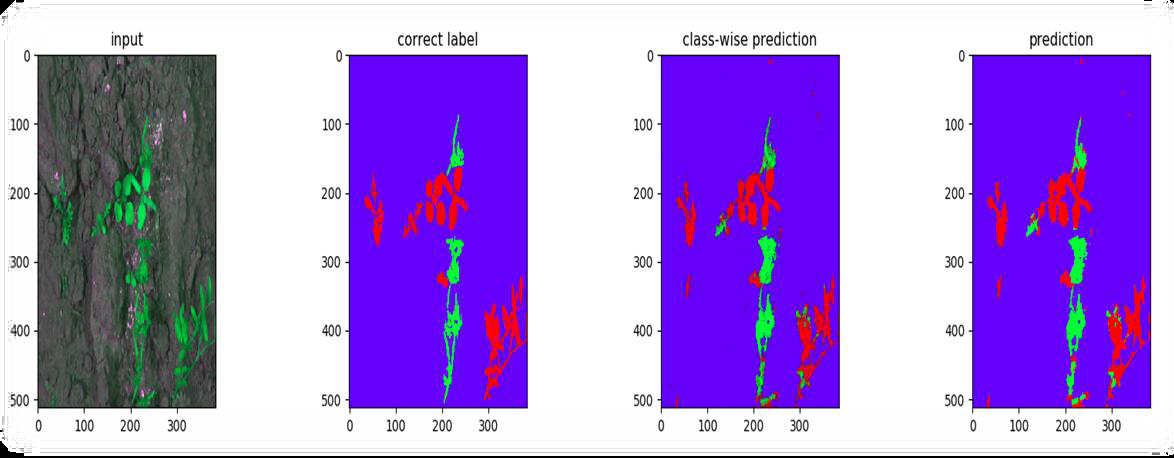

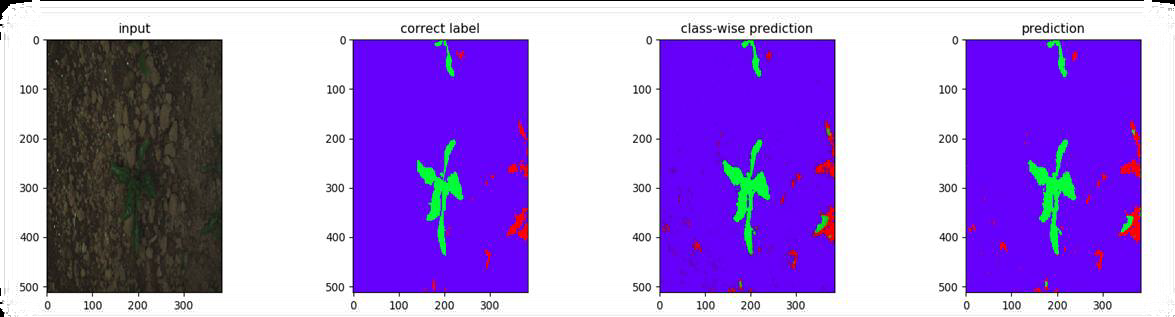

NOTE: Red => Weed, Green => Crop, Blue => Soil.

- Labels 0, 1, and 2 correspond to Weed, Crop, and Soil respectively (i.e. R, G, B).
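The label-to-colour convention above can be expressed as a small lookup. This is a dependency-free sketch for illustration only; `PALETTE` and `colorize` are hypothetical names, and the mask is assumed to be a 2D grid of integer labels.

```python
# Colour for each class label: 0 = weed (red), 1 = crop (green), 2 = soil (blue).
PALETTE = {
    0: (255, 0, 0),
    1: (0, 255, 0),
    2: (0, 0, 255),
}


def colorize(label_mask):
    """Convert a 2D grid of class labels into a 2D grid of RGB tuples."""
    return [[PALETTE[label] for label in row] for row in label_mask]


if __name__ == "__main__":
    mask = [
        [2, 2, 1],
        [2, 0, 1],
    ]
    for row in colorize(mask):
        print(row)
```

With NumPy arrays, the same mapping can be done in one step by indexing a `(3, 3)` palette array with the integer label mask.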

Average speed: 2.5 fps on an 8th Gen i7 with a 4GB NVIDIA 940MX.
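A frame rate like the one above can be measured by timing a batch of frames through the model. The sketch below is a generic timing helper, not the project's benchmark; `average_fps` and the `infer` callable are hypothetical, and the stand-in workload simply sleeps.

```python
import time


def average_fps(infer, frames):
    """Run infer(frame) on every frame and return frames processed per second."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Stand-in for a real model call: pretend each frame takes about 10 ms.
    print(average_fps(lambda frame: time.sleep(0.01), range(20)))
```

When timing a GPU model, it is usually worth discarding the first few frames, since warm-up costs can skew the average.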

NOTE: For environment setup, an explanation of the scripts, and the model weights, visit the Crop-Weed-Classification Wiki page.

Various sensors, such as the NEO-M8N GPS, MPU-9265, and Raspberry Pi Camera v2, were integrated with the Nvidia Jetson Nano. However, due to the COVID-19 pandemic, further hardware development was not possible, so we shifted to a simulation-based approach. We tested our software and algorithms through modelling and simulation and tried to keep them close to real-world scenarios, for example by modelling sensors with noise.
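To illustrate what modelling a sensor with noise can look like, the sketch below perturbs an ideal GPS fix with zero-mean Gaussian noise. It is a standalone example under stated assumptions, not the project's Gazebo sensor configuration; `noisy_gps`, the 1.5 m standard deviation, and the spherical-Earth conversion are all illustrative choices.

```python
import math
import random

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def noisy_gps(lat, lon, sigma_m=1.5, rng=random):
    """Return (lat, lon) with independent Gaussian noise of sigma_m metres per axis."""
    north_m = rng.gauss(0.0, sigma_m)
    east_m = rng.gauss(0.0, sigma_m)
    # Convert metre offsets to degrees; longitude shrinks with cos(latitude).
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat))))
    return lat + dlat, lon + dlon


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so runs are repeatable
    for _ in range(3):
        print(noisy_gps(23.0, 72.0, rng=rng))
```

Feeding readings like these into the navigation stack makes it possible to check that filtering and waypoint logic still behave sensibly when the position fix jitters.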

Access the Project Report at Documents/G-13 UG Project Report.pdf.

Feel free to raise an issue if you face any problems while implementing the modules. If you have any questions or have trouble understanding our project, please reach out to us by email. We would be happy to share as much as possible.
