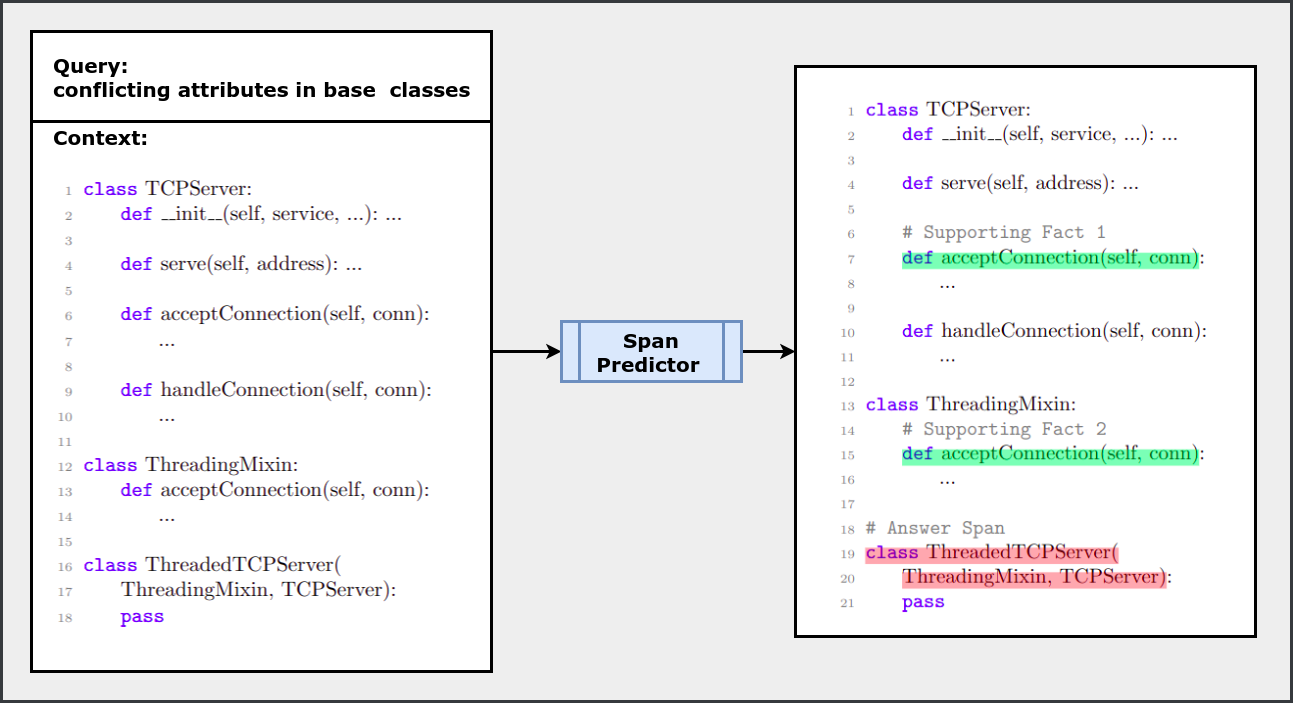

CodeQueries is a dataset to evaluate various methodologies on answering semantic queries over code. Existing datasets for question-answering in the context of programming languages target comparatively simpler tasks of predicting binary yes/no answers to a question or range over a localized context (e.g., a source-code method). In contrast, in CodeQueries, a source-code file is annotated with the required spans for a code analysis query about semantic aspects of code. Given a query and code, a Span Predictor system is expected to identify answer and supporting-fact spans in the code for the query.
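To make the task concrete, here is a minimal sketch of one way to represent an example and its spans, and to score a prediction by exact match (a prediction counts only if its set of spans equals the gold set). The `Span` and `Example` classes, their field names, the query string, and the exact-match rule are illustrative assumptions. They are not the dataset's schema or the repo's metric.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class Span:
    """Hypothetical code span: inclusive line range plus the exact source text."""
    start_line: int
    end_line: int
    text: str


@dataclass(frozen=True)
class Example:
    """Hypothetical layout of one query/code example (field names are illustrative)."""
    query: str
    code: str
    answer_spans: FrozenSet[Span]
    supporting_fact_spans: FrozenSet[Span]


def exact_match(predicted: Iterable[Span], gold: Iterable[Span]) -> bool:
    """True when the predicted spans are exactly the gold spans (order ignored).

    If there are no gold spans, only an empty prediction counts as correct.
    """
    return frozenset(predicted) == frozenset(gold)


if __name__ == "__main__":
    first = Span(1, 1, "import os")
    repeat = Span(2, 2, "import os")
    example = Example(
        query="Module is imported more than once",  # illustrative query
        code="import os\nimport os\n",
        answer_spans=frozenset({repeat}),  # the repeated import is the answer
        supporting_fact_spans=frozenset({first}),  # the first import supports it
    )
    print(exact_match([repeat], example.answer_spans))  # True
    print(exact_match([first], example.answer_spans))   # False
```

Treating spans as a set makes the check independent of the order in which a model emits them.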

The dataset statistics are provided in the Codequeries_Statistics file.

More details on the curated dataset for this benchmark are available on HuggingFace.

The repo provides scripts to evaluate LLM-generated spans on the dataset and to evaluate the two-step setup. To use the scripts:

- Clone the repo in a virtual environment.
- Run `setup.sh` to set up the workspace.
- Run the following commands to get performance metric values.

We used OpenAI's GPT-3.5-Turbo model with different prompt templates (provided in `/prompt_templates`) to generate the answer and supporting-fact spans for a query. We generated 10 samples for each input; the generated results are downloaded as part of the setup. The following scripts evaluate the LLM results for each prompt type.

To evaluate the zero-shot prompt:

```
python evaluate_generated_spans.py --g=test_dir_file_0shot/logs
```

To evaluate the few-shot prompt with BM25 retrieval:

```
python evaluate_generated_spans.py --g=test_dir_file_fewshot/logs
```

To evaluate the few-shot prompt with supporting facts:

```
python evaluate_generated_spans.py --g=test_dir_file_fewshot_sf/logs --with_sf=True
```
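The few-shot BM25 setting retrieves demonstrations to place in the prompt. To illustrate the retrieval idea only, here is a minimal Okapi BM25 ranker. The tokenizer, the `k1=1.5`/`b=0.75` defaults, the non-negative IDF variant, and the idea of ranking candidate example code by similarity to the query file are our assumptions. This is not a description of how the repo builds its prompts.

```python
import math
import re
from collections import Counter
from typing import List, Sequence


def tokenize(text: str) -> List[str]:
    # Assumed tokenizer: lower-cased identifier-like tokens; numbers and punctuation dropped.
    return re.findall(r"[A-Za-z_][A-Za-z0-9_]*", text.lower())


class BM25:
    """Okapi BM25 over a fixed pool of candidate demonstrations."""

    def __init__(self, docs: Sequence[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.docs = [tokenize(d) for d in docs]
        self.term_freqs = [Counter(d) for d in self.docs]
        self.avgdl = sum(len(d) for d in self.docs) / max(len(self.docs), 1)
        df = Counter(term for d in self.docs for term in set(d))
        n = len(self.docs)
        # Non-negative IDF variant: ln((N - n_t + 0.5) / (n_t + 0.5) + 1).
        self.idf = {t: math.log((n - c + 0.5) / (c + 0.5) + 1.0) for t, c in df.items()}

    def score(self, query: str, index: int) -> float:
        """BM25 score of candidate `index`; every query token contributes, repeats included."""
        tf = self.term_freqs[index]
        dl = len(self.docs[index])
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f == 0:
                continue  # term absent from this candidate adds nothing
            denom = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / denom
        return total

    def top_k(self, query: str, k: int) -> List[int]:
        """Indices of the k highest-scoring candidates (ties keep pool order)."""
        ranked = sorted(range(len(self.docs)), key=lambda i: -self.score(query, i))
        return ranked[:k]


if __name__ == "__main__":
    pool = [
        "def f():\n    import os\n    import os",
        "class A:\n    def __eq__(self, other): return True",
        "x = 1\nprint(x)",
    ]
    bm25 = BM25(pool)
    print(bm25.top_k("import os\nimport os\nimport sys", k=1))  # [0]
```

The selected candidates (with their annotated spans) would then be formatted into the prompt as demonstrations.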

In many cases, the entire file does not fit in the model's input. However, not all code is relevant for answering a given query. We identify the relevant code blocks using the CodeQL results during data preparation and implement a two-step procedure to scale to large code files:

Step 1: We first apply a relevance classifier to every block in the given code and select code blocks that are likely to be relevant for answering a given query.

Step 2: We then apply the span prediction model to the set of selected code blocks to predict answer and supporting-fact spans.
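The two steps can be sketched as a small pipeline. `Block`, `two_step`, the 0.5 threshold, and the toy scoring functions are illustrative assumptions. In the repo, the relevance classifier and span predictor are the models loaded from the checkpoints passed to `evaluate_spanprediction.py`.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Block:
    """Hypothetical contiguous code block (e.g. a function or class body)."""
    start_line: int
    text: str


# Hypothetical model interfaces (not the repo's API):
#   relevance(query, block) -> probability that the block is relevant
#   span_predictor(query, blocks) -> list of (line, label) predictions
RelevanceFn = Callable[[str, Block], float]
SpanFn = Callable[[str, Sequence[Block]], List[Tuple[int, str]]]


def two_step(query: str, blocks: Sequence[Block], relevance: RelevanceFn,
             span_predictor: SpanFn, threshold: float = 0.5) -> List[Tuple[int, str]]:
    # Step 1: score every block independently and keep the likely-relevant ones.
    selected = [blk for blk in blocks if relevance(query, blk) >= threshold]
    if not selected:
        return []  # nothing passed the filter: predict no spans
    # Step 2: run span prediction only on the (shorter) selected context.
    return span_predictor(query, selected)


def toy_relevance(query: str, block: Block) -> float:
    # Toy stand-in for the relevance classifier: blocks containing an import are relevant.
    return 1.0 if "import" in block.text else 0.0


def toy_span_predictor(query: str, blocks: Sequence[Block]) -> List[Tuple[int, str]]:
    # Toy stand-in for the span model: last selected block is the answer,
    # earlier selected blocks are supporting facts.
    *facts, last = blocks
    return [(b.start_line, "supporting_fact") for b in facts] + [(last.start_line, "answer")]


if __name__ == "__main__":
    blocks = [Block(1, "import os"), Block(3, "def f():\n    return 1"), Block(6, "import os")]
    print(two_step("Module is imported more than once", blocks, toy_relevance, toy_span_predictor))
    # [(1, 'supporting_fact'), (6, 'answer')]
```

Because step 1 scores blocks independently, its cost grows linearly with file size, while step 2 only ever sees the filtered blocks.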

To evaluate the two-step setup, run:

```
python3 evaluate_spanprediction.py \
  --example_types_to_evaluate=<positive/negative> \
  --setting=twostep \
  --span_type=<both/answer/sf> \
  --span_model_checkpoint_path=<model-ckpt-with-low-data/Cubert-1K-low-data or finetuned_ckpts/Cubert-1K> \
  --relevance_model_checkpoint_path=<model-ckpt-with-low-data/Twostep_Relevance-512-low-data or finetuned_ckpts/Twostep_Relevance-512>
```
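To sweep several configurations, a small driver can expand the placeholders in the two-step command. This is a convenience sketch, not part of the repo. It assumes the script is run from the repo root and that the low-data span checkpoint is paired with the low-data relevance checkpoint (and the fine-tuned one with the fine-tuned one). By default it only prints the commands; it executes them when called with `--run`.

```python
import itertools
import subprocess
import sys
from typing import List

# Checkpoint pairs from the command's placeholders; the pairing is an assumption.
CHECKPOINTS = {
    "low-data": ("model-ckpt-with-low-data/Cubert-1K-low-data",
                 "model-ckpt-with-low-data/Twostep_Relevance-512-low-data"),
    "finetuned": ("finetuned_ckpts/Cubert-1K",
                  "finetuned_ckpts/Twostep_Relevance-512"),
}


def build_command(example_type: str, span_type: str, checkpoints: str) -> List[str]:
    """Argument list for one evaluate_spanprediction.py run in the two-step setting."""
    span_ckpt, relevance_ckpt = CHECKPOINTS[checkpoints]
    return [
        sys.executable, "evaluate_spanprediction.py",  # current interpreter (e.g. the venv)
        f"--example_types_to_evaluate={example_type}",
        "--setting=twostep",
        f"--span_type={span_type}",
        f"--span_model_checkpoint_path={span_ckpt}",
        f"--relevance_model_checkpoint_path={relevance_ckpt}",
    ]


if __name__ == "__main__":
    execute = "--run" in sys.argv[1:]
    for example_type, span_type in itertools.product(["positive", "negative"],
                                                     ["both", "answer", "sf"]):
        cmd = build_command(example_type, span_type, "finetuned")
        print(" ".join(cmd))
        if execute:
            subprocess.run(cmd, check=True)  # stop at the first failing run
```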

| | Zero-shot prompting (answer span prediction) | | Few-shot prompting with BM25 retrieval (answer span prediction) | | Few-shot prompting with supporting facts (answer & supporting-fact span prediction) |
|---|---|---|---|---|---|
| Pass@k | Positive | Negative | Positive | Negative | Positive |
| 1 | 9.82 | 12.83 | 16.45 | 44.25 | 21.88 |
| 2 | 13.06 | 17.42 | 21.14 | 55.53 | 28.06 |
| 5 | 17.47 | 22.85 | 27.69 | 65.43 | 34.94 |
| 10 | 20.84 | 26.77 | 32.66 | 70.0 | 39.08 |
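With 10 samples per input, Pass@k for k ≤ 10 is commonly estimated with the unbiased estimator introduced alongside the HumanEval benchmark: pass@k = 1 − C(n−c, k) / C(n, k), where n is the number of samples and c the number judged correct. We have not checked that `evaluate_generated_spans.py` uses exactly this estimator, or what criterion it uses to call a sample correct; the sketch below only illustrates the formula. The values in the table appear to be percentages (multiply by 100).

```python
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k samples, drawn without replacement
    from n generations of which c are correct, is correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(correct_counts: Sequence[int], n: int, k: int) -> float:
    """Average pass@k over examples, given the number of correct samples for each."""
    return sum(pass_at_k(n, c, k) for c in correct_counts) / len(correct_counts)


if __name__ == "__main__":
    # One correct sample out of 10: pass@1 = 0.1, pass@10 = 1.0.
    print(pass_at_k(10, 1, 1), pass_at_k(10, 1, 10))
```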

| | Answer span prediction | | Answer & supporting-fact span prediction |
|---|---|---|---|
| Variant | Positive | Negative | Positive |
| Two-step(20, 20) | 9.42 | 92.13 | 8.42 |
| Two-step(all, 20) | 15.03 | 94.49 | 13.27 |
| Two-step(20, all) | 32.87 | 96.26 | 30.66 |
| Two-step(all, all) | 51.90 | 95.67 | 49.30 |

| Variant | Positive | Negative |
|---|---|---|
| Two-step(20, 20) | 3.74 | 95.54 |
| Two-step(all, 20) | 7.81 | 97.87 |
| Two-step(20, all) | 33.41 | 96.23 |
| Two-step(all, all) | 52.61 | 96.73 |
| Prefix | 36.60 | 93.80 |
| Sliding window | 51.91 | 85.75 |
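The Prefix and Sliding window rows are alternatives to the two-step setup for files longer than the model input. Assuming they mean, respectively, keeping only the first window of tokens and splitting the file into overlapping windows that are each processed separately (our reading, not confirmed by this README), the two chunking strategies can be sketched as follows. The window size and stride are hypothetical parameters.

```python
from typing import List, Sequence, Tuple


def prefix_window(tokens: Sequence[str], max_len: int) -> List[str]:
    """Keep only the first max_len tokens; anything later in the file is never seen."""
    return list(tokens[:max_len])


def sliding_windows(tokens: Sequence[str], max_len: int,
                    stride: int) -> List[Tuple[int, List[str]]]:
    """Split tokens into windows of at most max_len tokens, starting every `stride` tokens.

    Returns (offset, window) pairs so predictions can be mapped back to file
    positions. Consecutive windows overlap by max_len - stride tokens.
    """
    if not 0 < stride <= max_len:
        raise ValueError("need 0 < stride <= max_len")
    windows = []
    start = 0
    while True:
        windows.append((start, list(tokens[start:start + max_len])))
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the file
        start += stride
    return windows


if __name__ == "__main__":
    toks = list("abcdefg")
    print(prefix_window(toks, 4))       # ['a', 'b', 'c', 'd']
    print(sliding_windows(toks, 4, 2))  # offsets 0, 2, 4; last window is ['e', 'f', 'g']
```

The prefix strategy costs one model call but misses spans beyond the cutoff; sliding windows cover the whole file at the cost of several calls and possibly duplicate predictions in overlapping regions.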