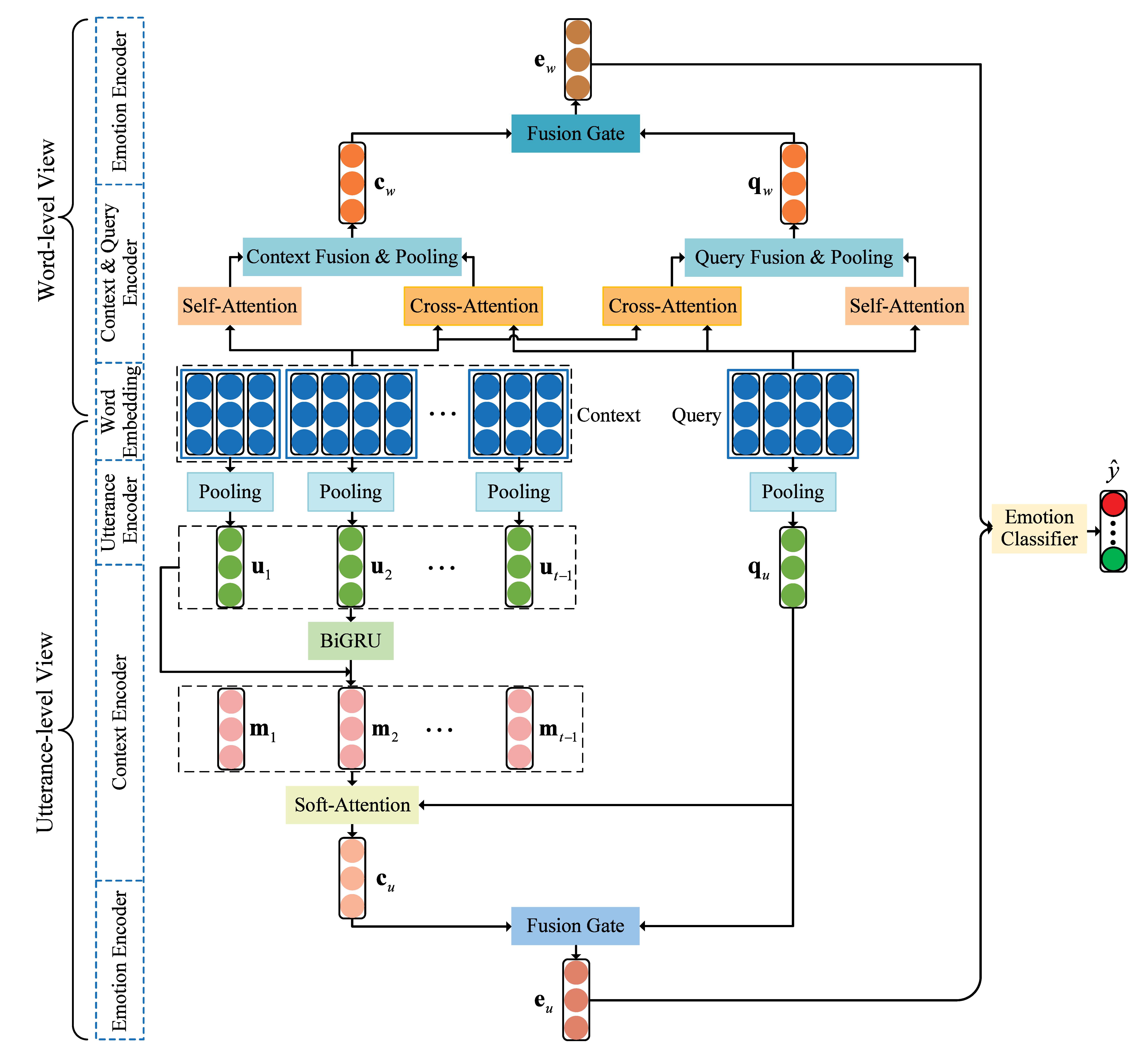

This repository contains the implementation of our paper *A multi-view network for real-time emotion recognition in conversations*.

- Check the required packages, or simply run `pip install -r requirements.txt`.
- Download the IEMOCAP and MELD datasets from the AGHMN storage.

- For each dataset, we use `Preprocess.py` to preprocess it. You can also download the preprocessed datasets from here and put them into `Data/`.
- Download the pretrained Word2Vec embeddings and save them in `Data/` as well.

- Run the model on the IEMOCAP dataset: `bash exec_iemocap.sh`
- Run the model on the MELD dataset: `bash exec_meld.sh`
- Special thanks to AGHMN for sharing the code and datasets.
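Both run scripts assume that `Data/` already holds the preprocessed datasets and the Word2Vec embeddings. The bash functions below are a minimal sketch of a pre-flight check, assuming you launch from the repository root. They are hypothetical helpers (`data_ready`, `script_for`, `run_dataset` are not part of this repository), and because the exact file names in `Data/` are not listed here, the check only confirms the directory exists and is non-empty.

```bash
# Hypothetical helpers, not part of the repository: confirm Data/ is
# populated before launching one of the provided run scripts.

# Succeeds if the given directory (default: Data) exists and has at least one entry.
data_ready() {
  local dir="${1:-Data}"
  [ -d "$dir" ] && [ -n "$(ls -A "$dir" 2>/dev/null)" ]
}

# Prints the run script for a dataset name; fails for unknown names.
script_for() {
  case "$1" in
    iemocap) echo "exec_iemocap.sh" ;;
    meld)    echo "exec_meld.sh" ;;
    *)       return 1 ;;
  esac
}

# Checks the dataset name and Data/, then runs the matching script with bash.
run_dataset() {
  local script
  if ! script=$(script_for "$1"); then
    echo "unknown dataset: $1 (expected iemocap or meld)" >&2
    return 1
  fi
  if ! data_ready "Data"; then
    echo "Data/ is missing or empty; add the preprocessed data and Word2Vec embeddings first" >&2
    return 1
  fi
  bash "$script"
}
```

After sourcing these functions in a shell at the repository root, `run_dataset meld` runs `bash exec_meld.sh` only when `Data/` is non-empty, and otherwise prints a short error instead of failing partway through a run.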

If you find our work useful for your research, please kindly cite our paper. Thanks!

```bibtex
@article{ma2022multi,
  title={A multi-view network for real-time emotion recognition in conversations},
  author={Ma, Hui and Wang, Jian and Lin, Hongfei and Pan, Xuejun and Zhang, Yijia and Yang, Zhihao},
  journal={Knowledge-Based Systems},
  volume={236},
  pages={107751},
  year={2022},
  publisher={Elsevier}
}
```