This repository contains the implementation of our paper "A Transformer-based Model with Self-distillation for Multimodal Emotion Recognition in Conversations".

- Check the packages needed, or simply run the command: `pip install -r requirements.txt`
- Download the preprocessed datasets from here, and put them into `data/`.

- Run the model on the IEMOCAP dataset: `bash exec_iemocap.sh`
- Run the model on the MELD dataset: `bash exec_meld.sh`

If you find our work useful for your research, please kindly cite our paper. Thanks!

@article{ma2024sdt,

author={Ma, Hui and Wang, Jian and Lin, Hongfei and Zhang, Bo and Zhang, Yijia and Xu, Bo},

journal={IEEE Transactions on Multimedia},

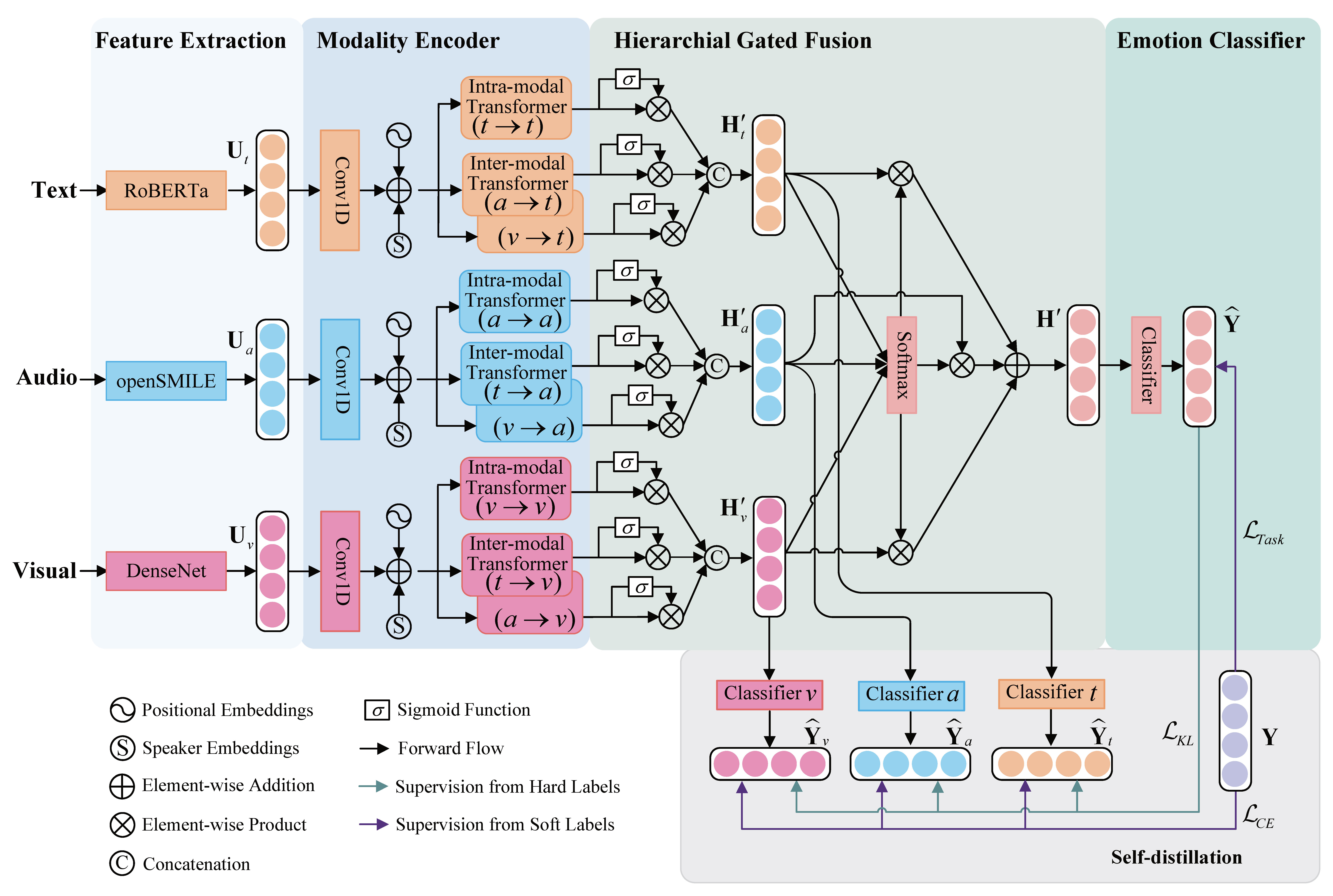

title={A Transformer-Based Model With Self-Distillation for Multimodal Emotion Recognition in Conversations},

year={2024},

volume={26},

number={},

pages={776-788},

keywords={Emotion recognition;Transformers;Oral communication;Context modeling;Task analysis;Visualization;Logic gates;Multimodal emotion recognition in conversations;intra- and inter-modal interactions;multimodal fusion;modal representation},

doi={10.1109/TMM.2023.3271019}}