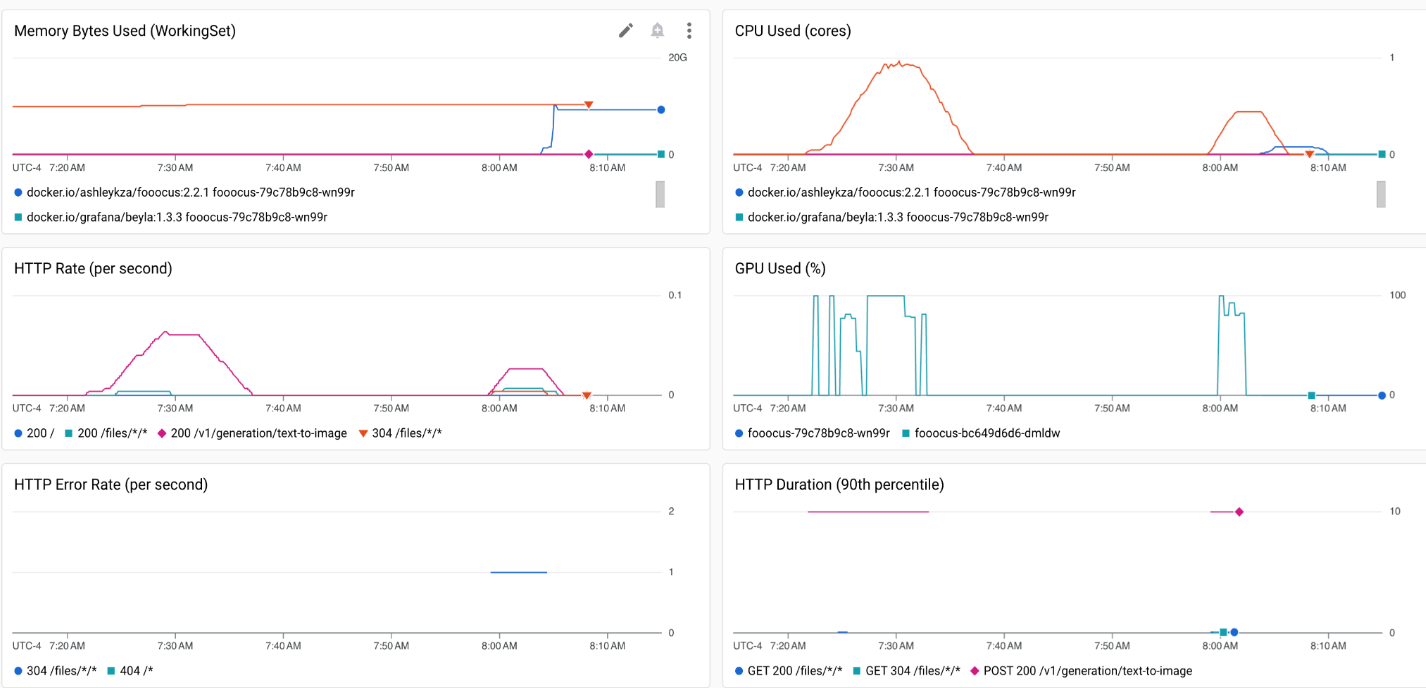

Demo of an observability setup for AI workloads on GKE, using the Fooocus image-generation app as the example workload:

https://github.com/lllyasviel/Fooocus

-

Create a GKE cluster, e.g. a Standard cluster in the us-central1 region:

```shell
bash fooocus/1-patched.sh
```

Then create the node pool:

```shell
bash fooocus/2.sh
```

-

Once the cluster is up, set up `kubectl` credentials:

```shell
gcloud container clusters get-credentials ${CLUSTER_NAME} --location=${REGION}
```
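The command above expects `CLUSTER_NAME` and `REGION` to be set. A minimal sketch, assuming hypothetical default values (`fooocus-demo` is made up; `us-central1` is the region suggested above) that you should replace with whatever the setup scripts actually created. The helper also lists nodes with the `cloud.google.com/gke-accelerator` label shown as a column, so you can confirm that GPU nodes have joined.

```shell
# Hypothetical defaults: override them with the cluster name and region
# that fooocus/1-patched.sh actually created.
CLUSTER_NAME="${CLUSTER_NAME:-fooocus-demo}"
REGION="${REGION:-us-central1}"
export CLUSTER_NAME REGION

# Fetch kubectl credentials, then list nodes with their GPU accelerator label
# (the column is empty for nodes without GPUs).
connect_cluster() {
  gcloud container clusters get-credentials "${CLUSTER_NAME}" --location="${REGION}" || return 1
  kubectl get nodes -L cloud.google.com/gke-accelerator
}
```

Usage: run `connect_cluster` after the node pool script has finished.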

-

Add more observability components (this will soon be automatic with the GKE monitoring packages):

```shell
# DCGM exporter; this will soon be done for you automatically by the DCGM package.
kubectl apply -f fooocus/dcgm-monitoring.yaml
# Same here; this should soon be covered by the KUBELET,CADVISOR packages.
kubectl apply -f fooocus/gcm-cadvisor.yaml
```
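To check that the extra collectors came up, a sketch like the following may help. It assumes that the DCGM exporter pods have `dcgm` in their names and that the manifests register scrape targets through Google Cloud Managed Service for Prometheus resources (`PodMonitoring`, `ClusterPodMonitoring`, `ClusterNodeMonitoring`). Neither assumption is confirmed here, so adjust the filters to what `fooocus/dcgm-monitoring.yaml` and `fooocus/gcm-cadvisor.yaml` actually create. The function names are hypothetical.

```shell
# List DCGM exporter pods in all namespaces.
# Assumption: their pod names contain "dcgm".
check_dcgm_pods() {
  kubectl get pods --all-namespaces | grep -i dcgm
}

# List Managed Prometheus scrape configurations.
# Assumption: the manifests use these CRDs; a type that is not installed
# is reported and skipped instead of aborting the loop.
check_scrape_configs() {
  for kind in podmonitorings clusterpodmonitorings clusternodemonitorings; do
    echo "== ${kind}"
    kubectl get "${kind}" --all-namespaces || echo "(${kind} not available)"
  done
}
```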

-

Install the Fooocus inference server on your cluster, instrumented with eBPF for HTTP metrics.

If you want the UI (no REST API):

```shell
kubectl apply -f fooocus/server-instrumented-ui.yaml
```

If you want the REST API (no UI), see `fooocus/stress.sh` for how to access it:

```shell
kubectl apply -f fooocus/server-instrumented-rest.yaml
```
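The two variants differ only in the manifest that gets applied. A small sketch of a helper that maps the chosen mode to the manifest paths listed above; `fooocus_manifest` and `deploy_fooocus` are hypothetical names made up for this example.

```shell
# Map a deployment mode ("ui" or "rest") to the manifest from this repo.
fooocus_manifest() {
  case "$1" in
    ui)   echo "fooocus/server-instrumented-ui.yaml" ;;
    rest) echo "fooocus/server-instrumented-rest.yaml" ;;
    *)    echo "unknown mode: $1 (expected ui or rest)" >&2; return 1 ;;
  esac
}

# Apply the manifest for the chosen mode.
deploy_fooocus() {
  local manifest
  manifest="$(fooocus_manifest "$1")" || return 1
  kubectl apply -f "${manifest}"
}
```

Usage: `deploy_fooocus ui` or `deploy_fooocus rest`. An unknown mode fails before anything is sent to the cluster.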

-

Wait for it to come up; in the meantime, you can follow the logs:

```shell
kubectl logs -f -l app=fooocus
```

Unfortunately, it is configured to download gigabytes (in total) of dependencies and models on startup, so this can take a while.
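Because of that download, the pod may take a long time to become Ready. Instead of watching the logs, you can block until it is ready with `kubectl wait`. The 30-minute default below is an arbitrary assumption, so raise it if your downloads are slower, and run it only after applying the manifest (`kubectl wait` errors out if no pod matches the label yet). `wait_for_fooocus` is a hypothetical helper name.

```shell
# Block until every pod labelled app=fooocus reports Ready, or give up after
# the timeout (default 30m, an arbitrary choice for this sketch).
wait_for_fooocus() {
  local timeout="${1:-30m}"
  kubectl wait --for=condition=Ready pod -l app=fooocus --timeout="${timeout}"
}
```

Usage: `wait_for_fooocus` or, for slow networks, `wait_for_fooocus 60m`.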

-

Set up port forwarding:

For UI:

```shell
kubectl port-forward service/fooocus 3000:3000
```

For REST:

```shell
kubectl port-forward service/fooocus 3000:8088
```
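Both commands forward local port 3000 and differ only in the service port: 3000 for the UI variant and 8088 for the REST variant. A sketch of a helper that picks the service port from the mode; `fooocus_remote_port` and `forward_fooocus` are hypothetical names.

```shell
# Service port exposed by each variant, per the port-forward commands above.
fooocus_remote_port() {
  case "$1" in
    ui)   echo 3000 ;;
    rest) echo 8088 ;;
    *)    echo "unknown mode: $1 (expected ui or rest)" >&2; return 1 ;;
  esac
}

# Forward local port 3000 to the service; runs in the foreground until Ctrl-C.
forward_fooocus() {
  local port
  port="$(fooocus_remote_port "$1")" || return 1
  kubectl port-forward service/fooocus "3000:${port}"
}
```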

-

Open http://localhost:3000 in your browser (UI), or send your requests to it (REST).
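To confirm that the forward works without a browser, a rough check with `curl` might look like this. It assumes that any HTTP status, even a 404 from the REST server's root path, is enough to show the port-forward is answering; `check_fooocus` is a hypothetical helper name.

```shell
# Print the HTTP status code returned by the forwarded port (default 3000).
# "000" means nothing answered: the port-forward is not running or the pod
# is not ready yet.
check_fooocus() {
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 10 "http://localhost:${1:-3000}/"
}
```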

-

Enjoy! Create your own dashboard, or import a Grafana dashboard such as https://grafana.com/grafana/dashboards/12239-nvidia-dcgm-exporter-dashboard/ into Google Cloud Monitoring (GCM).