This is the official implementation of the ViT visualization tool.

The Anaconda environment file is vit_visualize.yml.

To create the environment, install Anaconda first and then run:

conda env create -f vit_visualize.yml

To make the environment available as a kernel in Jupyter Notebook, run:

python -m ipykernel install --user --name=vit_visual

This analysis uses the Vision Transformer model, version ViT-B16/224. To download the weights of this model, pretrained on ImageNet21k + ImageNet2012, and save them to the weights folder, run the following bash commands:

mkdir -p weights

wget -O weights/ViT-B_16-224.npz https://storage.googleapis.com/vit_models/imagenet21k+imagenet2012/ViT-B_16-224.npz
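To check that the download succeeded, the archive can be opened with NumPy, since `.npz` is NumPy's archive format. The snippet below is only a minimal sketch: the helper name `list_weight_shapes` is hypothetical and not part of this repository, and it makes no assumption about which array names the checkpoint contains.

```python
import numpy as np


def list_weight_shapes(path):
    """Return a dict mapping each array name in an .npz archive to its shape.

    Hypothetical helper for illustration; not part of this repository.
    """
    with np.load(path) as archive:
        return {name: archive[name].shape for name in archive.files}


# Example usage (assumes the wget command above has been run):
# for name, shape in sorted(list_weight_shapes("weights/ViT-B_16-224.npz").items()):
#     print(name, shape)
```

If the file is truncated or is not a valid archive, `np.load` raises an error instead of returning arrays, which makes this a quick sanity check before opening the notebooks.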

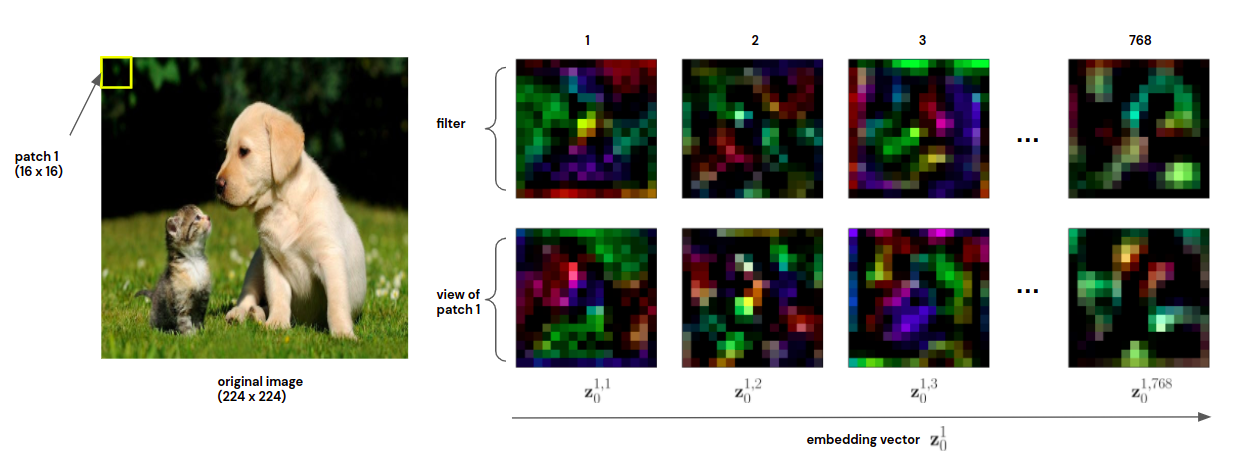

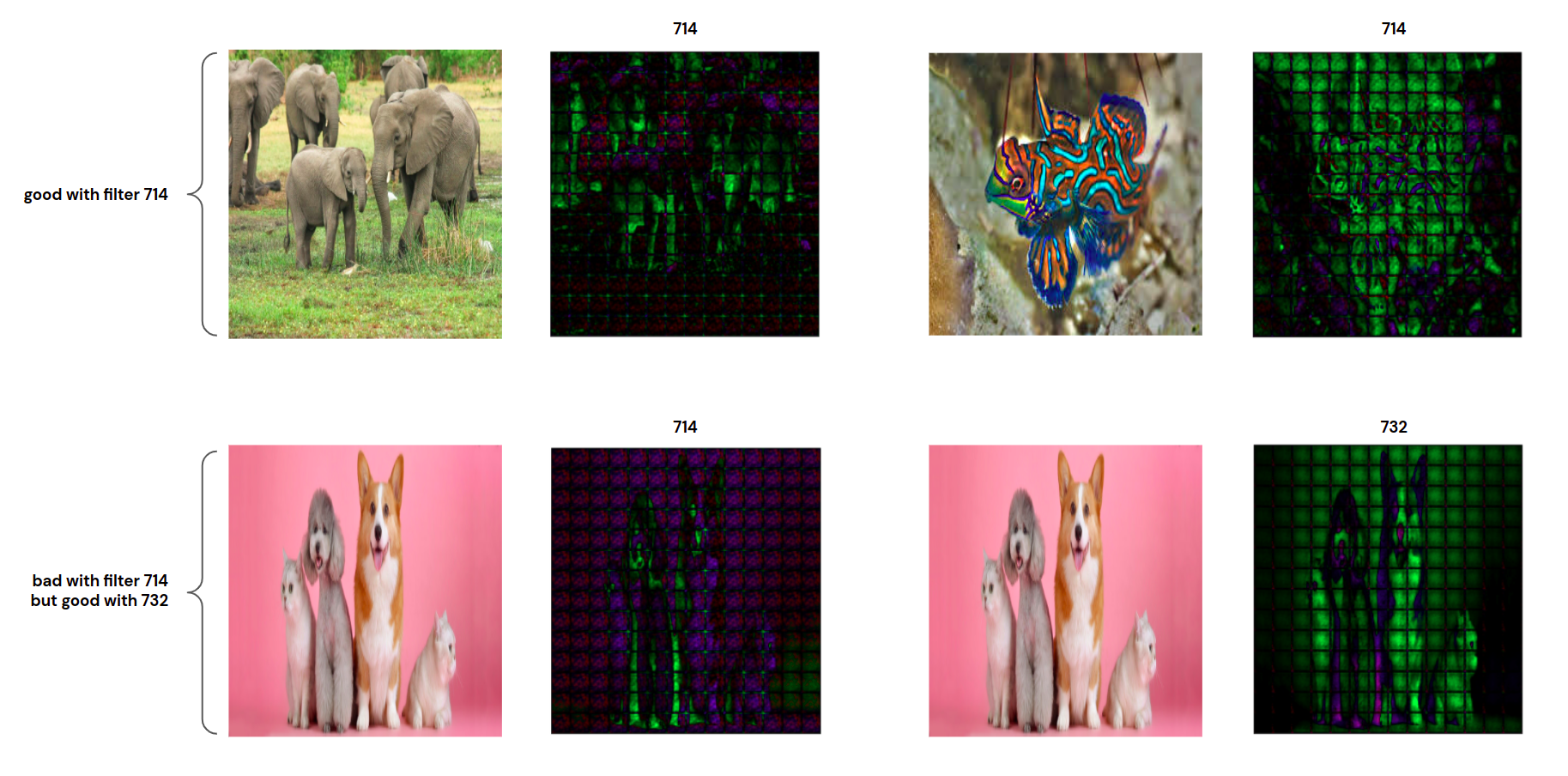

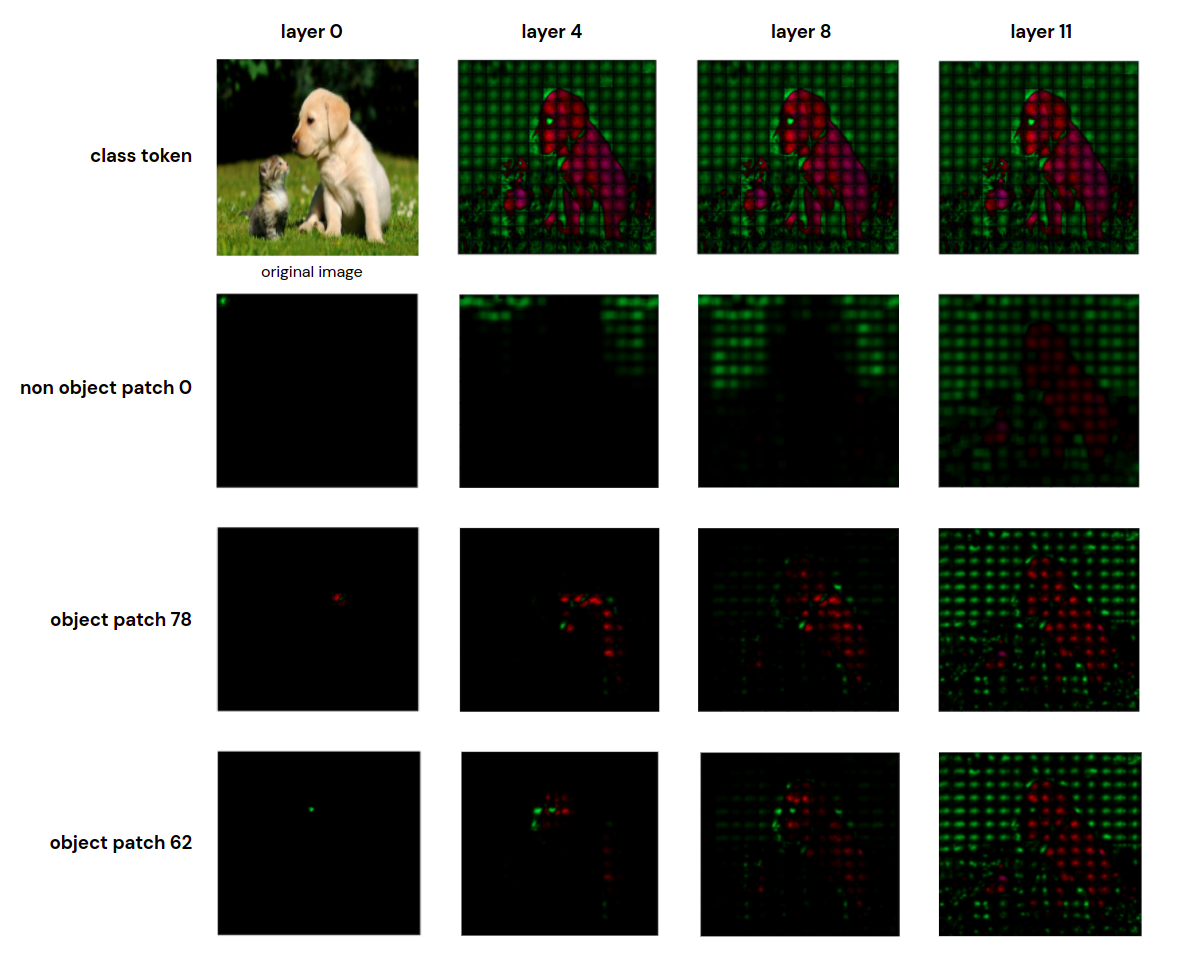

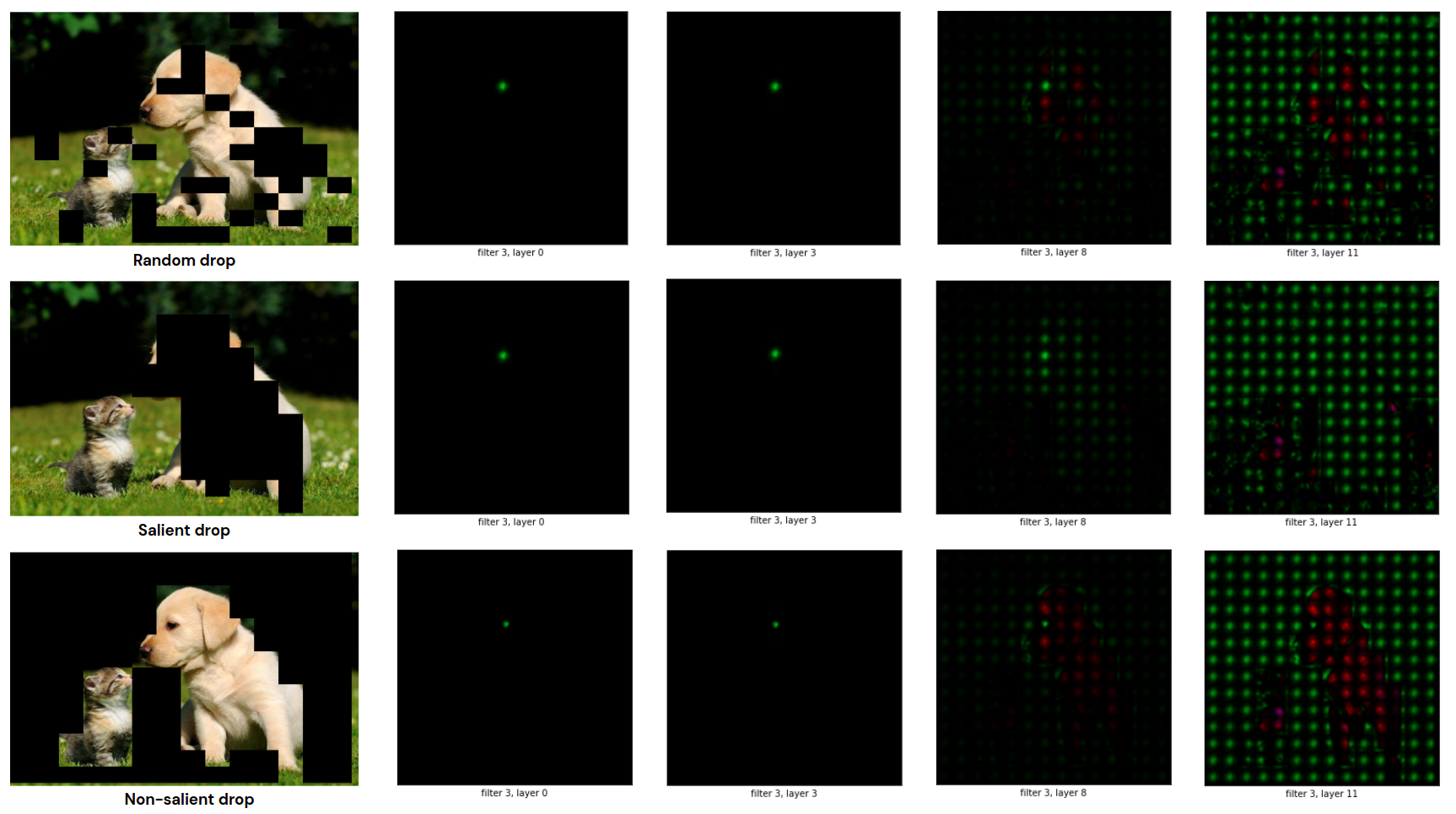

The ViT_neuron_visualization notebook contains the code for analyzing the neurons' views. Following the chapter "What Neurons Tell" of the paper, it covers the following features and analyses:

- Visualize the filters and views of a specific input patch at the 0th layer.

- Compare the views of different filters, concluding that each filter works well for a specific group of images but not for other groups.

- Create a global view at the higher layers and compare the global views corresponding to different patches.

- Analyze the views of salient, non-salient, and random occlusion cases across layers of increasing depth.
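As a rough illustration of the random occlusion case, the sketch below zeroes out a random subset of non-overlapping 16×16 patches in an image. It is not the notebook's implementation: the function name `occlude_random_patches`, the zero fill value, and the `ratio` and `seed` parameters are all assumptions made for this example. The salient and non-salient cases would pick patches by a saliency score instead of at random, which is not shown here.

```python
import numpy as np


def occlude_random_patches(image, patch_size=16, ratio=0.5, seed=0):
    """Zero out a random subset of non-overlapping patches in an (H, W, C) image.

    Hypothetical helper for illustration; the fill value and sampling scheme
    are assumptions, not taken from the notebook.
    Returns the occluded copy and the sorted indices of the dropped patches.
    """
    height, width = image.shape[:2]
    rows, cols = height // patch_size, width // patch_size
    n_patches = rows * cols
    n_drop = int(round(ratio * n_patches))

    # Sample patch indices without replacement so each patch is dropped at most once.
    rng = np.random.default_rng(seed)
    dropped = rng.choice(n_patches, size=n_drop, replace=False)

    occluded = image.copy()
    for idx in dropped:
        r, c = divmod(int(idx), cols)  # patches are indexed in row-major order
        occluded[r * patch_size:(r + 1) * patch_size,
                 c * patch_size:(c + 1) * patch_size] = 0
    return occluded, np.sort(dropped)
```

For a 224×224 input with 16×16 patches there are 14 × 14 = 196 patches, so `ratio=0.5` occludes 98 of them. The input array is left unmodified, so the same image can be reused for several occlusion ratios.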

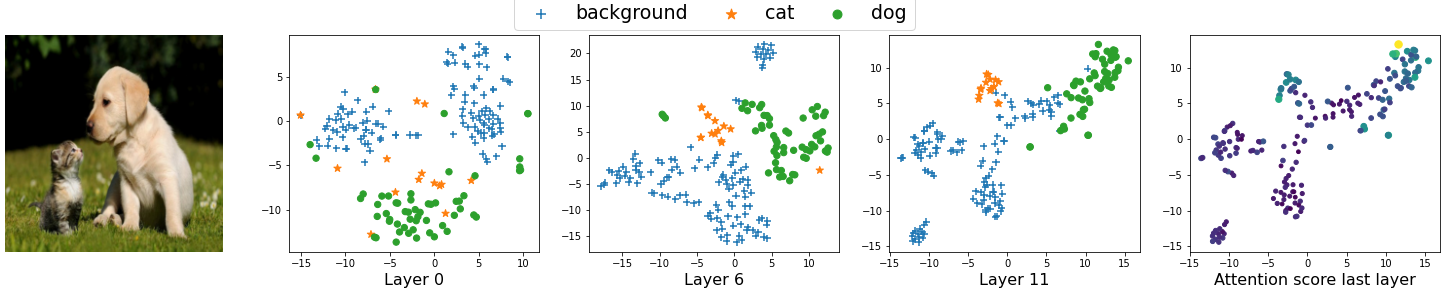

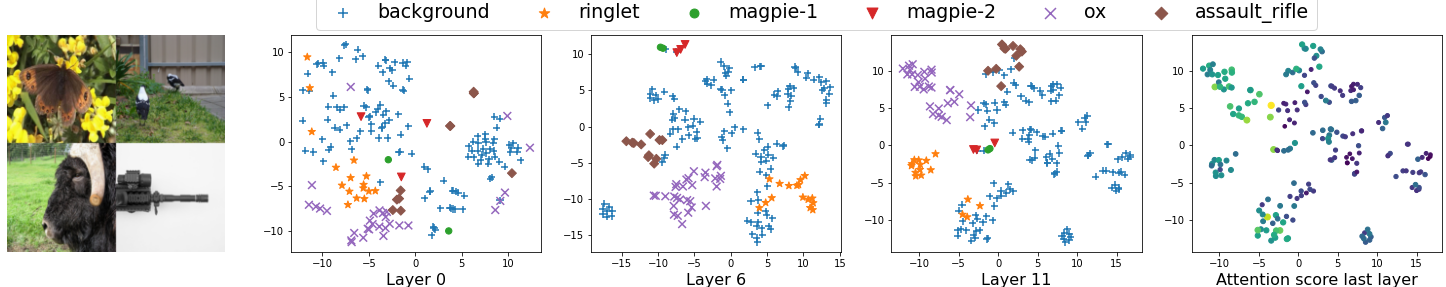

The code that generates the clustering behavior of embeddings is implemented in ViT_embedding_visualization, with full instructions for reproducing the results.