This repository contains an official PyTorch implementation for the paper "Frequency Domain-based Dataset Distillation" in NeurIPS 2023.

Donghyeok Shin *, Seungjae Shin *, and Il-Chul Moon

* Equal contribution

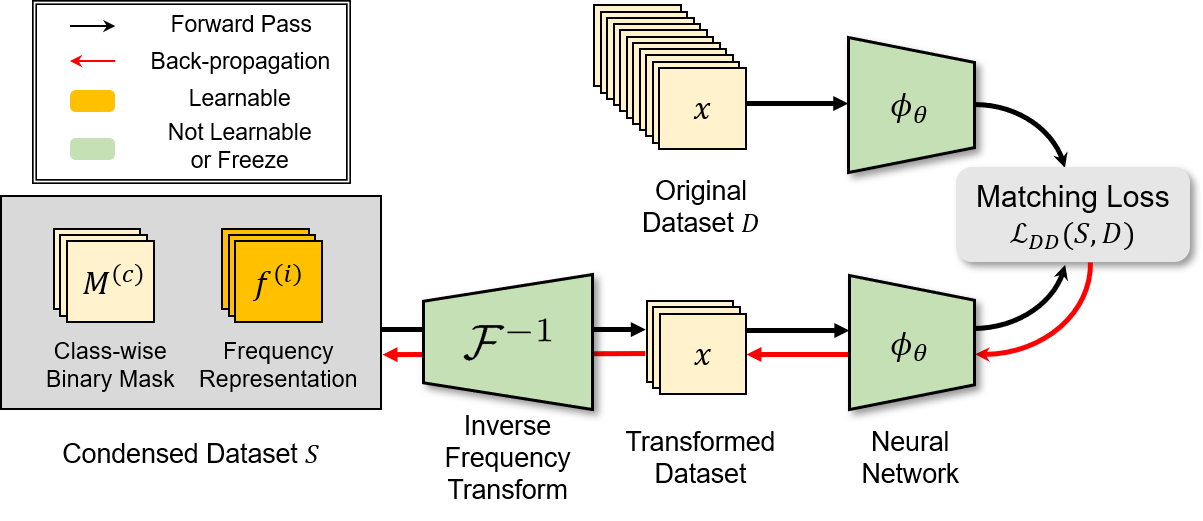

Abstract: This paper presents FreD, a novel parameterization method for dataset distillation, which utilizes the frequency domain to distill a small-sized synthetic dataset from a large-sized original dataset. Unlike conventional approaches that focus on the spatial domain, FreD employs frequency-based transforms to optimize the frequency representations of each data instance. By leveraging the concentration of spatial domain information on specific frequency components, FreD intelligently selects a subset of frequency dimensions for optimization, leading to a significant reduction in the required budget for synthesizing an instance. Through the selection of frequency dimensions based on the explained variance, FreD demonstrates both theoretical and empirical evidence of its ability to operate efficiently within a limited budget, while better preserving the information of the original dataset compared to conventional parameterization methods. Furthermore, based on the orthogonal compatibility of FreD with existing methods, we confirm that FreD consistently improves the performance of existing distillation methods across evaluation scenarios with different benchmark datasets.
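To make the idea of selecting frequency dimensions by explained variance more concrete, here is a minimal NumPy sketch. It is not the repository's implementation: the helper names (`dct_matrix`, `dct2`, `idct2`, `select_by_variance`), the choice of an orthonormal DCT-II, the toy data, and the use of per-dimension variance as a proxy for explained variance are all assumptions made for illustration.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix D, so that D @ x transforms a length-n signal."""
    k = np.arange(n)[:, None]  # frequency index
    i = np.arange(n)[None, :]  # spatial index
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    d[0, :] = np.sqrt(1.0 / n)
    return d

def dct2(images):
    """2D DCT over the last two axes of an array shaped (N, H, W)."""
    dh, dw = dct_matrix(images.shape[-2]), dct_matrix(images.shape[-1])
    return dh @ images @ dw.T

def idct2(coeffs):
    """Inverse of dct2 (the DCT matrices are orthonormal, so the inverse is the transpose)."""
    dh, dw = dct_matrix(coeffs.shape[-2]), dct_matrix(coeffs.shape[-1])
    return dh.T @ coeffs @ dw

def select_by_variance(coeffs, budget):
    """Boolean (H, W) mask keeping the `budget` frequency dimensions with the highest variance."""
    var = coeffs.var(axis=0)
    top = np.argsort(var.ravel())[::-1][:budget]
    mask = np.zeros(var.size, dtype=bool)
    mask[top] = True
    return mask.reshape(var.shape)

# Toy data (assumption): smooth random fields stand in for natural images.
rng = np.random.default_rng(0)
images = rng.standard_normal((256, 16, 16)).cumsum(axis=1).cumsum(axis=2)

coeffs = dct2(images)
mask = select_by_variance(coeffs, budget=32)

# Share of the total variance captured by the selected dimensions.
var = coeffs.var(axis=0)
explained = var[mask].sum() / var.sum()

# Reconstruction from the selected 32 of 256 coefficients per image.
recon = idct2(coeffs * mask)
print(f"kept {mask.sum()} of {mask.size} dimensions, explained variance ratio {explained:.3f}")
```

In this sketch only the masked coefficients would need to be stored and optimized for each instance, which is where the budget reduction described in the abstract comes from.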

This code was tested with CUDA 11.4 and Python 3.8.

pip install -r requirements.txt

The main hyper-parameters of FreD are as follows:

- `msz_per_channel`: Memory size per channel
- `lr_freq`: Learning rate for the synthetic frequency representation
- `mom_freq`: Momentum for the synthetic frequency representation

The specific values of these hyper-parameters can be found in our paper. For other hyper-parameters, we follow the default settings of each dataset distillation objective. Please refer to the bash files for the detailed arguments used to run each experiment.
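As a rough illustration of how `msz_per_channel` trades off against the number of synthetic instances, the sketch below computes how many instances fit into a fixed storage budget. It assumes the budget is counted in stored scalars per channel, that each instance stores exactly `msz_per_channel` frequency coefficients per channel, and that any overhead for storing the selection masks is ignored. The function name `instances_per_class` and the value 64 are hypothetical and are not taken from the paper or the scripts.

```python
def instances_per_class(ipc, height, width, msz_per_channel):
    """Number of frequency-domain instances that fit in the budget of `ipc` spatial images.

    Assumption: one spatial image costs height * width scalars per channel,
    and one frequency-domain instance costs msz_per_channel scalars per channel.
    """
    budget_per_channel = ipc * height * width
    return budget_per_channel // msz_per_channel

# A 32x32 image at 1 img/cls gives 1024 scalars per channel.
# With 64 coefficients per channel, 1024 // 64 = 16 instances fit.
print(instances_per_class(ipc=1, height=32, width=32, msz_per_channel=64))  # 16
```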

Below are some example commands to run FreD with each dataset distillation objective.

- For DC, run the following commands:

cd DC/scripts

sh run_DC_FreD.sh

- For DM, run the following commands:

cd DM/scripts

sh run_DM_FreD.sh

- Since TM needs expert trajectories, run `run_buffer.sh` to generate them before distillation:

cd TM/scripts

sh run_buffer.sh

sh run_TM_FreD.sh

FreD is a highly compatible parameterization method regardless of the dataset distillation objective. Herein, we provide simple guidelines for how to use FreD with different dataset distillation objectives.

# Define the frequency domain-based parameterization

synset = SynSet(args)

# Initialization

synset.init(images_all, labels_all, indices_class)

# Get partial synthetic dataset

images_syn, labels_syn = synset.get(indices=indices)

# Get entire dataset (need_copy is optional)

images_syn, labels_syn = synset.get(need_copy=True)
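The calls above can be mimicked with a small self-contained stand-in. The class below, `ToySynSet`, is hypothetical and is not the repository's `SynSet`: it uses NumPy instead of PyTorch, takes explicit constructor arguments instead of `args`, and keeps a fixed low-frequency square of DCT coefficients rather than selecting dimensions by explained variance. It only illustrates the flow suggested by the interface: initialize from real images, store masked frequency coefficients, and decode to the spatial domain on `get`.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    d[0, :] = np.sqrt(1.0 / n)
    return d

class ToySynSet:
    """Hypothetical stand-in mirroring the init/get calls; not the repository's SynSet."""

    def __init__(self, per_class, msz_per_channel):
        self.per_class = per_class
        self.msz_per_channel = msz_per_channel

    def init(self, images_all, labels_all, indices_class):
        # images_all has shape (N, C, H, W); indices_class[c] lists the indices of class c.
        _, _, h, w = images_all.shape
        self.dh, self.dw = dct_matrix(h), dct_matrix(w)
        # Assumption: keep a low-frequency square of side sqrt(msz_per_channel).
        side = int(np.sqrt(self.msz_per_channel))
        self.mask = np.zeros((h, w), dtype=bool)
        self.mask[:side, :side] = True
        # Initialize from the first `per_class` real images of every class.
        chosen = np.concatenate([idx[: self.per_class] for idx in indices_class])
        self.freq = (self.dh @ images_all[chosen] @ self.dw.T) * self.mask
        self.labels = labels_all[chosen].copy()

    def get(self, indices=None, need_copy=False):
        if indices is None:
            indices = np.arange(len(self.labels))
        # Decode the masked frequency coefficients back to the spatial domain.
        images = self.dh.T @ (self.freq[indices] * self.mask) @ self.dw
        labels = self.labels[indices]
        if need_copy:
            # In this NumPy sketch the arrays are already independent; copy for symmetry.
            images, labels = images.copy(), labels.copy()
        return images, labels

# Usage with toy data: 2 classes, 10 images each, 3 channels, 8x8 resolution.
rng = np.random.default_rng(0)
images_all = rng.standard_normal((20, 3, 8, 8))
labels_all = np.repeat(np.arange(2), 10)
indices_class = [list(np.flatnonzero(labels_all == c)) for c in range(2)]

synset = ToySynSet(per_class=4, msz_per_channel=16)
synset.init(images_all, labels_all, indices_class)
images_syn, labels_syn = synset.get(indices=[0, 1])
images_full, labels_full = synset.get(need_copy=True)
```

In an actual distillation loop the stored frequency coefficients, rather than the decoded images, would be the trainable parameters, with the loss of the chosen objective (DC, DM, or TM) computed on the decoded images.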

- For the ImageNet-[A, B, C, D, E] experiments, we build upon GLaD's official code.

- Run the following command with the appropriate objective in place of `XXX`:
  - If you want to run FreD with TM, run `run_buffer.sh` first, as above.

cd ImageNet-abcde/scripts

sh run_XXX_FreD.sh

- Download the 3D-MNIST dataset.

- Run the following command:

cd 3D-MNIST/scripts

sh run_DM_FreD.sh

- Download the corrupted datasets: CIFAR-10.1, CIFAR-10-C, and ImageNet-C.

- Place the trained synthetic dataset at `corruption-exp/trained_synset/FreD/{dataset_name}`.

- Run the following command:

cd corruption-exp/scripts

sh run.sh

- Test accuracies (%) on low-dimensional datasets (≤ 64×64 resolution) with TM.

| | MNIST | FashionMNIST | SVHN | CIFAR-10 | CIFAR-100 | Tiny-ImageNet |
|---|---|---|---|---|---|---|
| 1 img/cls | 95.8 | 84.6 | 82.2 | 60.6 | 34.6 | 19.2 |
| 10 img/cls | 97.6 | 89.1 | 89.5 | 70.3 | 42.7 | 24.2 |
| 50 img/cls | - | - | 90.3 | 75.8 | 47.8 | 26.4 |

- Test accuracies (%) on Image-[Nette, Woof, Fruit, Yellow, Meow, Squawk] (128 × 128 resolution) with TM.

| | ImageNette | ImageWoof | ImageFruit | ImageYellow | ImageMeow | ImageSquawk |
|---|---|---|---|---|---|---|
| 1 img/cls | 66.8 | 38.3 | 43.7 | 63.2 | 43.2 | 57.0 |
| 10 img/cls | 72.0 | 41.3 | 47.0 | 69.2 | 48.6 | 67.3 |

- Test accuracies (%) on ImageNet-[A, B, C, D, E] (128 × 128 resolution) with DC, DM, and TM under 1 img/cls.

| | ImageNet-A | ImageNet-B | ImageNet-C | ImageNet-D | ImageNet-E |
|---|---|---|---|---|---|
| DC w/ FreD | 53.1 | 54.8 | 54.2 | 42.8 | 41.0 |
| DM w/ FreD | 58.0 | 58.6 | 55.6 | 46.3 | 45.0 |
| TM w/ FreD | 67.7 | 69.3 | 63.6 | 54.4 | 55.4 |

More results can be found in our paper.

If you find the code useful for your research, please consider citing our paper.

@inproceedings{shin2023frequency,

title={Frequency Domain-Based Dataset Distillation},

author={Shin, DongHyeok and Shin, Seungjae and Moon, Il-chul},

booktitle={Thirty-seventh Conference on Neural Information Processing Systems},

year={2023}

}

This work is heavily built upon the code from:

- Bo Zhao, Konda Reddy Mopuri, and Hakan Bilen. Dataset condensation with gradient matching. arXiv preprint arXiv:2006.05929, 2020.

- Bo Zhao and Hakan Bilen. Dataset condensation with distribution matching. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 6514–6523, 2023.

- George Cazenavette, Tongzhou Wang, Antonio Torralba, Alexei A Efros, and Jun-Yan Zhu. Dataset distillation by matching training trajectories. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4750–4759, 2022.

- George Cazenavette, Tongzhou Wang, Antonio Torralba, Alexei A Efros, and Jun-Yan Zhu. Generalizing dataset distillation via deep generative prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3739–3748, 2023.