Jiaxiang Cheng, Pan Xie*, Xin Xia, Jiashi Li, Jie Wu, Yuxi Ren, Huixia Li, Xuefeng Xiao, Min Zheng, Lean Fu (*Corresponding author)

AutoML, ByteDance Inc.

⭐ If ResAdapter is helpful to your images or projects, please help star this repo. Thanks! 🤗

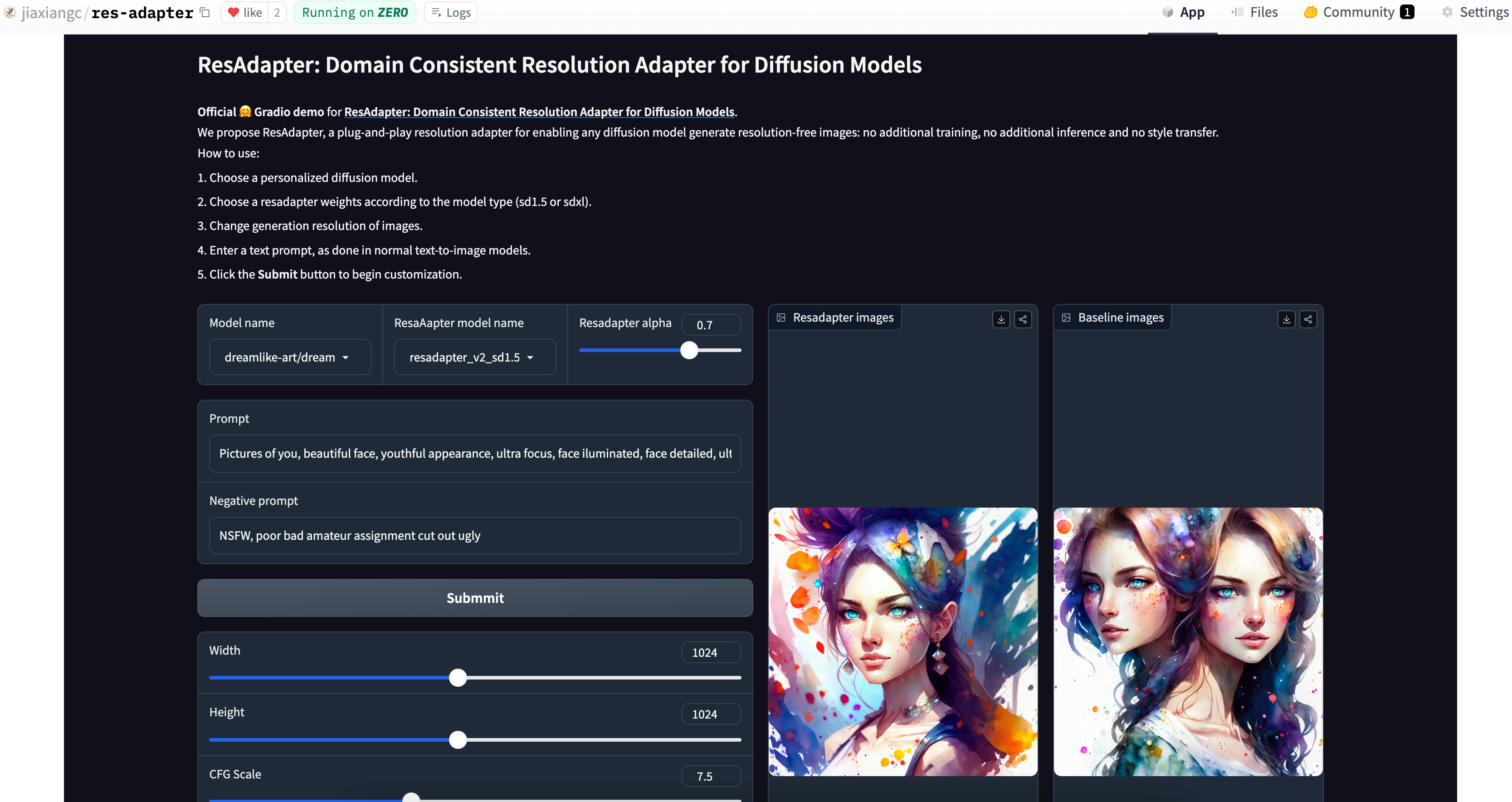

We propose ResAdapter, a plug-and-play resolution adapter that enables any diffusion model to generate resolution-free images: no additional training, no additional inference, and no style transfer.

Comparison examples between resadapter and dreamlike-diffusion-1.0.


- [2024/04/07] 🔥 We release the official gradio space on Huggingface.
- [2024/04/05] 🔥 We release the resadapter_v2 weights.
- [2024/03/30] 🔥 We release the ComfyUI-ResAdapter.
- [2024/03/28] 🔥 We release the resadapter_v1 weights.
- [2024/03/04] 🔥 We release the arxiv paper.

We provide standalone example code to help you quickly use resadapter with diffusion models.

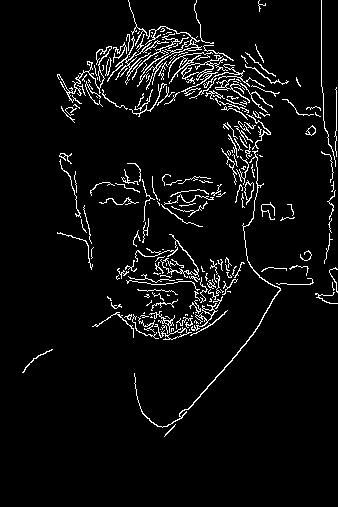

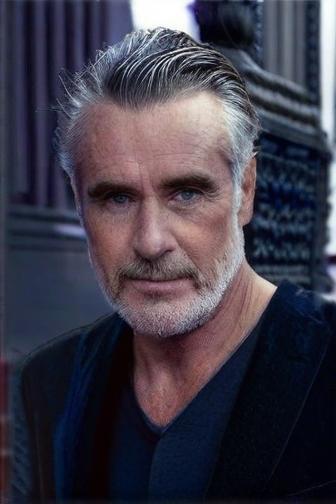

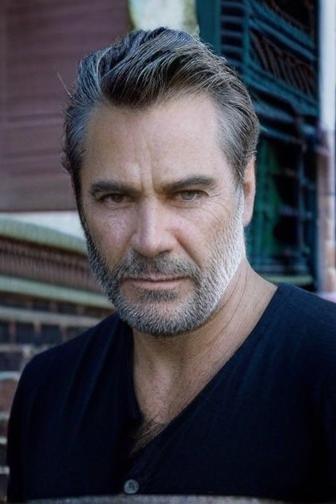

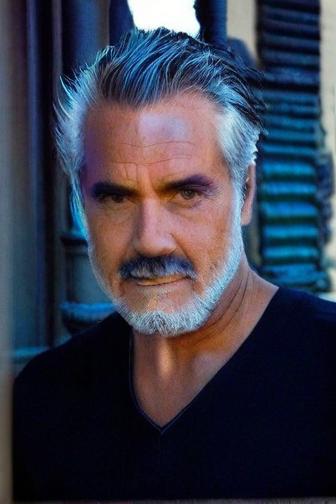

Comparison examples (640x384) between resadapter and dreamshaper-xl-1.0. Top: with resadapter. Bottom: without resadapter.

# pip install diffusers transformers accelerate safetensors huggingface_hub peft torchvision
import torch
from torchvision.utils import save_image
from safetensors.torch import load_file
from huggingface_hub import hf_hub_download
from diffusers import AutoPipelineForText2Image, DPMSolverMultistepScheduler

prompt = "portrait photo of muscular bearded guy in a worn mech suit, light bokeh, intricate, steel metal, elegant, sharp focus, soft lighting, vibrant colors"
width, height = 640, 384

# Load baseline pipe
model_name = "lykon-models/dreamshaper-xl-1-0"
pipe = AutoPipelineForText2Image.from_pretrained(model_name, torch_dtype=torch.float16, variant="fp16").to("cuda")
pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config, use_karras_sigmas=True, algorithm_type="sde-dpmsolver++")

# Inference baseline pipe (seeded so that both runs start from the same noise)
image = pipe(prompt, width=width, height=height, num_inference_steps=25, num_images_per_prompt=4, generator=torch.manual_seed(0), output_type="pt").images
save_image(image, "image_baseline.png", normalize=True, padding=0)

# Load resadapter for baseline
resadapter_model_name = "resadapter_v1_sdxl"
pipe.load_lora_weights(
    hf_hub_download(repo_id="jiaxiangc/res-adapter", subfolder=resadapter_model_name, filename="pytorch_lora_weights.safetensors"),
    adapter_name="res_adapter",
)  # load lora weights (requires peft)
pipe.set_adapters(["res_adapter"], adapter_weights=[1.0])
pipe.unet.load_state_dict(
    load_file(hf_hub_download(repo_id="jiaxiangc/res-adapter", subfolder=resadapter_model_name, filename="diffusion_pytorch_model.safetensors")),
    strict=False,
)  # load norm weights

# Inference resadapter pipe (same seed as the baseline)
image = pipe(prompt, width=width, height=height, num_inference_steps=25, num_images_per_prompt=4, generator=torch.manual_seed(0), output_type="pt").images
save_image(image, "image_resadapter.png", normalize=True, padding=0)

We have released all resadapter weights; you can download them from Huggingface. The following is our resadapter model card:

| Models | Parameters | Resolution Range | Ratio Range | Links |
|---|---|---|---|---|
| resadapter_v2_sd1.5 | 0.9M | 128 <= x <= 1024 | 0.28 <= r <= 3.5 | Download |
| resadapter_v2_sdxl | 0.5M | 256 <= x <= 1536 | 0.28 <= r <= 3.5 | Download |
| resadapter_v1_sd1.5 | 0.9M | 128 <= x <= 1024 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sd1.5_extrapolation | 0.9M | 512 <= x <= 1024 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sd1.5_interpolation | 0.9M | 128 <= x <= 512 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sdxl | 0.5M | 256 <= x <= 1536 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sdxl_extrapolation | 0.5M | 1024 <= x <= 1536 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sdxl_interpolation | 0.5M | 256 <= x <= 1024 | 0.5 <= r <= 2 | Download |
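The ranges in the model card can be checked programmatically before picking a checkpoint. Below is a minimal sketch that is not part of the resadapter codebase: `CardEntry`, `MODEL_CARD`, and `supported_models` are hypothetical names. It assumes that both width and height must fall within the resolution range x and that the ratio r is width / height (the ratio ranges are roughly symmetric, since 0.28 ≈ 1/3.5 and 0.5 = 1/2, so height / width gives nearly the same result).

```python
from __future__ import annotations

from typing import NamedTuple


class CardEntry(NamedTuple):
    name: str
    base: str          # "sd1.5" or "sdxl"
    min_side: int      # lower bound of the "Resolution Range" column
    max_side: int      # upper bound of the "Resolution Range" column
    min_ratio: float   # lower bound of the "Ratio Range" column
    max_ratio: float   # upper bound of the "Ratio Range" column


# Values transcribed from the model card table.
MODEL_CARD = [
    CardEntry("resadapter_v2_sd1.5", "sd1.5", 128, 1024, 0.28, 3.5),
    CardEntry("resadapter_v2_sdxl", "sdxl", 256, 1536, 0.28, 3.5),
    CardEntry("resadapter_v1_sd1.5", "sd1.5", 128, 1024, 0.5, 2.0),
    CardEntry("resadapter_v1_sd1.5_extrapolation", "sd1.5", 512, 1024, 0.5, 2.0),
    CardEntry("resadapter_v1_sd1.5_interpolation", "sd1.5", 128, 512, 0.5, 2.0),
    CardEntry("resadapter_v1_sdxl", "sdxl", 256, 1536, 0.5, 2.0),
    CardEntry("resadapter_v1_sdxl_extrapolation", "sdxl", 1024, 1536, 0.5, 2.0),
    CardEntry("resadapter_v1_sdxl_interpolation", "sdxl", 256, 1024, 0.5, 2.0),
]


def supported_models(width: int, height: int, base: str) -> list[str]:
    """List card entries for `base` whose ranges cover a width x height image.

    Assumption: both sides must lie in the resolution range, and r = width / height.
    """
    ratio = width / height
    return [
        entry.name
        for entry in MODEL_CARD
        if entry.base == base
        and entry.min_side <= width <= entry.max_side
        and entry.min_side <= height <= entry.max_side
        and entry.min_ratio <= ratio <= entry.max_ratio
    ]


print(supported_models(640, 384, "sdxl"))
```

Treat an empty result as a hint rather than a hard limit: some comparison examples in this README, such as 960x1104 with dreamshaper-7, use sides above 1024 on an SD1.5 model.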

Hint 1: We updated the resadapter naming format to follow ControlNet's convention.

Hint 2: If you want to use resadapter with personalized diffusion models, download them from CivitAI.

Hint 3: If you want to use resadapter with ip-adapter, controlnet and lcm-lora, download them from Huggingface.

Hint 4: Here is an installation guide for preparing the environment and downloading models.

To generate images with our inference script, install the dependencies and download the related models following the installation guide. After filling in the example configs, you can run the script directly.

python main.py --config /path/to/file

Comparison examples (960x1104) between resadapter and dreamshaper-7. Top: with resadapter. Bottom: without resadapter.

Comparison examples (840x1264) between resadapter and lllyasviel/sd-controlnet-canny. Top: with resadapter, bottom: without resadapter.

Comparison examples (336x504) between resadapter and diffusers/controlnet-canny-sdxl-1.0. Top: with resadapter, bottom: without resadapter.

Comparison examples (864x1024) between resadapter and h94/IP-Adapter. Top: with resadapter, bottom: without resadapter.

Comparison examples (512x512) between resadapter and dreamshaper-xl-1.0 with lcm-sdxl-lora. Top: with resadapter, bottom: without resadapter.

- Replicate website: bytedance/res-adapter by (@Chenxi)

- Huggingface space:
  - jiaxiangc/res-adapter (official space)
  - ameerazam08/Res-Adapter-GPU-Demo by (@Ameer Azam)

A text-to-image example of res-adapter in a Huggingface space. More information is available in jiaxiangc/res-adapter.

- jiaxiangc/ComfyUI-ResAdapter (official comfyui node)

- blepping/ComfyUI-ApplyResAdapterUnet by (@blepping)

A text-to-image example with ComfyUI-ResAdapter. More examples with lcm-lora, controlnet and ipadapter can be found in ComfyUI-ResAdapter.


I am learning how to make a WebUI extension.

Run the following script:

# pip install peft gradio httpx==0.23.3
python app.py

- If you are not satisfied with interpolated images, try increasing the resadapter alpha to 1.0.

- If you are not satisfied with extrapolated images, try setting the resadapter alpha between 0.3 and 0.7.

- If you see style conflicts in the images, try decreasing the resadapter alpha.

- If resadapter is not compatible with other acceleration LoRAs, try decreasing the resadapter alpha to 0.5 ~ 0.7.
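The tips above can be condensed into a starting value for alpha. This is a minimal sketch, not an official heuristic: `suggest_alpha` and `NATIVE_RESOLUTION` are hypothetical names. It assumes that an image counts as interpolation when its longer side does not exceed the base model's native resolution (512 for SD1.5, 1024 for SDXL, which also matches the interpolation/extrapolation boundaries in the model card), and it leaves style conflicts to manual tuning.

```python
# Native resolutions of the base models; assumed here to mark the
# interpolation/extrapolation boundary (consistent with the model card).
NATIVE_RESOLUTION = {"sd1.5": 512, "sdxl": 1024}


def suggest_alpha(width: int, height: int, base: str, with_acceleration_lora: bool = False) -> float:
    """Hypothetical helper mapping the alpha tips to a starting value."""
    native = NATIVE_RESOLUTION[base]
    if max(width, height) <= native:
        alpha = 1.0  # interpolation: tip suggests raising alpha to 1.0
    else:
        alpha = 0.5  # extrapolation: midpoint of the suggested 0.3 ~ 0.7 range
    if with_acceleration_lora:
        alpha = min(alpha, 0.7)  # tip: keep alpha within 0.5 ~ 0.7 next to an acceleration LoRA
    return alpha


print(suggest_alpha(640, 384, "sdxl"))
```

Assuming the alpha in these tips is the LoRA weight passed to `set_adapters` in the standalone example, the value could be applied with `pipe.set_adapters(["res_adapter"], adapter_weights=[suggest_alpha(width, height, "sdxl")])`. Note that `adapter_weights` only scales the LoRA weights; the normalization weights loaded with `pipe.unet.load_state_dict(...)` are copied in unscaled.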

- ResAdapter is developed by the AutoML Team at ByteDance Inc. All copyrights reserved.

- Thanks to the HuggingFace gradio team for their free GPU support!

- Thanks to IP-Adapter, ControlNet, and LCM-LoRA for their nice work.

- Thanks to @Chenxi and @AMEERAZAM08 for providing gradio demos.

- Thanks to @fengyuzzz for supporting video demos in ComfyUI-ResAdapter.

If you find ResAdapter useful for your research and applications, please cite us using this BibTeX:

@article{cheng2024resadapter,
  title={ResAdapter: Domain Consistent Resolution Adapter for Diffusion Models},
  author={Cheng, Jiaxiang and Xie, Pan and Xia, Xin and Li, Jiashi and Wu, Jie and Ren, Yuxi and Li, Huixia and Xiao, Xuefeng and Zheng, Min and Fu, Lean},
  journal={arXiv preprint arXiv:2403.02084},
  year={2024}
}

For any questions, please feel free to contact us at chengjiaxiang@bytedance.com or xiepan.01@bytedance.com.