The source code and models of *Natural Image Matting via Guided Contextual Attention*, which will appear in AAAI-20.

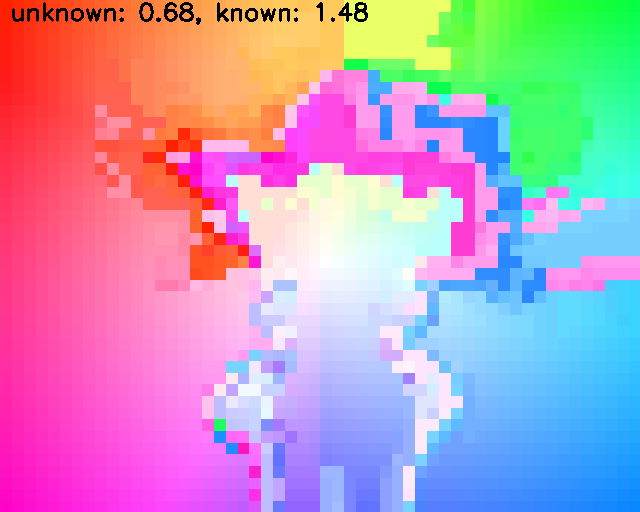

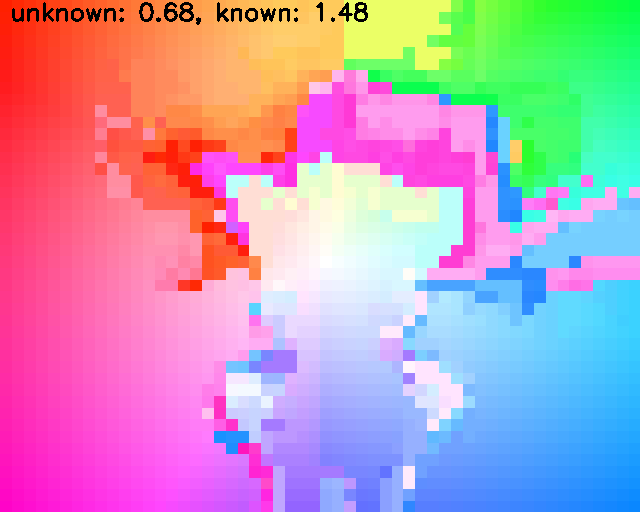

Matting results on test data from alphamatting.com with trimap-user.

Required packages:

- torch >= 1.1
- tensorboardX
- numpy
- opencv-python
- toml
- easydict
- pprint

GPU memory >= 8GB for inference on the Adobe Composition-1K testing set.

The models pretrained on the Adobe Image Matting Dataset are covered by the Adobe Deep Image Matting Dataset License Agreement and can only be used and distributed for noncommercial purposes.

| Model Name | Training Data | File Size | MSE | SAD | Grad | Conn |
|---|---|---|---|---|---|---|
| ResNet34_En_nomixup | ILSVRC 2012 | 166 MB | N/A | N/A | N/A | N/A |
| gca-dist | Adobe Matting Dataset | 96.5 MB | 0.0091 | 35.28 | 16.92 | 32.53 |
| gca-dist-all-data | Adobe Matting Dataset + Composition-1K | 96.5 MB | - | - | - | - |

- ResNet34_En_nomixup: Model of the customized ResNet-34 backbone trained on ImageNet. Save to `./pretrain/`. The training code of ResNet34_En_nomixup and more variants will be released as an independent repository later. You need this checkpoint only if you want to train your own matting model.
- gca-dist: Model of the GCA Matting in Table 2 of the paper. Save to `./checkpoints/gca-dist/`.
- gca-dist-all-data: Model of the GCA Matting trained on both the Adobe Image Matting Dataset and the Composition-1K testing set for the alphamatting.com online benchmark. Save to `./checkpoints/gca-dist-all-data/`.

(We removed the optimizer `state_dict` from gca-dist.pth and gca-dist-all-data.pth to save space, so you cannot resume training from these two models.)

```bash
python demo.py \
  --config=config/gca-dist-all-data.toml \
  --checkpoint=checkpoints/gca-dist-all-data/gca-dist-all-data.pth \
  --image-dir=demo/input_lowres \
  --trimap-dir=demo/trimap_lowres/Trimap3 \
  --output=demo/pred/Trimap3/
```

This will load the configuration from `config` and save the predictions in `output/config_checkpoint/*`. You can reproduce our alphamatting.com submission with this command.

Since each ground truth alpha image in Composition-1K is shared by 20 merged images, we first copy and rename these alpha images to have the same name as their trimaps.
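For context, each merged image in Composition-1K is one foreground composited over one background with the standard compositing equation I = αF + (1 − α)B, which is why a single alpha matte is the ground truth for all 20 merged images of the same foreground. The NumPy sketch below only illustrates this relation; the function name `composite` and the toy array sizes are assumptions for illustration, not code from this repository.

```python
import numpy as np


def composite(fg: np.ndarray, bg: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Blend a foreground over a background: I = alpha * F + (1 - alpha) * B.

    fg, bg: float arrays of shape (H, W, 3) with values in [0, 1].
    alpha:  float array of shape (H, W) with values in [0, 1].
    """
    a = alpha[..., None]  # broadcast the matte over the colour channels
    return a * fg + (1.0 - a) * bg


# One foreground and its alpha matte composited onto 20 different backgrounds
# gives 20 merged images that all share the same ground-truth alpha.
rng = np.random.default_rng(0)
fg = rng.random((4, 4, 3))
alpha = rng.random((4, 4))
backgrounds = [rng.random((4, 4, 3)) for _ in range(20)]
merged = [composite(fg, bg, alpha) for bg in backgrounds]
```

Where alpha is 1 the merged pixel is pure foreground, and where it is 0 it is pure background; only the blend in between changes from one background to the next.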

If your ground-truth images are in `./Combined_Dataset/Test_set/Adobe-licensed images/alpha`, run the following command:

```bash
./copy_testing_alpha.sh Combined_Dataset/Test_set/Adobe-licensed\ images
```

New alpha images will be generated in `Combined_Dataset/Test_set/Adobe-licensed images/alpha_copy`.
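If you cannot run the shell script (for example on Windows), the copying step can be sketched in Python as below. The output naming `<alpha stem>_<k>` with k = 0..19 is an assumption made for illustration; check the names of your trimap files and adjust the pattern so that each copied alpha matches its trimap. The function `copy_alpha_per_trimap` is hypothetical and not part of this repository.

```python
import shutil
import tempfile
from pathlib import Path


def copy_alpha_per_trimap(alpha_dir: Path, out_dir: Path, copies: int = 20) -> list[Path]:
    """Copy each ground-truth alpha once per merged image that shares it.

    ASSUMPTION (hypothetical naming): the trimap of the k-th composite of
    foreground `<stem>` is named `<stem>_<k><suffix>`.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for alpha_path in sorted(p for p in alpha_dir.iterdir() if p.is_file()):
        for k in range(copies):
            target = out_dir / f"{alpha_path.stem}_{k}{alpha_path.suffix}"
            shutil.copyfile(alpha_path, target)
            written.append(target)
    return written


# Usage on a throwaway directory with one fake alpha file.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "alpha").mkdir()
    (root / "alpha" / "example.png").write_bytes(b"placeholder bytes")
    copied = copy_alpha_per_trimap(root / "alpha", root / "alpha_copy")
    copied_names = sorted(p.name for p in copied)
```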

TOML files in `./config/` are used as configurations. You can find their definitions and options in `./utils/config.py`.

Default training requires 4 GPUs with 11 GB of memory each and uses a batch size of 10 per GPU. First, set your training and validation data paths in the configuration file; the dataloader will merge the training images on the fly:

```toml
[data]
train_fg = ""
train_alpha = ""
train_bg = ""
test_merged = ""
test_alpha = ""
test_trimap = ""
```

You can train the model by running

```bash
./train.sh
```

or

```bash
OMP_NUM_THREADS=2 python -m torch.distributed.launch \
  --nproc_per_node=4 main.py \
  --config=config/gca-dist.toml
```

For single-GPU training, set `dist = false` in your `*.toml` file and run

```bash
python main.py --config=config/*.toml
```

To evaluate our model or your own model on Composition-1K, set the paths of the Composition-1K testing set and the model name in the configuration file `*.toml`:

```toml
[test]
merged = "./data/test/merged"
alpha = "./data/test/alpha_copy"
trimap = "./data/test/trimap"
# this will load ./checkpoint/*/gca-dist.pth
checkpoint = "gca-dist"
```

and run the command:

```bash
./test.sh
```

or

```bash
python main.py \
  --config=config/gca-dist.toml \
  --phase=test
```

The predictions will be saved to `./prediction` by default, and you can evaluate the results with the MATLAB file `./DIM_evaluation_code/evaluate.m`, whose evaluation functions are provided by Deep Image Matting.

Please do not report the quantitative results calculated by our Python code, such as `./utils/evaluate.py` or `test.sh`, in your paper or project. Our reimplementations of the Grad and Conn metrics are not exactly the same as the MATLAB version.
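For quick sanity checks during development only, SAD and MSE can be approximated as below. This is a minimal NumPy sketch assuming the usual Deep Image Matting conventions as we understand them (alphas scaled to [0, 1], errors computed only where the trimap equals 128, SAD divided by 1000); the function name `sad_mse` is hypothetical and not part of this repository. Grad and Conn are deliberately omitted, and the MATLAB code remains the reference for any numbers you report.

```python
import numpy as np


def sad_mse(pred: np.ndarray, gt: np.ndarray, trimap: np.ndarray) -> tuple[float, float]:
    """Approximate SAD and MSE over the unknown trimap region.

    pred, gt: uint8 alpha mattes in [0, 255].
    trimap:   uint8 array where 128 marks the unknown region (assumed convention).
    SAD is divided by 1000, as is customary for Composition-1K tables.
    """
    unknown = trimap == 128
    diff = (pred.astype(np.float64) - gt.astype(np.float64)) / 255.0
    sad = float(np.abs(diff[unknown]).sum() / 1000.0)
    mse = float((diff[unknown] ** 2).sum() / max(int(unknown.sum()), 1))
    return sad, mse


# Toy example: two unknown pixels, each with the maximum possible error.
pred = np.full((2, 2), 255, dtype=np.uint8)
gt = np.zeros((2, 2), dtype=np.uint8)
trimap = np.array([[128, 128], [0, 255]], dtype=np.uint8)
sad, mse = sad_mse(pred, gt, trimap)
```

Pixels marked as known foreground (255) or background (0) in the trimap are excluded, so in the toy example only the top row contributes to the error.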