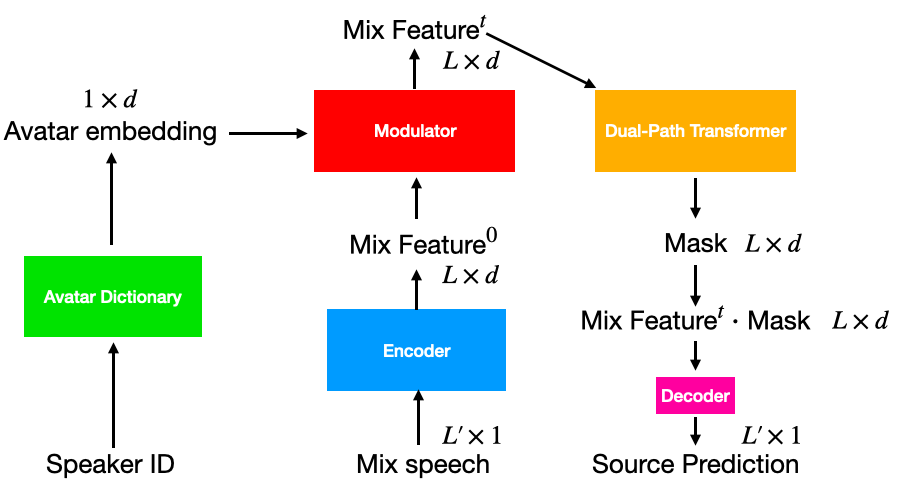

The model is based on the Dual-path Transformer. We add a modulator that incorporates speaker information, which yields personalized speech models. An overview of the model architecture is shown in the figure above.
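To make the role of a modulator more concrete, here is a minimal sketch of one common way to condition features on a speaker embedding: feature-wise scaling and shifting (often called FiLM). This is an assumption for illustration only. The text above does not say which mechanism the modulator actually uses, and the names `linear`, `modulate`, and all weight parameters are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def linear(x: Sequence[float],
           weight: Sequence[Sequence[float]],
           bias: Sequence[float]) -> Vector:
    """Compute weight @ x + bias for a single input vector x."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def modulate(features: Sequence[Sequence[float]],
             speaker_embedding: Sequence[float],
             scale_w: Sequence[Sequence[float]],
             scale_b: Sequence[float],
             shift_w: Sequence[Sequence[float]],
             shift_b: Sequence[float]) -> List[Vector]:
    """FiLM-style modulation (illustrative assumption, not the repo's code).

    features: one vector of channels per time frame.
    The speaker embedding is projected to a per-channel scale (gamma)
    and shift (beta), which are applied to every frame.
    """
    gamma = linear(speaker_embedding, scale_w, scale_b)
    beta = linear(speaker_embedding, shift_w, shift_b)
    return [[g * f + b for f, g, b in zip(frame, gamma, beta)]
            for frame in features]
```

In a sketch like this, the same separation backbone can serve different speakers because only `gamma` and `beta` change with the speaker embedding.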

- Download the Avatar10Mix2 dataset, which contains audio recorded from 10 speakers:

      cd datasets
      sh download_avatar10mix2.sh
      cd ..

- Install dependencies:

      pip install -r requirements.txt

The training and testing code for separating speech from ambient noise is provided in `speech_vs_ambient`.

Change to the `speech_vs_ambient` directory and run the following commands:

- Training

      python train.py --exp_dir exp/speech_vs_ambient

- Testing

      python eval.py --exp_dir exp/speech_vs_ambient

We provide a simple webpage to review good test examples, which can be found at `exp/speech_vs_ambient/vis/examples/`.

The training curves are logged with TensorBoard. To view them, run

    tensorboard --logdir exp/speech_vs_ambient/lightning_logs/
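The commands and output locations above all derive from a single experiment directory. The sketch below collects them in one place, which may help when scripting runs. It assumes that any other value passed to `--exp_dir` produces the same `vis/examples` and `lightning_logs` subdirectories as `exp/speech_vs_ambient`; the helper names `experiment_layout`, `run_commands`, and `tensorboard_command` are hypothetical and not part of the repository.

```python
from pathlib import PurePosixPath
from typing import Dict, List


def experiment_layout(exp_dir: str) -> Dict[str, str]:
    """Return the output locations under an experiment directory.

    Assumption: the layout matches the one documented for
    exp/speech_vs_ambient.
    """
    root = PurePosixPath(exp_dir)
    return {
        "examples": str(root / "vis" / "examples"),
        "tensorboard_logs": str(root / "lightning_logs"),
    }


def run_commands(exp_dir: str) -> List[List[str]]:
    """Build the training and testing commands as argument lists."""
    return [
        ["python", "train.py", "--exp_dir", exp_dir],
        ["python", "eval.py", "--exp_dir", exp_dir],
    ]


def tensorboard_command(exp_dir: str) -> List[str]:
    """Build the TensorBoard command for the experiment's logs."""
    logs = experiment_layout(exp_dir)["tensorboard_logs"]
    return ["tensorboard", "--logdir", logs]
```

Each argument list can be passed to `subprocess.run(..., check=True)` from inside the `speech_vs_ambient` directory, so that a failed training run stops the script before testing starts.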