A PyTorch implementation of the paper "Improved Training of Wasserstein GANs".
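The central idea of the paper is to replace weight clipping with a gradient penalty on the critic D. For a real sample x, a generated sample x̃ and ε ~ U[0, 1], the critic is evaluated at the interpolate x̂ = εx + (1 − ε)x̃, and λ·(‖∇D(x̂)‖₂ − 1)² is added to the critic loss (the paper uses λ = 10). The sketch below is a dependency-free illustration of that penalty, not the code in this repository: it swaps PyTorch autograd for central finite differences, and the names `gradient_penalty` and `numerical_grad` are hypothetical.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

Critic = Callable[[Sequence[float]], float]


def numerical_grad(f: Critic, x: Sequence[float], h: float = 1e-5) -> List[float]:
    """Central finite-difference estimate of grad f(x); a stand-in for autograd."""
    grad = []
    for i in range(len(x)):
        plus, minus = list(x), list(x)
        plus[i] += h
        minus[i] -= h
        grad.append((f(plus) - f(minus)) / (2 * h))
    return grad


def gradient_penalty(critic: Critic,
                     real: Sequence[Sequence[float]],
                     fake: Sequence[Sequence[float]],
                     lam: float = 10.0,
                     rng: Optional[random.Random] = None) -> float:
    """Mean of lam * (||grad critic(x_hat)||_2 - 1)^2 over paired real/fake samples."""
    rng = rng or random.Random()
    total = 0.0
    for x, x_tilde in zip(real, fake):
        eps = rng.random()  # one epsilon ~ U[0, 1] per sample pair
        # Random point on the straight line between the real and the generated sample.
        x_hat = [eps * a + (1.0 - eps) * b for a, b in zip(x, x_tilde)]
        g = numerical_grad(critic, x_hat)
        norm = math.sqrt(sum(v * v for v in g))
        total += lam * (norm - 1.0) ** 2
    return total / len(real)
```

As a sanity check, the linear critic D(x) = 3x₀ + 4x₁ has gradient norm 5 everywhere, so its penalty is 10·(5 − 1)² = 160 for any ε, while a critic with unit gradient norm, such as D(x) = 0.6x₀ + 0.8x₁, is not penalized at all. In a real training loop the gradient would come from autograd so that the penalty itself can be backpropagated.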

- Python, NumPy, SciPy, Matplotlib
- A recent NVIDIA GPU
- The latest master version of PyTorch

- gan_toy.py: Toy datasets (8 Gaussians, 25 Gaussians, Swiss Roll). Finished in 2017.5.8.
- gan_language.py: Character-level language model (the discriminator and the generator both use nn.Conv1d). Finished in 2017.6.23 and 2017.6.27.
- gan_mnist.py: MNIST (the discriminator and the generator both use nn.Conv1d). Finished in 2017.6.26; running results are shown below.
- gan_64x64.py: 64x64 architectures (looking forward to your pull request).
- gan_cifar.py: CIFAR-10 (great thanks to robotcator).
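The 8 Gaussians toy dataset is a mixture of eight narrow Gaussians whose means are evenly spaced on a circle. Below is a minimal pure-Python sampler for it. The radius 2, the standard deviation 0.02 and the final division by 1.414 are assumptions modeled on igul222/improved_wgan_training, not values read from gan_toy.py, and `sample_8gaussians` is a hypothetical name.

```python
import math
import random
from typing import List, Optional, Tuple


def sample_8gaussians(batch_size: int,
                      scale: float = 2.0,
                      std: float = 0.02,
                      rng: Optional[random.Random] = None) -> List[Tuple[float, float]]:
    """Draw batch_size 2-D points from 8 Gaussians centred on a circle of radius `scale`."""
    rng = rng or random.Random()
    # Eight means at angles 0, 45, ..., 315 degrees.
    centers = [(scale * math.cos(k * math.pi / 4), scale * math.sin(k * math.pi / 4))
               for k in range(8)]
    batch = []
    for _ in range(batch_size):
        cx, cy = rng.choice(centers)  # pick a mixture component uniformly
        x = cx + rng.gauss(0.0, std)
        y = cy + rng.gauss(0.0, std)
        # Assumed normalisation: shrink the whole dataset by a constant factor.
        batch.append((x / 1.414, y / 1.414))
    return batch
```

Each call returns a fresh batch, so a training loop can simply call it once per critic or generator step; with such a small standard deviation, a generator that only covers some of the eight modes is easy to spot in a scatter plot.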

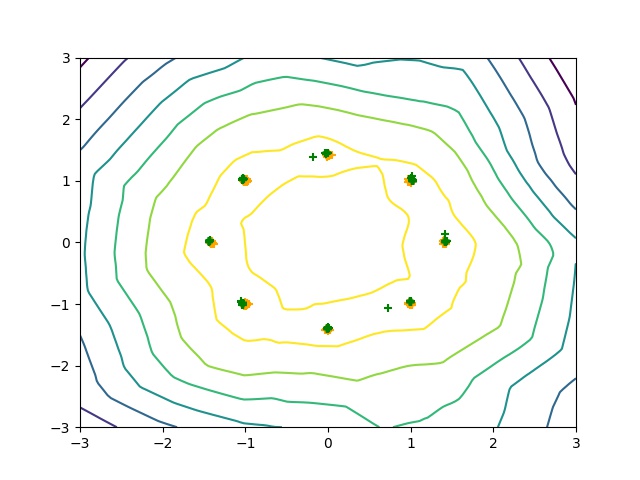

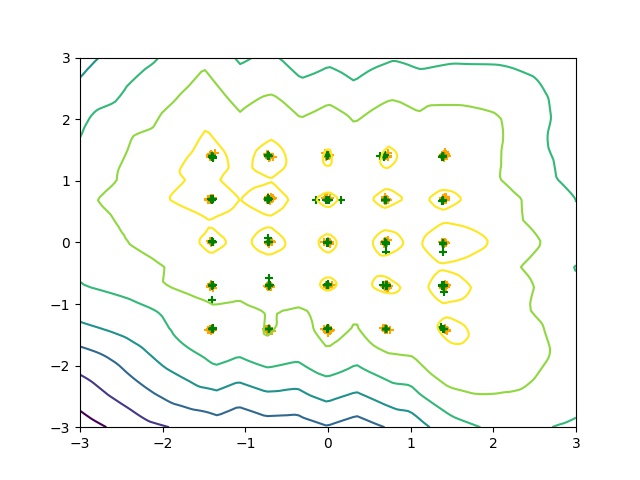

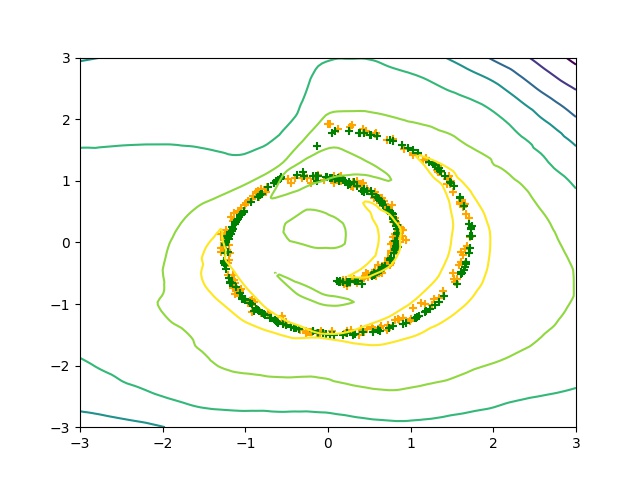

Some sample results. See the results/toy/ folder for details.

- 8 Gaussians, 154500 iterations


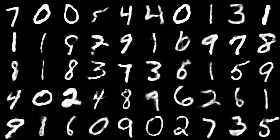

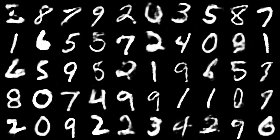

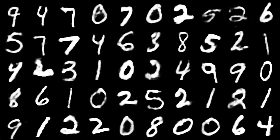

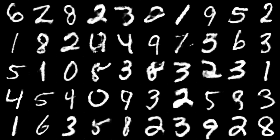

Some sample results. See the results/mnist/ folder for details.


Billion Word Language Generation (Using CNN, character-level)

Some sample results after 8699 epochs (see sample for details):

I haven't run enough epochs yet because training is very time-consuming.

He moved the mat all out clame t

A fosts of shores forreuid he pe

It whith Crouchy digcloued defor

Pamreutol the rered in Car inson

Nor op to the lecs ficomens o fe

In is a " nored by of the ot can

The onteon I dees this pirder ,

It is Brobes aoracy of " medurn

Rame he reaariod to thim atreast

The stinl who herth of the not t

The witl is f ont UAy Y nalence

It a over , tose sho Leloch Cumm
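Each sample line above is exactly 32 characters long. A common way to feed such text to a convolutional, character-level GAN is to encode each line as a sequence of one-hot vectors laid out channels-first as (batch, vocabulary size, sequence length), the (N, C, L) input shape nn.Conv1d expects, and to turn the generator's per-position scores back into text with an argmax over the vocabulary. The dependency-free sketch below shows that round trip under those assumptions; `build_charmap`, `one_hot_batch` and `decode_batch` are hypothetical names, not functions from gan_language.py.

```python
from typing import Dict, List, Sequence

Tensor3D = List[List[List[float]]]  # nested lists shaped (batch, vocab, seq_len)


def build_charmap(lines: Sequence[str]) -> Dict[str, int]:
    """Map every distinct character to a channel index (sorted for determinism)."""
    return {ch: i for i, ch in enumerate(sorted(set("".join(lines))))}


def one_hot_batch(lines: Sequence[str], charmap: Dict[str, int]) -> Tensor3D:
    """Encode equal-length strings as one-hot tensors in (N, C, L) layout."""
    seq_len = len(lines[0])
    batch = []
    for line in lines:
        if len(line) != seq_len:
            raise ValueError("all lines must have the same length")
        channels = [[0.0] * seq_len for _ in charmap]
        for pos, ch in enumerate(line):
            channels[charmap[ch]][pos] = 1.0  # one active channel per position
        batch.append(channels)
    return batch


def decode_batch(batch: Tensor3D, charmap: Dict[str, int]) -> List[str]:
    """Turn (N, C, L) scores back into strings via argmax over the C axis."""
    inv = {i: ch for ch, i in charmap.items()}
    out = []
    for channels in batch:
        chars = []
        for pos in range(len(channels[0])):
            best = max(range(len(channels)), key=lambda c: channels[c][pos])
            chars.append(inv[best])
        out.append("".join(chars))
    return out
```

With real tensors, the same layout is often obtained by one-hot encoding to (N, L, C) and then calling `.transpose(1, 2)`; `decode_batch` works equally well on softmax outputs, since the argmax ignores the exact probabilities.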


Some sample results. See the results/cifar10/ folder for details.

Based on the implementations igul222/improved_wgan_training and martinarjovsky/WassersteinGAN.