[Arxiv Paper]

Authors: Pierre Gleize, Weiyao Wang and Matt Feiszli

Conference: ICCV 2023

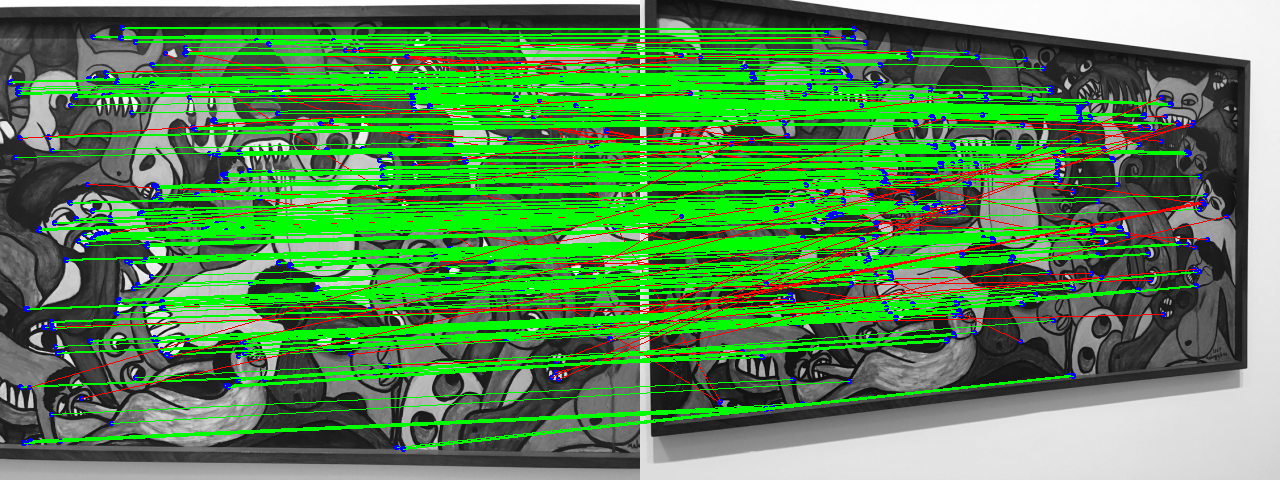

SiLK is a self-supervised framework for learning keypoints. It focuses on simplicity and flexibility while providing state-of-the-art and competitive results on existing benchmarks.

Pre-trained models are also provided.

The released code has been tested on Linux with two Tesla V100-SXM2 GPUs; on that setup, training takes about 5 hours.

- conda must be installed to set up the SiLK environment.

- Two GPUs are required to train SiLK.

- How to set up the Python environment?

- How to set up datasets?

- How to train SiLK?

- How to add a backbone?

- How to run the evaluation pipeline?

- How to run inference?

- How to convert SiLK to TorchScript?

- How to import SiLK features into COLMAP?

- FAQ
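The two requirements listed above (conda, and two GPUs for training) can be checked before starting a multi-hour training run. The script that follows is a minimal sketch using only the Python standard library; it is not part of the SiLK repository, and its helper names (`count_gpus`, `detect_gpus`, `check_requirements`) are hypothetical. It assumes NVIDIA GPUs with `nvidia-smi` on `PATH` and counts devices from the output of `nvidia-smi -L`, which prints one `GPU <index>: ...` line per device.

```python
"""Hypothetical pre-flight check for the SiLK requirements (not part of the SiLK repo)."""
import shutil
import subprocess

# The README states that two GPUs are required to train SiLK.
REQUIRED_GPUS = 2


def count_gpus(nvidia_smi_output: str) -> int:
    """Count devices in `nvidia-smi -L` output (one 'GPU <i>: ...' line per GPU)."""
    return sum(1 for line in nvidia_smi_output.splitlines() if line.startswith("GPU "))


def detect_gpus() -> int:
    """Return the number of GPUs reported by nvidia-smi, or 0 if it cannot be queried."""
    if shutil.which("nvidia-smi") is None:
        return 0
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=True, timeout=30
        )
    except (subprocess.SubprocessError, OSError):
        # Covers a non-zero exit status, a timeout, or a failure to launch the binary.
        return 0
    return count_gpus(result.stdout)


def check_requirements() -> list[str]:
    """Return human-readable problems; an empty list means both checks passed."""
    problems = []
    if shutil.which("conda") is None:
        problems.append("conda was not found on PATH")
    gpus = detect_gpus()
    if gpus < REQUIRED_GPUS:
        problems.append(f"found {gpus} GPU(s), but {REQUIRED_GPUS} are required for training")
    return problems


if __name__ == "__main__":
    issues = check_requirements()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("conda and GPU requirements look satisfied")
```

Run it as a plain script before training. Note that `nvidia-smi` lists every GPU on the machine and ignores `CUDA_VISIBLE_DEVICES`, so the reported count can be higher than the number of GPUs a training job will actually see.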

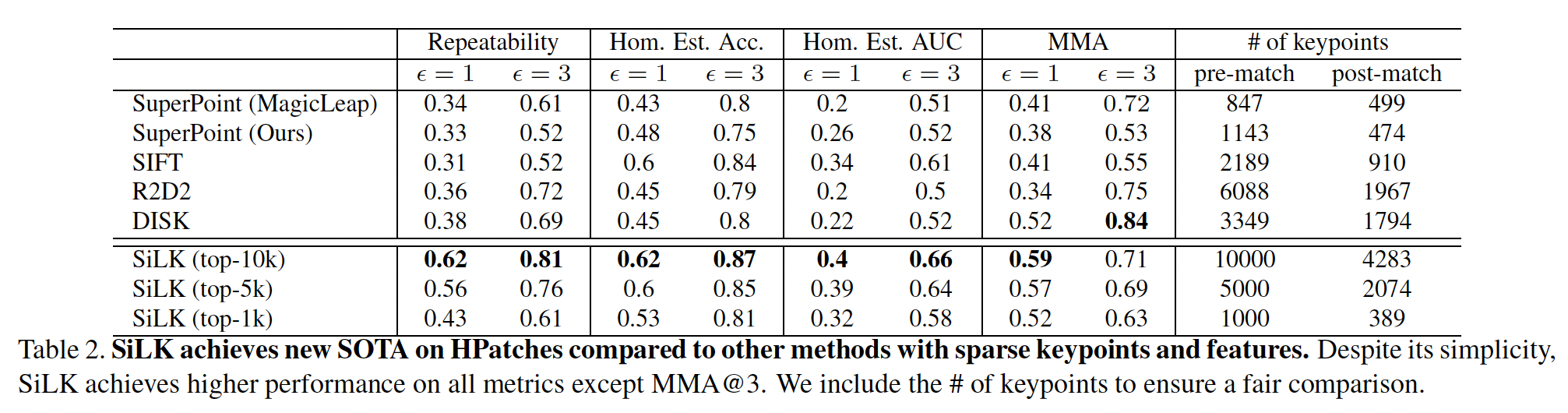

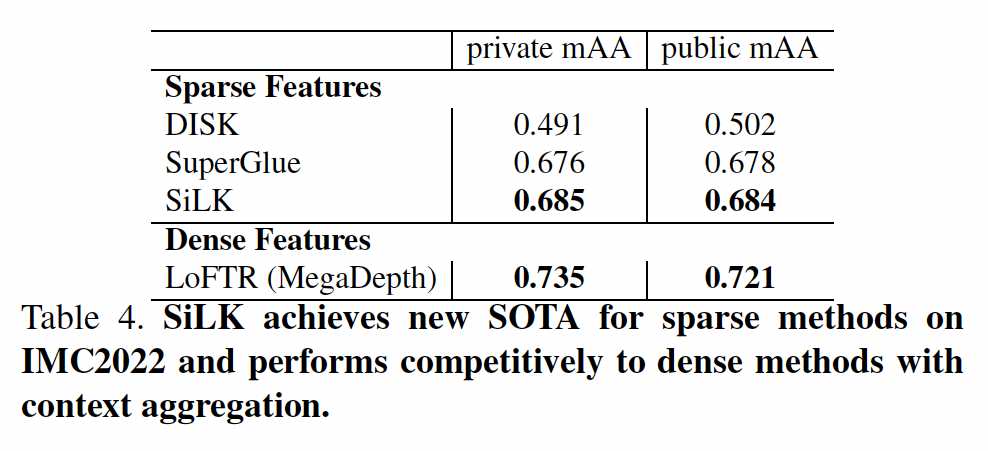

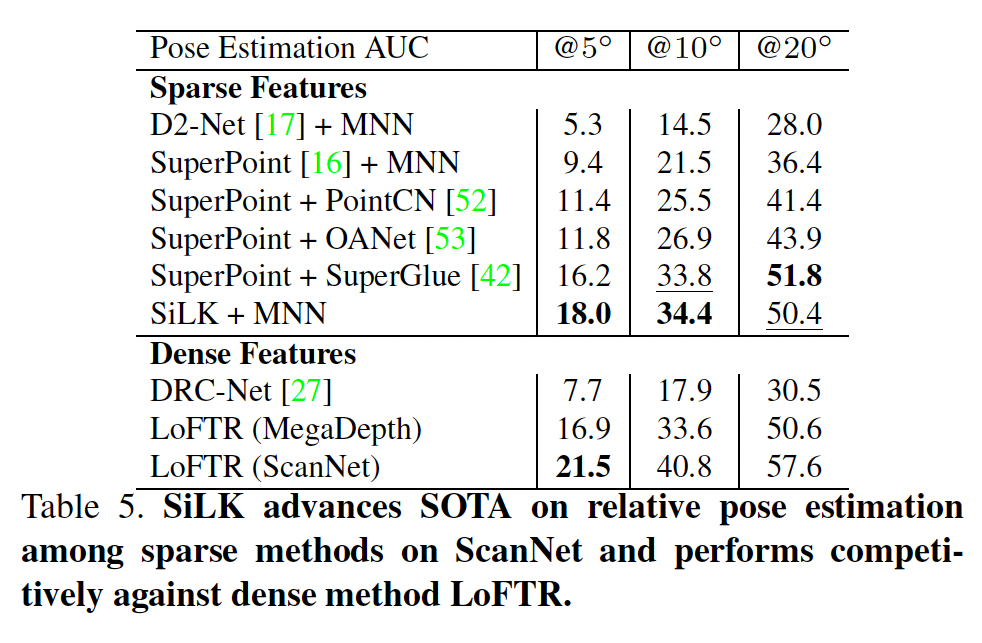

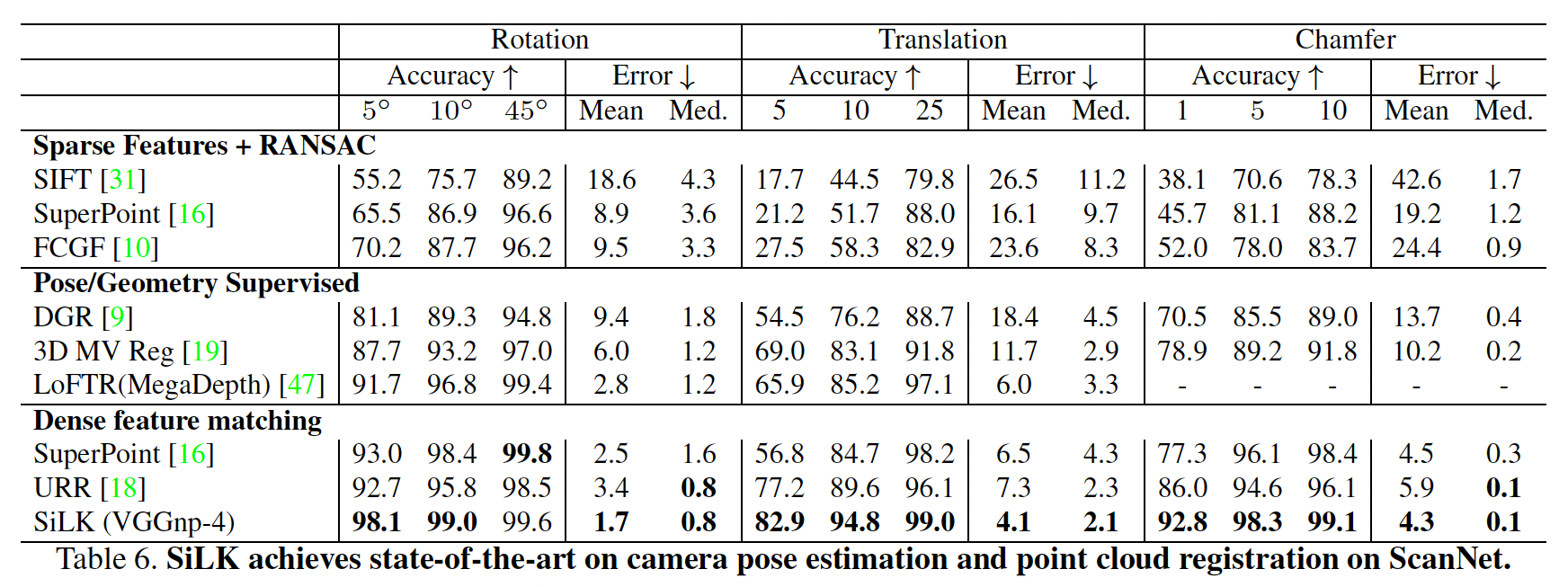

The results reported here were computed with our VGG-4 backbone (checkpoint `pvgg-4.ckpt` for Tables 2, 3 and 6, and `coco-rgb-aug` for Tables 4 and 5). To reproduce the IMC2022 results, we also provide a Kaggle notebook.

We provide documentation, but it is not exhaustive. Please open a new issue if any part of the code needs clarification; we will extend the documentation as the community requests it.

Our documentation can be found here.

See the CONTRIBUTING file for how to help out.

SiLK is licensed under the GNU General Public License (Version 3), as specified in the LICENSE file.