A Multi-Fidelity Bayesian Method for Complex Model Optimization: An Industrial Case Study

MFBO is an efficient method for reducing uncertainty that combines multi-fidelity modelling with Bayesian optimization, and it includes a general overview of the available acquisition functions. It was developed as a graduate project at Politecnico di Milano in collaboration with Università della Svizzera italiana.

This tutorial requires Anaconda or mamba to be installed. After cloning the repository, set up the Python environment with the command:

conda env create -f advpy.yml

Alternatively, fork the repository to have your own copy. The main modules depend on BoTorch and PyTorch.
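Once the environment is activated, a quick check that the two dependencies named above are importable can save time. The snippet below is a minimal sketch using only the standard library; the helper name `check_dependencies` is hypothetical and is not part of the repository.

```python
import importlib.util


def check_dependencies(names=("torch", "botorch")):
    """Return a dict mapping each top-level module name to True if it is importable.

    Hypothetical helper for illustration; it only looks the modules up,
    it does not import them.
    """
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_dependencies().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

If either module is reported as missing, the environment was probably not activated or not created from the environment file.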

The complete paper can be found in the report file.

Computational models are often evaluated via stochastic simulation or numerical approximation. Fitting these models implies a difficult optimization problem over complex, possibly noisy parameter landscapes. The need for multiple realizations, as in uncertainty quantification or optimization, makes surrogate models an attractive option. For expensive high-fidelity models, however, even the number of simulations needed to fit a surrogate may be too costly. Inexpensive but less accurate low-fidelity data or models are often also available. Multi-fidelity methods combine high-fidelity and low-fidelity information in order to achieve accuracy at a reasonable cost. Here we consider a hybrid method that uses multi-fidelity modelling to implement a Bayesian optimization algorithm. At the heart of this algorithm is the maximization of an information criterion called the acquisition function, and a list of the available choices is presented. Multi-fidelity Bayesian optimization achieves competitive performance with a computational overhead that is affordable relative to the running time of non-optimized models. We compare the results produced by six acquisition functions, with Expected Improvement (EI) performing best.
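To make the idea of combining fidelities concrete, the sketch below fits a scaling-and-offset correction that maps cheap low-fidelity outputs onto a handful of expensive high-fidelity outputs. This is a deliberately simplified illustration and an assumption on our part: it is not the surrogate used in the report, and the function names and toy numbers are hypothetical.

```python
def fit_linear_correction(y_low, y_high):
    """Least-squares fit of y_high ~ rho * y_low + offset on paired samples."""
    n = len(y_low)
    if n != len(y_high) or n < 2:
        raise ValueError("need at least two paired low/high-fidelity samples")
    mean_low = sum(y_low) / n
    mean_high = sum(y_high) / n
    var_low = sum((a - mean_low) ** 2 for a in y_low)
    if var_low == 0.0:
        raise ValueError("low-fidelity outputs must not all be equal")
    cov = sum((a - mean_low) * (b - mean_high) for a, b in zip(y_low, y_high))
    rho = cov / var_low
    offset = mean_high - rho * mean_low
    return rho, offset


def predict_high(y_low_new, rho, offset):
    """Correct new low-fidelity outputs towards the high-fidelity scale."""
    return [rho * v + offset for v in y_low_new]


if __name__ == "__main__":
    # Toy paired data (hypothetical): a few points evaluated at both fidelities.
    low = [0.0, 1.0, 2.0, 3.0]
    high = [1.0, 3.0, 5.0, 7.0]
    rho, offset = fit_linear_correction(low, high)
    # Many more cheap evaluations can now be corrected without new expensive runs.
    print(predict_high([0.5, 1.5, 2.5], rho, offset))
```

In practice the discrepancy between fidelities is rarely this simple, which is why richer surrogates are typically used; the sketch only shows how few high-fidelity points are needed once the low-fidelity model carries most of the structure.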

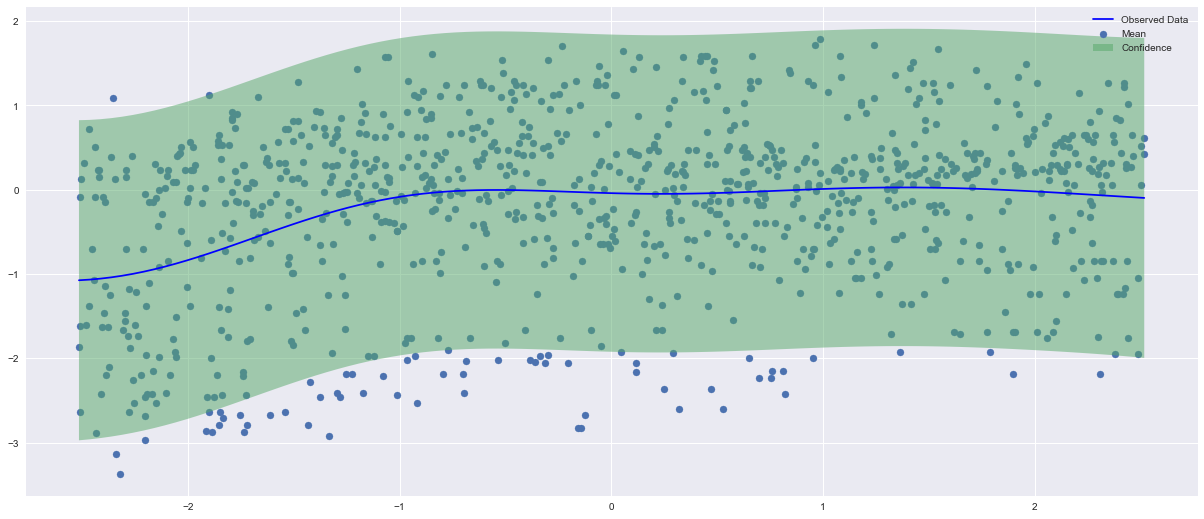

The figure below shows the initial uncertainty level (in green), described by the standard deviation, before the MFBO method is applied:

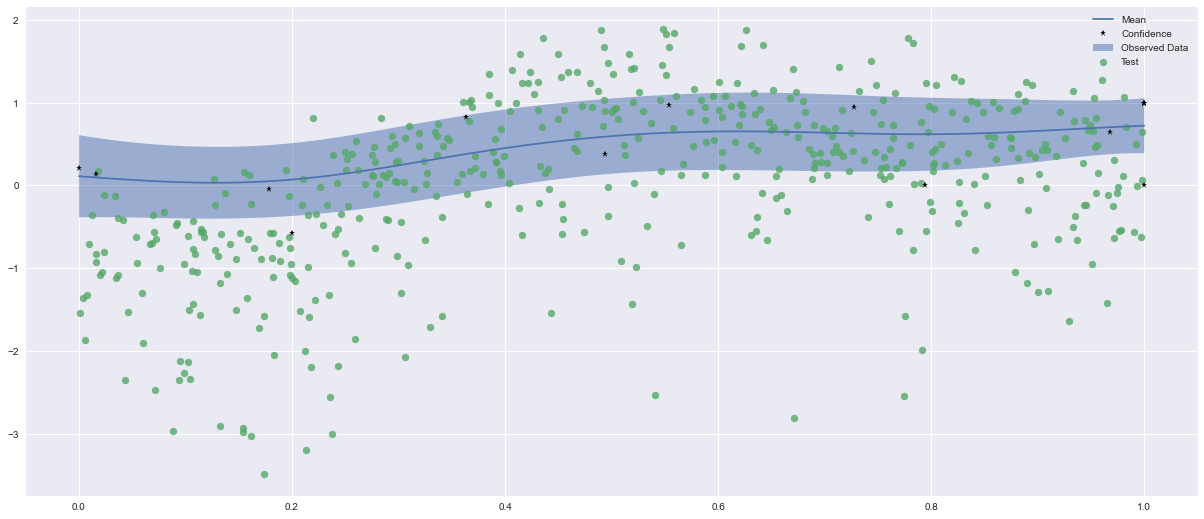

The next figure shows the outcome of the EI acquisition function applied to a single input (the angle in the cargo-wing industrial test case):

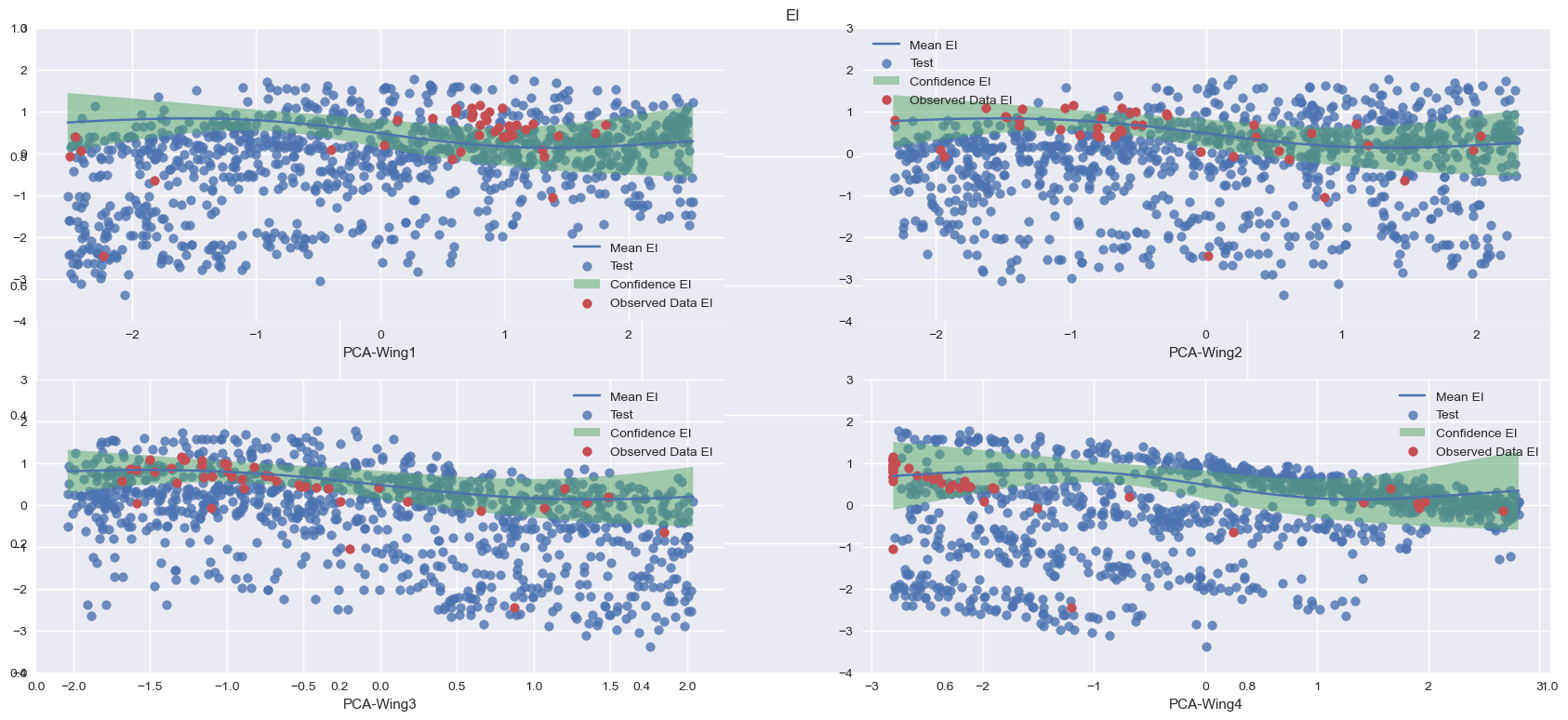
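For reference, EI has a closed form when the surrogate's prediction at a point is Gaussian. The sketch below evaluates it for a single candidate, assuming a maximization problem; the function name and arguments are hypothetical and do not reflect how the repository calls its acquisition functions.

```python
import math


def expected_improvement(mean, std, best_observed):
    """Closed-form Expected Improvement for maximization.

    mean, std: posterior mean and standard deviation at the candidate point.
    best_observed: best objective value seen so far.
    """
    if std <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(mean - best_observed, 0.0)
    z = (mean - best_observed) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))         # standard normal CDF
    return (mean - best_observed) * cdf + std * pdf


if __name__ == "__main__":
    # Same predicted mean, different uncertainty: the more uncertain point scores higher.
    print(expected_improvement(mean=0.0, std=1.0, best_observed=0.0))
    print(expected_improvement(mean=0.0, std=2.0, best_observed=0.0))
```

The two terms show the trade-off that EI encodes: the first rewards a high predicted mean, the second rewards high predictive uncertainty.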

Finally, the result of the EI acquisition function is shown again, this time after performing PCA and applied to all the inputs:

[3] P. I. Frazier. A tutorial on Bayesian optimization, 2018.

[6] P. Hennig, M. A. Osborne, and H. P. Kersting. Probabilistic Numerics. Cambridge University Press, 2022.

[7] A. Zhang, Z. C. Lipton, M. Li, and A. J. Smola. Dive into Deep Learning.