This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

| Name | Udacity account email |
|---|---|
| Tianqi Ye | ye.tianqi1900@gmail.com |
| FC Su | dragon7.fc@gmail.com |
| Zhong | 546764887@qq.com |
| Fujing Xie | emiliexiaoxiexie@hotmail.com |
| Chengnan Hu | twinsnan@foxmail.com |


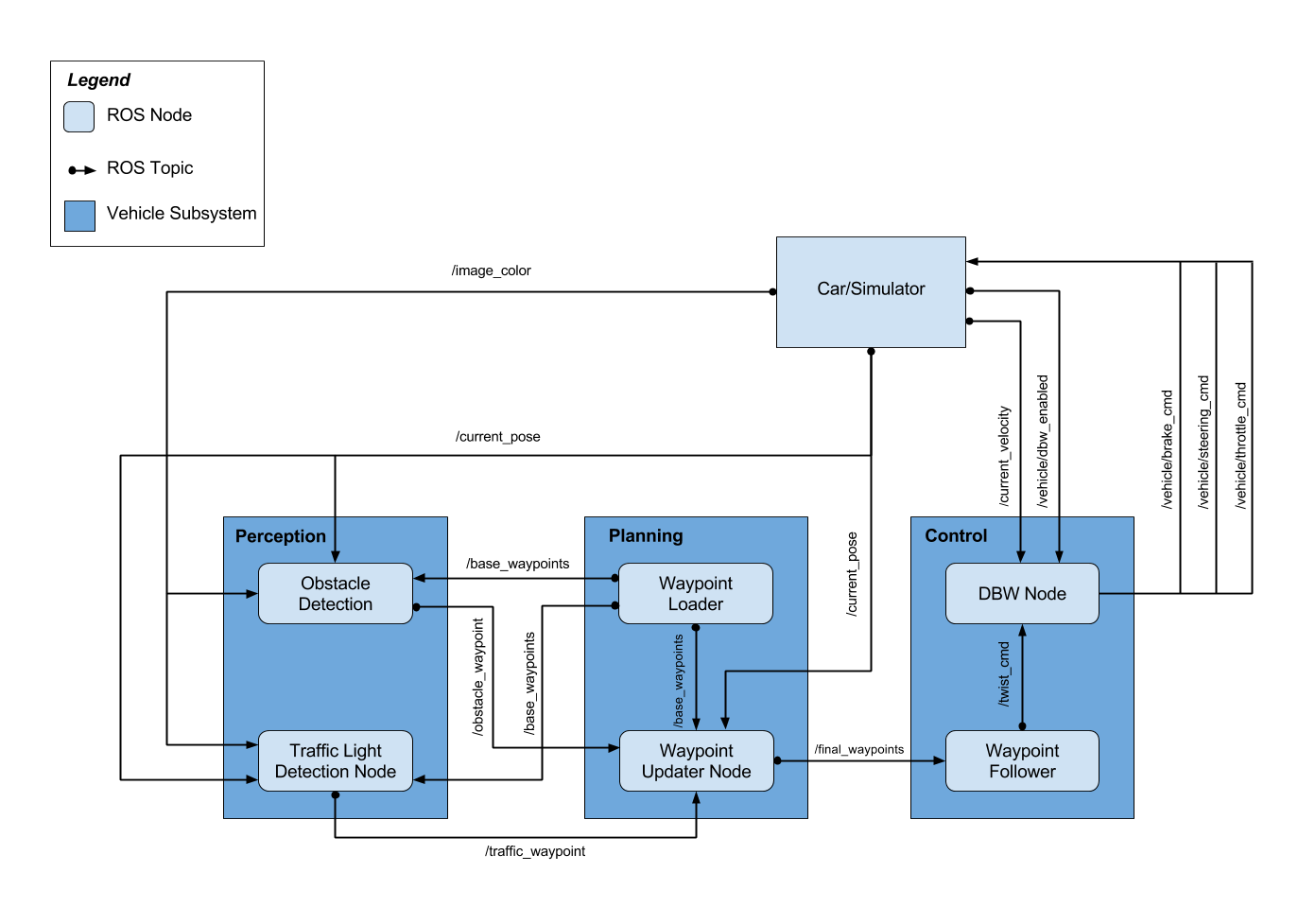

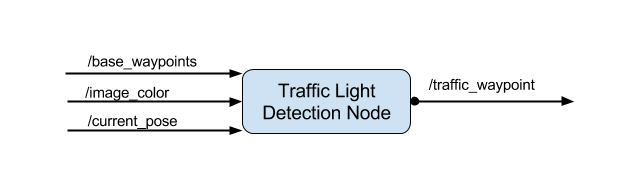

Traffic Light Detection Node

-

(path_to_project_repo)/ros/src/tl_detector/

This package contains the traffic light detection node:

tl_detector.py. This node takes in data from the/image_color,/current_pose, and/base_waypointstopics and publishes the locations to stop for red traffic lights to the/traffic_waypointtopic.The

/current_posetopic provides the vehicle's current position, and/base_waypointsprovides a complete list of waypoints the car will be following.Traffic light detection should take place within

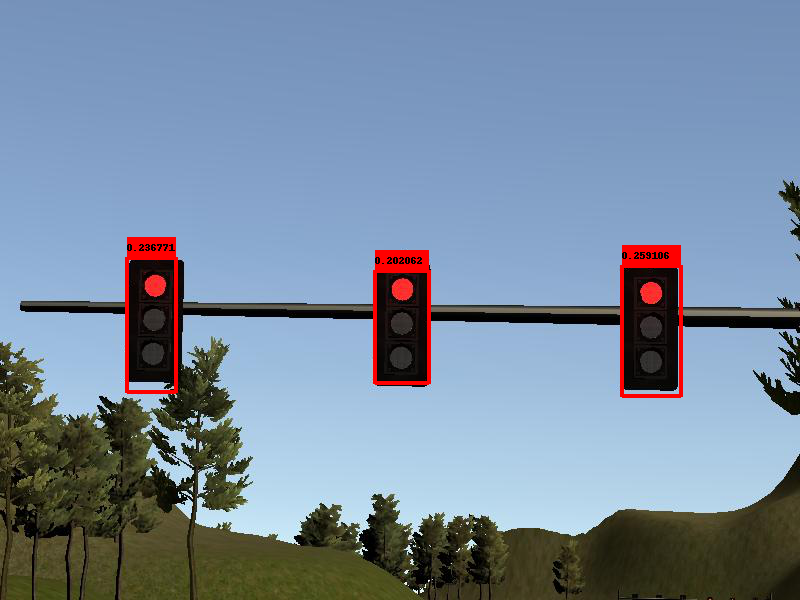

tl_detector.py, whereas traffic light classification should take place within../tl_detector/light_classification_model/tl_classfier.py.We applied Tensorflow to detect traffic light. The real-time object detection model we used is SSD_Mobilenet 11.6.17 version.

After successfully detected traffic light, we converted RGB to HSV color space to identify red/green/yellow light.
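The HSV step can be illustrated with a small sketch. This is not the team's classifier: the function name, the hue ranges, and the saturation/brightness thresholds are assumptions chosen for illustration, and the standard-library `colorsys` module stands in for whatever image library the node actually uses.

```python
import colorsys

def classify_light(pixels, min_saturation=0.5, min_value=0.5):
    """Vote on the light color from a list of (r, g, b) pixels in 0-255.

    Hypothetical helper: hue ranges and thresholds are illustrative guesses.
    """
    votes = {"red": 0, "yellow": 0, "green": 0}
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        if s < min_saturation or v < min_value:
            continue  # skip dark or washed-out pixels (housing, sky)
        hue_deg = h * 360.0
        if hue_deg < 20.0 or hue_deg >= 340.0:
            votes["red"] += 1
        elif 40.0 <= hue_deg < 70.0:
            votes["yellow"] += 1
        elif 90.0 <= hue_deg < 150.0:
            votes["green"] += 1
    best = max(votes, key=votes.get)
    return best if votes[best] > 0 else "unknown"
```

Working in HSV keeps the color decision on the hue channel, so the same thresholds tolerate changes in brightness better than raw RGB comparisons would.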


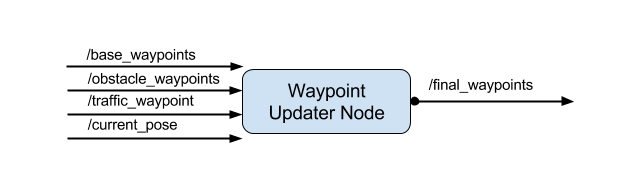

Waypoint Updater Node

`(path_to_project_repo)/ros/src/waypoint_updater/`

This package contains the waypoint updater node, `waypoint_updater.py`. The purpose of this node is to update the target velocity property of each waypoint based on traffic light and obstacle detection data. This node subscribes to the `/base_waypoints`, `/current_pose`, `/obstacle_waypoint`, and `/traffic_waypoint` topics, and publishes a list of waypoints ahead of the car with target velocities to the `/final_waypoints` topic. The waypoint updater node serves the following functions:

- A KD-tree is used to search for the nearest waypoint, which brings the search time down to O(log n).
- A vector product function is used to detect whether the nearest waypoint is behind the vehicle.
- Decelerating waypoints are generated if a red traffic light is detected.
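The functions listed above can be sketched as follows. This is a simplified illustration, not the node's code: a linear scan stands in for the KD-tree query to keep the example dependency-free, the vector product is assumed to be a dot product, the track is assumed to be a closed loop, and the deceleration profile assumes evenly spaced waypoints and a constant hypothetical `max_decel`.

```python
import math

def closest_waypoint_ahead(waypoints, car_xy):
    """Index of the nearest waypoint that is not behind the car.

    `waypoints` is an ordered list of (x, y) tuples on a closed loop.
    A linear scan replaces the KD-tree lookup in this sketch.
    """
    cx, cy = car_xy
    idx = min(range(len(waypoints)),
              key=lambda i: (waypoints[i][0] - cx) ** 2 + (waypoints[i][1] - cy) ** 2)
    closest = waypoints[idx]
    prev = waypoints[idx - 1]  # index -1 wraps to the last waypoint of the loop
    # Dot product of the track direction (prev -> closest)
    # with the offset from the closest waypoint to the car.
    dot = ((closest[0] - prev[0]) * (cx - closest[0]) +
           (closest[1] - prev[1]) * (cy - closest[1]))
    if dot > 0:  # the car has already passed the closest waypoint
        idx = (idx + 1) % len(waypoints)
    return idx

def decelerate(speeds, spacing, stop_idx, max_decel=1.0):
    """Cap target speeds so the car can stop at `stop_idx`.

    Uses v = sqrt(2 * a * d) with d the remaining distance to the stop point.
    """
    capped = []
    for i, v in enumerate(speeds):
        dist = max(stop_idx - i, 0) * spacing
        capped.append(min(v, math.sqrt(2.0 * max_decel * dist)))
    return capped
```

For example, `decelerate([10.0] * 5, spacing=2.0, stop_idx=3)` keeps the speed limit far from the stop line and tapers the target speed to zero at and beyond index 3.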

The pure pursuit line-following strategy is used after waypoint generation. A DBW control node (details in the next section) helps the vehicle keep tracking the target waypoint. On each loop, the steering angle is generated from the position of the next target waypoint, using the vehicle kinematics and the turn curvature calculation.
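As a rough sketch of the curvature calculation, the textbook pure pursuit formula for a bicycle model is shown below. The function name, the pose layout `(x, y, yaw)`, and the `wheel_base` parameter are assumptions for illustration; the project's own follower may differ in details such as lookahead selection and the steering ratio applied afterwards.

```python
import math

def pure_pursuit_steering(pose, target, wheel_base):
    """Front-wheel steering angle (rad) that arcs the car toward `target`.

    pose: (x, y, yaw) of the vehicle in the world frame, yaw in radians.
    target: (x, y) of the next target waypoint in the world frame.
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    # Rotate the offset into the vehicle frame (x forward, y to the left).
    local_x = math.cos(yaw) * dx + math.sin(yaw) * dy
    local_y = -math.sin(yaw) * dx + math.cos(yaw) * dy
    lookahead_sq = local_x ** 2 + local_y ** 2
    if lookahead_sq == 0.0:
        return 0.0  # already on the target, no steering needed
    # Curvature of the circle through the car and the target,
    # tangent to the car's heading: kappa = 2 * y / L^2.
    curvature = 2.0 * local_y / lookahead_sq
    return math.atan(wheel_base * curvature)
```

A target straight ahead gives zero steering, and targets to the left or right give angles of equal magnitude and opposite sign.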


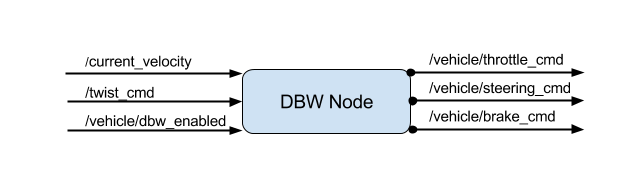

DBW Node

`(path_to_project_repo)/ros/src/twist_controller/`

Carla is equipped with a drive-by-wire (dbw) system, meaning the throttle, brake, and steering have electronic control. This package contains the files that are responsible for control of the vehicle: the node `dbw_node.py` and the file `twist_controller.py`, along with a PID and lowpass filter that you can use in your implementation. The `dbw_node` subscribes to the `/current_velocity` topic along with the `/twist_cmd` topic to receive target linear and angular velocities. Additionally, this node subscribes to `/vehicle/dbw_enabled`, which indicates if the car is under dbw or driver control. This node publishes throttle, brake, and steering commands to the `/vehicle/throttle_cmd`, `/vehicle/brake_cmd`, and `/vehicle/steering_cmd` topics.

A brake torque of 400 N*m is applied to hold the vehicle stationary. During deceleration, the brake torque changes dynamically with the vehicle's current speed and mass: the required deceleration (m/s^2) times the vehicle mass (kg) gives a braking force in N, and multiplying that force by the wheel radius (m) gives a torque in N*m.
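The brake logic described above might look like the following minimal sketch. The function name, its parameters, and the 0.1 m/s standstill threshold are hypothetical; only the 400 N*m holding torque comes from the text.

```python
def brake_torque(target_speed, current_speed, decel, vehicle_mass, wheel_radius,
                 hold_torque=400.0):
    """Brake torque in N*m.

    decel is the required deceleration magnitude in m/s^2 (positive when slowing).
    The standstill threshold of 0.1 m/s is an assumption for this sketch.
    """
    if target_speed == 0.0 and current_speed < 0.1:
        return hold_torque  # keep the car stationary, e.g. at a red light
    if decel <= 0.0:
        return 0.0  # not slowing down, so no brake is requested
    # force (N) = mass (kg) * deceleration (m/s^2); torque = force * wheel radius (m)
    return decel * vehicle_mass * wheel_radius
```

For instance, a 1000 kg vehicle with 0.25 m wheels that must slow at 2 m/s^2 would request 500 N*m under this model.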


Please use one of the two installation options, either native or docker installation.

- Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.
- If using a Virtual Machine to install Ubuntu, use the following configuration as minimum:
  - 2 CPU
  - 2 GB system memory
  - 25 GB of free hard drive space

  The Udacity provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using this.
- Follow these instructions to install ROS
  - ROS Kinetic if you have Ubuntu 16.04.
  - ROS Indigo if you have Ubuntu 14.04.
- Dataspeed DBW
  - Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)
- Download the Udacity Simulator.

Build the docker container

    docker build . -t capstone

Run the docker file

    docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstone

To set up port forwarding, please refer to the instructions

- Clone the project repository

      git clone https://github.com/udacity/CarND-Capstone.git

- Install python dependencies

      cd CarND-Capstone
      pip install -r requirements.txt

- Make and run styx

      cd ros
      catkin_make
      source devel/setup.sh
      roslaunch launch/styx.launch

- Run the simulator

NOTE: Below are some requirements to run catkin_make successfully in Project Workspace.

- sudo apt-get install -y ros-kinetic-dbw-mkz-msgs

- pip install --upgrade catkin_pkg_modules

- Download the training bag that was recorded on the Udacity self-driving car.
- Unzip the file

      unzip traffic_light_bag_file.zip

- Play the bag file

      rosbag play -l traffic_light_bag_file/traffic_light_training.bag

- Launch your project in site mode

      cd CarND-Capstone/ros
      source devel/setup.sh
      roslaunch launch/site.launch

- Confirm that traffic light detection works on real life images

NOTE: Docker Instruction

rosbag

LINUX:

    docker run --rm --name capstone \
      --net=host -e DISPLAY=$DISPLAY \
      -v $HOME/.Xauthority:/root/.Xauthority \
      -v $PWD:/capstone -v /tmp/log:/root/.ros/ \
      -v traffic_light_bag_file:/bag \
      -p 4567:4567 \
      -it capstone

MAC:

    socat TCP-LISTEN:6000,reuseaddr,fork UNIX-CLIENT:\"$DISPLAY\"
    docker run --rm --name capstone \
      -e DISPLAY=[IP_ADDRESS]:0 \
      -v $PWD:/capstone -v /tmp/log:/root/.ros/ \
      -v traffic_light_bag_file:/bag \
      -p 4567:4567 \
      -it capstone

Inside the container:

    rosbag play -l /bag/traffic_light_training.bag

roslaunch

    docker exec -it capstone bash
    source devel/setup.sh
    roslaunch launch/site.launch