Demo for the paper

Visual Dialog

Abhishek Das, Satwik Kottur, Khushi Gupta, Avi Singh, Deshraj Yadav, José M. F. Moura, Devi Parikh, Dhruv Batra

arxiv.org/abs/1611.08669

CVPR 2017 (Spotlight)

Live demo: http://visualchatbot.cloudcv.org

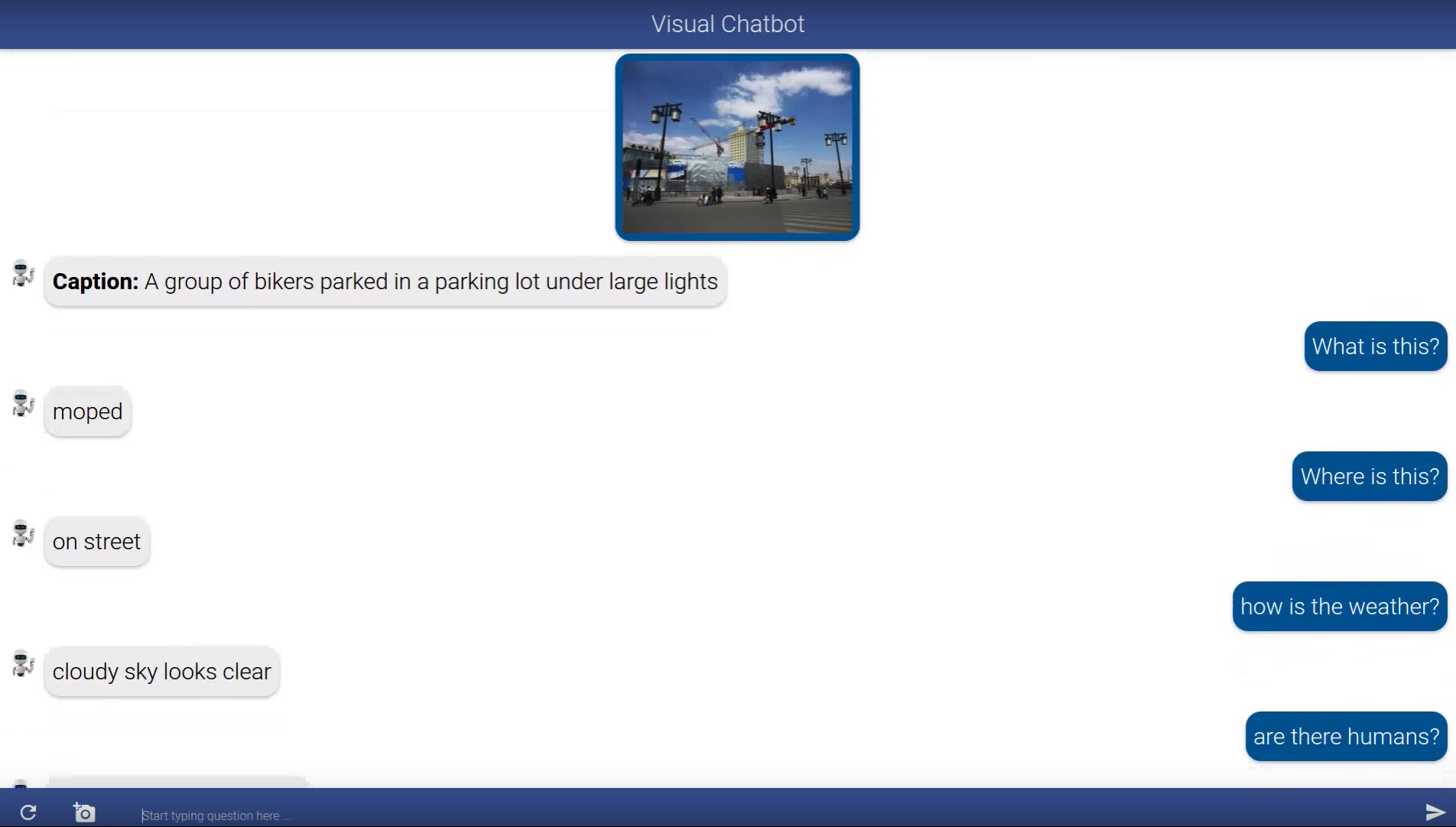

Visual Dialog requires an AI agent to hold a meaningful dialog with humans in natural, conversational language about visual content. Given an image, dialog history, and a follow-up question about the image, the AI agent has to answer the question. Putting it all together, we demonstrate the first ‘visual chatbot’!
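To make the task definition concrete, here is a minimal Python sketch of the dialog state such an agent consumes. Everything in it (DialogState, ask, the answer_fn signature) is hypothetical and is not the demo's code. It only mirrors the paper's formulation, where the history starts with the image caption and grows by one question-answer round per turn.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical answerer signature: (image, history, question) -> answer.
AnswerFn = Callable[[object, List[str], str], str]


@dataclass
class DialogState:
    """Hypothetical container for one visual dialog (not the demo's actual code)."""
    image: object                      # e.g. an image path or a decoded array
    caption: str                       # the caption seeds the history, as in the paper
    rounds: List[Tuple[str, str]] = field(default_factory=list)

    def history(self) -> List[str]:
        # H_t = (C, (Q_1, A_1), ..., (Q_{t-1}, A_{t-1})), flattened to strings
        return [self.caption] + [f"{q} {a}" for q, a in self.rounds]

    def ask(self, question: str, answer_fn: AnswerFn) -> str:
        # The agent sees the image, the full history so far, and the new question...
        answer = answer_fn(self.image, self.history(), question)
        # ...and the answered round becomes history for the next turn.
        self.rounds.append((question, answer))
        return answer


if __name__ == "__main__":
    # Stand-in answerer that only reports how many history entries it received.
    def dummy(image, history, question):
        return f"seen {len(history)} entries"

    d = DialogState(image="cat.jpg", caption="a cat sitting on a sofa")
    print(d.ask("what color is the cat?", dummy))  # seen 1 entries
    print(d.ask("is it sleeping?", dummy))         # seen 2 entries
```

A real model would replace the dummy answerer; the point of the sketch is that every answer is conditioned on everything said so far, not only on the current question.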

Install the system dependencies:

sudo apt-get install -y git python-pip python-dev

sudo apt-get install -y python-dev

sudo apt-get install -y autoconf automake libtool curl make g++ unzip

sudo apt-get install -y libgflags-dev libgoogle-glog-dev liblmdb-dev

sudo apt-get install -y libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler

Install Torch:

git clone https://github.com/torch/distro.git ~/torch --recursive

cd ~/torch; bash install-deps;

./install.sh

source ~/.bashrc

Install PyTorch (hughperkins' Python wrapper for Lua Torch):

git clone https://github.com/hughperkins/pytorch.git

cd pytorch

source ~/torch/install/bin/torch-activate

./build.sh

Install RabbitMQ and Redis, then restart both services:

sudo apt-get install -y redis-server rabbitmq-server

sudo rabbitmq-plugins enable rabbitmq_management

sudo service rabbitmq-server restart

sudo service redis-server restart

Install the Lua dependencies:

luarocks install loadcaffe

The following two dependencies are required only if you are going to use a GPU:

luarocks install cudnn

luarocks install cunn

Note: CUDA and cuDNN are required only if you are going to use a GPU.

Download and install CUDA and cuDNN from the NVIDIA website.
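The Lua dependencies above can also be installed by a small Python script that applies the "GPU only" rule automatically. This is a sketch under assumptions, not part of the repository: it treats the presence of nvidia-smi on PATH as a sign that a GPU is usable (a heuristic that does not check CUDA or cuDNN), the --cpu switch is a made-up option of this script, and the luarocks it calls must be the one from your Torch install (that is, run it after sourcing ~/.bashrc).

```python
import shutil
import subprocess
import sys
from typing import List

# Rocks listed in this README; cudnn and cunn are needed only for GPU use.
CPU_ROCKS = ["loadcaffe"]
GPU_ROCKS = ["cudnn", "cunn"]


def gpu_probably_available() -> bool:
    # Heuristic assumption: the NVIDIA driver ships nvidia-smi, so finding it
    # on PATH suggests a GPU. It does not verify CUDA or cuDNN versions.
    return shutil.which("nvidia-smi") is not None


def luarocks_commands(use_gpu: bool) -> List[List[str]]:
    # Build one "luarocks install <rock>" argv per required rock.
    rocks = CPU_ROCKS + (GPU_ROCKS if use_gpu else [])
    return [["luarocks", "install", rock] for rock in rocks]


if __name__ == "__main__":
    # Hypothetical --cpu switch forces a CPU-only install.
    use_gpu = "--cpu" not in sys.argv and gpu_probably_available()
    for cmd in luarocks_commands(use_gpu):
        print("+", " ".join(cmd))
        subprocess.run(cmd, check=True)  # stop at the first failing install
```

Keeping the rock lists as data makes the CPU/GPU split explicit; on a machine where the heuristic guesses wrong, pass --cpu or run the luarocks commands by hand.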

Set up the repository:

git clone https://github.com/Cloud-CV/visual-chatbot.git

cd visual-chatbot

git submodule init && git submodule update

sh models/download_models.sh

pip install -r requirements.txt

Change lines 2-4 of neuraltalk2/misc/LanguageModel.lua to the following:

local utils = require 'neuraltalk2.misc.utils'

local net_utils = require 'neuraltalk2.misc.net_utils'

local LSTM = require 'neuraltalk2.misc.LSTM'

Open three terminal sessions and run one of the following commands in each:

python worker.py

python worker_captioning.py

python manage.py runserver

You are all set. Visit http://127.0.0.1:8000 to use the demo.
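If you prefer a single terminal, the following sketch (a hypothetical helper, not part of the repository) first checks that Redis and RabbitMQ accept connections on their default ports (6379 and 5672; an assumption about your configuration), then starts the three commands above as child processes and polls http://127.0.0.1:8000 until the development server answers. The helper itself needs Python 3 and should be run from the visual-chatbot directory; "python" in COMMANDS is assumed to be the interpreter the requirements were installed into.

```python
import http.client
import socket
import subprocess
import sys
import time
import urllib.error
import urllib.request
from typing import List, Sequence

# Default ports, assuming stock Redis and RabbitMQ (AMQP) configurations.
SERVICES = {"redis": ("127.0.0.1", 6379), "rabbitmq": ("127.0.0.1", 5672)}

# The three commands from this README.
COMMANDS = [
    ["python", "worker.py"],
    ["python", "worker_captioning.py"],
    ["python", "manage.py", "runserver"],
]


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    # True if something accepts a TCP connection on host:port.
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def start_all(commands: Sequence[Sequence[str]]) -> List[subprocess.Popen]:
    # One child process per command, instead of one terminal per command.
    return [subprocess.Popen(list(cmd)) for cmd in commands]


def wait_for_http(url: str, timeout: float = 60.0) -> bool:
    # Poll until the server sends any HTTP response, or give up after timeout.
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=2):
                return True
        except urllib.error.HTTPError:
            return True  # an error status still means the server is up
        except (OSError, http.client.HTTPException):
            time.sleep(0.5)
    return False


if __name__ == "__main__":
    down = [name for name, (h, p) in SERVICES.items() if not port_open(h, p)]
    if down:
        sys.exit("not reachable: " + ", ".join(down))
    procs = start_all(COMMANDS)
    try:
        if wait_for_http("http://127.0.0.1:8000"):
            print("demo is up at http://127.0.0.1:8000")
        for p in procs:
            p.wait()
    finally:
        for p in procs:
            p.terminate()  # no-op for children that have already exited
```

Running the workers as children of one process means Ctrl+C stops all three together, at the cost of interleaving their logs in one terminal.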

If you find this code useful, consider citing our work:

@inproceedings{visdial,
  title={{V}isual {D}ialog},
  author={Abhishek Das and Satwik Kottur and Khushi Gupta and Avi Singh
    and Deshraj Yadav and Jos\'e M.F. Moura and Devi Parikh and Dhruv Batra},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2017}
}

License: BSD